Table of Contents

- 1 Madonna di Canneto

- 2 Water spring Madonna di Canneto

- 2.1 Methodology

- 2.2 Rainfall

- 2.2.1 Soil moisture condition

- 2.2.2 TR-55: 'Urban Hydrology for Small Watersheds'

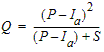

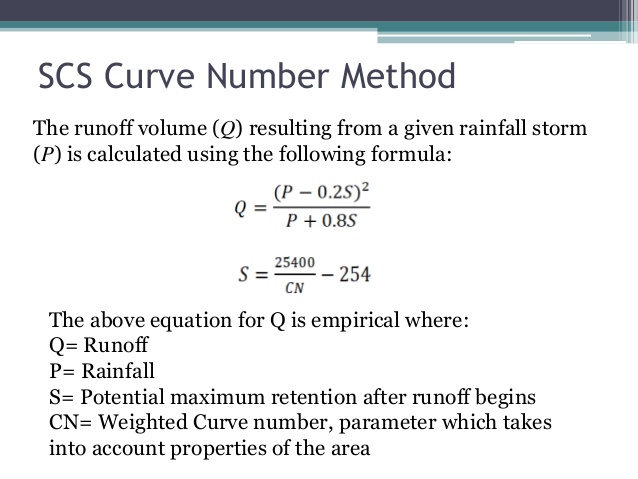

- 2.2.3 SCS CN- or curve number method

- 2.2.4 Runoff curve numbers for cultivated/other agricultural lands and soil types

- 2.2.5 Calculate runoff depth

- 2.2.6 The determination of CN values: by formula method, or by conversion table method

- 2.3 Temperature

- 2.4 Flow rate

- 2.5 Solar Radiation

- 2.6 Prediction of flow rate Madonna di Canneto source

Madonna di Canneto¶

Can you help preserve "blue gold" using data to predict water availability?

Acea Smart Water Analytics

https://www.kaggle.com/c/acea-water-prediction/data

Data Description¶

This competition uses nine different datasets, completely independent and not linked to each other. Each dataset can represent a different kind of waterbody. As each waterbody is different from the other, the related features as well are different from each other. So, if for instance we consider a water spring we notice that its features are different from the lake’s one. This is correct and reflects the behavior and characteristics of each waterbody. The Acea Group deals with four different type of waterbodies: water spring (for which three datasets are provided), lake (for which a dataset is provided), river (for which a dataset is provided) and aquifers (for which four datasets are provided).

Let’s see how these nine waterbodies differ from each other.

Aquifer¶

Auser¶

- This waterbody consists of two subsystems, called NORTH and SOUTH, where the former partly influences the behavior of the latter. Indeed, the north subsystem is a water table (or unconfined) aquifer while the south subsystem is an artesian (or confined) groundwater. The levels of the NORTH sector are represented by the values of the SAL, PAG, CoS and DIEC wells, while the levels of the SOUTH sector by the LT2 well.

Petrignano Aquifer¶

- The wells field of the alluvial plain between Ospedalicchio di Bastia Umbra and Petrignano is fed by three underground aquifers separated by low permeability septa. The aquifer can be considered a water table groundwater and is also fed by the Chiascio river. The groundwater levels are influenced by the following parameters: rainfall, depth to groundwater, temperatures and drainage volumes, level of the Chiascio river.

Doganella Aquifer¶

- The wells field Doganella is fed by two underground aquifers not fed by rivers or lakes but fed by meteoric infiltration. The upper aquifer is a water table with a thickness of about 30m. The lower aquifer is a semi-confined artesian aquifer with a thickness of 50m and is located inside lavas and tufa products. These aquifers are accessed through wells called Well 1, ..., Well 9. Approximately 80 % of the drainage volumes come from the artesian aquifer. The aquifer levels are influenced by the following parameters: rainfall, humidity, subsoil, temperatures and drainage volumes.

Luco Aquifer¶

- The Luco wells field is fed by an underground aquifer. This aquifer not fed by rivers or lakes but by meteoric infiltration at the extremes of the impermeable sedimentary layers. Such aquifer is accessed through wells called Well 1, Well 3 and Well 4 and is influenced by the following parameters: rainfall, depth to groundwater, temperature and drainage volumes.

Water spring¶

Amiata¶

- The Amiata waterbody is composed of a volcanic aquifer not fed by rivers or lakes but fed by meteoric infiltration. This aquifer is accessed through Ermicciolo, Arbure, Bugnano and Galleria Alta water springs. The levels and volumes of the four sources are influenced by the parameters: rainfall, depth to groundwater, hydrometry, temperatures and drainage volumes.

Madonna di Canneto¶

- The Madonna di Canneto spring is situated at an altitude of 1010m above sea level in the Canneto valley. It does not consist of an aquifer and its source is supplied by the water catchment area of the river Melfa.

- Settefrati is a commune with an altitude of 784 m and surface area of 50,6 km².

Lupa¶

- this water spring is located in the Rosciano Valley, on the left side of the Nera river. The waters emerge at an altitude of about 375 meters above sea level through a long draining tunnel that crosses, in its final section, lithotypes and essentially calcareous rocks. It provides drinking water to the city of Terni and the towns around it.

River¶

Arno¶

- Arno is the second largest river in peninsular Italy and the main waterway in Tuscany and it has a relatively torrential regime, due to the nature of the surrounding soils (marl and impermeable clays). Arno results to be the main source of water supply of the metropolitan area of Florence-Prato-Pistoia. The availability of water for this waterbody is evaluated by checking the hydrometric level of the river at the section of Nave di Rosano.

Lake¶

Bilancino¶

- Bilancino lake is an artificial lake located in the municipality of Barberino di Mugello (about 50 km from Florence). It is used to refill the Arno river during the summer months. Indeed, during the winter months, the lake is filled up and then, during the summer months, the water of the lake is poured into the Arno river.

Each waterbody has its own different features to be predicted. The table below shows the expected feature to forecast for each waterbody.

It is of the utmost importance to notice that some features like rainfall and temperature, which are present in each dataset, don’t go alongside the date. Indeed, both rainfall and temperature affect features like level, flow, depth to groundwater and hydrometry some time after it fell down. This means, for instance, that rain fell on 1st January doesn’t affect the mentioned features right the same day but some time later. As we don’t know how many days/weeks/months later rainfall affects these features, this is another aspect to keep into consideration when analyzing the dataset.

A short, tabular description of the waterbodies is available also downloading all datasets.

More information about the behavior of each kind of waterbody can be found at the following links:

- Aquifer https://en.wikipedia.org/wiki/Aquifer

- Water spring https://en.wikipedia.org/wiki/Spring_(hydrology)

- River https://en.wikipedia.org/wiki/River

- Lake https://en.wikipedia.org/wiki/Lake

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import pandas as pd

sns.set()

sns.set_context('notebook')

np.set_printoptions(threshold=20, precision=2, suppress=True)

pd.options.display.max_rows = 57

pd.options.display.max_columns = 38

pd.set_option('precision', 2)

from IPython.display import Image, display

%config InlineBackend.figure_format = 'retina';

from IPython.core.display import display, HTML, SVG

display(HTML("<style>.container {width:70% !important;}</style>"))

#sns.axes_style()

{'axes.facecolor': '#EAEAF2',

'axes.edgecolor': 'white',

'axes.grid': True,

'axes.axisbelow': True,

'axes.labelcolor': '.15',

'figure.facecolor': 'white',

'grid.color': 'white',

'grid.linestyle': '-',

'text.color': '.15',

'xtick.color': '.15',

'ytick.color': '.15',

'xtick.direction': 'out',

'ytick.direction': 'out',

'lines.solid_capstyle': <CapStyle.round: 'round'>,

'patch.edgecolor': 'w',

'patch.force_edgecolor': True,

'image.cmap': 'rocket',

'font.family': ['sans-serif'],

'font.sans-serif': ['Arial',

'DejaVu Sans',

'Liberation Sans',

'Bitstream Vera Sans',

'sans-serif'],

'xtick.bottom': False,

'xtick.top': False,

'ytick.left': False,

'ytick.right': False,

'axes.spines.left': True,

'axes.spines.bottom': True,

'axes.spines.right': True,

'axes.spines.top': True}

imgValle= Image( url="http://vanoproy.be/css/Valle_Canneto-Irto-Bellaveduta-RoccaAltiera.jpg", width=1300) # http://vanoproy.be/css filename

imgInfil= Image( url="http://vanoproy.be/python/figs/evaporation_infiltration_en.png", width=500)

imgValle

This map of VdC I found on a website reporting a daylong hike, which trail is imaged by the blue trace. The water spring itself surfaces on the right side of the valley, near the 1025 m notation.

South of the peak Monti della Meta there is a "plateau", a quasi horizontal surface with some local depressions, which can serve as a supplementory infiltration area for the water spring.

I can imaging there is some underground connection as dissolving calcium could have made conduits, while earthquakes and tectonics could have made cracks in the rocks.

Introduction: the study of springs¶

In recent years, long and frequent droughts have affected many countries in the world. These events require an ever more careful and rational management of water resources. Most of the globe’s unfrozen freshwater reserves are stored in aquifers. Groundwater is generally a renewable resource that shows good quality and resilience to fluctuations. Thus, if properly managed, groundwater could ensure long-term supply in order to meet increasing water demand.

For this purpose, it is of crucial importance to be able to predict the flow rates provided by springs. These represent the transitions from groundwater to surface water and reflect the dynamics of the aquifer, with the whole flow system behind. Moreover, spring influences water bodies into which they discharge. The importance of springs in groundwater research is highlighted in some significant contributions. In-depth studies on springs started only after the concept of sustainability was introduced in the management of water resources.

A spring hydrograph is the consequence of several processes governing the transformation of precipitation in the spring recharge area into the single output discharge at the spring. A water balance states that the change rate in water stored in the feeding aquifer is balanced by the rate at which water flows into and out of the aquifer. A quantitative water balance generally has to take the following terms into account: precipitation, infiltration, surface runoff, evapotranspiration, groundwater recharge, soil moisture deficit, spring discharge, lateral inflow to the aquifer, leakage between the aquifer and the underlying aquitard, well pumpage from the aquifer, and change of the storage in the aquifer.

In many cases, the evaluation of the terms of the water balance is very complicated. The complexity of the problem arises from many factors: hydrologic, hydrographic, and hydrogeological features, geologic and geomorphologic characteristics, land use, land cover, water withdrawals, and climatic conditions.

Even more complicated would be to estimate future spring discharges by using a model based on the balance equations. Therefore, simplified approaches are frequently pursued for practical purposes.

Many authors have addressed the problem of correlating the spring discharges to the rainfall through different approaches...

Note: you can skip the earthquake chapter, as these phenomenon are unpredictable. However, closeby earthquakes have an influence: they do distort the data.

Earthquakes =pd.read_csv(r"earthquake_15_20_EMSC.csv",header=0, sep=",", engine='python', encoding="UTF-8",parse_dates=True );

Earthquakes["Date"] = pd.to_datetime( Earthquakes.Date, format='%Y/%m/%d' , ) # euro dates utc=True

Earthquakes.set_index("Date", inplace=True)

Earthquakes["Dst"] = np.sqrt(np.abs((41.683364-Earthquakes.Latitude ) )+ np.abs((13.910494 -Earthquakes.Longitude)))#**2 **2

Earthquakes.nlargest(19, "Magnitude")

| Time UTC | Latitude | Longitude | Depth | Depth Type | Magnitude Type | Magnitude | Region Name | Last Update | Eqid | Dst | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Date | |||||||||||

| 2019-06-23 | 20:43:48 | 41.86 | 12.77 | 9 | f | ML | 3.7 | SOUTHERN ITALY | 2019-06-24 03:07 | 772989 | 1.15 |

| 2017-02-03 | 21:33:03 | 41.05 | 12.72 | 2 | ML | 3.7 | SOUTHERN ITALY | 2017-02-04 13:22 | 564948 | 1.35 | |

| 2017-02-03 | 23:08:05 | 41.01 | 12.69 | 2 | ML | 3.5 | SOUTHERN ITALY | 2017-02-04 13:21 | 564959 | 1.38 | |

| 2018-12-29 | 23:52:48 | 41.87 | 12.78 | 10 | f | ML | 2.9 | SOUTHERN ITALY | 2018-12-30 19:51 | 735792 | 1.15 |

| 2017-10-25 | 13:35:55 | 41.56 | 13.19 | 11 | f | ML | 2.9 | SOUTHERN ITALY | 2017-10-25 13:46 | 626212 | 0.92 |

| 2017-09-28 | 06:57:15 | 41.53 | 12.95 | 5 | ML | 2.8 | SOUTHERN ITALY | 2017-09-28 07:10 | 620966 | 1.06 | |

| 2017-02-04 | 02:10:42 | 41.07 | 12.68 | 11 | f | ML | 2.8 | SOUTHERN ITALY | 2017-02-04 02:29 | 565004 | 1.36 |

| 2017-02-04 | 00:20:14 | 41.08 | 12.80 | 10 | f | ML | 2.8 | SOUTHERN ITALY | 2017-02-04 00:34 | 564975 | 1.31 |

| 2016-12-27 | 21:56:24 | 41.97 | 13.43 | 10 | ML | 2.7 | SOUTHERN ITALY | 2016-12-27 22:28 | 555582 | 0.88 | |

| 2019-03-22 | 02:04:14 | 41.55 | 13.39 | 10 | ML | 2.6 | SOUTHERN ITALY | 2019-03-22 02:13 | 752723 | 0.81 | |

| 2019-01-01 | 07:33:55 | 41.69 | 13.37 | 11 | ML | 2.5 | SOUTHERN ITALY | 2019-01-01 07:48 | 736231 | 0.74 | |

| 2018-11-15 | 07:35:55 | 41.68 | 12.76 | 11 | ML | 2.5 | SOUTHERN ITALY | 2018-11-15 07:57 | 725618 | 1.07 | |

| 2018-03-22 | 02:30:37 | 41.65 | 13.19 | 9 | ML | 2.5 | SOUTHERN ITALY | 2018-03-22 02:48 | 655561 | 0.87 | |

| 2017-07-18 | 08:13:28 | 41.81 | 13.21 | 9 | ML | 2.5 | SOUTHERN ITALY | 2017-07-18 08:20 | 605876 | 0.91 | |

| 2017-01-14 | 07:33:01 | 41.72 | 12.68 | 10 | ML | 2.5 | SOUTHERN ITALY | 2017-01-14 08:09 | 559767 | 1.13 | |

| 2016-03-02 | 06:12:33 | 41.95 | 12.64 | 10 | ML | 2.5 | SOUTHERN ITALY | 2016-03-02 06:25 | 491805 | 1.24 | |

| 2015-07-07 | 17:47:27 | 41.93 | 12.79 | 11 | ML | 2.5 | SOUTHERN ITALY | 2015-07-07 17:56 | 449766 | 1.17 | |

| 2015-04-07 | 07:37:23 | 41.93 | 12.70 | 12 | ML | 2.5 | SOUTHERN ITALY | 2015-04-07 07:51 | 435480 | 1.21 | |

| 2019-11-15 | 00:59:07 | 41.87 | 12.77 | 10 | ML | 2.4 | SOUTHERN ITALY | 2019-11-15 01:08 | 805396 | 1.15 |

Earthquakes.head(9)

Distances =pd.read_csv(r"http://vanoproy.be/css/Distances.csv",header=0, sep=",", engine='python', encoding="UTF-8",parse_dates=True );

Distances["Date"] = pd.to_datetime( Distances.Date, format='%Y/%m/%d', ) # euro dates

Distances.set_index("Date", inplace=True)

Distances.head()

| Dist | |

|---|---|

| Date | |

| 2019-11-15 | 79.98 |

| 2019-11-15 | 96.82 |

| 2019-11-13 | 96.60 |

| 2019-11-09 | 96.39 |

| 2019-06-23 | 96.60 |

Distances.csv was made to calculate the correct distance of 2 points on a globe, before I found out the haversine def. in Datasist package. Therefore you find the 2 methods for distances here.

Earthquakes2= pd.merge(Earthquakes, Distances,left_index=True,right_index=True,how="left" )

Earthquakes2.head()

| Time UTC | Latitude | Longitude | Depth | Depth Type | Magnitude Type | Magnitude | Region Name | Last Update | Eqid | Dst | Dist | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Date | ||||||||||||

| 2014-11-26 | 21:55:22 | 41.86 | 12.74 | 10 | ML | 2.0 | SOUTHERN ITALY | 2014-11-29 09:25 | 410944 | 1.18 | NaN | |

| 2015-02-04 | 09:36:59 | 41.92 | 12.67 | 16 | ML | 2.4 | SOUTHERN ITALY | 2015-02-04 09:48 | 425738 | 1.26 | 106.14 | |

| 2015-02-28 | 19:21:04 | 41.75 | 13.23 | 9 | ML | 2.2 | SOUTHERN ITALY | 2015-02-28 19:36 | 429768 | 0.68 | NaN | |

| 2015-03-07 | 15:14:35 | 41.64 | 12.98 | 20 | ML | 2.0 | SOUTHERN ITALY | 2015-03-07 15:37 | 430751 | 0.93 | NaN | |

| 2015-03-20 | 05:06:40 | 41.87 | 13.27 | 8 | ML | 2.4 | SOUTHERN ITALY | 2015-03-20 05:16 | 432811 | 0.67 | 57.02 |

from datasist.feature_engineering import haversine_distance #afstanden

haversine_distance(41.683364, 13.910494, 41.87, 12.77)

0 96.82 dtype: float64

afstanden=[(41.87, 12.77), (41.86, 12.77), (41.67, 12.75), (41.86, 12.77), (41.55, 13.39), (41.69, 13.37), (41.87, 12.78), (41.69, 12.67), (41.68, 12.76), (41.65, 13.19), (41.53, 13.48), (41.56, 13.19), (41.53, 12.95), (41.47, 12.97), (41.82, 13.21), (41.81, 13.21), (41.98, 13.05), (41.72, 13.21), (41.99, 12.93), (41.07, 12.68), (41.08, 12.8), (41.01, 12.69), (41.05, 12.72), (41.72, 12.68), (41.73, 12.68), (41.97, 13.43), (41.95, 12.64), (41.52, 12.94), (41.93, 12.79), (41.65, 12.8), (41.93, 12.7), (41.93, 12.72), (41.87, 13.27), (41.92, 12.67)]

Earthquakes["Dist"]=pd.Series(afstanden)

EQpiv =Earthquakes.pivot("Eqid", "Depth","Magnitude")

fig, ax = plt.subplots(1,1, figsize=(19, 8.5))

sns.heatmap( data=EQpiv, annot=True, fmt=".1f",cmap="magma" ); #rows="Magnitude", columns="Dst",Earthquakes .corr()

Earthquakes with magnitude 2.5 - 4¶

Intensity = Dist² * original Magnitude

fig, ax = plt.subplots(1,1, figsize=(15, 5))

plt.xscale( "log")

sns.scatterplot (x=Earthquakes2["Dist"], y=Earthquakes2["Magnitude"] ,data=Earthquakes2,hue=Earthquakes2.Depth, label=Earthquakes2.Eqid,palette="magma");

Earthquakes2["ProxiMagnit"]=Earthquakes2["Magnitude"] /np.sqrt(Earthquakes2["Dist"])# Intensity = Dist²* original Magnitude

fig, ax = plt.subplots(1,1, figsize=(14, 5))

sns.scatterplot( data= Earthquakes2,x=Earthquakes2["ProxiMagnit"], y=Earthquakes2["Dist"],hue=Earthquakes2["Magnitude"],size= Earthquakes2.Depth);

Earthquakes2.nlargest(9, "ProxiMagnit")

| Time UTC | Latitude | Longitude | Depth | Depth Type | Magnitude Type | Magnitude | Region Name | Last Update | Eqid | Dst | Dist | ProxiMagnit | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Date | |||||||||||||

| 2019-03-22 | 02:04:14 | 41.55 | 13.39 | 10 | ML | 2.6 | SOUTHERN ITALY | 2019-03-22 02:13 | 752723 | 0.54 | 45.74 | 0.38 | |

| 2016-12-27 | 21:56:24 | 41.97 | 13.43 | 10 | ML | 2.7 | SOUTHERN ITALY | 2016-12-27 22:28 | 555582 | 0.56 | 51.00 | 0.38 | |

| 2019-06-23 | 20:43:48 | 41.86 | 12.77 | 9 | f | ML | 3.7 | SOUTHERN ITALY | 2019-06-24 03:07 | 772989 | 1.15 | 96.60 | 0.38 |

| 2019-01-01 | 07:33:55 | 41.69 | 13.37 | 11 | ML | 2.5 | SOUTHERN ITALY | 2019-01-01 07:48 | 736231 | 0.54 | 44.89 | 0.37 | |

| 2017-10-25 | 13:35:55 | 41.56 | 13.19 | 11 | f | ML | 2.9 | SOUTHERN ITALY | 2017-10-25 13:46 | 626212 | 0.73 | 61.44 | 0.37 |

| 2017-12-23 | 12:17:20 | 41.53 | 13.48 | 10 | ML | 2.3 | SOUTHERN ITALY | 2017-12-23 12:25 | 637193 | 0.46 | 39.65 | 0.37 | |

| 2017-02-03 | 21:33:03 | 41.05 | 12.72 | 2 | ML | 3.7 | SOUTHERN ITALY | 2017-02-04 13:22 | 564948 | 1.35 | 121.78 | 0.34 | |

| 2017-02-03 | 21:33:03 | 41.05 | 12.72 | 2 | ML | 3.7 | SOUTHERN ITALY | 2017-02-04 13:22 | 564948 | 1.35 | 126.44 | 0.33 | |

| 2017-07-18 | 08:13:28 | 41.81 | 13.21 | 9 | ML | 2.5 | SOUTHERN ITALY | 2017-07-18 08:20 | 605876 | 0.71 | 59.80 | 0.32 |

import csv

Water_Spring_Madonna_di_Canneto =pd.read_csv(r"Water_Spring_Madonna_di_Canneto.csv",sep=",",encoding="UTF-8",decimal="." ,header=0,error_bad_lines=False,warn_bad_lines=False,quoting=csv.QUOTE_NONE)

Water_Spring_Madonna_di_Canneto["Date"] = pd.to_datetime( Water_Spring_Madonna_di_Canneto.Date, format='%d/%m/%Y' ) # euro dates

Water_Spring_Madonna_di_Canneto.set_index("Date", inplace=True) #Water_Spring_Madonna_di_Canneto.head()

dfC= Water_Spring_Madonna_di_Canneto["2015":"2020"] # > quake plot

import datetime

sns.set_style("whitegrid", {'grid.linestyle': '--'})

fig, ax = plt.subplots(1,1, figsize=(21, 8), sharex=True) #, Earthquakes2 2019 - 2020

Earthquakes2= Earthquakes2[Earthquakes2.Magnitude >= 2.25]

vert= Earthquakes2.index.date ##ax.vline( datetime.date(2015, 2, 28), [0] ,1) #list( )

for v in vert:

plt.axvline( v, 0 ,1 , color="darkorange", lw=0.6) # datetime.date(2015, 2, 28)

sns.scatterplot(x=dfC.index, y= dfC.Flow_Rate_Madonna_di_Canneto, data=dfC, s=4)

sns.scatterplot(x="Date", y= Earthquakes2.Magnitude*100, data=Earthquakes2, hue="Dist", label="Magnitude$ML*100$") #kind="line" ax=ax

plt.xticks(rotation=90); #axe.setxaxis(rotation=90)

plt.xlim(pd.to_datetime( "2015-01-01"),pd.to_datetime("2019-12-29" ) ); plt.ylim(180,380);

The quake of 2018-03-22 02:30:37 with Magnitude 2.5 might have caused a short period of outflow. (depth:9 and eqid:655561 distance:0.71)

vert

Earthquakes magnitude > 5¶

Some more energetic quakes, but less distorting because they originated further away from the spring.

#!pip install install openpyxl

Earthquake15 =pd.read_excel(r"http://vanoproy.be/css/Water_Spring_Madonna_di_Canneto.xlsx",header=0, parse_dates=True, sheet_name='seismic' );

Earthquake15["Date_event"] = pd.to_datetime( Earthquake15.Date_event, format='%B %d %Y' ) # euro dates

Earthquake15.set_index("Date_event", inplace=True)

Earthquake15["Dst"] = np.sqrt(np.abs((41.683364-Earthquake15.Latitude )**2 )+ np.abs((13.910494-Earthquake15.Longitude)**2 ))

Earthquake15.head(21)

| Date_time_UTC | Mw | Epicenter_location | Depth_km | Latitude | Longitude | Dst | |

|---|---|---|---|---|---|---|---|

| Date_event | |||||||

| 2016-08-24 | August 24 2016 01:36:32 | 6.0 | Accumoli | 8.1 | 42.70 | 13.23 | 1.22 |

| 2016-08-24 | August 24 2016 02:33:28 | 5.3 | Norcia | 8.0 | 42.79 | 13.15 | 1.34 |

| 2016-10-26 | October 26 2016 17:10:36 | 5.4 | Castelsantangelo sul Nera | 8.7 | 42.88 | 13.13 | 1.43 |

| 2016-10-26 | October 26 2016 19:18:05 | 5.9 | Castelsantangelo sul Nera | 7.5 | 42.91 | 13.13 | 1.45 |

| 2016-10-30 | October 30 2016 06:40:17 | 6.5 | Norcia | 9.2 | 42.83 | 13.11 | 1.40 |

| 2017-01-18 | January 18 2017 09:25:40 | 5.1 | Capitignano | 10.0 | 42.55 | 13.28 | 1.07 |

| 2017-01-18 | January 18 2017 10:14:09 | 5.5 | Capitignano | 9.6 | 42.53 | 13.28 | 1.05 |

| 2017-01-18 | January 18 2017 10:25:23 | 5.4 | Capitignano | 9.4 | 42.50 | 13.28 | 1.04 |

| 2017-01-18 | January 18 2017 13:33:36 | 5.0 | Barete | 9.5 | 42.47 | 13.27 | 1.01 |

Earthquake15.info()

<class 'pandas.core.frame.DataFrame'> DatetimeIndex: 9 entries, 2016-08-24 to 2017-01-18 Data columns (total 7 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 Date_time_UTC 9 non-null object 1 Mw 9 non-null float64 2 Epicenter_location 9 non-null object 3 Depth_km 9 non-null float64 4 Latitude 9 non-null float64 5 Longitude 9 non-null float64 6 Dst 9 non-null float64 dtypes: float64(5), object(2) memory usage: 576.0+ bytes

Lati= Earthquake15["Latitude"].values ; Longi=Earthquake15["Longitude"].values #.values .values# .items()

print(Lati)

hvs1=[]; hvs2=[]

for La in Lati:#

for Lon in Longi:

print(La, Lon, type(hvs1)) #Lo

Earthquake15hvs = haversine_distance(41.683364, 13.910494 ,float(La), 50 ) # havers gives 2 values

print(Earthquake15hvs )

#hvs1= hvs1.append(Earthquake15hvs[0]);# hvs2= hvs2.append(Earthquake15hvs[1])

# Earthquake15["hvs"]

Earthquake15["hvs"].values

Earthquake15hvs

0 2957.21 dtype: float64

Merge the Earthquakes data with Water_Spring_Madonna_di_Canneto...

dfCE= pd.merge(Water_Spring_Madonna_di_Canneto, Earthquakes2.Dist,left_index=True,right_index=True,how="left" ) dfCE

dfCE.Dist= dfCE.Dist.replace( np.nan,10000000)

import datetime

start = datetime.datetime(2004, 1, 1); end = datetime.datetime(2019, 1, 1)

pd.options.display.max_rows = 57

%precision 4

'%.4f'

Groundwater discharge¶

From Wikipedia, the free encyclopedia https://en.wikipedia.org/wiki/Groundwater_discharge

Groundwater discharge is the volumetric flow rate of groundwater through an aquifer.

Total groundwater discharge, as reported through a specified area, is similarly expressed as:

${\displaystyle Q={\frac{dh}{dl}}KA}$

where

Q is the total groundwater discharge ( m3/s),

K is the hydraulic conductivity of the aquifer (L/s),

dh/dl is the hydraulic gradient ([L·L−1]; unitless), and

A is the area which the groundwater is flowing through ( m2)

For example, this can be used to determine the flow rate of water flowing along a plane with known geometry. The discharge potential ${\textstyle \Phi }$

The discharge potential is a potential in groundwater mechanics which links the physical properties, hydraulic head, with a mathematical formulation for the energy as a function of position. The discharge potential,${\textstyle \Phi }$ L3·T−1, is defined in such way that its gradient equals the discharge vector.

${\displaystyle Q_{x}=-{\frac {\partial \Phi }{\partial x}}}$ & ${\displaystyle Q_{y}=-{\frac {\partial \Phi }{\partial y}}}$

Thus the hydraulic head may be calculated in terms of the discharge potential, for confined flow as

${\displaystyle \Phi =K. H\phi }$

and for unconfined shallow flow as

where

$H$ is the thickness of the aquifer [L],

${ \phi }$ is the hydraulic head [L], and

${\textstyle C}$ is an arbitrary constant [L3·T−1] given by the boundary conditions.

As mentioned the discharge potential may also be written in terms of position. The discharge potential is a function of the Laplace's equation

which solution is a linear differential equation. Because the solution is a linear differential equation for which superposition principle holds, it may be combined with other solutions for the discharge potential, e.g. uniform flow, multiple wells, analytical elements (analytic element method). See also Groundwater flow equation

Water spring Madonna di Canneto¶

The Madonna di Canneto spring is situated at an altitude of 1010m above sea level in the Canneto valley.

It does not consist of an aquifer and its source is supplied by the water catchment area of the river Melfa.

The surface area of Settefrati itself is 50,6 km².

Some interesting info about the valley¶

Valle di Canneto has been named after the bamboo plants which once grew there. The valley consists of several smaller streams and several springs, of which only few bear a name. Nonetheless, a spring called "Madonna" cannot be found on maps, perhaps cos it runs dry so regularly. Anyhow, the sanctuary bears this name, and attracts pelgrims since centuries.

Location

References¶

- SPRINGS (CLASSIFICATION, FUNCTION,CAPTURING), Soulios G., Dep. of Geology, Aristotle University of Thessaloniki, Bulletin of the Geological Society of Greece, Vol. 43, (2010), DOI: 10.12681/bgsg.11174

- Urban hydrology for small watersheds. Tech. Release 55., U.S. Department of Agriculture, Soil Conservation Service. 1986.

- RUNOFF CURVE NUMBER METHOD: EXAMINATION OF THE INITIAL ABSTRACTION RATIO, Richard H. Hawkins, Professor, University of Arizona, Tucson, (USDA, Natural Resources Conservation Service, 2002)

- Chapter 20 - Watershed Yield, Part 630 Hydrology, National Engineering Handbook, 2009, USDA

- Groundwater - Hydrology of springs, Chapter 4 - Spring discharge hydrograph, Neven Kresic, Amec Foster Wheeler, Bonacci o., Univ Split Croatia, (2010)

- Karst Groundwater Availability and Sustainable Development, F. Fiorillo, V. Ristić Vakanjac, I. Jemcov, S. P Milanovic, University of Belgrade, February 2015), DOI: 10.1007/978-3-319-12850-4_15

- Predicted Maps for Soil Organic Matter Evaluation: The Case of Abruzzo Region (Italy). Piccini, C.; Francaviglia, R.; Marchetti, A., Land 2020, 9, 349. https://doi.org/10.3390/land9100349

- The "APEX" Agricultural Policy Environmental eXtender Model theoretical documentation, Version 0604, BREC Report # 2008-17.

import csv

Water_Spring_Madonna_di_Canneto =pd.read_csv(r"Water_Spring_Madonna_di_Canneto.csv",sep=",",encoding="UTF-8",decimal="." ,header=0,error_bad_lines=False,warn_bad_lines=False,quoting=csv.QUOTE_NONE)

Water_Spring_Madonna_di_Canneto.head() # engine='python',

| Date | Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | |

|---|---|---|---|---|

| 0 | 01/01/2012 | 0.0 | 5.25 | NaN |

| 1 | 02/01/2012 | 5.6 | 6.65 | NaN |

| 2 | 03/01/2012 | 10.0 | 8.85 | NaN |

| 3 | 04/01/2012 | 0.0 | 6.75 | NaN |

| 4 | 05/01/2012 | 1.0 | 5.55 | NaN |

Water_Spring_Madonna_di_Canneto["Date"] = pd.to_datetime( Water_Spring_Madonna_di_Canneto.Date, format='%d/%m/%Y' ) # euro dates, utc=True

Water_Spring_Madonna_di_Canneto.set_index("Date", inplace=True)

Water_Spring_Madonna_di_Canneto["doy"] = Water_Spring_Madonna_di_Canneto.index.dayofyear

Water_Spring_Madonna_di_Canneto["Month"] = Water_Spring_Madonna_di_Canneto.index.month

Water_Spring_Madonna_di_Canneto.head(6)

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | |

|---|---|---|---|---|---|

| Date | |||||

| 2012-01-01 | 0.0 | 5.25 | NaN | 1 | 1 |

| 2012-01-02 | 5.6 | 6.65 | NaN | 2 | 1 |

| 2012-01-03 | 10.0 | 8.85 | NaN | 3 | 1 |

| 2012-01-04 | 0.0 | 6.75 | NaN | 4 | 1 |

| 2012-01-05 | 1.0 | 5.55 | NaN | 5 | 1 |

| 2012-01-06 | 5.2 | 5.05 | NaN | 6 | 1 |

Water_Spring_Madonna_di_Canneto.tail(6)

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | date | date_check | |

|---|---|---|---|---|---|---|---|

| Date | |||||||

| 2020-06-25 | NaN | NaN | 224.01 | 177 | 6 | 2020-06-25 | 1 days |

| 2020-06-26 | NaN | NaN | 223.92 | 178 | 6 | 2020-06-26 | 1 days |

| 2020-06-27 | NaN | NaN | 223.86 | 179 | 6 | 2020-06-27 | 1 days |

| 2020-06-28 | NaN | NaN | 223.76 | 180 | 6 | 2020-06-28 | 1 days |

| 2020-06-29 | NaN | NaN | 223.77 | 181 | 6 | 2020-06-29 | 1 days |

| 2020-06-30 | NaN | NaN | 223.75 | 182 | 6 | 2020-06-30 | 1 days |

Water_Spring_Madonna_di_Canneto.info()

<class 'pandas.core.frame.DataFrame'> DatetimeIndex: 3104 entries, 2012-01-01 to 2020-06-30 Data columns (total 5 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 Rainfall_Settefrati 2557 non-null float64 1 Temperature_Settefrati 2557 non-null float64 2 Flow_Rate_Madonna_di_Canneto 1387 non-null float64 3 doy 3104 non-null int64 4 Month 3104 non-null int64 dtypes: float64(3), int64(2) memory usage: 145.5 KB

Water_Spring_Madonna_di_Canneto_original= Water_Spring_Madonna_di_Canneto.copy()

imgCapo=Image( filename="capodacqua-in-val-canneto.jpg"); imgCapo

Methodology¶

The Methodology to use depends on the properties of the water source and local hydrogeology. There is no clear cut path. An example of a Methodology is presented in the next illustration. It provides a view how the topics are related to each other, and how unknown info and data matters.

Rainfall¶

Water_Spring_Madonna_di_Canneto.Rainfall_Settefrati.plot(figsize=(20, 4)); # ["2019"]

This series stops end 2018, and there's nada from 2019 on... however there is flow rate data provided after this moment up to 30/6/2020, which I don't want to ignore nor leave untrained.

I could use the averages distilled from the other years. It is a balancing act between the scarcity of data and the weight of the margin of error when inserting averages.

average36= Water_Spring_Madonna_di_Canneto.loc["2013-01-01":"2015-12-31"] # 00:00:00 00:00:00

average5= Water_Spring_Madonna_di_Canneto.loc["2017-01-01":"2019-12-31"] #

average3650=average36.append( average5)

average365= average3650.groupby('{:%m-%d}'.format).mean()

averageTemp= Water_Spring_Madonna_di_Canneto.groupby('{:%m-%d}'.format).mean()

averageTemp.doy= averageTemp.doy.astype("int32") # correction for doy 361.25

averageTemp2020 = averageTemp # copy of df for 2020

averageTemp#.index #.Rainfall_Settefrati

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | |

|---|---|---|---|---|---|

| 01-01 | 1.49 | 4.54 | 268.39 | 1 | 1 |

| 01-02 | 3.17 | 5.24 | 268.14 | 2 | 1 |

| 01-03 | 6.59 | 6.67 | 268.43 | 3 | 1 |

| 01-04 | 3.47 | 6.89 | 270.83 | 4 | 1 |

| 01-05 | 6.29 | 5.87 | 270.67 | 5 | 1 |

| ... | ... | ... | ... | ... | ... |

| 12-27 | 15.26 | 5.96 | 269.19 | 361 | 12 |

| 12-28 | 3.29 | 6.19 | 268.90 | 362 | 12 |

| 12-29 | 1.51 | 4.71 | 269.00 | 363 | 12 |

| 12-30 | 1.49 | 3.54 | 269.14 | 364 | 12 |

| 12-31 | 0.11 | 3.94 | 268.76 | 365 | 12 |

366 rows × 5 columns

The series contains a leap year 2016: so we have to drop leap day "02-29" for 2019, but we leave it in for the averages of the year 2020.

averageTemp2019

averageTemp2019= averageTemp.drop(averageTemp.index[59]) # leap years: drop leap day "02-29"

dr2019 =pd.date_range(start='1/1/2019', end='31/12/2019'); dr2019

DatetimeIndex(['2019-01-01', '2019-01-02', '2019-01-03', '2019-01-04',

'2019-01-05', '2019-01-06', '2019-01-07', '2019-01-08',

'2019-01-09', '2019-01-10',

...

'2019-12-22', '2019-12-23', '2019-12-24', '2019-12-25',

'2019-12-26', '2019-12-27', '2019-12-28', '2019-12-29',

'2019-12-30', '2019-12-31'],

dtype='datetime64[ns]', length=365, freq='D')

averageTemp2019.index= dr2019

averageTemp2019

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | |

|---|---|---|---|---|---|

| 2019-01-01 | 1.49 | 4.54 | 268.39 | 1 | 1 |

| 2019-01-02 | 3.17 | 5.24 | 268.14 | 2 | 1 |

| 2019-01-03 | 6.59 | 6.67 | 268.43 | 3 | 1 |

| 2019-01-04 | 3.47 | 6.89 | 270.83 | 4 | 1 |

| 2019-01-05 | 6.29 | 5.87 | 270.67 | 5 | 1 |

| ... | ... | ... | ... | ... | ... |

| 2019-12-27 | 15.26 | 5.96 | 269.19 | 361 | 12 |

| 2019-12-28 | 3.29 | 6.19 | 268.90 | 362 | 12 |

| 2019-12-29 | 1.51 | 4.71 | 269.00 | 363 | 12 |

| 2019-12-30 | 1.49 | 3.54 | 269.14 | 364 | 12 |

| 2019-12-31 | 0.11 | 3.94 | 268.76 | 365 | 12 |

365 rows × 5 columns

dr2020 =pd.date_range(start='1/1/2020', end='31/12/2020'); dr2020

DatetimeIndex(['2020-01-01', '2020-01-02', '2020-01-03', '2020-01-04',

'2020-01-05', '2020-01-06', '2020-01-07', '2020-01-08',

'2020-01-09', '2020-01-10',

...

'2020-12-22', '2020-12-23', '2020-12-24', '2020-12-25',

'2020-12-26', '2020-12-27', '2020-12-28', '2020-12-29',

'2020-12-30', '2020-12-31'],

dtype='datetime64[ns]', length=366, freq='D')

averageTemp2020.index= dr2020

averageTemp2020

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | |

|---|---|---|---|---|---|

| 2020-01-01 | 1.49 | 4.54 | 268.39 | 1 | 1 |

| 2020-01-02 | 3.17 | 5.24 | 268.14 | 2 | 1 |

| 2020-01-03 | 6.59 | 6.67 | 268.43 | 3 | 1 |

| 2020-01-04 | 3.47 | 6.89 | 270.83 | 4 | 1 |

| 2020-01-05 | 6.29 | 5.87 | 270.67 | 5 | 1 |

| ... | ... | ... | ... | ... | ... |

| 2020-12-27 | 15.26 | 5.96 | 269.19 | 361 | 12 |

| 2020-12-28 | 3.29 | 6.19 | 268.90 | 362 | 12 |

| 2020-12-29 | 1.51 | 4.71 | 269.00 | 363 | 12 |

| 2020-12-30 | 1.49 | 3.54 | 269.14 | 364 | 12 |

| 2020-12-31 | 0.11 | 3.94 | 268.76 | 365 | 12 |

366 rows × 5 columns

averageTemp2019= pd.DatetimeIndex(averageTemp2019, yearfirst= True) #['Date'] .index format="%Y-%m-%d"

Water_Spring_Madonna_di_Canneto_original["2019-01-01":"2019-12-31"].index

averageTemp.Rainfall_Settefrati.index = Water_Spring_Madonna_di_Canneto_original["2019-01-01":"2019-12-31"].index Water_Spring_Madonna_di_Canneto["2019-01-01":"2019-12-31"].loc["Rainfall_Settefrati"] = averageTemp["01-01":"12-31"].Rainfall_Settefrati

averageTemp.Rainfall_Settefrati["2019-01-01":"2020-12-31"].plot(figsize=(14, 4)); #

#Water_Spring_Madonna_di_Canneto=Water_Spring_Madonna_di_Canneto.drop("Rainfall_5")

Water_Spring_Madonna_di_Canneto.tail()

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | |

|---|---|---|---|---|---|

| Date | |||||

| 2020-06-26 | NaN | NaN | 223.92 | 178 | 6 |

| 2020-06-27 | NaN | NaN | 223.86 | 179 | 6 |

| 2020-06-28 | NaN | NaN | 223.76 | 180 | 6 |

| 2020-06-29 | NaN | NaN | 223.77 | 181 | 6 |

| 2020-06-30 | NaN | NaN | 223.75 | 182 | 6 |

Finally updating the original dataset with the distilled averages of 'dayofyear' rainfall

Water_Spring_Madonna_di_Canneto.update( averageTemp2019["2019-01-01":"2019-12-31"].Rainfall_Settefrati)

and also we update the averages of period 2020

Water_Spring_Madonna_di_Canneto.update( averageTemp2020["2020-01-01":"2020-12-31"].Rainfall_Settefrati)

averageTemp2020["2020-01-01":"2020-12-31"]

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | |

|---|---|---|---|---|---|

| 2020-01-01 | 1.49 | 4.54 | 268.39 | 1 | 1 |

| 2020-01-02 | 3.17 | 5.24 | 268.14 | 2 | 1 |

| 2020-01-03 | 6.59 | 6.67 | 268.43 | 3 | 1 |

| 2020-01-04 | 3.47 | 6.89 | 270.83 | 4 | 1 |

| 2020-01-05 | 6.29 | 5.87 | 270.67 | 5 | 1 |

| ... | ... | ... | ... | ... | ... |

| 2020-12-27 | 15.26 | 5.96 | 269.19 | 361 | 12 |

| 2020-12-28 | 3.29 | 6.19 | 268.90 | 362 | 12 |

| 2020-12-29 | 1.51 | 4.71 | 269.00 | 363 | 12 |

| 2020-12-30 | 1.49 | 3.54 | 269.14 | 364 | 12 |

| 2020-12-31 | 0.11 | 3.94 | 268.76 | 365 | 12 |

366 rows × 5 columns

Soil moisture condition¶

in studies this classification is a reliable indicator, but those were based on real measurements, not estimates as here is the case.

Determination of the soil moisture condition based on 5-day antecedent rainfall totals. The AMC is estimated according to the Soil Conservation Service definitions (SCS, 1986). On top of that the state of the vegetation is a major factor in most events, and included in the strategy here.

Antecedent Soil moisture conditions are based on rainfall amounts and the state of the vegetation (dormant season or not).

AMCclass=pd.read_csv(r"http://vanoproy.be/css/AMC.csv",header=1, sep=",", engine='python', encoding="UTF-8",parse_dates=True );

AMCclass.head()

| AMC class | moisture | Dormant season | Growing season | |

|---|---|---|---|---|

| 0 | AMC I | dry | P<12.7 | P<35.6 |

| 1 | AMC II | medium | 12.7<P<27.9 | 35.6<P<53.3 |

| 2 | AMC III | wet | P>27.9 | P>53.3 |

A dormant season for vegetation is the condition when the month is 11,12,1,2,3. We included the first 20 days of April because the higher elevation means lower mean temperatures, thus a longer winter dormancy can be expected.

Water_Spring_Madonna_di_Canneto["Week"]= Water_Spring_Madonna_di_Canneto.index.isocalendar().week # ["Date"]

Water_Spring_Madonna_di_Canneto["Dormant"]= np.where( (Water_Spring_Madonna_di_Canneto.index.month.isin([11,12,1,2,3])) | (Water_Spring_Madonna_di_Canneto.index.isocalendar().week.isin([15,14,16]) ) ,1,0 ) # add ["1 Apr":"15 Apr"]

Water_Spring_Madonna_di_Canneto["Dormant"].describe()

count 3104.00 mean 0.48 std 0.50 min 0.00 25% 0.00 50% 0.00 75% 1.00 max 1.00 Name: Dormant, dtype: float64

Water_Spring_Madonna_di_Canneto["2014-04-15":"2014-04-21"]#.loc["Dormant"]

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | Week | Dormant | |

|---|---|---|---|---|---|---|---|

| Date | |||||||

| 2014-04-15 | 25.2 | 8.90 | NaN | 105 | 4 | 16 | 1 |

| 2014-04-16 | 3.4 | 6.25 | NaN | 106 | 4 | 16 | 1 |

| 2014-04-17 | 2.6 | 6.05 | NaN | 107 | 4 | 16 | 1 |

| 2014-04-18 | 0.0 | 8.10 | NaN | 108 | 4 | 16 | 1 |

| 2014-04-19 | 13.0 | 7.85 | NaN | 109 | 4 | 16 | 1 |

| 2014-04-20 | 12.6 | 13.05 | NaN | 110 | 4 | 16 | 1 |

| 2014-04-21 | 0.0 | 14.05 | NaN | 111 | 4 | 17 | 0 |

Water_Spring_Madonna_di_Canneto["2015-04-14":"2015-04-21"]#.loc["Dormant"]

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | Week | Dormant | |

|---|---|---|---|---|---|---|---|

| Date | |||||||

| 2015-04-14 | 0.4 | 14.60 | 274.95 | 104 | 4 | 16 | 1 |

| 2015-04-15 | 0.0 | 15.25 | 273.63 | 105 | 4 | 16 | 1 |

| 2015-04-16 | 0.0 | 14.90 | 273.63 | 106 | 4 | 16 | 1 |

| 2015-04-17 | 0.2 | 14.35 | 273.81 | 107 | 4 | 16 | 1 |

| 2015-04-18 | 0.0 | 12.80 | 273.90 | 108 | 4 | 16 | 1 |

| 2015-04-19 | 1.4 | 13.25 | 274.12 | 109 | 4 | 16 | 1 |

| 2015-04-20 | 0.2 | 11.80 | 274.14 | 110 | 4 | 17 | 0 |

| 2015-04-21 | 0.0 | 12.50 | 273.99 | 111 | 4 | 17 | 0 |

Water_Spring_Madonna_di_Canneto[(Water_Spring_Madonna_di_Canneto["Rainfall_Settefrati"].rolling(5).sum()>(27.9)) & (Water_Spring_Madonna_di_Canneto["Dormant"]==1)]

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | Rainfall_5 | Year | Rainfall_Set | Flow_Rate_Mad | TmnStd | Flow_m3_7 | Flow_m3_3 | Flow_m3_22 | Flow_7 | Flow_3 | Flow_22 | Flow_m3_90 | Rainfall_m3_90 | Flow_90 | Rainfall_90 | TmnStd_4 | T_7 | Rainfall_3 | Rainfall_4 | Rainfall_50 | Rainfall_7 | Rainfall_22 | Rainfall_30 | RainCutOff_6 | RainCutOff_5 | RainOvers_6 | CutOff | RainCutOff | RainCutOffmm | RCO_F_cumdif | R_F_cumdif | Dormant | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Date | |||||||||||||||||||||||||||||||||||||

| 2015-03-17 | 7.60 | 7.55 | 290.69 | 76 | 3 | 29.4 | 2015 | 51300.0 | 25115.51 | 1.42 | 171405.03 | 75227.64 | 532398.39 | 1983.85 | 870.69 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | 238.2 | 0.63 | 7.27 | 23.6 | 29.4 | 158.0 | 29.4 | 84.3 | 105.8 | 29.4 | 29.4 | 0.0 | 0 | 7.6 | 2.28e+05 | 7.60e+05 | 76090.98 | 1 |

| 2015-03-18 | 0.00 | 8.40 | 285.42 | 77 | 3 | 29.4 | 2015 | 0.0 | 24660.09 | 2.27 | 171405.03 | 74838.76 | 532398.39 | 1983.85 | 866.19 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | 238.2 | 0.96 | 7.27 | 16.2 | 23.6 | 158.0 | 29.4 | 84.3 | 105.8 | 29.4 | 29.4 | 0.0 | 0 | 0.0 | 0.00e+00 | 7.35e+05 | 51430.89 | 1 |

| 2015-03-25 | 37.30 | 10.25 | 282.40 | 84 | 3 | 38.1 | 2015 | 251775.0 | 24399.44 | 4.12 | 171516.07 | 73540.03 | 532398.39 | 1985.14 | 851.16 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | 238.2 | 3.39 | 9.21 | 37.3 | 38.1 | 158.0 | 38.1 | 84.3 | 105.8 | 38.1 | 38.1 | 0.0 | 0 | 37.3 | 1.12e+06 | 1.71e+06 | 137089.82 | 1 |

| 2015-03-26 | 6.00 | 13.35 | 275.94 | 85 | 3 | 44.1 | 2015 | 40500.0 | 23841.00 | 7.22 | 170971.15 | 72792.63 | 532398.39 | 1978.83 | 842.51 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | 238.2 | 5.09 | 9.73 | 43.3 | 43.3 | 158.0 | 44.1 | 84.3 | 105.8 | 44.1 | 44.1 | 0.0 | 0 | 6.0 | 1.80e+05 | 1.86e+06 | 153748.82 | 1 |

| 2015-03-27 | 10.80 | 12.00 | 273.92 | 86 | 3 | 54.1 | 2015 | 72900.0 | 23666.67 | 5.87 | 170144.30 | 71907.11 | 532398.39 | 1969.26 | 832.26 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | 238.2 | 5.81 | 10.20 | 54.1 | 54.1 | 158.0 | 54.9 | 84.3 | 105.8 | 54.9 | 54.1 | 0.8 | 0 | 10.8 | 3.24e+05 | 2.16e+06 | 202982.15 | 1 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 2020-03-08 | 1.76 | NaN | 224.81 | 68 | 3 | 0.0 | 2020 | NaN | 19423.98 | NaN | 135857.20 | 58267.82 | 420393.06 | 1572.42 | 674.40 | 4865.66 | 1.71e+06 | NaN | 19829.03 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | NaN | NaN | NaN | NaN | 1 |

| 2020-03-09 | 1.34 | NaN | 224.79 | 69 | 3 | 0.0 | 2020 | NaN | 19422.08 | NaN | 135906.66 | 58268.74 | 420470.02 | 1572.99 | 674.41 | 4866.55 | 1.71e+06 | NaN | 19833.75 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | NaN | NaN | NaN | NaN | 1 |

| 2020-03-10 | 3.29 | NaN | 224.67 | 70 | 3 | 0.0 | 2020 | NaN | 19411.10 | NaN | 135923.03 | 58257.16 | 420542.18 | 1573.18 | 674.27 | 4867.39 | 1.71e+06 | NaN | 19834.27 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | NaN | NaN | NaN | NaN | 1 |

| 2020-04-05 | 9.64 | NaN | 224.61 | 96 | 4 | 0.0 | 2020 | NaN | 19406.60 | NaN | 135798.90 | 58198.83 | 426837.27 | 1571.75 | 673.60 | 4940.25 | 1.72e+06 | NaN | 19865.32 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | NaN | NaN | NaN | NaN | 1 |

| 2020-04-06 | 1.83 | NaN | 224.50 | 97 | 4 | 0.0 | 2020 | NaN | 19396.86 | NaN | 135798.71 | 58195.41 | 426827.48 | 1571.74 | 673.56 | 4940.13 | 1.72e+06 | NaN | 19866.59 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | NaN | NaN | NaN | NaN | 1 |

173 rows × 37 columns

Water_Spring_Madonna_di_Canneto= Water_Spring_Madonna_di_Canneto.dropna()

pd.set_option('mode.chained_assignment',None)

#Water_Spring_Madonna_di_Canneto= dfW

dfW= Water_Spring_Madonna_di_Canneto

Water_Spring_Madonna_di_Canneto["Rainfall_5"] =Water_Spring_Madonna_di_Canneto["Rainfall_Settefrati"].rolling(5).sum().fillna(0, )#method="bfill"

#Rainfall_5

#dfW=dfW.drop("Rainfall_5")

#dfW.tail()

Water_Spring_Madonna_di_Canneto.tail()

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | Week | Dormant | Rainfall_5 | |

|---|---|---|---|---|---|---|---|---|

| Date | ||||||||

| 2020-06-26 | 1.43 | NaN | 223.92 | 178 | 6 | 26 | 0 | 6.96 |

| 2020-06-27 | 1.50 | NaN | 223.86 | 179 | 6 | 26 | 0 | 6.26 |

| 2020-06-28 | 0.26 | NaN | 223.76 | 180 | 6 | 26 | 0 | 6.06 |

| 2020-06-29 | 0.09 | NaN | 223.77 | 181 | 6 | 27 | 0 | 5.63 |

| 2020-06-30 | 1.84 | NaN | 223.75 | 182 | 6 | 27 | 0 | 5.11 |

Formulations for determination of dry, average and wet vegetation based on the amount of rainfall of previous 5 days.

conditions = [

(Water_Spring_Madonna_di_Canneto["Rainfall_5"]>53.3) & (Water_Spring_Madonna_di_Canneto["Dormant"]==0) ,

(Water_Spring_Madonna_di_Canneto["Rainfall_5"]>27.9) & (Water_Spring_Madonna_di_Canneto["Dormant"]==1),

(Water_Spring_Madonna_di_Canneto["Rainfall_5"]>35.6 ) & (Water_Spring_Madonna_di_Canneto["Dormant"]==0) ,

(Water_Spring_Madonna_di_Canneto["Rainfall_5"]>(27.9)) & (Water_Spring_Madonna_di_Canneto["Dormant"]==1),

(Water_Spring_Madonna_di_Canneto["Rainfall_5"]<=35.6 ) & (Water_Spring_Madonna_di_Canneto["Dormant"]==0) ,

(Water_Spring_Madonna_di_Canneto["Rainfall_5"]<=(27.9)) & (Water_Spring_Madonna_di_Canneto["Dormant"]==1),

]

choices = [2,2,1,1, 0.01,0.01]

Water_Spring_Madonna_di_Canneto['Wet'] = np.select(conditions, choices, default=np.nan)

dfW["Wet"].describe()

dfW["Wet"].plot();

Water_Spring_Madonna_di_Canneto[(Water_Spring_Madonna_di_Canneto["Rainfall_5"]>53.3) & (Water_Spring_Madonna_di_Canneto["Dormant"]==0)]

TR-55: 'Urban Hydrology for Small Watersheds'¶

Author: USDA Soil Conservation Service (SCS). Now Natural Resources Conservation Service (NRCS)

"Technical Release 55" (TR-55) presents simplified procedures to calculate storm runoff volume, peak rate of discharge, hydrographs, and storage volumes required for floodwater reservoirs. These procedures are applicable to small watersheds, especially urbanizing watersheds, in the United States." Comments:

TR-55 is perhaps the most widely used approach to hydrology in the US. Originally released in 1975, TR-55 provides a number of techniques that are useful for modeling small watersheds. Since the initial publication predated the widespread use of computers, TR-55 was designed primarily as a set of manual worksheets. A TR-55 computer program is now available, based closely on the manual calculations of TR-55.

TR-55 utilizes the SCS runoff equation to predict the peak rate of runoff as well as the total volume. TR-55 also provides a simplified "tabular method" for the generation of complete runoff hydrographs. The tabular method is a simplified technique based on calculations performed with TR-20. TR-55 specifically recommends the use of more precise tools, such as TR-20, if the assumptions of TR-55 are not met.

Recommendations:

While the TR-55 manual remains a most useful reference (it contains complete curve number tables and rainfall maps, among other things) most engineers have sought out more advanced or more accurate hydrology software. How to get it:

You can download the TR-55 Manual here. (2MB PDF format) The complete software and documentation is available from the NRCS TR-55 web page. Also see the NRCS Win-TR-55 web page.

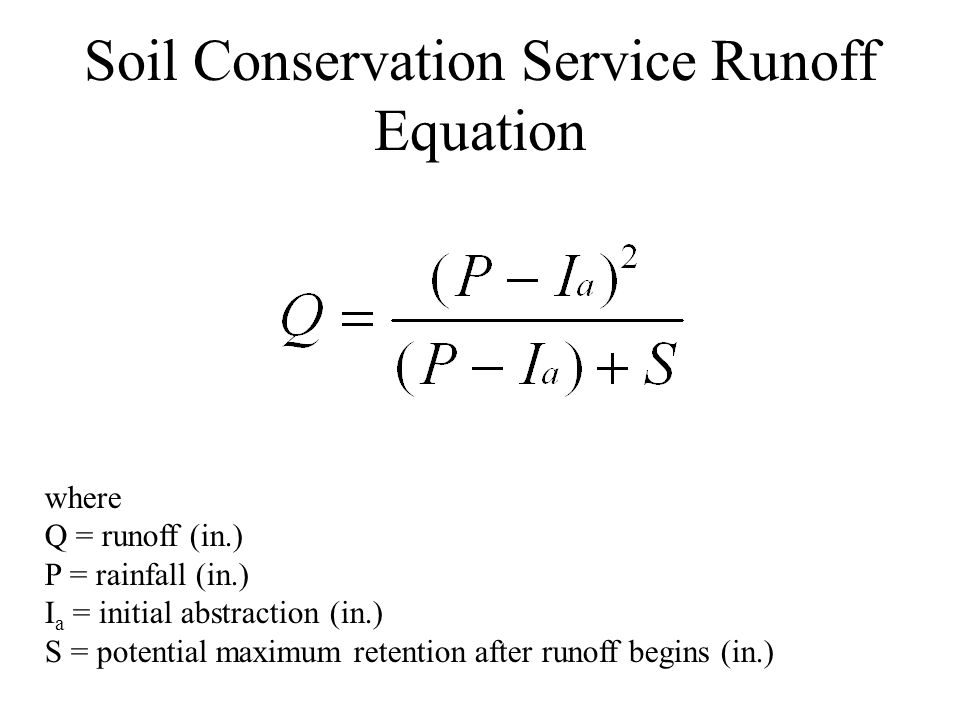

SCS CN- or curve number method¶

Runoff Equation¶

The SCS Runoff equation is used with the SCS Unit Hydrograph method to turn rainfall into runoff. It is an empirical method that expresses how much runoff volume is generated by a certain volume of rainfall.

The variable input parameters of the equation are the rainfall amount for a given duration and the basin’s runoff curve number (CN). For convenience, the runoff amount is typically referred to as a runoff volume even though it is expressed in units of depth (in., mm). In fact, this runoff depth is a normalized volume since it is generally distributed over a sub-basin or catchment area.

In hydrograph analysis the SCS runoff equation is applied against an incremental burst of rain to generate a runoff quantity. This runoff quantity is then distributed according to the unit hydrograph procedure, which ultimately develops the full runoff hydrograph.

The general form of the equation (U.S. customary units) is:

Q = Runoff depth (in)

P = Rainfall (in)

S = Maximum retention after runoff begins (in), or soil moisture storage deficit

$I_a$ = Initial abstraction

The initial abstraction includes water captured by vegetation, depression storage, evaporation, and infiltration. For any P, this abstraction must be satisfied before any runoff is possible. The universal default for the initial abstraction is given by the equation:

The ratio ${ \lambda }$, 0.2, was rarely modified. Recently, Woodward et al. (2003) analysing event rainfall-runoff data from several hundred plots recommended using $\lambda $=0.05. However, a different ratio ${ \lambda }$ has another CN set, so you have to recalculate S and CN!.

The potential maximum retention after runoff begins, S, is related to the soil and land use/vegetative cover characteristics of the watershed by the equation:

...where the runoff curve number is developed by coincidental tabulation of soil/land use extents in the weighted runoff curve number parameter, CN. CN has a range of 0 to 100.

Alternative for European metric in meter:

Estimation of surface runoff by curve numbers:

SCS Hydrologic Soil Groups: Soil textures¶

A. Sand, loamy sand, or sandy loam

B. Silt loam or loam

C. Sandy clay loam

D. Clay loam, silty clay loam, sandy clay, silty clay, or clay

imgInfil

Runoff curve numbers for cultivated/other agricultural lands and soil types¶

import pandas as pd

CN=pd.read_csv(r"evapotrans/SCS.csv", encoding="UTF-8",engine="python", header=[3]) # header=[2,3] skiprows

CN

| Cover type | Treatment | Hydrologic condition | A | B | C | D | |

|---|---|---|---|---|---|---|---|

| 0 | Fallow | Bare soil | — | 77 | 86 | 91 | 94 |

| 1 | Woods | - | Poor | 45 | 66 | 77 | 83 |

| 2 | Woods | - | Fair | 36 | 60 | 73 | 79 |

| 3 | Woods | - | Good | 30 | 55 | 70 | 77 |

WoodsC= CN.loc[3,"C"]; WoodsD= CN.loc[3,"D"]; BareC= CN.loc[0,"C"]; BareD= CN.loc[0,"D"];

print(WoodsC , WoodsD , BareC , BareD)

70 77 91 94

From soil maps I found that the soil right below the mountainous rocks should be loam, loamy sand or sandy loam. So it must be soil group B, A or mixture.

First we try the coefficients for group B, soil in good condition.

P=30

CN=55

S= (1000/CN)-10

Q = (P- 0.2 *S)**2/(P + 0.8*S) # S is related to the soil and cover conditions of the watershed through the CN.

print(round(Q,4))

22.0136

Calculate runoff depth¶

It is confusingly called a 'depth', but it is really a volume unit.

Water_Spring_Madonna_di_Canneto.head()

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | Rainfall_5 | Year | Rainfall_Set | Flow_Rate_Mad | TmnStd | Flow_m3_7 | Flow_m3_3 | Flow_m3_22 | Flow_7 | Flow_3 | Flow_22 | Flow_m3_90 | Rainfall_m3_90 | Flow_90 | Rainfall_90 | TmnStd_4 | T_7 | Rainfall_3 | Rainfall_4 | Rainfall_50 | Rainfall_7 | Rainfall_22 | Rainfall_30 | RainCutOff_6 | RainCutOff_5 | RainOvers_6 | CutOff | RainCutOff | RainCutOffmm | RCO_F_cumdif | R_F_cumdif | Dormant | Wet | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Date | ||||||||||||||||||||||||||||||||||||||

| 2015-03-13 | 0.0 | 5.70 | 255.96 | 72 | 3 | 2.4 | 2015 | 0.0 | 22114.64 | -0.43 | 171405.03 | 72180.35 | 532398.39 | 1983.85 | 835.42 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | 238.2 | 0.17 | 7.27 | 13.2 | 21.8 | 158.0 | 29.4 | 84.3 | 105.8 | 29.4 | 29.4 | 0.0 | 0 | 0.0 | 0.0 | -22114.64 | -22114.64 | 1 | 0.01 |

| 2015-03-14 | 5.8 | 7.10 | 289.55 | 73 | 3 | 8.2 | 2015 | 39150.0 | 25016.74 | 0.97 | 171405.03 | 72180.35 | 532398.39 | 1983.85 | 835.42 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | 238.2 | 0.17 | 7.27 | 13.2 | 21.8 | 158.0 | 29.4 | 84.3 | 105.8 | 29.4 | 29.4 | 0.0 | 0 | 5.8 | 174000.0 | 126868.62 | -7981.38 | 1 | 0.01 |

| 2015-03-15 | 7.4 | 4.90 | 289.92 | 74 | 3 | 15.6 | 2015 | 49950.0 | 25048.97 | -1.23 | 171405.03 | 72180.35 | 532398.39 | 1983.85 | 835.42 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | 238.2 | 0.17 | 7.27 | 13.2 | 21.8 | 158.0 | 29.4 | 84.3 | 105.8 | 29.4 | 29.4 | 0.0 | 0 | 7.4 | 222000.0 | 323819.65 | 16919.65 | 1 | 0.01 |

| 2015-03-16 | 8.6 | 7.50 | 290.08 | 75 | 3 | 24.2 | 2015 | 58050.0 | 25063.16 | 1.37 | 171405.03 | 75128.87 | 532398.39 | 1983.85 | 869.55 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | 238.2 | 0.17 | 7.27 | 21.8 | 21.8 | 158.0 | 29.4 | 84.3 | 105.8 | 29.4 | 29.4 | 0.0 | 0 | 8.6 | 258000.0 | 556756.49 | 49906.49 | 1 | 0.01 |

| 2015-03-17 | 7.6 | 7.55 | 290.69 | 76 | 3 | 29.4 | 2015 | 51300.0 | 25115.51 | 1.42 | 171405.03 | 75227.64 | 532398.39 | 1983.85 | 870.69 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | 238.2 | 0.63 | 7.27 | 23.6 | 29.4 | 158.0 | 29.4 | 84.3 | 105.8 | 29.4 | 29.4 | 0.0 | 0 | 7.6 | 228000.0 | 759640.98 | 76090.98 | 1 | 2.00 |

Water_Spring_Madonna_di_Canneto.loc[Water_Spring_Madonna_di_Canneto.Wet == 1]

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | PET | Dormant | Rainfall_5 | Wet | runoffdepth | Infilt | Infiltsum | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Date | ||||||||||||

| 2012-04-23 | 10.00 | 9.80 | NaN | 114.0 | 4.0 | 3.34 | 0.0 | 49.60 | 1.0 | 0.0 | 10.00 | 363.32 |

| 2012-04-24 | 3.60 | 10.80 | NaN | 115.0 | 4.0 | 3.34 | 0.0 | 40.20 | 1.0 | 0.0 | 3.60 | 366.92 |

| 2012-09-14 | 18.00 | 15.00 | NaN | 258.0 | 9.0 | 4.20 | 0.0 | 46.80 | 1.0 | 0.0 | 18.00 | 636.29 |

| 2012-09-15 | 0.00 | 16.00 | NaN | 259.0 | 9.0 | 4.20 | 0.0 | 44.40 | 1.0 | 0.0 | 0.00 | 636.29 |

| 2012-09-16 | 0.00 | 17.35 | NaN | 260.0 | 9.0 | 4.20 | 0.0 | 44.20 | 1.0 | 0.0 | 0.00 | 636.29 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 2019-09-11 | 12.39 | NaN | 295.89 | 254.0 | 9.0 | 4.20 | 0.0 | 50.43 | 1.0 | 0.0 | 12.39 | 11179.34 |

| 2019-09-12 | 1.07 | NaN | 294.60 | 255.0 | 9.0 | 4.20 | 0.0 | 43.77 | 1.0 | 0.0 | 1.07 | 11180.41 |

| 2019-09-13 | 4.97 | NaN | 295.88 | 256.0 | 9.0 | 4.20 | 0.0 | 48.14 | 1.0 | 0.0 | 4.97 | 11185.38 |

| 2019-09-14 | 6.83 | NaN | NaN | 257.0 | 9.0 | 4.20 | 0.0 | 51.70 | 1.0 | 0.0 | 6.83 | 11192.21 |

| 2019-10-28 | 20.69 | NaN | 218.23 | 301.0 | 10.0 | 2.52 | 0.0 | 49.50 | 1.0 | 0.0 | 20.69 | 11358.61 |

97 rows × 12 columns

The runoff volume / depth is based on dry, average or wet soil condition.

def runoffdepthdry(P):

#print(P)#if W == 0.1: #if dry

CN=35; Q=0

S= (25400/CN)- 254 # (1000/CN)-10 # inches

if P<= 0.2*S:

Q=0

elif P> 0.2*S:

Q = (P- 0.2 *S)**2/(P + 0.8*S) # S is related to the soil and cover conditions of the watershed through the CN.

return Q #round(, 4)

def runoffdepthavg(P):

CN=55; Q=0 # average

S= (25400/CN)- 254 # (1000/CN)-10 # inches

if P<= 0.2*S:

Q=0

elif P> 0.2*S:

Q = (P- 0.2 *S)**2/(P + 0.8*S) # S is related to the soil and cover conditions of the watershed through the CN.

return Q #

def runoffdepthwet(P):

#if wet

CN=74; Q=0

S= (25400/CN)- 254 # (1000/CN)-10 # inches

if P<= 0.2*S:

Q=0

elif P> 0.2*S:

Q = (P- 0.2 *S)**2/(P + 0.8*S) # S is related to the soil and cover conditions of the watershed through the CN.

return Q #

def runoffdepth2(P):

CN=70; Q=0 # ex CN=55

S= (25400/CN)- 254 # in mm retention coefficient

if P<= 0.05*S: Q= 0

elif P> 0.05*S:

Q = (P- 0.05 *S)**2/(P + 0.95*S) # S is related to the soil and cover conditions of the watershed through the CN.

return Q #

# if Water_Spring_Madonna_di_Canneto['Wet'] == 0.1:

droog= Water_Spring_Madonna_di_Canneto.loc[Water_Spring_Madonna_di_Canneto.Wet == 0.01]

Water_Spring_Madonna_di_Canneto['runoffdepth'] = droog.applymap( runoffdepthdry ).loc[:, 'Rainfall_Settefrati'] # in mm

# elif Water_Spring_Madonna_di_Canneto['Wet'] == 1:

medium =Water_Spring_Madonna_di_Canneto.loc[Water_Spring_Madonna_di_Canneto.Wet == 1]

Water_Spring_Madonna_di_Canneto['runoffdepth'] = medium.applymap( runoffdepthavg ).loc[:, 'Rainfall_Settefrati']

# elif Water_Spring_Madonna_di_Canneto['Wet'] == 2:

nat= Water_Spring_Madonna_di_Canneto.loc[Water_Spring_Madonna_di_Canneto.Wet == 2]

Water_Spring_Madonna_di_Canneto['runoffdepth'] = nat.applymap( runoffdepthwet ).loc[:, 'Rainfall_Settefrati']

Water_Spring_Madonna_di_Canneto['runoffdepth']= Water_Spring_Madonna_di_Canneto.runoffdepth.replace( np.nan, 0)

Water_Spring_Madonna_di_Canneto['runoffdepth2'] = Water_Spring_Madonna_di_Canneto.applymap( runoffdepth2 ).loc[:, 'Rainfall_Settefrati']

Water_Spring_Madonna_di_Canneto["Infilt"] =Water_Spring_Madonna_di_Canneto["Rainfall_Settefrati"]-Water_Spring_Madonna_di_Canneto["runoffdepth"]

There is no runoff until the rainwater starts ponding, and this is implemented by the empiric parameter $\lambda$, originally valued at: 0.20, revised value: 0.05. (Hawkings, et ...)

print(round(0.05*((25400/55)- 254),3), round(0.05*((25400/30)- 254),2 ), " S:",round((25400/55)- 254,1 ))

10.391 29.63 S: 207.8

Accounting for forest cover on steep slopes, and rocks or barrow soils that make at least 25% on slopes and on a plateau.

print(round(0.05*((25400/71)- 254),3), round(0.05*((25400/80)- 254),2 ), " S:",round((25400/71)- 254,1 ), "CN combined",55*0.66+93*0.34)

5.187 3.18 S: 103.7 CN combined 67.92

dfW[dfW["Rainfall_Settefrati"] >5.187 ] # ex 10.39

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | date | date_check | Rainfall_5 | |

|---|---|---|---|---|---|---|---|---|

| Date | ||||||||

| 2012-02-01 | 13.8 | 0.40 | NaN | 32 | 2 | 2012-02-01 | 1 days | 16.8 |

| 2012-02-09 | 14.8 | 0.80 | NaN | 40 | 2 | 2012-02-09 | 1 days | 31.2 |

| 2012-02-11 | 10.4 | 0.60 | NaN | 42 | 2 | 2012-02-11 | 1 days | 35.2 |

| 2012-02-12 | 13.2 | 0.25 | NaN | 43 | 2 | 2012-02-12 | 1 days | 47.2 |

| 2012-02-13 | 16.4 | -0.55 | NaN | 44 | 2 | 2012-02-13 | 1 days | 55.8 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 2018-11-24 | 26.9 | 10.35 | 274.27 | 328 | 11 | 2018-11-24 | 1 days | 99.7 |

| 2018-11-25 | 46.3 | 10.15 | 274.32 | 329 | 11 | 2018-11-25 | 1 days | 74.4 |

| 2018-12-14 | 68.2 | 6.25 | NaN | 348 | 12 | 2018-12-14 | 1 days | 77.8 |

| 2018-12-15 | 23.2 | 3.10 | NaN | 349 | 12 | 2018-12-15 | 1 days | 100.8 |

| 2018-12-17 | 17.8 | 5.20 | NaN | 351 | 12 | 2018-12-17 | 1 days | 120.8 |

354 rows × 8 columns

We have to separate the runoff water, which cannot infiltrate into the soil, from the calculation.

def infiltration(row):

if row["Rainfall_Settefrati"]>= 5.187: # ex 10.39

row["Infilt"] =row["Rainfall_Settefrati"]-row["runoffdepth"]-row["PET"]

elif row["Rainfall_Settefrati"]< 5.187:

row["Infilt"] =row["Rainfall_Settefrati"]-row["PET"] #

return row["Infilt"]

Water_Spring_Madonna_di_Canneto['Infilt'] = Water_Spring_Madonna_di_Canneto.apply(lambda row: infiltration(row), axis=1)

Water_Spring_Madonna_di_Canneto.head() #= Water_Spring_Madonna_di_Canneto.dropna()

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | Week | Dormant | Rainfall_5 | Wet | runoffdepth | PET | PETs | Infilt_ | Infiltsum | Infilt | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Date | |||||||||||||||

| 2012-01-01 | 0.0 | 5.25 | NaN | 1 | 1 | 52 | 1 | 0.0 | 0.01 | 0.0 | 0.91 | 0.91 | -0.91 | -0.91 | -0.91 |

| 2012-01-02 | 5.6 | 6.65 | NaN | 2 | 1 | 1 | 1 | 0.0 | 0.01 | 0.0 | 0.91 | 0.91 | 4.69 | 3.79 | 4.69 |

| 2012-01-03 | 10.0 | 8.85 | NaN | 3 | 1 | 1 | 1 | 0.0 | 0.01 | 0.0 | 0.91 | 0.91 | 9.09 | 12.88 | 9.09 |

| 2012-01-04 | 0.0 | 6.75 | NaN | 4 | 1 | 1 | 1 | 0.0 | 0.01 | 0.0 | 0.91 | 0.91 | -0.91 | 11.98 | -0.91 |

| 2012-01-05 | 1.0 | 5.55 | NaN | 5 | 1 | 1 | 1 | 16.6 | 0.01 | 0.0 | 0.91 | 0.91 | 0.09 | 12.07 | 0.09 |

Water_Spring_Madonna_di_Canneto["Infiltsum"] = Water_Spring_Madonna_di_Canneto["Infilt"].cumsum()

Water_Spring_Madonna_di_Canneto["Infilt2"] =Water_Spring_Madonna_di_Canneto["Rainfall_Settefrati"]-Water_Spring_Madonna_di_Canneto["runoffdepth2"]

Water_Spring_Madonna_di_Canneto["2018"]["Infilt"]#,"Infilt2"

2021-03-11 19:31:23,009 [2736] WARNING py.warnings: <ipython-input-98-860bdca5875b>:1: FutureWarning: Indexing a DataFrame with a datetimelike index using a single string to slice the rows, like `frame[string]`, is deprecated and will be removed in a future version. Use `frame.loc[string]` instead. Water_Spring_Madonna_di_Canneto["2018"]["Infilt"]#,"Infilt2"

Date

2018-01-01 4.29

2018-01-02 4.09

2018-01-03 0.29

2018-01-04 -0.71

2018-01-05 -0.91

...

2018-12-27 -0.89

2018-12-28 -0.89

2018-12-29 -0.89

2018-12-30 -0.89

2018-12-31 -0.89

Name: Infilt, Length: 365, dtype: float64

Water_Spring_Madonna_di_Canneto["runoffdepth"].plot( figsize=(15, 4)); #,"Infilt2"]

Water_Spring_Madonna_di_Canneto["Infilt"].plot( figsize=(15, 4)); #,"Infilt2"]

Before resampling, and convertion of units, and calculate netto in - out, or "rest", we must handle first the other features...

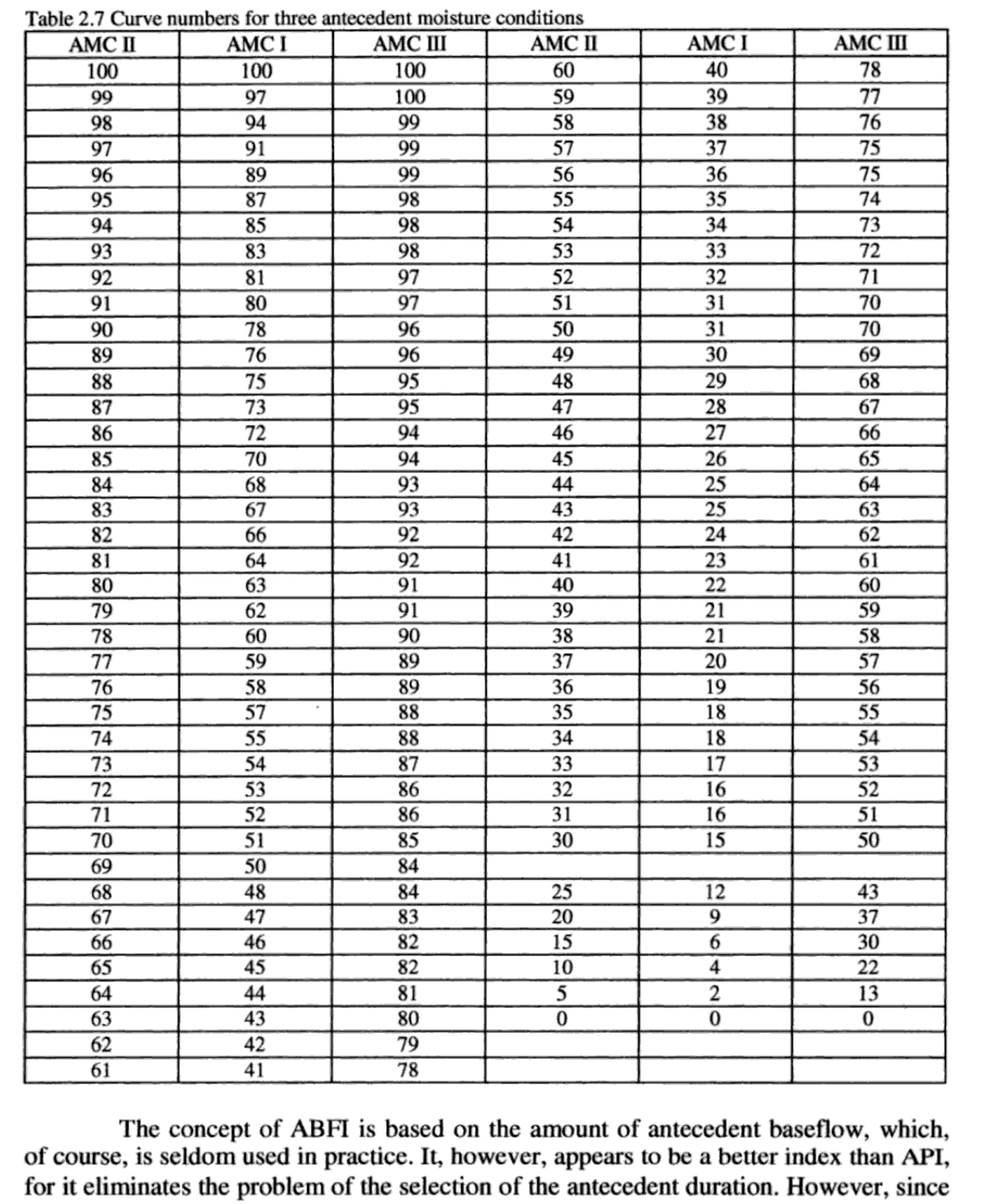

The determination of CN values: by formula method, or by conversion table method¶

The CN values in normal wetness conditions can be determined through NEH integrated with other conditions, such as land use and hydrologic conditions. The values of other two AMC levels can be obtained, by using conversion tables, or according to the conversion formulas [2] shown as below:

$$CN_1 =(4.2*CN_2 )/( 10- 0.058* CN_2)$$R. in inches!!

$$CN_{3} =( 23*CN_2)/(10 -0.12*CN_2 )$$R. in inches!!

When the CN values are determined, the runoff estimation can be made combined with given rainfall account. $Infiltration=Pr-RO-ET$

CN2=55

#CN1 =( 4.2*CN2)/(10 -(0.058*CN2) ); print("CN1:",round(CN1))

CN1 = CN2/(2.281 -0.01281*CN2) ; print("CN1:",round(CN1), " in meters")

CN1: 35 in meters

CN2=55

#CN3 =( 23*CN2)/(10 -(0.012*CN2) ); print("CN3:",round(CN3))

CN3 = CN2/(0.427 -(0.00573*CN2)) ; print("CN3:",round(CN3), " in meters","the formula fails to produce a meaningfull CN3, so we use the conv. tables")

CN3: 492 in meters the formula fails to produce a meaningfull CN3, so we use the conv. tables

averageTemp2019.info()

<class 'pandas.core.frame.DataFrame'> DatetimeIndex: 365 entries, 2019-01-01 to 2019-12-31 Freq: D Data columns (total 6 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 Rainfall_Settefrati 365 non-null float64 1 Temperature_Settefrati 365 non-null float64 2 Flow_Rate_Madonna_di_Canneto 365 non-null float64 3 doy 365 non-null float64 4 Month 365 non-null int64 5 PET 365 non-null float64 dtypes: float64(5), int64(1) memory usage: 28.1 KB

averageTemp2019

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | PET | |

|---|---|---|---|---|---|---|

| 2019-01-01 | 1.49 | 4.54 | 268.39 | 1.00 | 1 | 0.91 |

| 2019-01-02 | 3.17 | 5.24 | 268.14 | 2.00 | 1 | 0.91 |

| 2019-01-03 | 6.59 | 6.67 | 268.43 | 3.00 | 1 | 0.91 |

| 2019-01-04 | 3.47 | 6.89 | 270.83 | 4.00 | 1 | 0.91 |

| 2019-01-05 | 6.29 | 5.87 | 270.67 | 5.00 | 1 | 0.91 |

| ... | ... | ... | ... | ... | ... | ... |

| 2019-12-27 | 15.26 | 5.96 | 269.19 | 361.25 | 12 | 0.89 |

| 2019-12-28 | 3.29 | 6.19 | 268.90 | 362.25 | 12 | 0.89 |

| 2019-12-29 | 1.51 | 4.71 | 269.00 | 363.25 | 12 | 0.89 |

| 2019-12-30 | 1.49 | 3.54 | 269.14 | 364.25 | 12 | 0.89 |

| 2019-12-31 | 0.11 | 3.94 | 268.76 | 365.25 | 12 | 0.89 |

365 rows × 6 columns

Temperature¶

this series stops end 2018, and nada from 2019 on... but I could use the averages distilled from the other years

Water_Spring_Madonna_di_Canneto.Temperature_Settefrati.plot(figsize=(20, 3.8));

Water_Spring_Madonna_di_Canneto.update( averageTemp2019["2019-01-01":"2019-12-31"].Temperature_Settefrati) #["2019-01-01":"2019-12-31"]

Water_Spring_Madonna_di_Canneto.update( averageTemp2020["2020-01-01":"2020-12-31"].Temperature_Settefrati)#

Water_Spring_Madonna_di_Canneto["2018-01-01":"2020-12-31"].Temperature_Settefrati.plot();

<AxesSubplot:xlabel='Date'>

Water_Spring_Madonna_di_Canneto.tail() #info()

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | Rainfall_5 | Year | Rainfall_Set | Flow_Rate_Mad | TmnStd | Flow_m3_7 | Flow_m3_3 | Flow_m3_22 | Flow_7 | Flow_3 | Flow_22 | Flow_m3_90 | Rainfall_m3_90 | Flow_90 | ... | Rainfall_4 | Rainfall_50 | Rainfall_7 | Rainfall_22 | Rainfall_30 | RainCutOff_6 | RainCutOff_5 | RainOvers_6 | CutOff | RainCutOff | RainCutOffmm | RCO_F_cumdif | R_F_cumdif | Dormant | Wet | runoffdepth | PET | Infilt | Infiltsum | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Date | |||||||||||||||||||||||||||||||||||||||

| 2020-06-26 | 1.43 | 20.41 | 223.92 | 178 | 6 | 0.0 | 2020 | NaN | 19346.61 | NaN | 135529.14 | 58061.30 | 426305.61 | 1568.62 | 672.01 | 4934.09 | 1.75e+06 | NaN | 20198.06 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | NaN | NaN | NaN | NaN | 0 | 0.01 | 0.0 | 6.02 | -4.60 | 328.09 |

| 2020-06-27 | 1.50 | 19.99 | 223.86 | 179 | 6 | 0.0 | 2020 | NaN | 19341.66 | NaN | 135504.96 | 58043.05 | 426311.11 | 1568.34 | 671.79 | 4934.16 | 1.75e+06 | NaN | 20197.33 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | NaN | NaN | NaN | NaN | 0 | 0.01 | 0.0 | 6.02 | -4.52 | 323.57 |

| 2020-06-28 | 0.26 | 20.67 | 223.76 | 180 | 6 | 0.0 | 2020 | NaN | 19333.24 | NaN | 135468.81 | 58021.51 | 426260.72 | 1567.93 | 671.55 | 4933.57 | 1.74e+06 | NaN | 20196.59 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | NaN | NaN | NaN | NaN | 0 | 0.01 | 0.0 | 6.02 | -5.77 | 317.80 |

| 2020-06-29 | 0.09 | 20.70 | 223.77 | 181 | 6 | 0.0 | 2020 | NaN | 19333.41 | NaN | 135433.90 | 58008.31 | 426191.69 | 1567.52 | 671.39 | 4932.77 | 1.74e+06 | NaN | 20195.81 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | NaN | NaN | NaN | NaN | 0 | 0.01 | 0.0 | 6.02 | -5.94 | 311.86 |

| 2020-06-30 | 1.84 | 21.80 | 223.75 | 182 | 6 | 0.0 | 2020 | NaN | 19332.23 | NaN | 135401.84 | 57998.88 | 426128.56 | 1567.15 | 671.28 | 4932.04 | 1.74e+06 | NaN | 20195.05 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | NaN | NaN | NaN | NaN | 0 | 0.01 | 0.0 | 6.02 | -4.18 | 307.68 |

5 rows × 42 columns

Flow rate¶

We'll replace all repeated identical values in column 'Flow_Rate', as I find them doubious, and we'll also replace nan's with 0.

Or shall I drop the duplicates?

- Flow_Rate_Madonna_di_Canneto == 296.6075439

- Flow_Rate_Madonna_di_Canneto == 221.1757507

doubles period 2: 5/11/2016: 19/02/2017

# Drop rows where all data is the same # dfC =

Water_Spring_Madonna_di_Canneto =Water_Spring_Madonna_di_Canneto.drop_duplicates( subset="Flow_Rate_Madonna_di_Canneto", keep='last')#,limit=7

no source water flow means no data, or Nan; but 0 can also mean no running water...

Water_Spring_Madonna_di_Canneto['Flow_Rate_Madonna_di_Canneto']= Water_Spring_Madonna_di_Canneto.Flow_Rate_Madonna_di_Canneto.replace( np.nan, 0)

Water_Spring_Madonna_di_Canneto["2016-04-14"].loc['Flow_Rate_Madonna_di_Canneto']

pd.to_datetime(["2016-04-14":"2016-04-22"]), Water_Spring_Madonna_di_Canneto.Flow_Rate_Madonna_di_Canneto]=0 pd.to_datetime(["2017-02-05":"2017-02-19"]), Water_Spring_Madonna_di_Canneto.Flow_Rate_Madonna_di_Canneto]=0

Conversion of units etc...¶

Conversion of units: mm/d ->m³/d , and l/s-> m³/d. This way, we obtain a common unit m³, which is usefull to spot numerical mistakes and for a water balance.

Also, the creation of an indicator for the months in order to be able to distinguish the progression of seasons.

The actual drainage area for the source is not given, and is probably unknown. I'll give here an estimation based on my study of the topology of the place. I imagined from where higher up any water could trickle down and reach the water spring.

Update 15/5/2022:¶

I found a map about the aquifers and subterrain water resources of the parc Abruzzo. It features an outline for the drainage area S. capodaqua Val Canneto. A rough calculation of the surface area is 22-23 km².

pd.set_option('mode.chained_assignment',None)

Water_Spring_Madonna_di_Canneto["Month"] =Water_Spring_Madonna_di_Canneto.index.month

Water_Spring_Madonna_di_Canneto["Year"] =Water_Spring_Madonna_di_Canneto.index.year

Water_Spring_Madonna_di_Canneto["Rainfall_Set"] =Water_Spring_Madonna_di_Canneto.Rainfall_Settefrati* 22 *1000*1000/ 1000 # ex 4.5*1.5

Water_Spring_Madonna_di_Canneto["Flow_Rate_Mad"]= Water_Spring_Madonna_di_Canneto.Flow_Rate_Madonna_di_Canneto*24*3600 /1000 #

Water_Spring_Madonna_di_Canneto.info() # no duplicates

<class 'pandas.core.frame.DataFrame'> DatetimeIndex: 1277 entries, 2015-03-13 to 2020-06-30 Data columns (total 11 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 Rainfall_Settefrati 768 non-null float64 1 Temperature_Settefrati 768 non-null float64 2 Flow_Rate_Madonna_di_Canneto 1277 non-null float64 3 doy 1277 non-null int64 4 Month 1277 non-null int64 5 date 1277 non-null datetime64[ns] 6 date_check 1277 non-null timedelta64[ns] 7 Rainfall_5 1277 non-null float64 8 Year 1277 non-null int64 9 Rainfall_Set 768 non-null float64 10 Flow_Rate_Mad 1277 non-null float64 dtypes: datetime64[ns](1), float64(6), int64(3), timedelta64[ns](1) memory usage: 119.7 KB

Water_Spring_Madonna_di_Canneto.head() # no duplicates

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | PET | Dormant | Rainfall_5 | Wet | runoffdepth | Infilt | Infiltsum | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Date | ||||||||||||

| 2012-01-01 00:00:00 | 0.0 | 5.25 | NaN | 1.0 | 1.0 | 0.91 | 1.0 | 0.0 | 0.01 | 0.0 | 0.0 | 0.0 |

| 2012-01-02 00:00:00 | 5.6 | 6.65 | NaN | 2.0 | 1.0 | 0.91 | 1.0 | 0.0 | 0.01 | 0.0 | 5.6 | 5.6 |

| 2012-01-03 00:00:00 | 10.0 | 8.85 | NaN | 3.0 | 1.0 | 0.91 | 1.0 | 0.0 | 0.01 | 0.0 | 10.0 | 15.6 |

| 2012-01-04 00:00:00 | 0.0 | 6.75 | NaN | 4.0 | 1.0 | 0.91 | 1.0 | 0.0 | 0.01 | 0.0 | 0.0 | 15.6 |

| 2012-01-05 00:00:00 | 1.0 | 5.55 | NaN | 5.0 | 1.0 | 0.91 | 1.0 | 16.6 | 0.01 | 0.0 | 1.0 | 16.6 |

%precision 4

2500*750

1875000

print("Flow rates: Minimum:", Water_Spring_Madonna_di_Canneto.iloc[:,2].min(), "Average:", Water_Spring_Madonna_di_Canneto.iloc[:,2].mean(), "Maximum:", Water_Spring_Madonna_di_Canneto.iloc[:,2].max(),"St.d.:",

Water_Spring_Madonna_di_Canneto.iloc[:,2].std(),"Variation:", Water_Spring_Madonna_di_Canneto.iloc[:,2].var())

Flow rates: Minimum: 0.0 Average: 260.4125854539546 Maximum: 300.1609827 St.d.: 32.624516081381806 Variation: 1064.3590495443402

Water_Spring_Madonna_di_Canneto.describe().T # no duplicates

| count | mean | std | min | 25% | 50% | 75% | max | |

|---|---|---|---|---|---|---|---|---|

| Rainfall_Settefrati | 764.0 | 4.17 | 11.90 | 0.00e+00 | 0.00e+00 | 0.00e+00 | 2.42 | 140.80 |

| Temperature_Settefrati | 764.0 | 15.19 | 6.11 | 5.50e-01 | 1.07e+01 | 1.52e+01 | 19.95 | 31.10 |

| Flow_Rate_Madonna_di_Canneto | 764.0 | 276.62 | 23.44 | 1.88e+02 | 2.75e+02 | 2.85e+02 | 290.60 | 300.16 |

| doy | 764.0 | 198.74 | 90.32 | 1.00e+00 | 1.26e+02 | 2.03e+02 | 275.25 | 365.00 |

| Month | 764.0 | 7.03 | 2.97 | 1.00e+00 | 5.00e+00 | 7.00e+00 | 10.00 | 12.00 |

| PET | 764.0 | 3.92 | 2.16 | 8.95e-01 | 2.04e+00 | 4.20e+00 | 6.02 | 7.52 |

| Dormant | 764.0 | 0.33 | 0.47 | 0.00e+00 | 0.00e+00 | 0.00e+00 | 1.00 | 1.00 |

| Rainfall_5 | 764.0 | 20.99 | 35.87 | -7.11e-15 | 1.40e+00 | 1.02e+01 | 25.40 | 359.60 |

| Flag1 | 764.0 | 0.33 | 0.70 | 1.00e-02 | 1.00e-02 | 1.00e-02 | 0.01 | 2.00 |

| Wet | 764.0 | 0.33 | 0.70 | 1.00e-02 | 1.00e-02 | 1.00e-02 | 0.01 | 2.00 |

| Flow_7 | 764.0 | 1936.59 | 156.19 | 1.35e+03 | 1.92e+03 | 1.99e+03 | 2032.53 | 2083.16 |

| Flow_3 | 764.0 | 829.92 | 69.01 | 5.67e+02 | 8.24e+02 | 8.53e+02 | 871.33 | 900.10 |

| Flow_22 | 764.0 | 6086.74 | 433.35 | 4.44e+03 | 6.03e+03 | 6.23e+03 | 6361.22 | 6491.86 |

| RainCutOff_5 | 764.0 | 20.87 | 35.61 | -1.07e-14 | 1.40e+00 | 1.02e+01 | 25.40 | 359.60 |

| RainCutOff_6 | 764.0 | 25.06 | 39.64 | 0.00e+00 | 2.80e+00 | 1.24e+01 | 31.42 | 360.00 |

| RainOvers_6 | 764.0 | 4.18 | 11.90 | -1.42e-14 | 7.11e-15 | 1.42e-14 | 2.60 | 140.80 |

| CutOff | 764.0 | 0.11 | 0.32 | 0.00e+00 | 0.00e+00 | 0.00e+00 | 0.00 | 1.00 |

| RainCutOff | 764.0 | 2.44 | 5.74 | 0.00e+00 | 0.00e+00 | 0.00e+00 | 1.60 | 47.40 |

Water_Spring_Madonna_di_Canneto.to_csv("Madonna_di_Canneto_PET.csv" )

Here, I create a column to simulate a minimum temperature.

Water_Spring_Madonna_di_Canneto.Temperature_Settefrati.std(skipna=True)

6.130628847926524

Water_Spring_Madonna_di_Canneto["TmnStd"] =Water_Spring_Madonna_di_Canneto.Temperature_Settefrati- Water_Spring_Madonna_di_Canneto.Temperature_Settefrati.std(skipna=True)

Water_Spring_Madonna_di_Canneto=pd.read_csv("Madonna_di_Canneto_PET.csv", parse_dates=["Date"],index_col=0 ); Water_Spring_Madonna_di_Canneto

| Rainfall_Settefrati | Temperature_Settefrati | Flow_Rate_Madonna_di_Canneto | doy | Month | Rainfall_5 | Year | Rainfall_Set | Flow_Rate_Mad | TmnStd | Flow_m3_7 | Flow_m3_3 | Flow_m3_22 | Flow_7 | Flow_3 | Flow_22 | Flow_m3_90 | Rainfall_m3_90 | Flow_90 | ... | Rainfall_4 | Rainfall_50 | Rainfall_7 | Rainfall_22 | Rainfall_30 | RainCutOff_6 | RainCutOff_5 | RainOvers_6 | CutOff | RainCutOff | RainCutOffmm | RCO_F_cumdif | R_F_cumdif | Dormant | Wet | runoffdepth | PET | Infilt | Infiltsum | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Date | |||||||||||||||||||||||||||||||||||||||

| 2015-03-13 | 0.00 | 5.70 | 255.96 | 72 | 3 | 2.4 | 2015 | 0.0 | 22114.64 | -0.43 | 171405.03 | 72180.35 | 532398.39 | 1983.85 | 835.42 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | ... | 21.8 | 158.0 | 29.4 | 84.3 | 105.8 | 29.4 | 29.4 | 0.0 | 0 | 0.0 | 0.0 | -22114.64 | -22114.64 | 1 | 0.01 | 0.0 | 2.04 | -2.04 | -2.04 |

| 2015-03-14 | 5.80 | 7.10 | 289.55 | 73 | 3 | 8.2 | 2015 | 39150.0 | 25016.74 | 0.97 | 171405.03 | 72180.35 | 532398.39 | 1983.85 | 835.42 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | ... | 21.8 | 158.0 | 29.4 | 84.3 | 105.8 | 29.4 | 29.4 | 0.0 | 0 | 5.8 | 174000.0 | 126868.62 | -7981.38 | 1 | 0.01 | 0.0 | 2.04 | 3.76 | 1.72 |

| 2015-03-15 | 7.40 | 4.90 | 289.92 | 74 | 3 | 15.6 | 2015 | 49950.0 | 25048.97 | -1.23 | 171405.03 | 72180.35 | 532398.39 | 1983.85 | 835.42 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | ... | 21.8 | 158.0 | 29.4 | 84.3 | 105.8 | 29.4 | 29.4 | 0.0 | 0 | 7.4 | 222000.0 | 323819.65 | 16919.65 | 1 | 0.01 | 0.0 | 2.04 | 5.36 | 7.08 |

| 2015-03-16 | 8.60 | 7.50 | 290.08 | 75 | 3 | 24.2 | 2015 | 58050.0 | 25063.16 | 1.37 | 171405.03 | 75128.87 | 532398.39 | 1983.85 | 869.55 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | ... | 21.8 | 158.0 | 29.4 | 84.3 | 105.8 | 29.4 | 29.4 | 0.0 | 0 | 8.6 | 258000.0 | 556756.49 | 49906.49 | 1 | 0.01 | 0.0 | 2.04 | 6.56 | 13.64 |

| 2015-03-17 | 7.60 | 7.55 | 290.69 | 76 | 3 | 29.4 | 2015 | 51300.0 | 25115.51 | 1.42 | 171405.03 | 75227.64 | 532398.39 | 1983.85 | 870.69 | 6162.02 | 2.14e+06 | 1.61e+06 | 24805.53 | ... | 29.4 | 158.0 | 29.4 | 84.3 | 105.8 | 29.4 | 29.4 | 0.0 | 0 | 7.6 | 228000.0 | 759640.98 | 76090.98 | 1 | 2.00 | 0.0 | 2.04 | 5.56 | 19.19 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 2020-06-26 | 1.43 | 20.41 | 223.92 | 178 | 6 | 0.0 | 2020 | NaN | 19346.61 | NaN | 135529.14 | 58061.30 | 426305.61 | 1568.62 | 672.01 | 4934.09 | 1.75e+06 | NaN | 20198.06 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | NaN | NaN | NaN | NaN | 0 | 0.01 | 0.0 | 6.02 | -4.60 | 328.09 |

| 2020-06-27 | 1.50 | 19.99 | 223.86 | 179 | 6 | 0.0 | 2020 | NaN | 19341.66 | NaN | 135504.96 | 58043.05 | 426311.11 | 1568.34 | 671.79 | 4934.16 | 1.75e+06 | NaN | 20197.33 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | NaN | NaN | NaN | NaN | 0 | 0.01 | 0.0 | 6.02 | -4.52 | 323.57 |