Demo: Neural network training for denoising of Tribolium castaneum

This notebook demonstrates training a CARE model for a 3D denoising task. It assumes that the training data was already generated via 1_datagen.ipynb and saved to disk as data/my_training_data.npz.

Note that training a neural network for actual use should be done on more (representative) data and with more training time.

More documentation is available at http://csbdeep.bioimagecomputing.com/doc/.

from __future__ import print_function, unicode_literals, absolute_import, division

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

%config InlineBackend.figure_format = 'retina'

from tifffile import imread

from csbdeep.utils import axes_dict, plot_some, plot_history

from csbdeep.utils.tf import limit_gpu_memory

from csbdeep.io import load_training_data

from csbdeep.models import Config, CARE

The TensorFlow backend uses all available GPU memory by default, hence it can be useful to limit it:

# limit_gpu_memory(fraction=1/2)

(X,Y), (X_val,Y_val), axes = load_training_data('data/my_training_data.npz', validation_split=0.1, verbose=True)

c = axes_dict(axes)['C']

n_channel_in, n_channel_out = X.shape[c], Y.shape[c]

number of training images: 922

number of validation images: 102

image size (3D): (16, 64, 64)

axes: SZYXC

channels in / out: 1 / 1
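The numbers in this summary can be reproduced with a little arithmetic. The following is a minimal sketch, not CSBDeep's implementation: `split_counts` and `axis_index` are hypothetical helpers, and rounding the validation count to the nearest integer is an assumption that happens to match the reported split of 922 + 102 = 1024 patches.

```python
def split_counts(n_images, validation_split):
    """Return (n_train, n_val) for a fractional split (rounding rule is an assumption)."""
    n_val = int(round(n_images * validation_split))
    return n_images - n_val, n_val


def axis_index(axes, name):
    """Return the position of an axis letter in an axes string such as 'SZYXC'."""
    return axes.index(name)


n_train, n_val = split_counts(922 + 102, 0.1)
print(n_train, n_val)

# Array shape implied by the printed summary: S=922 samples, Z=16, Y=64, X=64, C=1.
shape = (n_train, 16, 64, 64, 1)
c = axis_index('SZYXC', 'C')
print(c, shape[c])  # channel axis position and number of channels
```

The channel count read from position `c` of the shape is what the notebook stores in n_channel_in and n_channel_out.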

plt.figure(figsize=(12,5))

plot_some(X_val[:5],Y_val[:5])

plt.suptitle('5 example validation patches (top row: source, bottom row: target)');

CARE model

Before we construct the actual CARE model, we have to define its configuration via a Config object, which includes

- parameters of the underlying neural network,

- the learning rate,

- the number of parameter updates per epoch,

- the loss function, and

- whether the model is probabilistic or not.

The defaults should be sensible in many cases, so a change should only be necessary if the training process fails.

Important: For this notebook we use a very small number of update steps per epoch to get immediate feedback. This number should be increased considerably (e.g. train_steps_per_epoch=400) to obtain a well-trained model.
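To get a feeling for how little data 10 steps per epoch cover, the sketch below compares 10 and 400 steps. It assumes that each update step consumes one batch of train_batch_size=16 patches (the default shown in the printed configuration) and uses the 922 training patches reported by load_training_data; `epoch_coverage` is a hypothetical helper, not part of CSBDeep.

```python
def epoch_coverage(n_train, batch_size, steps_per_epoch):
    """Return (patches seen per epoch, that number as a fraction of the training set)."""
    patches = batch_size * steps_per_epoch
    return patches, patches / n_train


for steps in (10, 400):
    patches, fraction = epoch_coverage(n_train=922, batch_size=16, steps_per_epoch=steps)
    print("{:>3} steps/epoch -> {} patches per epoch ({:.2f}x the training set)".format(
        steps, patches, fraction))
```

Under these assumptions, an epoch of 10 steps sees only 160 patches, a small fraction of the training set, whereas 400 steps pass over it several times.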

config = Config(axes, n_channel_in, n_channel_out, train_steps_per_epoch=10)

print(config)

vars(config)

Config(axes='ZYXC', n_channel_in=1, n_channel_out=1, n_dim=3, probabilistic=False, train_batch_size=16, train_checkpoint='weights_best.h5', train_checkpoint_epoch='weights_now.h5', train_checkpoint_last='weights_last.h5', train_epochs=100, train_learning_rate=0.0004, train_loss='mae', train_reduce_lr={'factor': 0.5, 'patience': 10, 'min_delta': 0}, train_steps_per_epoch=10, train_tensorboard=True, unet_input_shape=(None, None, None, 1), unet_kern_size=3, unet_last_activation='linear', unet_n_depth=2, unet_n_first=32, unet_residual=True)

{'n_dim': 3,

'axes': 'ZYXC',

'n_channel_in': 1,

'n_channel_out': 1,

'train_checkpoint': 'weights_best.h5',

'train_checkpoint_last': 'weights_last.h5',

'train_checkpoint_epoch': 'weights_now.h5',

'probabilistic': False,

'unet_residual': True,

'unet_n_depth': 2,

'unet_kern_size': 3,

'unet_n_first': 32,

'unet_last_activation': 'linear',

'unet_input_shape': (None, None, None, 1),

'train_loss': 'mae',

'train_epochs': 100,

'train_steps_per_epoch': 10,

'train_learning_rate': 0.0004,

'train_batch_size': 16,

'train_tensorboard': True,

'train_reduce_lr': {'factor': 0.5, 'patience': 10, 'min_delta': 0}}
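The configuration lists train_loss='mae', and the training log reports mse as an additional metric. As a reminder of how the two differ, here is a minimal sketch with plain Python lists; the numbers are made up for illustration and the helper functions are not CSBDeep code.

```python
def mae(target, prediction):
    """Mean absolute error: average of |target - prediction|."""
    return sum(abs(t - p) for t, p in zip(target, prediction)) / len(target)


def mse(target, prediction):
    """Mean squared error: average of (target - prediction)^2."""
    return sum((t - p) ** 2 for t, p in zip(target, prediction)) / len(target)


# Made-up pixel values, for illustration only.
target = [0.0, 0.5, 1.0, 0.25]
prediction = [0.1, 0.4, 0.7, 0.25]

print(mae(target, prediction), mse(target, prediction))
```

The squared error weights the single large deviation (0.3) much more heavily than the absolute error does, which is the usual reason to prefer one over the other.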

We now create a CARE model with the chosen configuration:

model = CARE(config, 'my_model', basedir='models')

Training

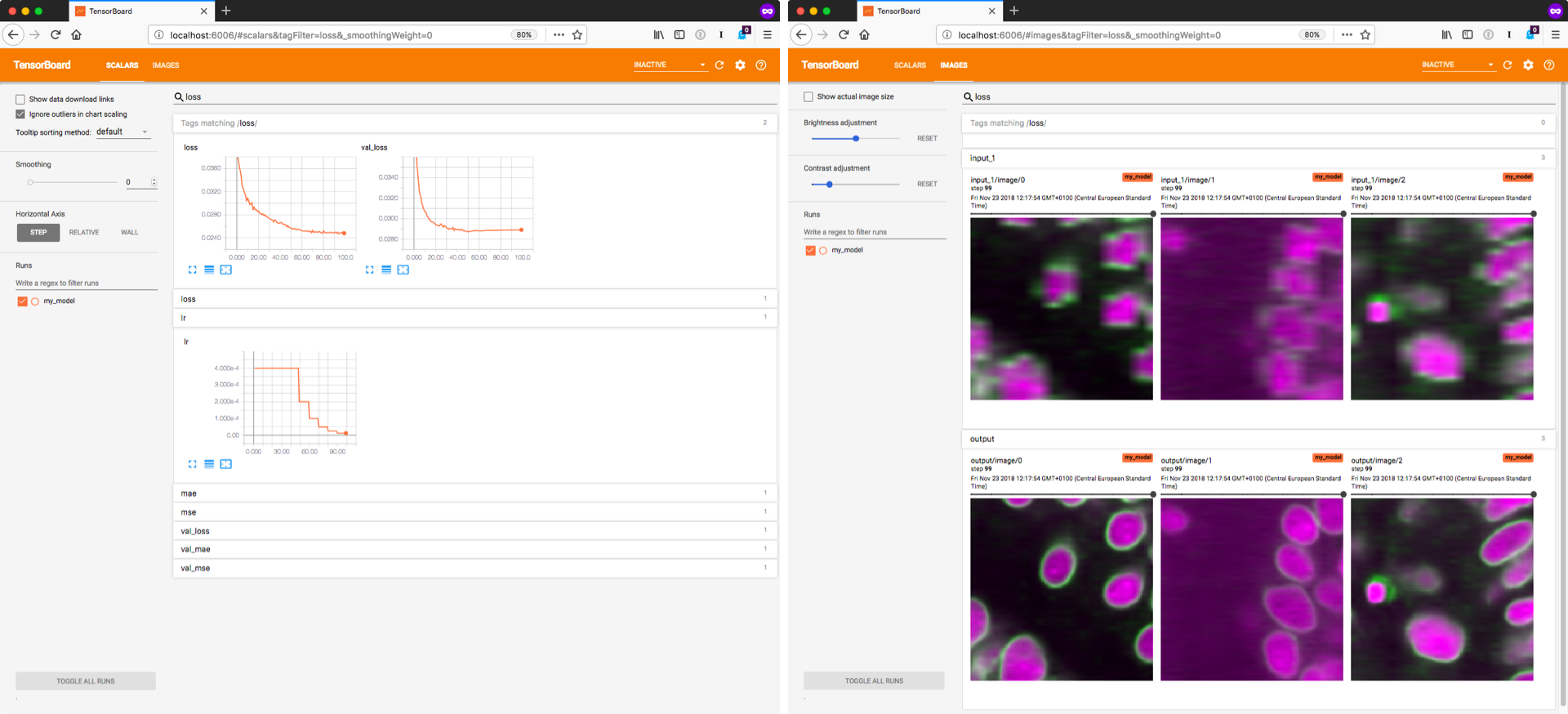

Training the model will likely take some time. We recommend monitoring the progress with TensorBoard (see the example screenshot), which allows you to inspect the losses during training. Furthermore, you can look at the predictions for some of the validation images, which can help you recognize problems early on.

You can start TensorBoard from the current working directory with the command tensorboard --logdir=. (the trailing dot refers to the current directory).

Then connect to http://localhost:6006/ with your browser.

history = model.train(X,Y, validation_data=(X_val,Y_val))

Epoch 1/100

WARNING:tensorflow:AutoGraph could not transform <function _mean_or_not.<locals>.<lambda> at 0x7fb20f489430> and will run it as-is. Cause: could not parse the source code of <function _mean_or_not.<locals>.<lambda> at 0x7fb20f489430>: found multiple definitions with identical signatures at the location. This error may be avoided by defining each lambda on a single line and with unique argument names. The matching definitions were: Match 0: (lambda x: K.mean(x, axis=(- 1))) Match 1: (lambda x: x) To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

WARNING: AutoGraph could not transform <function _mean_or_not.<locals>.<lambda> at 0x7fb20f489430> and will run it as-is. Cause: could not parse the source code of <function _mean_or_not.<locals>.<lambda> at 0x7fb20f489430>: found multiple definitions with identical signatures at the location. This error may be avoided by defining each lambda on a single line and with unique argument names. The matching definitions were: Match 0: (lambda x: K.mean(x, axis=(- 1))) Match 1: (lambda x: x) To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

WARNING:tensorflow:AutoGraph could not transform <function _mean_or_not.<locals>.<lambda> at 0x7fb20f489670> and will run it as-is. Cause: could not parse the source code of <function _mean_or_not.<locals>.<lambda> at 0x7fb20f489670>: found multiple definitions with identical signatures at the location. This error may be avoided by defining each lambda on a single line and with unique argument names. The matching definitions were: Match 0: (lambda x: K.mean(x, axis=(- 1))) Match 1: (lambda x: x) To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

WARNING: AutoGraph could not transform <function _mean_or_not.<locals>.<lambda> at 0x7fb20f489670> and will run it as-is. Cause: could not parse the source code of <function _mean_or_not.<locals>.<lambda> at 0x7fb20f489670>: found multiple definitions with identical signatures at the location. This error may be avoided by defining each lambda on a single line and with unique argument names. The matching definitions were: Match 0: (lambda x: K.mean(x, axis=(- 1))) Match 1: (lambda x: x) To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

WARNING:tensorflow:AutoGraph could not transform <function _mean_or_not.<locals>.<lambda> at 0x7fb20f489790> and will run it as-is. Cause: could not parse the source code of <function _mean_or_not.<locals>.<lambda> at 0x7fb20f489790>: found multiple definitions with identical signatures at the location. This error may be avoided by defining each lambda on a single line and with unique argument names. The matching definitions were: Match 0: (lambda x: K.mean(x, axis=(- 1))) Match 1: (lambda x: x) To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

WARNING: AutoGraph could not transform <function _mean_or_not.<locals>.<lambda> at 0x7fb20f489790> and will run it as-is. Cause: could not parse the source code of <function _mean_or_not.<locals>.<lambda> at 0x7fb20f489790>: found multiple definitions with identical signatures at the location. This error may be avoided by defining each lambda on a single line and with unique argument names. The matching definitions were: Match 0: (lambda x: K.mean(x, axis=(- 1))) Match 1: (lambda x: x) To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

6/10 [=================>............] - ETA: 0s - loss: 0.1511 - mse: 0.0562 - mae: 0.1511

WARNING:tensorflow:Callback method `on_train_batch_end` is slow compared to the batch time (batch time: 0.0762s vs `on_train_batch_end` time: 0.1400s). Check your callbacks.

10/10 [==============================] - 8s 505ms/step - loss: 0.1439 - mse: 0.0529 - mae: 0.1439 - val_loss: 0.1392 - val_mse: 0.0521 - val_mae: 0.1392 - lr: 4.0000e-04 Epoch 2/100 10/10 [==============================] - 3s 313ms/step - loss: 0.1239 - mse: 0.0414 - mae: 0.1239 - val_loss: 0.1068 - val_mse: 0.0345 - val_mae: 0.1068 - lr: 4.0000e-04 Epoch 3/100 10/10 [==============================] - 3s 313ms/step - loss: 0.0979 - mse: 0.0263 - mae: 0.0979 - val_loss: 0.0919 - val_mse: 0.0231 - val_mae: 0.0919 - lr: 4.0000e-04 Epoch 4/100 10/10 [==============================] - 3s 313ms/step - loss: 0.0886 - mse: 0.0214 - mae: 0.0886 - val_loss: 0.0933 - val_mse: 0.0251 - val_mae: 0.0933 - lr: 4.0000e-04 Epoch 5/100 10/10 [==============================] - 3s 313ms/step - loss: 0.0815 - mse: 0.0191 - mae: 0.0815 - val_loss: 0.0868 - val_mse: 0.0234 - val_mae: 0.0868 - lr: 4.0000e-04 Epoch 6/100 10/10 [==============================] - 3s 314ms/step - loss: 0.0862 - mse: 0.0203 - mae: 0.0862 - val_loss: 0.0972 - val_mse: 0.0253 - val_mae: 0.0972 - lr: 4.0000e-04 Epoch 7/100 10/10 [==============================] - 3s 314ms/step - loss: 0.0964 - mse: 0.0257 - mae: 0.0964 - val_loss: 0.0870 - val_mse: 0.0192 - val_mae: 0.0870 - lr: 4.0000e-04 Epoch 8/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0833 - mse: 0.0184 - mae: 0.0833 - val_loss: 0.0788 - val_mse: 0.0191 - val_mae: 0.0788 - lr: 4.0000e-04 Epoch 9/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0769 - mse: 0.0174 - mae: 0.0769 - val_loss: 0.0758 - val_mse: 0.0157 - val_mae: 0.0758 - lr: 4.0000e-04 Epoch 10/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0669 - mse: 0.0131 - mae: 0.0669 - val_loss: 0.0725 - val_mse: 0.0158 - val_mae: 0.0725 - lr: 4.0000e-04 Epoch 11/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0693 - mse: 0.0144 - mae: 0.0693 - val_loss: 0.0694 - val_mse: 0.0144 - val_mae: 0.0694 - lr: 4.0000e-04 Epoch 
12/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0647 - mse: 0.0127 - mae: 0.0647 - val_loss: 0.0686 - val_mse: 0.0140 - val_mae: 0.0686 - lr: 4.0000e-04 Epoch 13/100 10/10 [==============================] - 3s 314ms/step - loss: 0.0677 - mse: 0.0138 - mae: 0.0677 - val_loss: 0.0689 - val_mse: 0.0155 - val_mae: 0.0689 - lr: 4.0000e-04 Epoch 14/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0647 - mse: 0.0130 - mae: 0.0647 - val_loss: 0.0647 - val_mse: 0.0128 - val_mae: 0.0647 - lr: 4.0000e-04 Epoch 15/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0640 - mse: 0.0127 - mae: 0.0640 - val_loss: 0.0663 - val_mse: 0.0130 - val_mae: 0.0663 - lr: 4.0000e-04 Epoch 16/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0656 - mse: 0.0132 - mae: 0.0656 - val_loss: 0.0649 - val_mse: 0.0123 - val_mae: 0.0649 - lr: 4.0000e-04 Epoch 17/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0606 - mse: 0.0119 - mae: 0.0606 - val_loss: 0.0679 - val_mse: 0.0126 - val_mae: 0.0679 - lr: 4.0000e-04 Epoch 18/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0628 - mse: 0.0122 - mae: 0.0628 - val_loss: 0.0656 - val_mse: 0.0150 - val_mae: 0.0656 - lr: 4.0000e-04 Epoch 19/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0607 - mse: 0.0112 - mae: 0.0607 - val_loss: 0.0609 - val_mse: 0.0124 - val_mae: 0.0609 - lr: 4.0000e-04 Epoch 20/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0598 - mse: 0.0111 - mae: 0.0598 - val_loss: 0.0582 - val_mse: 0.0108 - val_mae: 0.0582 - lr: 4.0000e-04 Epoch 21/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0534 - mse: 0.0097 - mae: 0.0534 - val_loss: 0.0569 - val_mse: 0.0105 - val_mae: 0.0569 - lr: 4.0000e-04 Epoch 22/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0547 - mse: 0.0097 - mae: 0.0547 - val_loss: 0.0555 - val_mse: 0.0101 - val_mae: 0.0555 - lr: 
4.0000e-04 Epoch 23/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0587 - mse: 0.0108 - mae: 0.0587 - val_loss: 0.0590 - val_mse: 0.0100 - val_mae: 0.0590 - lr: 4.0000e-04 Epoch 24/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0548 - mse: 0.0097 - mae: 0.0548 - val_loss: 0.0567 - val_mse: 0.0101 - val_mae: 0.0567 - lr: 4.0000e-04 Epoch 25/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0546 - mse: 0.0098 - mae: 0.0546 - val_loss: 0.0542 - val_mse: 0.0098 - val_mae: 0.0542 - lr: 4.0000e-04 Epoch 26/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0496 - mse: 0.0084 - mae: 0.0496 - val_loss: 0.0542 - val_mse: 0.0096 - val_mae: 0.0542 - lr: 4.0000e-04 Epoch 27/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0533 - mse: 0.0094 - mae: 0.0533 - val_loss: 0.0542 - val_mse: 0.0099 - val_mae: 0.0542 - lr: 4.0000e-04 Epoch 28/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0526 - mse: 0.0089 - mae: 0.0526 - val_loss: 0.0533 - val_mse: 0.0091 - val_mae: 0.0533 - lr: 4.0000e-04 Epoch 29/100 10/10 [==============================] - 3s 318ms/step - loss: 0.0517 - mse: 0.0089 - mae: 0.0517 - val_loss: 0.0524 - val_mse: 0.0091 - val_mae: 0.0524 - lr: 4.0000e-04 Epoch 30/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0545 - mse: 0.0097 - mae: 0.0545 - val_loss: 0.0535 - val_mse: 0.0088 - val_mae: 0.0535 - lr: 4.0000e-04 Epoch 31/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0494 - mse: 0.0079 - mae: 0.0494 - val_loss: 0.0525 - val_mse: 0.0089 - val_mae: 0.0525 - lr: 4.0000e-04 Epoch 32/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0486 - mse: 0.0081 - mae: 0.0486 - val_loss: 0.0532 - val_mse: 0.0085 - val_mae: 0.0532 - lr: 4.0000e-04 Epoch 33/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0478 - mse: 0.0077 - mae: 0.0478 - val_loss: 0.0520 - val_mse: 0.0086 - val_mae: 
0.0520 - lr: 4.0000e-04 Epoch 34/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0502 - mse: 0.0083 - mae: 0.0502 - val_loss: 0.0511 - val_mse: 0.0088 - val_mae: 0.0511 - lr: 4.0000e-04 Epoch 35/100 10/10 [==============================] - 3s 318ms/step - loss: 0.0496 - mse: 0.0080 - mae: 0.0496 - val_loss: 0.0508 - val_mse: 0.0089 - val_mae: 0.0508 - lr: 4.0000e-04 Epoch 36/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0492 - mse: 0.0081 - mae: 0.0492 - val_loss: 0.0506 - val_mse: 0.0082 - val_mae: 0.0506 - lr: 4.0000e-04 Epoch 37/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0496 - mse: 0.0080 - mae: 0.0496 - val_loss: 0.0522 - val_mse: 0.0097 - val_mae: 0.0522 - lr: 4.0000e-04 Epoch 38/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0495 - mse: 0.0082 - mae: 0.0495 - val_loss: 0.0501 - val_mse: 0.0085 - val_mae: 0.0501 - lr: 4.0000e-04 Epoch 39/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0456 - mse: 0.0071 - mae: 0.0456 - val_loss: 0.0496 - val_mse: 0.0081 - val_mae: 0.0496 - lr: 4.0000e-04 Epoch 40/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0454 - mse: 0.0069 - mae: 0.0454 - val_loss: 0.0489 - val_mse: 0.0080 - val_mae: 0.0489 - lr: 4.0000e-04 Epoch 41/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0494 - mse: 0.0079 - mae: 0.0494 - val_loss: 0.0489 - val_mse: 0.0080 - val_mae: 0.0489 - lr: 4.0000e-04 Epoch 42/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0431 - mse: 0.0066 - mae: 0.0431 - val_loss: 0.0495 - val_mse: 0.0080 - val_mae: 0.0495 - lr: 4.0000e-04 Epoch 43/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0460 - mse: 0.0070 - mae: 0.0460 - val_loss: 0.0497 - val_mse: 0.0086 - val_mae: 0.0497 - lr: 4.0000e-04 Epoch 44/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0508 - mse: 0.0083 - mae: 0.0508 - val_loss: 0.0557 - val_mse: 
0.0086 - val_mae: 0.0557 - lr: 4.0000e-04 Epoch 45/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0479 - mse: 0.0076 - mae: 0.0479 - val_loss: 0.0499 - val_mse: 0.0081 - val_mae: 0.0499 - lr: 4.0000e-04 Epoch 46/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0502 - mse: 0.0081 - mae: 0.0502 - val_loss: 0.0483 - val_mse: 0.0077 - val_mae: 0.0483 - lr: 4.0000e-04 Epoch 47/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0460 - mse: 0.0071 - mae: 0.0460 - val_loss: 0.0483 - val_mse: 0.0079 - val_mae: 0.0483 - lr: 4.0000e-04 Epoch 48/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0476 - mse: 0.0075 - mae: 0.0476 - val_loss: 0.0501 - val_mse: 0.0082 - val_mae: 0.0501 - lr: 4.0000e-04 Epoch 49/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0469 - mse: 0.0072 - mae: 0.0469 - val_loss: 0.0477 - val_mse: 0.0077 - val_mae: 0.0477 - lr: 4.0000e-04 Epoch 50/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0465 - mse: 0.0073 - mae: 0.0465 - val_loss: 0.0473 - val_mse: 0.0075 - val_mae: 0.0473 - lr: 4.0000e-04 Epoch 51/100 10/10 [==============================] - 3s 314ms/step - loss: 0.0413 - mse: 0.0061 - mae: 0.0413 - val_loss: 0.0481 - val_mse: 0.0081 - val_mae: 0.0481 - lr: 4.0000e-04 Epoch 52/100 10/10 [==============================] - 3s 314ms/step - loss: 0.0477 - mse: 0.0074 - mae: 0.0477 - val_loss: 0.0534 - val_mse: 0.0096 - val_mae: 0.0534 - lr: 4.0000e-04 Epoch 53/100 10/10 [==============================] - 3s 314ms/step - loss: 0.0482 - mse: 0.0075 - mae: 0.0482 - val_loss: 0.0480 - val_mse: 0.0076 - val_mae: 0.0480 - lr: 4.0000e-04 Epoch 54/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0469 - mse: 0.0071 - mae: 0.0469 - val_loss: 0.0478 - val_mse: 0.0074 - val_mae: 0.0478 - lr: 4.0000e-04 Epoch 55/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0494 - mse: 0.0079 - mae: 0.0494 - val_loss: 
0.0473 - val_mse: 0.0075 - val_mae: 0.0473 - lr: 4.0000e-04 Epoch 56/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0443 - mse: 0.0067 - mae: 0.0443 - val_loss: 0.0469 - val_mse: 0.0073 - val_mae: 0.0469 - lr: 4.0000e-04 Epoch 57/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0447 - mse: 0.0067 - mae: 0.0447 - val_loss: 0.0471 - val_mse: 0.0075 - val_mae: 0.0471 - lr: 4.0000e-04 Epoch 58/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0460 - mse: 0.0071 - mae: 0.0460 - val_loss: 0.0463 - val_mse: 0.0071 - val_mae: 0.0463 - lr: 4.0000e-04 Epoch 59/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0426 - mse: 0.0061 - mae: 0.0426 - val_loss: 0.0469 - val_mse: 0.0072 - val_mae: 0.0469 - lr: 4.0000e-04 Epoch 60/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0443 - mse: 0.0066 - mae: 0.0443 - val_loss: 0.0473 - val_mse: 0.0076 - val_mae: 0.0473 - lr: 4.0000e-04 Epoch 61/100 10/10 [==============================] - 3s 318ms/step - loss: 0.0435 - mse: 0.0063 - mae: 0.0435 - val_loss: 0.0461 - val_mse: 0.0070 - val_mae: 0.0461 - lr: 4.0000e-04 Epoch 62/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0459 - mse: 0.0068 - mae: 0.0459 - val_loss: 0.0504 - val_mse: 0.0076 - val_mae: 0.0504 - lr: 4.0000e-04 Epoch 63/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0487 - mse: 0.0078 - mae: 0.0487 - val_loss: 0.0475 - val_mse: 0.0074 - val_mae: 0.0475 - lr: 4.0000e-04 Epoch 64/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0441 - mse: 0.0065 - mae: 0.0441 - val_loss: 0.0466 - val_mse: 0.0073 - val_mae: 0.0466 - lr: 4.0000e-04 Epoch 65/100 10/10 [==============================] - 3s 318ms/step - loss: 0.0419 - mse: 0.0060 - mae: 0.0419 - val_loss: 0.0459 - val_mse: 0.0070 - val_mae: 0.0459 - lr: 4.0000e-04 Epoch 66/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0452 - mse: 0.0067 - mae: 
0.0452 - val_loss: 0.0470 - val_mse: 0.0069 - val_mae: 0.0470 - lr: 4.0000e-04 Epoch 67/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0438 - mse: 0.0063 - mae: 0.0438 - val_loss: 0.0473 - val_mse: 0.0073 - val_mae: 0.0473 - lr: 4.0000e-04 Epoch 68/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0449 - mse: 0.0068 - mae: 0.0449 - val_loss: 0.0457 - val_mse: 0.0070 - val_mae: 0.0457 - lr: 4.0000e-04 Epoch 69/100 10/10 [==============================] - 3s 318ms/step - loss: 0.0446 - mse: 0.0065 - mae: 0.0446 - val_loss: 0.0452 - val_mse: 0.0069 - val_mae: 0.0452 - lr: 4.0000e-04 Epoch 70/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0433 - mse: 0.0063 - mae: 0.0433 - val_loss: 0.0451 - val_mse: 0.0067 - val_mae: 0.0451 - lr: 4.0000e-04 Epoch 71/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0445 - mse: 0.0066 - mae: 0.0445 - val_loss: 0.0480 - val_mse: 0.0074 - val_mae: 0.0480 - lr: 4.0000e-04 Epoch 72/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0451 - mse: 0.0065 - mae: 0.0451 - val_loss: 0.0469 - val_mse: 0.0078 - val_mae: 0.0469 - lr: 4.0000e-04 Epoch 73/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0451 - mse: 0.0066 - mae: 0.0451 - val_loss: 0.0453 - val_mse: 0.0068 - val_mae: 0.0453 - lr: 4.0000e-04 Epoch 74/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0461 - mse: 0.0068 - mae: 0.0461 - val_loss: 0.0478 - val_mse: 0.0073 - val_mae: 0.0478 - lr: 4.0000e-04 Epoch 75/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0420 - mse: 0.0059 - mae: 0.0420 - val_loss: 0.0459 - val_mse: 0.0068 - val_mae: 0.0459 - lr: 4.0000e-04 Epoch 76/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0397 - mse: 0.0054 - mae: 0.0397 - val_loss: 0.0451 - val_mse: 0.0070 - val_mae: 0.0451 - lr: 4.0000e-04 Epoch 77/100 10/10 [==============================] - 3s 318ms/step - loss: 0.0420 - mse: 
0.0059 - mae: 0.0420 - val_loss: 0.0448 - val_mse: 0.0070 - val_mae: 0.0448 - lr: 4.0000e-04 Epoch 78/100 10/10 [==============================] - 3s 318ms/step - loss: 0.0411 - mse: 0.0055 - mae: 0.0411 - val_loss: 0.0443 - val_mse: 0.0066 - val_mae: 0.0443 - lr: 4.0000e-04 Epoch 79/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0440 - mse: 0.0064 - mae: 0.0440 - val_loss: 0.0440 - val_mse: 0.0066 - val_mae: 0.0440 - lr: 4.0000e-04 Epoch 80/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0411 - mse: 0.0056 - mae: 0.0411 - val_loss: 0.0445 - val_mse: 0.0068 - val_mae: 0.0445 - lr: 4.0000e-04 Epoch 81/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0420 - mse: 0.0059 - mae: 0.0420 - val_loss: 0.0459 - val_mse: 0.0069 - val_mae: 0.0459 - lr: 4.0000e-04 Epoch 82/100 10/10 [==============================] - 3s 318ms/step - loss: 0.0401 - mse: 0.0054 - mae: 0.0401 - val_loss: 0.0437 - val_mse: 0.0065 - val_mae: 0.0437 - lr: 4.0000e-04 Epoch 83/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0415 - mse: 0.0056 - mae: 0.0415 - val_loss: 0.0443 - val_mse: 0.0062 - val_mae: 0.0443 - lr: 4.0000e-04 Epoch 84/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0439 - mse: 0.0061 - mae: 0.0439 - val_loss: 0.0435 - val_mse: 0.0064 - val_mae: 0.0435 - lr: 4.0000e-04 Epoch 85/100 10/10 [==============================] - 3s 318ms/step - loss: 0.0404 - mse: 0.0054 - mae: 0.0404 - val_loss: 0.0432 - val_mse: 0.0063 - val_mae: 0.0432 - lr: 4.0000e-04 Epoch 86/100 10/10 [==============================] - 3s 318ms/step - loss: 0.0385 - mse: 0.0051 - mae: 0.0385 - val_loss: 0.0431 - val_mse: 0.0062 - val_mae: 0.0431 - lr: 4.0000e-04 Epoch 87/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0380 - mse: 0.0049 - mae: 0.0380 - val_loss: 0.0431 - val_mse: 0.0062 - val_mae: 0.0431 - lr: 4.0000e-04 Epoch 88/100 10/10 [==============================] - 3s 316ms/step - loss: 
0.0390 - mse: 0.0050 - mae: 0.0390 - val_loss: 0.0435 - val_mse: 0.0062 - val_mae: 0.0435 - lr: 4.0000e-04 Epoch 89/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0400 - mse: 0.0053 - mae: 0.0400 - val_loss: 0.0433 - val_mse: 0.0063 - val_mae: 0.0433 - lr: 4.0000e-04 Epoch 90/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0403 - mse: 0.0053 - mae: 0.0403 - val_loss: 0.0434 - val_mse: 0.0063 - val_mae: 0.0434 - lr: 4.0000e-04 Epoch 91/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0390 - mse: 0.0052 - mae: 0.0390 - val_loss: 0.0430 - val_mse: 0.0064 - val_mae: 0.0430 - lr: 4.0000e-04 Epoch 92/100 10/10 [==============================] - 3s 317ms/step - loss: 0.0427 - mse: 0.0058 - mae: 0.0427 - val_loss: 0.0452 - val_mse: 0.0064 - val_mae: 0.0452 - lr: 4.0000e-04 Epoch 93/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0417 - mse: 0.0055 - mae: 0.0417 - val_loss: 0.0441 - val_mse: 0.0061 - val_mae: 0.0441 - lr: 4.0000e-04 Epoch 94/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0413 - mse: 0.0054 - mae: 0.0413 - val_loss: 0.0472 - val_mse: 0.0072 - val_mae: 0.0472 - lr: 4.0000e-04 Epoch 95/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0407 - mse: 0.0055 - mae: 0.0407 - val_loss: 0.0434 - val_mse: 0.0064 - val_mae: 0.0434 - lr: 4.0000e-04 Epoch 96/100 10/10 [==============================] - 3s 318ms/step - loss: 0.0400 - mse: 0.0051 - mae: 0.0400 - val_loss: 0.0426 - val_mse: 0.0060 - val_mae: 0.0426 - lr: 4.0000e-04 Epoch 97/100 10/10 [==============================] - 3s 315ms/step - loss: 0.0382 - mse: 0.0048 - mae: 0.0382 - val_loss: 0.0436 - val_mse: 0.0061 - val_mae: 0.0436 - lr: 4.0000e-04 Epoch 98/100 10/10 [==============================] - 3s 316ms/step - loss: 0.0404 - mse: 0.0052 - mae: 0.0404 - val_loss: 0.0432 - val_mse: 0.0061 - val_mae: 0.0432 - lr: 4.0000e-04 Epoch 99/100 10/10 [==============================] - 3s 
317ms/step - loss: 0.0400 - mse: 0.0051 - mae: 0.0400 - val_loss: 0.0425 - val_mse: 0.0059 - val_mae: 0.0425 - lr: 4.0000e-04 Epoch 100/100 10/10 [==============================] - 3s 318ms/step - loss: 0.0403 - mse: 0.0051 - mae: 0.0403 - val_loss: 0.0421 - val_mse: 0.0060 - val_mae: 0.0421 - lr: 4.0000e-04 Loading network weights from 'weights_best.h5'.
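The lr column stays at 4.0000e-04 for all 100 epochs in this log, even though the configuration contains train_reduce_lr={'factor': 0.5, 'patience': 10, 'min_delta': 0}. Assuming these values drive a plateau-based schedule on the validation loss (in the spirit of Keras' ReduceLROnPlateau callback), the sketch below shows why the rate would only drop after 10 consecutive epochs without improvement. It is a simplified simulation that ignores cooldown and a minimum learning rate; `simulate_reduce_lr` is a hypothetical helper.

```python
def simulate_reduce_lr(val_losses, lr, factor=0.5, patience=10, min_delta=0.0):
    """Return the learning rate in effect after each epoch (simplified plateau rule)."""
    best = float('inf')
    wait = 0
    schedule = []
    for loss in val_losses:
        if loss < best - min_delta:
            # Improvement: remember the new best value and reset the counter.
            best = loss
            wait = 0
        else:
            # No improvement: count the epoch and reduce once patience is used up.
            wait += 1
            if wait >= patience:
                lr *= factor
                wait = 0
        schedule.append(lr)
    return schedule


# Steadily improving validation loss: the rate never changes.
improving = [0.14 - 0.001 * i for i in range(20)]
improving_schedule = simulate_reduce_lr(improving, lr=0.0004)

# One good epoch followed by 10 epochs without improvement: the rate is halved once.
stalled = [0.05] + [0.06] * 10
stalled_schedule = simulate_reduce_lr(stalled, lr=0.0004)

print(improving_schedule[-1], stalled_schedule[-2], stalled_schedule[-1])
```

With only 10 update steps per epoch, the validation loss in this run kept reaching new minima often enough that, under this reading, the reduction would never be triggered.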

Plot final training history (available in TensorBoard during training):

print(sorted(list(history.history.keys())))

plt.figure(figsize=(16,5))

plot_history(history,['loss','val_loss'],['mse','val_mse','mae','val_mae']);

['loss', 'lr', 'mae', 'mse', 'val_loss', 'val_mae', 'val_mse']
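Besides plotting, the per-epoch lists in history.history can be queried directly, for example to find the epoch with the lowest validation loss. The sketch below uses a plain dict filled with the first five epochs copied from the training log above, so it runs without a trained model; `best_epoch` is a hypothetical helper, and that 'weights_best.h5' corresponds to the lowest val_loss is an assumption based on its name.

```python
def best_epoch(history_dict, key='val_loss'):
    """Return (1-based epoch number, value) of the smallest entry under `key`."""
    values = history_dict[key]
    index = min(range(len(values)), key=values.__getitem__)
    return index + 1, values[index]


# First five epochs, copied from the training log.
history_dict = {
    'loss':     [0.1439, 0.1239, 0.0979, 0.0886, 0.0815],
    'val_loss': [0.1392, 0.1068, 0.0919, 0.0933, 0.0868],
}

epoch, value = best_epoch(history_dict)
print(epoch, value)
```

With the real training run, the same call would be best_epoch(history.history).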

plt.figure(figsize=(12,7))

_P = model.keras_model.predict(X_val[:5])

if config.probabilistic:

    _P = _P[...,:(_P.shape[-1]//2)]

plot_some(X_val[:5],Y_val[:5],_P,pmax=99.5)

plt.suptitle('5 example validation patches\n'

             'top row: input (source), '

             'middle row: target (ground truth), '

             'bottom row: predicted from source');
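The slicing in the probabilistic branch keeps only the first half of the output channels. The sketch below illustrates this with a dummy array, assuming that a probabilistic model outputs 2 * n_channel_out channels with the predicted mean first and the predicted scale second; the array contents are stand-ins, not real predictions.

```python
import numpy as np

n_channel_out = 1

# Hypothetical probabilistic output for 5 patches with axes ZYXC:
# the channel axis holds 2 * n_channel_out entries (assumed order: mean, then scale).
pred = np.zeros((5, 16, 64, 64, 2 * n_channel_out), dtype=np.float32)
pred[..., :n_channel_out] = 1.0   # stand-in for the predicted mean
pred[..., n_channel_out:] = 0.1   # stand-in for the predicted scale

# Same slicing as in the notebook cell: keep the first half of the channels.
mean_only = pred[..., :(pred.shape[-1] // 2)]
print(mean_only.shape)
```

After slicing, the array has the same shape as the target patches and can be passed to plot_some alongside them.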

Export model to be used with CSBDeep Fiji plugins and KNIME workflows

See https://github.com/CSBDeep/CSBDeep_website/wiki/Your-Model-in-Fiji for details.

model.export_TF()

WARNING:tensorflow:From /home/uwe/sw/miniconda3/envs/ws/lib/python3.8/site-packages/tensorflow/python/saved_model/signature_def_utils_impl.py:203: build_tensor_info (from tensorflow.python.saved_model.utils_impl) is deprecated and will be removed in a future version.

Instructions for updating:

This function will only be available through the v1 compatibility library as tf.compat.v1.saved_model.utils.build_tensor_info or tf.compat.v1.saved_model.build_tensor_info.

INFO:tensorflow:No assets to save.

INFO:tensorflow:No assets to write.

INFO:tensorflow:SavedModel written to: /tmp/tmpcizgxjb5/model/saved_model.pb

Model exported in TensorFlow's SavedModel format:

/home/uwe/research/csbdeep/examples/examples/denoising3D/models/my_model/TF_SavedModel.zip

***IMPORTANT NOTE*** You are using 'tensorflow' 2.x, hence it is likely that the exported model *will not work* in associated ImageJ/Fiji plugins (e.g. CSBDeep and StarDist). If you indeed have problems loading the exported model in Fiji, the current workaround is to load the trained model in a Python environment with installed 'tensorflow' 1.x and then export it again. If you need help with this, please read: https://gist.github.com/uschmidt83/4b747862fe307044c722d6d1009f6183