10 Minute of Pytorch¶

Pytorch Introduction¶

PyTorch is an open source machine learning library for Python, based on [Torch](https://en.wikipedia.org/wiki/Torch_(machine_learning),used for applications such as natural language processing.It is primarily developed by Facebook's artificial-intelligence research group, and Uber's "Pyro" software for probabilistic programming is built on it.

PyTorch provides two high-level features:¶

- Tensor computation (like NumPy) with strong GPU acceleration

- Deep Neural Networks built on a tape-based autodiff system

Environment Configuration¶

# This Python 3 environment comes with many helpful analytics libraries installed

# It is defined by the kaggle/python docker image: https://github.com/kaggle/docker-python

# For example, here's several helpful packages to load in

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

import torch

# Input data files are available in the "../input/" directory.

# For example, running this (by clicking run or pressing Shift+Enter) will list the files in the input directory

import seaborn as sns

import matplotlib.pyplot as plt

plt.style.use("fivethirtyeight")

%matplotlib inline

import os

# print(os.listdir("../input"))

# Any results you write to the current directory are saved as output.

Section-1 : Pytorch Basic Foundation¶

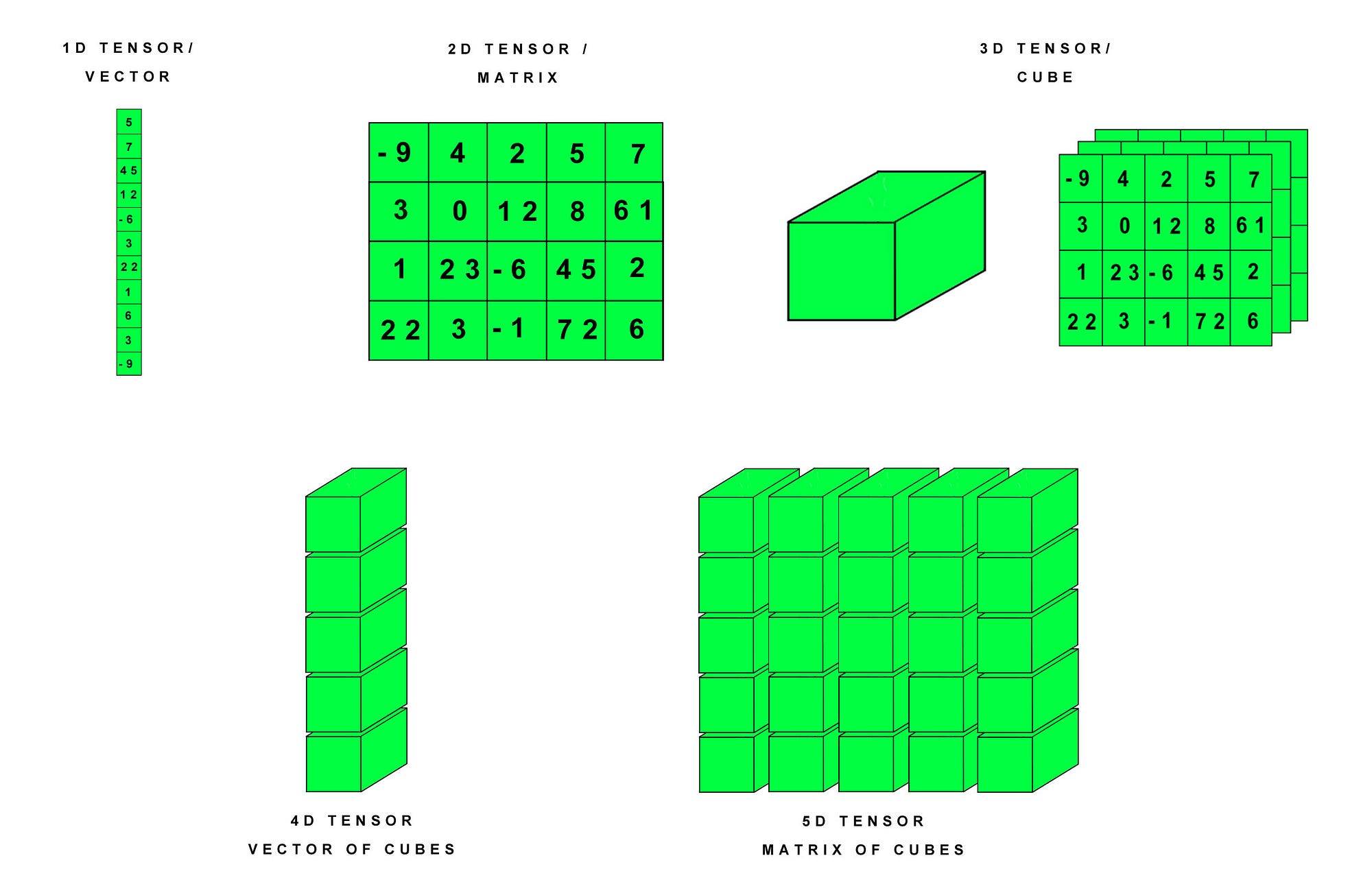

Tensor¶

- Tensors are matrix-like data structures which are essential components in deep learning libraries and efficient computation. Graphical Processing Units (GPUs) are especially effective at calculating operations between tensors, and this has spurred the surge in deep learning capability in recent times. In PyTorch, tensors can be declared simply in a number of ways:

Construct a 5x3 matrix uninitialized¶

x = torch.empty(5, 3)

print(x)

Convert to numpy¶

x.numpy()

Size of tensor¶

x.size()

From Numpy to tensor¶

a = np.array([[3,4],[4,3]])

b = torch.from_numpy(a)

print(b)

Tensor Operations¶

Random similar to numpy¶

x = torch.rand(5, 3)

print(x)

Construct a matrix filled zeros and of dtype long¶

x = torch.zeros(5, 3, dtype=torch.long)

print(x)

x = torch.ones(3, 3, dtype=torch.long)

print(x)

Construct a tensor directly from data¶

x = torch.tensor([2.5, 7])

print(x)

Create tensor based on existing tensor - This method has reuse property of input Tensor¶

x = x.new_ones(5, 3, dtype=torch.double) # new_* methods take in sizes

print(x)

print(x.size())

x = torch.randn_like(x, dtype=torch.float) # override dtype!

print(x)

print(x.size())

- Addition(

torch.add()) - Substraction(

torch.sub()) - Division(

torch.div()) - ** Multiplication(

torch.mul())**

x = torch.rand(5, 3)

y = torch.rand(5, 3)

print(x + y) # This is old method

print(torch.add(x, y)) # pytorch method

x = torch.rand(5, 3)

y = torch.rand(5, 3)

print(x - y) # This is old method

print(torch.sub(x, y)) # pytorch method

x = torch.rand(5, 3)

y = torch.rand(5, 3)

print(x / y) # This is old method

print(torch.div(x, y)) # pytorch method

x = torch.rand(5, 3)

y = torch.rand(5, 3)

print(x * y) # This is old method

print(torch.mul(x, y)) # pytorch method

- Add x to y

# adds x to y

y.add_(x)

print(y)

- Standard Numpy like Indexing

print(x[:, 1])

- Resizing

x = torch.randn(4, 4)

y = x.view(16)

z = x.view(-1, 8) # the size -1 is inferred from other dimensions

print(x.size(), y.size(), z.size())

- Note : Any operation that mutates a tensor in-place is post-fixed with an . For example:

x.copy_(y), x.t_(), will changex.

for more about tensor go on this link : https://pytorch.org/docs/stable/tensors.html¶

Variable¶

- The difference between pytorch and numpy is that it provides automatic derivation, which can automatically give you the gradient of the parameters you want. This operation is provided by another basic element, Variable.

- A Variable wraps a Tensor. It supports nearly all the API’s defined by a Tensor. Variable also provides a backward method to perform backpropagation. For example, to backpropagate a loss function to train model parameter x, we use a variable loss to store the value computed by a loss function. Then, we call loss.backward which computes the gradients ∂loss∂x for all trainable parameters. PyTorch will store the gradient results back in the corresponding variable x.

- Variable in torch is to build a computational graph, but this graph is dynamic compared with a static graph in Tensorflow or Theano.So torch does not have placeholder, torch can just pass variable to the computational graph.

import torch

from torch.autograd import Variable

- Build a tensor

- Build a variable, usually for compute gradients

tensor = torch.FloatTensor([[1,2],[3,4]])

variable = Variable(tensor, requires_grad=True)

print(tensor) # [torch.FloatTensor of size 2x2]

print(variable) # [torch.FloatTensor of size 2x2]

- Till now the tensor and variable seem the same.However, the variable is a part of the graph, it's a part of the auto-gradient.

t_out = torch.mean(tensor*tensor) # x^2

v_out = torch.mean(variable*variable) # x^2

print(t_out)

print(v_out) # 7.5

- Backpropagation from v_out

v_out = 1 / 4 * sum(variable * variable)- the gradients w.r.t the variable,

d(v_out)/d(variable) = 1/4*2*variable = variable/2

v_out.backward()

print(variable.grad)

- This is data in variable format

print(variable)

- This is data in tensor format

print(variable.data) #

- This is in numpy format

print(variable.data.numpy()) # numpy format

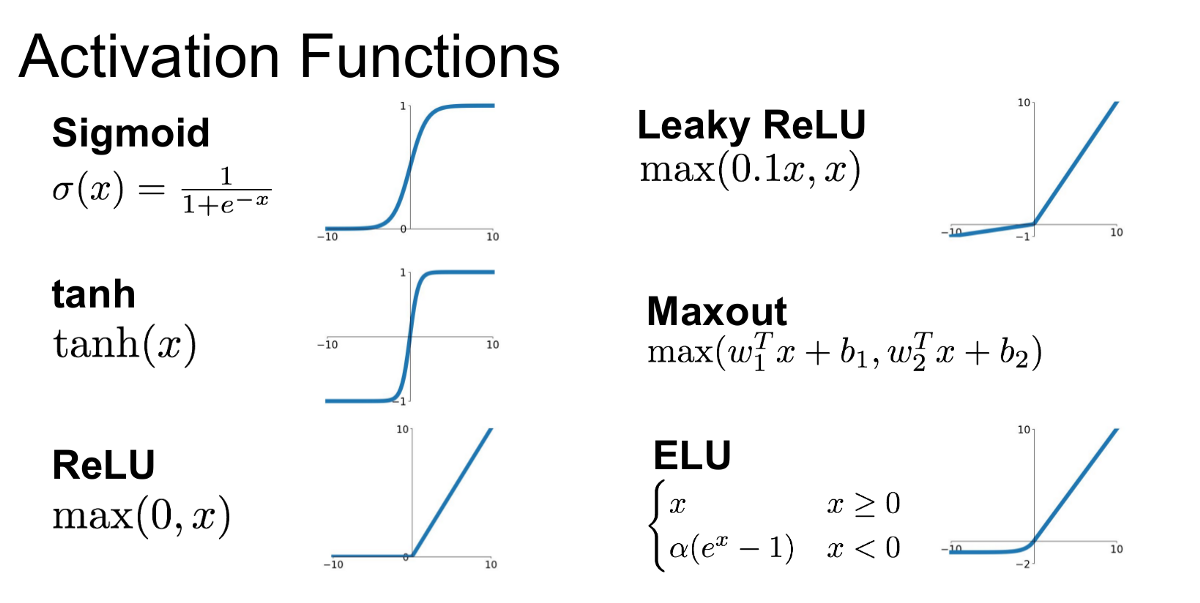

Activation Function¶

- Activation functions is used for a Artificial Neural Network to learn and make sense of something really complicated and Non-linear complex functional mappings between the inputs and response variable. They introduce non-linear properties to our Network.

- Their main purpose is to convert a *input signal of a node in a A-NN to an output signal. That output signal now is used as a input in the next layer in the stack.

import torch

import torch.nn.functional as F

from torch.autograd import Variable

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings(action='ignore')

Generate Fake Data¶

x = torch.linspace(-5, 5, 200) # x data (tensor), shape=(200, 1)

x = Variable(x)

x_np = x.data.numpy() # numpy array for plotting

Popular Activation Function¶

y_relu = F.relu(x).data.numpy()

y_sigmoid = F.sigmoid(x).data.numpy()

y_tanh = F.tanh(x).data.numpy()

y_softplus = F.softplus(x).data.numpy()

# y_softmax = F.softmax(x)

# softmax is a special kind of activation function, it is about probability

# and will make the sum as 1.

You can see that all activation function we have applied here.softmax is special kind of activation function which is used for multiclass classification problem.

Activation Function plot from data¶

%matplotlib inline

plt.figure(1, figsize=(20, 20))

plt.subplot(221)

plt.scatter(x_np, y_relu, c='red', label='relu')

plt.ylim((-1, 5))

plt.legend(loc='best')

plt.subplot(222)

plt.scatter(x_np, y_sigmoid, c='orange', label='sigmoid')

plt.ylim((-0.2, 1.2))

plt.legend(loc='best')

plt.subplot(223)

plt.scatter(x_np, y_tanh, c='green', label='tanh')

plt.ylim((-1.2, 1.2))

plt.legend(loc='best')

plt.subplot(224)

plt.scatter(x_np, y_softplus, c='blue', label='softplus')

plt.ylim((-0.2, 6))

plt.legend(loc='best')

plt.show()

Section : 2. Neural Network¶

Linear Regression¶

- It is worth underlining that this is an example focused on re-applying the techniques introduced. Indeed, PyTorch offers much more advanced methodologies to accomplish the same task, introduced in the following tutorials.

- In this example we will consider a simple one-dimensional synthetic problem (with some added noise):

Example :

X = np.random.rand(30, 1)*2.0

w = np.random.rand(2, 1)

y = X*w[0] + w[1] + np.random.randn(30, 1) * 0.05

In order to detect the line's coefficient, we define a linear model:

Define Weight and Bias¶

W = Variable(torch.rand(1, 1), requires_grad=True)

b = Variable(torch.rand(1), requires_grad=True)

def linear(x):

return torch.matmul(x, W) + b

- Using

torch.matmulis redundant in this case, but we want the function to be as general as possible to be re-used for more complex models.

Xt = Variable(torch.from_numpy(X)).float()

yt = Variable(torch.from_numpy(y)).float()

for epoch in range(2500):

# Compute predictions

y_pred = linear(Xt)

# Compute cost function

loss = torch.mean((y_pred - yt) ** 2)

# Run back-propagation

loss.backward()

# Update variables

W.data = W.data - 0.005*W.grad.data

b.data = b.data - 0.005*b.grad.data

# Reset gradients

W.grad.data.zero_()

b.grad.data.zero_()

- After Training we can see the graph like this

Relationship Fitting Regression Model¶

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships among variables. It includes many techniques for modeling and analyzing several variables, when the focus is on the relationship between a dependent variable and one or more independent variables.

import torch

from torch.autograd import Variable

import torch.nn.functional as F

import matplotlib.pyplot as plt

%matplotlib inline

torch.manual_seed(1) # reproducible

x = torch.unsqueeze(torch.linspace(-1, 1, 100), dim=1) # x data (tensor), shape=(100, 1)

y = x.pow(2) + 0.2*torch.rand(x.size()) # noisy y data (tensor), shape=(100, 1)

# torch can only train on Variable, so convert them to Variable

x, y = Variable(x), Variable(y)

plt.figure(figsize=(20,8))

plt.scatter(x.data.numpy(), y.data.numpy(), color = "orange")

plt.title('Regression Analysis')

plt.xlabel('Independent varible')

plt.ylabel('Dependent varible')

plt.show()

Model Fitting¶

class Net(torch.nn.Module):

def __init__(self, n_feature, n_hidden, n_output):

super(Net, self).__init__()

self.hidden = torch.nn.Linear(n_feature, n_hidden) # hidden layer

self.predict = torch.nn.Linear(n_hidden, n_output) # output layer

def forward(self, x):

x = F.relu(self.hidden(x)) # activation function for hidden layer

x = self.predict(x) # linear output

return x

net = Net(n_feature=1, n_hidden=10, n_output=1) # define the network

print(net) # net architecture

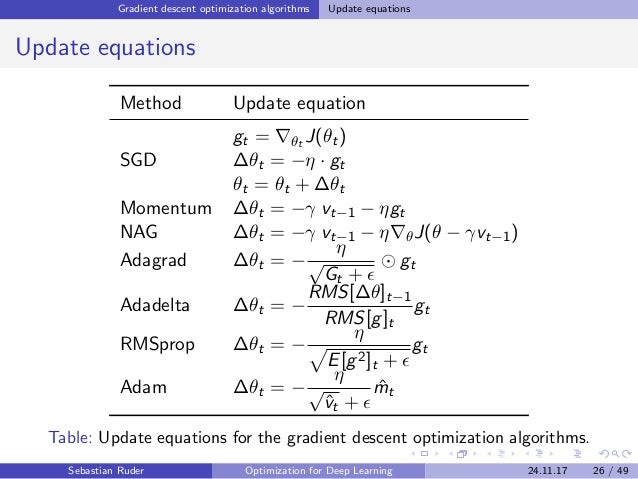

- Optimizer - It is the most popular Optimization algorithms used in optimizing a Neural Network. Now gradient descent is majorly used to do Weights updates in a Neural Network Model , i.e update and tune the Model's parameters in a direction so that we can minimize the Loss function.

optimizer = torch.optim.SGD(net.parameters(), lr=0.2)

loss_func = torch.nn.MSELoss() # this is for regression mean squared loss

plt.ion() # something about plotting

for t in range(100):

prediction = net(x) # input x and predict based on x

loss = loss_func(prediction, y) # must be (1. nn output, 2. target)

optimizer.zero_grad() # clear gradients for next train

loss.backward() # backpropagation, compute gradients

optimizer.step() # apply gradients

if t % 10 == 0:

# plot and show learning process

plt.figure(figsize=(20,6))

plt.cla()

plt.scatter(x.data.numpy(), y.data.numpy(), color = "orange")

plt.plot(x.data.numpy(), prediction.data.numpy(), 'g-', lw=3)

plt.text(0.3, 0, 'Loss=%.4f' % loss.data.numpy(), fontdict={'size': 25, 'color': 'red'})

plt.show()

plt.pause(0.1)

plt.ioff()

Distinguish type classification¶

- Classification is a data mining function that assigns items in a collection to target categories or classes. The goal of classification is to accurately predict the target class for each case in the data. For example, a classification model could be used to identify loan applicants as low, medium, or high credit risks.

import torch

from torch.autograd import Variable

import torch.nn.functional as F

import matplotlib.pyplot as plt

%matplotlib inline

torch.manual_seed(1) # reproducible

Generate Fake Data¶

n_data = torch.ones(100, 2)

x0 = torch.normal(2*n_data, 1) # class0 x data (tensor), shape=(100, 2)

y0 = torch.zeros(100) # class0 y data (tensor), shape=(100, 1)

x1 = torch.normal(-2*n_data, 1) # class1 x data (tensor), shape=(100, 2)

y1 = torch.ones(100) # class1 y data (tensor), shape=(100, 1)

x = torch.cat((x0, x1), 0).type(torch.FloatTensor) # shape (200, 2) FloatTensor = 32-bit floating

y = torch.cat((y0, y1), ).type(torch.LongTensor) # shape (200,) LongTensor = 64-bit integer

# torch can only train on Variable, so convert them to Variable

x, y = Variable(x), Variable(y)

plt.figure(figsize=(10,10))

plt.scatter(x.data.numpy()[:, 0], x.data.numpy()[:, 1], c=y.data.numpy(), s=100, lw=0, cmap='RdYlGn')

plt.show()

Model design¶

class Net(torch.nn.Module):

def __init__(self, n_feature, n_hidden, n_output):

super(Net, self).__init__()

self.hidden = torch.nn.Linear(n_feature, n_hidden) # hidden layer

self.out = torch.nn.Linear(n_hidden, n_output) # output layer

def forward(self, x):

x = F.relu(self.hidden(x)) # activation function for hidden layer

x = self.out(x)

return x

Define Network¶

net = Net(n_feature=2, n_hidden=10, n_output=2) # define the network

print(net) # net architecture

# Loss and Optimizer

# Softmax is internally computed.

# Set parameters to be updated.

optimizer = torch.optim.SGD(net.parameters(), lr=0.02)

loss_func = torch.nn.CrossEntropyLoss() # the target label is NOT an one-hotted

Plot the Model¶

plt.ion() # something about plotting

for t in range(100):

out = net(x) # input x and predict based on x

loss = loss_func(out, y) # must be (1. nn output, 2. target), the target label is NOT one-hotted

optimizer.zero_grad() # clear gradients for next train

loss.backward() # backpropagation, compute gradients

optimizer.step() # apply gradients

if t % 10 == 0 or t in [3, 6]:

# plot and show learning process

plt.figure(figsize=(20,6))

plt.cla()

_, prediction = torch.max(F.softmax(out), 1)

pred_y = prediction.data.numpy().squeeze()

target_y = y.data.numpy()

plt.scatter(x.data.numpy()[:, 0], x.data.numpy()[:, 1], c=pred_y, s=100, lw=0, cmap='RdYlGn')

accuracy = sum(pred_y == target_y)/200.

plt.text(1.5, -4, 'Accuracy=%.2f' % accuracy, fontdict={'size': 20, 'color': 'red'})

plt.show()

plt.pause(0.1)

plt.ioff()

Easy way to Buid Neural Network¶

Neural Network - Neural networks, a beautiful biologically-inspired programming paradigm which enables a computer to learn from observational data.

import torch

import torch.nn.functional as F

from torch.autograd import Variable

import matplotlib.pyplot as plt

%matplotlib inline

torch.manual_seed(1) # reproducible

Design Model¶

# replace following class code with an easy sequential network

class Net(torch.nn.Module):

def __init__(self, n_feature, n_hidden, n_output):

super(Net, self).__init__()

self.hidden = torch.nn.Linear(n_feature, n_hidden) # hidden layer

self.predict = torch.nn.Linear(n_hidden, n_output) # output layer

def forward(self, x):

x = F.relu(self.hidden(x)) # activation function for hidden layer

x = self.predict(x) # linear output

return x

Define Model¶

net1 = Net(1, 10, 1)

# easy and fast way to build your network

net2 = torch.nn.Sequential(

torch.nn.Linear(1, 10),

torch.nn.ReLU(),

torch.nn.Linear(10, 1)

)

print(net1) # net1 architecture

print(net2) # net2 architecture

x = torch.unsqueeze(torch.linspace(-1, 1, 100), dim=1) # x data (tensor), shape=(100, 1)

y = x.pow(2) + 0.2*torch.rand(x.size()) # noisy y data (tensor), shape=(100, 1)

x, y = Variable(x, requires_grad=False), Variable(y, requires_grad=False)

Model Saving Function in Pickle Format¶

def save():

# save net1

net1 = torch.nn.Sequential(

torch.nn.Linear(1, 10),

torch.nn.ReLU(),

torch.nn.Linear(10, 1)

)

optimizer = torch.optim.SGD(net1.parameters(), lr=0.5)

loss_func = torch.nn.MSELoss()

for t in range(100):

prediction = net1(x)

loss = loss_func(prediction, y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

# plot result

plt.figure(1, figsize=(20, 5))

plt.subplot(131)

plt.title('Net1')

plt.scatter(x.data.numpy(), y.data.numpy(), color = "orange")

plt.plot(x.data.numpy(), prediction.data.numpy(), 'r-', lw=5)

# 2 ways to save the net

torch.save(net1, 'net.pkl') # save entire net

torch.save(net1.state_dict(), 'net_params.pkl') # save only the parameters

Load save model¶

def restore_net():

# restore entire net1 to net2

net2 = torch.load('net.pkl')

prediction = net2(x)

# plot resulta

plt.figure(1, figsize=(20, 5))

plt.subplot(132)

plt.title('Net2')

plt.scatter(x.data.numpy(), y.data.numpy(), color = "orange")

plt.plot(x.data.numpy(), prediction.data.numpy(), 'r-', lw=5)

Parameter Restore for net1 to net3¶

def restore_params():

# restore only the parameters in net1 to net3

net3 = torch.nn.Sequential(

torch.nn.Linear(1, 10),

torch.nn.ReLU(),

torch.nn.Linear(10, 1)

)

# copy net1's parameters into net3

net3.load_state_dict(torch.load('net_params.pkl'))

prediction = net3(x)

# plot result

plt.figure(1, figsize=(20, 5))

plt.subplot(133)

plt.title('Net3')

plt.scatter(x.data.numpy(), y.data.numpy(), color = "orange")

plt.plot(x.data.numpy(), prediction.data.numpy(), 'r-', lw=5)

plt.show()

Plot the Models¶

# save net1

save()

# restore entire net (may slow)

restore_net()

# restore only the net parameters

restore_params()

import torch

import torch.utils.data as Data

torch.manual_seed(1) # reproducible

BATCH_SIZE = 5

# BATCH_SIZE = 8

Dataset Preparation¶

x = torch.linspace(1, 10, 10) # this is x data (torch tensor)

y = torch.linspace(10, 1, 10) # this is y data (torch tensor)

Load dataset¶

torch_dataset = Data.TensorDataset(x, y)

loader = Data.DataLoader(

dataset=torch_dataset, # torch TensorDataset format

batch_size=BATCH_SIZE, # mini batch size

shuffle=True, # random shuffle for training

num_workers=2, # subprocesses for loading data

)

Model Treaning¶

def show_batch():

for epoch in range(3): # train entire dataset 3 times

for step, (batch_x, batch_y) in enumerate(loader): # for each training step

# train your data...

print('Epoch: ', epoch, '| Step: ', step, '| batch x: ',

batch_x.numpy(), '| batch y: ', batch_y.numpy())

if __name__ == '__main__':

show_batch()

Optimizers¶

Nice Article on Optimizer : https://blog.paperspace.com/intro-to-optimization-momentum-rmsprop-adam/

import torch

import torch.utils.data as Data

import torch.nn.functional as F

from torch.autograd import Variable

import matplotlib.pyplot as plt

%matplotlib inline

torch.manual_seed(1) # reproducible

Paramater Defined¶

LR = 0.01

BATCH_SIZE = 32

EPOCH = 12

Generate Fake Data¶

# fake dataset

x = torch.unsqueeze(torch.linspace(-1, 1, 1000), dim=1)

y = x.pow(2) + 0.1*torch.normal(torch.zeros(*x.size()))

# plot dataset

plt.figure(figsize=(20,8))

plt.scatter(x.numpy(), y.numpy(), color = "orange")

plt.show()

Put Dataset in torch dataset¶

torch_dataset = Data.TensorDataset(x, y)

loader = Data.DataLoader(

dataset=torch_dataset,

batch_size=BATCH_SIZE,

shuffle=True, num_workers=2,)

Default Network¶

class Net(torch.nn.Module):

def __init__(self):

super(Net, self).__init__()

self.hidden = torch.nn.Linear(1, 20) # hidden layer

self.predict = torch.nn.Linear(20, 1) # output layer

def forward(self, x):

x = F.relu(self.hidden(x)) # activation function for hidden layer

x = self.predict(x) # linear output

return x

Different Net¶

net_SGD = Net()

net_Momentum = Net()

net_RMSprop = Net()

net_Adam = Net()

nets = [net_SGD, net_Momentum, net_RMSprop, net_Adam]

Different optimizers¶

opt_SGD = torch.optim.SGD(net_SGD.parameters(), lr=LR)

opt_Momentum = torch.optim.SGD(net_Momentum.parameters(), lr=LR, momentum=0.8)

opt_RMSprop = torch.optim.RMSprop(net_RMSprop.parameters(), lr=LR, alpha=0.9)

opt_Adam = torch.optim.Adam(net_Adam.parameters(), lr=LR, betas=(0.9, 0.99))

optimizers = [opt_SGD, opt_Momentum, opt_RMSprop, opt_Adam]

loss_func = torch.nn.MSELoss()

losses_his = [[], [], [], []] # record loss

Model Training¶

for epoch in range(EPOCH):

print('Epoch: ', epoch)

for step, (batch_x, batch_y) in enumerate(loader): # for each training step

b_x = Variable(batch_x)

b_y = Variable(batch_y)

for net, opt, l_his in zip(nets, optimizers, losses_his):

output = net(b_x) # get output for every net

loss = loss_func(output, b_y) # compute loss for every net

opt.zero_grad() # clear gradients for next train

loss.backward() # backpropagation, compute gradients

opt.step() # apply gradients

l_his.append(loss.data[0]) # loss recoder

plt.figure(figsize = (20,10))

labels = ['SGD', 'Momentum', 'RMSprop', 'Adam']

for i, l_his in enumerate(losses_his):

plt.plot(l_his, label=labels[i])

plt.legend(loc='best')

plt.xlabel('Steps')

plt.ylabel('Loss')

plt.ylim((0, 0.2))

plt.show()

Section : 3. Advance Neural Network¶

CNN¶

Learning Link : http://cs231n.github.io/convolutional-networks/¶

- Convolutional neural networks are an artificial neural network structure that has gradually emerged in recent years. Because convolutional neural networks can give better prediction results in image and speech recognition, this technology is also widely used. The most commonly used aspect of convolutional neural networks is computer image recognition, but because of constant innovation, it is also used in video analysis, natural language processing, drug discovery, etc. The most recent Alpha Go, let the computer see Knowing Go, there is also the use of this technology.

- Let's take a look at the word convolutional neural network. "Convolution" and "neural network".

- *Convolution* means that the neural network is no longer processing the input information of each pixel, but every picture. A small block of pixels is processed, which enhances the continuity of the picture information. It allows the neural network to see the picture instead of a point. This also deepens the understanding of the picture by the neural network.

- Specifically, The convolutional neural network has a batch filter* that continuously scrolls the information in the image on the image. Each time it collects, it only collects a small pixel area, and then sorts the collected information. The information has some actual representations.*

- For example, the neural network can see some edge image information, and then in the same step, use a similar batch filter to scan the generated edge information, the neural network from these The edge information summarizes the higher-level information structure. For example, the edge of the summary can draw eyes, nose, etc. After a filter, the face information is also from this. Information nose eyes are summed up. The last information we then set into the general picture of several layers fully connected neural layer classification, so that we can get input can be divided into what type of result.

Convolution¶

Pooling¶

- The study found that at each convolution, the neural layer may inadvertently lose some information. At this time, pooling can solve this problem well. And pooling is a process of filtering and filtering. Filter the useful information in the layer and analyze it for the next layer. It also reduces the computational burden of the neural network. That is to say, in the volume set, we do not compress the length and width, try to retain more Information, compression work is handed over to the pool, such an additional work can be very effective to improve accuracy. With these technologies, we can build a convolutional neural network of our own.

Popular CNN Structure¶

- A popular construction structure is this. From bottom to top, the first is the input image, after a convolution, and then the convolution information is processed in a pooling manner. Here, the max pooling method is used. Then, after the same processing, the obtained second processed information is transmitted to the two layers of fully connected neural layers, which is also a general two-layer neural network layer. Finally, it is connected to a classifier for classification prediction.

import torch

import torch.nn as nn

from torch.autograd import Variable

import torch.utils.data as Data

import torchvision

import matplotlib.pyplot as plt

%matplotlib inline

torch.manual_seed(1) # reproducible

Hyper Parameters¶

# Hyper Parameters

EPOCH = 1 # train the training data n times, to save time, we just train 1 epoch

BATCH_SIZE = 50

LR = 0.001 # learning rate

DOWNLOAD_MNIST = False

Dataset Reading¶

# Mnist digits dataset

train_data = torchvision.datasets.MNIST(

root='../input/mnist/mnist/',

train=True, # this is training data

transform=torchvision.transforms.ToTensor(), # Converts a PIL.Image or numpy.ndarray to

# torch.FloatTensor of shape (C x H x W) and normalize in the range [0.0, 1.0]

download=DOWNLOAD_MNIST, # download it if you don't have it

)

Show data¶

# plot one example

print(train_data.train_data.size()) # (60000, 28, 28)

print(train_data.train_labels.size()) # (60000)

plt.imshow(train_data.train_data[0].numpy(), cmap='gray')

plt.title('%i' % train_data.train_labels[0])

plt.show()

Data Convert into Variable¶

# Data Loader for easy mini-batch return in training, the image batch shape will be (50, 1, 28, 28)

train_loader = Data.DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

# convert test data into Variable, pick 2000 samples to speed up testing

test_data = torchvision.datasets.MNIST(root='../input/mnist/mnist/', train=False)

test_x = Variable(torch.unsqueeze(test_data.test_data, dim=1)).type(torch.FloatTensor)[:2000]/255. # shape from (2000, 28, 28) to (2000, 1, 28, 28), value in range(0,1)

test_y = test_data.test_labels[:2000]

Model Design¶

class CNN(nn.Module):

def __init__(self):

super(CNN, self).__init__()

self.conv1 = nn.Sequential( # input shape (1, 28, 28)

nn.Conv2d(

in_channels=1, # input height

out_channels=16, # n_filters

kernel_size=5, # filter size

stride=1, # filter movement/step

padding=2, # if want same width and length of this image after con2d, padding=(kernel_size-1)/2 if stride=1

), # output shape (16, 28, 28)

nn.ReLU(), # activation

nn.MaxPool2d(kernel_size=2), # choose max value in 2x2 area, output shape (16, 14, 14)

)

self.conv2 = nn.Sequential( # input shape (1, 28, 28)

nn.Conv2d(16, 32, 5, 1, 2), # output shape (32, 14, 14)

nn.ReLU(), # activation

nn.MaxPool2d(2), # output shape (32, 7, 7)

)

self.out = nn.Linear(32 * 7 * 7, 10) # fully connected layer, output 10 classes

def forward(self, x):

x = self.conv1(x)

x = self.conv2(x)

x = x.view(x.size(0), -1) # flatten the output of conv2 to (batch_size, 32 * 7 * 7)

output = self.out(x)

return output, x # return x for visualization

cnn = CNN()

print(cnn) # net architecture

optimizer = torch.optim.Adam(cnn.parameters(), lr=LR) # optimize all cnn parameters

loss_func = nn.CrossEntropyLoss() # the target label is not one-hotted

Resulting Graphs¶

# following function (plot_with_labels) is for visualization, can be ignored if not interested

from matplotlib import cm

try: from sklearn.manifold import TSNE; HAS_SK = True

except: HAS_SK = False; print('Please install sklearn for layer visualization')

def plot_with_labels(lowDWeights, labels):

plt.figure(figsize = (20,6))

plt.cla()

X, Y = lowDWeights[:, 0], lowDWeights[:, 1]

for x, y, s in zip(X, Y, labels):

c = cm.rainbow(int(255 * s / 9)); plt.text(x, y, s, backgroundcolor=c, fontsize=9)

plt.xlim(X.min(), X.max()); plt.ylim(Y.min(), Y.max()); plt.title('Visualize last layer'); plt.show(); plt.pause(0.01)

plt.ion()

# training and testing

for epoch in range(EPOCH):

for step, (x, y) in enumerate(train_loader): # gives batch data, normalize x when iterate train_loader

b_x = Variable(x) # batch x

b_y = Variable(y) # batch y

output = cnn(b_x)[0] # cnn output

loss = loss_func(output, b_y) # cross entropy loss

optimizer.zero_grad() # clear gradients for this training step

loss.backward() # backpropagation, compute gradients

optimizer.step() # apply gradients

if step % 100 == 0:

test_output, last_layer = cnn(test_x)

pred_y = torch.max(test_output, 1)[1].data.squeeze()

accuracy = (pred_y == test_y).sum().item() / float(test_y.size(0))

print('Epoch: ', epoch, '| train loss: %.4f' % loss.data[0], '| test accuracy: %.2f' % accuracy)

if HAS_SK:

# Visualization of trained flatten layer (T-SNE)

tsne = TSNE(perplexity=30, n_components=2, init='pca', n_iter=5000)

plot_only = 500

low_dim_embs = tsne.fit_transform(last_layer.data.numpy()[:plot_only, :])

labels = test_y.numpy()[:plot_only]

plot_with_labels(low_dim_embs, labels)

plt.ioff()

# print 10 predictions from test data

test_output, _ = cnn(test_x[:10])

pred_y = torch.max(test_output, 1)[1].data.numpy().squeeze()

print(pred_y, 'prediction number')

print(test_y[:10].numpy(), 'real number')

RNN-Classification¶

Sequence Modeling¶

- We imagine that there is now a set of sequence data data 0,1,2,3. When predicting result0, we are based on data0, and when predicting other data, we are only based on a single data. The neural networks are all the same NN. However, the data is in an ascending order, just like cooking in the kitchen. Sauce A is placed earlier than Sauce B, otherwise it will be scented. So the ordinary neural network structure can not let NN understands the association between these data.

Neural network for processing sequence¶

- So how do we let the association between data be analyzed by NN? Think about how humans analyze the *relationship between things.* The most basic way is to remember what happened before. Then we let the neural network also have this. *The ability to remember what happened before*.

- When analyzing Data0, we store the analysis results in memory. Then when analyzing data1, NN will generate new memories, but new memories and old memories are unrelated. Simply call the old memory and analyze it together. If you continue to analyze more ordered data, RNN will accumulate the previous memories and analyze them together.

- Let's repeat the process just now, but this time to add some mathematical aspects. Every time RNN is done, it will produce a description of the current state. We replace it with a shorthand S(t), then the RNN starts Analyze x(t+1), which produces s(t+1) from x(t+1), but y(t+1) is created by s(t) and s(t+1) So the RNN we usually see can also be expressed like this.

Application of RNN¶

- The form of RNN is not limited to this. His structure is very free. If it is used for classification problems, for example, if a person says a sentence, the emotional color of this sentence is positive or negative. Then we will You can use the RNN that outputs the judgment result only at the last time.

- Or this is the picture description RNN, we only need an X to replace the input picture, and then generate a paragraph describing the picture.

- Or the RNN of the language translation, give a paragraph of English, and then translate it into Any Langauge.

- With these different forms of RNN, RNN becomes powerful. There are many interesting RNN applications. For example, let RNN describe the photo. Let RNN write the academic paper, let RNN write the program script, let RNN compose. We The average person can't even tell if this is written by the machine.

import torch

from torch import nn

from torch.autograd import Variable

import torchvision.datasets as dsets

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

%matplotlib inline

torch.manual_seed(1) # reproducible

Hyper Parameters¶

EPOCH = 1 # train the training data n times, to save time, we just train 1 epoch

BATCH_SIZE = 64

TIME_STEP = 28 # rnn time step / image height

INPUT_SIZE = 28 # rnn input size / image width

LR = 0.01 # learning rate

DOWNLOAD_MNIST = True # set to True if haven't download the data

# Mnist digital dataset

train_data = dsets.MNIST(

root='../input/mnist/mnist/',

train=True, # this is training data

transform=transforms.ToTensor(), # Converts a PIL.Image or numpy.ndarray to

# torch.FloatTensor of shape (C x H x W) and normalize in the range [0.0, 1.0]

download=DOWNLOAD_MNIST, # download it if you don't have it

)

# plot one example

print(train_data.train_data.size()) # (60000, 28, 28)

print(train_data.train_labels.size()) # (60000)

plt.imshow(train_data.train_data[0].numpy(), cmap='gray')

plt.title('%i' % train_data.train_labels[0])

plt.show()

Data Loader for easy mini-batch return in training¶

train_loader = torch.utils.data.DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

Convert test data into Variable, pick 2000 samples to speed up testing¶

test_data = dsets.MNIST(root='../input/mnist/mnist/', train=False, transform=transforms.ToTensor())

test_x = Variable(test_data.test_data, volatile=True).type(torch.FloatTensor)[:2000]/255. # shape (2000, 28, 28) value in range(0,1)

test_y = test_data.test_labels.numpy().squeeze()[:2000] # covert to numpy array

Model Design¶

class RNN(nn.Module):

def __init__(self):

super(RNN, self).__init__()

self.rnn = nn.LSTM( # if use nn.RNN(), it hardly learns

input_size=INPUT_SIZE,

hidden_size=64, # rnn hidden unit

num_layers=1, # number of rnn layer

batch_first=True, # input & output will has batch size as 1s dimension. e.g. (batch, time_step, input_size)

)

self.out = nn.Linear(64, 10)

def forward(self, x):

# x shape (batch, time_step, input_size)

# r_out shape (batch, time_step, output_size)

# h_n shape (n_layers, batch, hidden_size)

# h_c shape (n_layers, batch, hidden_size)

r_out, (h_n, h_c) = self.rnn(x, None) # None represents zero initial hidden state

# choose r_out at the last time step

out = self.out(r_out[:, -1, :])

return out

rnn = RNN()

print(rnn)

optimizer = torch.optim.Adam(rnn.parameters(), lr=LR) # optimize all cnn parameters

loss_func = nn.CrossEntropyLoss()

Training and Testing¶

# training and testing

for epoch in range(EPOCH):

for step, (x, y) in enumerate(train_loader): # gives batch data

b_x = Variable(x.view(-1, 28, 28)) # reshape x to (batch, time_step, input_size)

b_y = Variable(y) # batch y

output = rnn(b_x) # rnn output

loss = loss_func(output, b_y) # cross entropy loss

optimizer.zero_grad() # clear gradients for this training step

loss.backward() # backpropagation, compute gradients

optimizer.step() # apply gradients

if step % 50 == 0:

test_output = rnn(test_x) # (samples, time_step, input_size)

pred_y = torch.max(test_output, 1)[1].data.numpy().squeeze()

accuracy = sum(pred_y == test_y) / float(test_y.size)

print('Epoch: ', epoch, '| train loss: %.4f' % loss.data[0], '| test accuracy: %.2f' % accuracy)

Predicted and Actual Value match¶

# print 20 predictions from test data

test_output = rnn(test_x[:20].view(-1, 28, 28))

pred_y = torch.max(test_output, 1)[1].data.numpy().squeeze()

print(pred_y, 'prediction number')

print(test_y[:20], 'real number')

plt.figure(1, figsize=(20, 8))

plt.plot(pred_y, c='green', label='Predicted')

plt.plot(test_y[:20], c='orange', label='Actual')

plt.xlabel("Index")

plt.ylabel("Predicted/Actual Value")

plt.title("RNN Classification Result Analysis")

plt.legend(loc='best')

RNN-Regression¶

Note : Regression Concept I have already explaned above, so here I have applied direct RNN on Regression.

import torch

from torch import nn

from torch.autograd import Variable

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

torch.manual_seed(1) # reproducible

Hyper Parameters¶

# Hyper Parameters

TIME_STEP = 10 # rnn time step

INPUT_SIZE = 1 # rnn input size

LR = 0.02 # learning rate

Show data¶

steps = np.linspace(0, np.pi*2, 100, dtype=np.float32)

x_np = np.sin(steps) # float32 for converting torch FloatTensor

y_np = np.cos(steps)

plt.figure(figsize=(20,5))

plt.plot(steps, y_np, 'r-', label='target (cos)')

plt.plot(steps, x_np, 'b-', label='input (sin)')

plt.legend(loc='best')

plt.show()

Model Design¶

class RNN(nn.Module):

def __init__(self):

super(RNN, self).__init__()

self.rnn = nn.RNN(

input_size=INPUT_SIZE,

hidden_size=32, # rnn hidden unit

num_layers=1, # number of rnn layer

batch_first=True, # input & output will has batch size as 1s dimension. e.g. (batch, time_step, input_size)

)

self.out = nn.Linear(32, 1)

def forward(self, x, h_state):

# x (batch, time_step, input_size)

# h_state (n_layers, batch, hidden_size)

# r_out (batch, time_step, hidden_size)

r_out, h_state = self.rnn(x, h_state)

outs = [] # save all predictions

for time_step in range(r_out.size(1)): # calculate output for each time step

outs.append(self.out(r_out[:, time_step, :]))

return torch.stack(outs, dim=1), h_state

# instead, for simplicity, you can replace above codes by follows

# r_out = r_out.view(-1, 32)

# outs = self.out(r_out)

# return outs, h_state

rnn = RNN()

print(rnn)

optimizer = torch.optim.Adam(rnn.parameters(), lr=LR) # optimize all cnn parameters

loss_func = nn.MSELoss()

Model Training and Result Plotting¶

h_state = None # for initial hidden state

plt.ion() # continuously plot

for step in range(50):

start, end = step * np.pi, (step+1)*np.pi # time range

# use sin predicts cos

steps = np.linspace(start, end, TIME_STEP, dtype=np.float32)

x_np = np.sin(steps) # float32 for converting torch FloatTensor

y_np = np.cos(steps)

x = torch.from_numpy(x_np[np.newaxis, :, np.newaxis]) # shape (batch, time_step, input_size)

y = torch.from_numpy(y_np[np.newaxis, :, np.newaxis])

prediction, h_state = rnn(x, h_state) # rnn output

# !! next step is important !!

h_state = h_state.data # repack the hidden state, break the connection from last iteration

loss = loss_func(prediction, y) # calculate loss

optimizer.zero_grad() # clear gradients for this training step

loss.backward() # backpropagation, compute gradients

optimizer.step() # apply gradients

# plotting

plt.figure(1, figsize=(20, 5))

plt.plot(steps, y_np.flatten(), 'r-', label="Actual")

plt.plot(steps, prediction.data.numpy().flatten(), 'b-', label="Predicted")

plt.xlabel("Steps")

plt.ylabel("Actual/Predicted")

plt.title("Result")

plt.draw(); plt.pause(0.05)

plt.ioff()

plt.show()

AutoEncoder¶

*Nueral Network Unsupervised form is Known as AutoEncoder.*

Autoencoders (AE) are neural networks that aims to copy their inputs to their outputs. They work by compressing the input into a latent-space representation, and then reconstructing the output from this representation. This kind of network is composed of two parts :

1) Encoder: This is the part of the network that compresses the input into a latent-space representation. It can be represented by an encoding function h=f(x).

2) Decoder: This part aims to reconstruct the input from the latent space representation. It can be represented by a decoding function r=g(h).

What are autoencoders used for ?¶

- Data denoising and Dimensionality reduction for data visualization are considered as two main interesting practical applications of autoencoders. With appropriate dimensionality and sparsity constraints, autoencoders can learn data projections that are more interesting than PCA or other basic techniques.

Types of autoencoder :¶

Vanilla autoencoder: In its simplest form, the autoencoder is a three layers net, i.e. a neural net with one hidden layer. The input and output are the same, and we learn how to reconstruct the input, for example using the adam optimizer and the mean squared error loss function.(Code)

Multilayer autoencoder : If one hidden layer is not enough, we can obviously extend the autoencoder to more hidden layers.(Code)

Convolutional autoencoder: We may also ask ourselves: can autoencoders be used with Convolutions instead of Fully-connected layers ?

The answer is yes and the principle is the same, but using images (3D vectors) instead of flattened 1D vectors. The input image is downsampled to give a latent representation of smaller dimensions and force the autoencoder to learn a compressed version of the images. (Code)

Regularized autoencoder: There are other ways we can constraint the reconstruction of an autoencoder than to impose a hidden layer of smaller dimension than the input. Rather than limiting the model capacity by keeping the encoder and decoder shallow and the code size small, regularized autoencoders use a loss function that encourages the model to have other properties besides the ability to copy its input to its output. In practice, we usually find two types of regularized autoencoder: the sparse autoencoder and the denoising autoencoder.

Sparse autoencoder: Sparse autoencoders are typically used to learn features for another task such as classification. An autoencoder that has been regularized to be sparse must respond to unique statistical features of the dataset it has been trained on, rather than simply acting as an identity function. In this way, training to perform the copying task with a sparsity penalty can yield a model that has learned useful features as a byproduct.(Code)

Denoising autoencoder : Rather than adding a penalty to the loss function, we can obtain an autoencoder that learns something useful by changing the reconstruction error term of the loss function. This can be done by adding some noise of the input image and make the autoencoder learn to remove it. By this means, the encoder will extract the most important features and learn a robuster representation of the data.(Code)

import torch

import torch.nn as nn

from torch.autograd import Variable

import torch.utils.data as Data

import torchvision

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

from matplotlib import cm

import numpy as np

%matplotlib inline

torch.manual_seed(1) # reproducible

Hyper Parameters¶

EPOCH = 10

BATCH_SIZE = 64

LR = 0.005 # learning rate

DOWNLOAD_MNIST = False

N_TEST_IMG = 5

# Mnist digits dataset

train_data = torchvision.datasets.MNIST(

root='../input/mnist/mnist/',

train=True, # this is training data

transform=torchvision.transforms.ToTensor(), # Converts a PIL.Image or numpy.ndarray to

# torch.FloatTensor of shape (C x H x W) and normalize in the range [0.0, 1.0]

download=DOWNLOAD_MNIST, # download it if you don't have it

)

# plot one example

print(train_data.train_data.size()) # (60000, 28, 28)

print(train_data.train_labels.size()) # (60000)

plt.imshow(train_data.train_data[2].numpy(), cmap='gray')

plt.title('%i' % train_data.train_labels[2])

plt.show()

Data Loader for easy mini-batch return in training, the image batch shape will be (50, 1, 28, 28)¶

train_loader = Data.DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

Model Design¶

class AutoEncoder(nn.Module):

def __init__(self):

super(AutoEncoder, self).__init__()

self.encoder = nn.Sequential(

nn.Linear(28*28, 128),

nn.Tanh(),

nn.Linear(128, 64),

nn.Tanh(),

nn.Linear(64, 12),

nn.Tanh(),

nn.Linear(12, 3), # compress to 3 features which can be visualized in plt

)

self.decoder = nn.Sequential(

nn.Linear(3, 12),

nn.Tanh(),

nn.Linear(12, 64),

nn.Tanh(),

nn.Linear(64, 128),

nn.Tanh(),

nn.Linear(128, 28*28),

nn.Sigmoid(), # compress to a range (0, 1)

)

def forward(self, x):

encoded = self.encoder(x)

decoded = self.decoder(encoded)

return encoded, decoded

Model Training¶

autoencoder = AutoEncoder()

print(autoencoder)

optimizer = torch.optim.Adam(autoencoder.parameters(), lr=LR)

loss_func = nn.MSELoss()

# original data (first row) for viewing

view_data = Variable(train_data.train_data[:N_TEST_IMG].view(-1, 28*28).type(torch.FloatTensor)/255.)

for epoch in range(EPOCH):

for step, (x, y) in enumerate(train_loader):

b_x = Variable(x.view(-1, 28*28)) # batch x, shape (batch, 28*28)

b_y = Variable(x.view(-1, 28*28)) # batch y, shape (batch, 28*28)

b_label = Variable(y) # batch label

encoded, decoded = autoencoder(b_x)

loss = loss_func(decoded, b_y) # mean square error

optimizer.zero_grad() # clear gradients for this training step

loss.backward() # backpropagation, compute gradients

optimizer.step() # apply gradients

if step % 500 == 0 and epoch in [0, 5, EPOCH-1]:

print('Epoch: ', epoch, '| train loss: %.4f' % loss.data[0])

# plotting decoded image (second row)

_, decoded_data = autoencoder(view_data)

# initialize figure

f, a = plt.subplots(2, N_TEST_IMG, figsize=(5, 2))

for i in range(N_TEST_IMG):

a[0][i].imshow(np.reshape(view_data.data.numpy()[i], (28, 28)), cmap='gray'); a[0][i].set_xticks(()); a[0][i].set_yticks(())

for i in range(N_TEST_IMG):

a[1][i].clear()

a[1][i].imshow(np.reshape(decoded_data.data.numpy()[i], (28, 28)), cmap='gray')

a[1][i].set_xticks(()); a[1][i].set_yticks(())

plt.show(); plt.pause(0.05)

Visualize in 3D plot¶

# visualize in 3D plot

view_data = Variable(train_data.train_data[:200].view(-1, 28*28).type(torch.FloatTensor)/255.)

encoded_data, _ = autoencoder(view_data)

fig = plt.figure(2,figsize=(15,6)); ax = Axes3D(fig)

X, Y, Z = encoded_data.data[:, 0].numpy(), encoded_data.data[:, 1].numpy(), encoded_data.data[:, 2].numpy()

values = train_data.train_labels[:200].numpy()

for x, y, z, s in zip(X, Y, Z, values):

c = cm.rainbow(int(255*s/9)); ax.text(x, y, z, s, backgroundcolor=c)

ax.set_xlim(X.min(), X.max()); ax.set_ylim(Y.min(), Y.max()); ax.set_zlim(Z.min(), Z.max())

plt.show()

DQN Reinforcement Learning¶

- Intensive learning, Deep Q Network is referred to as DQN for short. The Google Deep Mind team is relying on this DQN to make computers play more powerful than us.

Reinforcement learning and neural networks¶

- The reinforcement learning methods are more traditional methods. Nowadays, with the various applications of machine learning in daily life, various machine learning methods are also integrated, merged and upgraded. The intensive study explored is a method that combines neural network and Q learning , called Deep Q Network. Why is this new structure proposed? Originally, traditional form-based reinforcement learning has such a bottleneck.

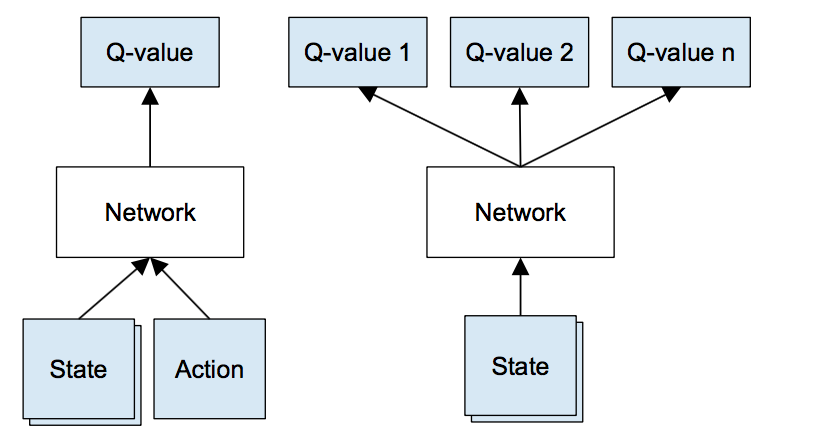

The role of neural networks¶

- We use a table to store each state state, and the Q value that each behavior action has in this state. The problem is that it is too complicated, and the state can be more than the stars in the sky (such as Go). Using tables to store them, I am afraid that our computer has not enough memory, and it is time consuming to search for the corresponding state in such a large table. However, in machine learning, there is a way It is very good for this kind of thing, that is the neural network.

- We can use the state and action as the input of the neural network, and then the neural network analysis to get the Q value of the action, so we do not need to record the Q value in the table, and It is directly using the neural network to generate Q values. Another form is that we can only input the state value, output all the action values, and then directly select the action with the maximum value as the next step according to the principle of Q learning.

- The action. We can imagine that the neural network accepts external information, which is equivalent to collecting information from the eyes, nose and ears, and then outputting each action through brain processing. The value, finally select the action by means of reinforcement learning.

Q-Learning Reference¶

- Q-learning learns the action-value function Q(s, a): how good to take an action at a particular state. For example, for the board position below, how good to move the pawn two steps forward. Literally, we assign a scalar value over the benefit of making such a move.

In Q-learning, we build a memory table Q[s, a] to store Q-values for all possible combinations of s and a. If you are a chess player, it is the cheat sheet for the best move. In the example above, we may realize that moving the pawn 2 steps ahead has the highest Q values over all others. (The memory consumption will be too high for the chess game. But let’s stick with this approach a little bit longer.)

Technical speaking, we sample an action from the current state. We find out the reward R (if any) and the new state s’ (the new board position). From the memory table, we determine the next action a’ to take which has the maximum Q(s’, a’).

In a video game, we score points (rewards) by shooting down the enemy. In a chess game, the reward is +1 when we win or -1 if we lose. So there is only one reward given and it takes a while to get it.

We can take a single move a and see what reward R can we get. This creates a one-step look ahead. R + Q(s’, a’) becomes the target that we want Q(s, a) to be. For example, say all Q values are equal to one now. If we move the joystick to the right and score 2 points, we want to move Q(s, a) closer to 3 (i.e. 2 + 1).

- As we keep playing, we maintain a running average for Q. The values will get better and with some tricks, the Q values will converge.

Q-Learning Algorithm¶

- However, if the combinations of states and actions are too large, the memory and the computation requirement for Q will be too high. To address that, we switch to a deep network Q (DQN) to approximate Q(s, a). This is called Deep Q-learning. With the new approach, we generalize the approximation of the Q-value function rather than remembering the solutions.

Deep Q-Network Algorithm with experience replay¶

Algorithms

import torch

import torch.nn as nn

from torch.autograd import Variable

import torch.nn.functional as F

import numpy as np

import gym

Hyper Parameters¶

# Hyper Parameters

BATCH_SIZE = 32

LR = 0.01 # learning rate

EPSILON = 0.9 # greedy policy

GAMMA = 0.9 # reward discount

TARGET_REPLACE_ITER = 100 # target update frequency

MEMORY_CAPACITY = 2000

env = gym.make('CartPole-v0')

env = env.unwrapped

N_ACTIONS = env.action_space.n

N_STATES = env.observation_space.shape[0]

Neural Network Design¶

class Net(nn.Module):

def __init__(self, ):

super(Net, self).__init__()

self.fc1 = nn.Linear(N_STATES, 10)

self.fc1.weight.data.normal_(0, 0.1) # initialization

self.out = nn.Linear(10, N_ACTIONS)

self.out.weight.data.normal_(0, 0.1) # initialization

def forward(self, x):

x = self.fc1(x)

x = F.relu(x)

actions_value = self.out(x)

return actions_value

DQN Model Design¶

class DQN(object):

def __init__(self):

self.eval_net, self.target_net = Net(), Net()

self.learn_step_counter = 0 # for target updating

self.memory_counter = 0 # for storing memory

self.memory = np.zeros((MEMORY_CAPACITY, N_STATES * 2 + 2)) # initialize memory

self.optimizer = torch.optim.Adam(self.eval_net.parameters(), lr=LR)

self.loss_func = nn.MSELoss()

def choose_action(self, x):

x = Variable(torch.unsqueeze(torch.FloatTensor(x), 0))

# input only one sample

if np.random.uniform() < EPSILON: # greedy

actions_value = self.eval_net.forward(x)

action = torch.max(actions_value, 1)[1].data.numpy()[0, 0] # return the argmax

else: # random

action = np.random.randint(0, N_ACTIONS)

return action

def store_transition(self, s, a, r, s_):

transition = np.hstack((s, [a, r], s_))

# replace the old memory with new memory

index = self.memory_counter % MEMORY_CAPACITY

self.memory[index, :] = transition

self.memory_counter += 1

def learn(self):

# target parameter update

if self.learn_step_counter % TARGET_REPLACE_ITER == 0:

self.target_net.load_state_dict(self.eval_net.state_dict())

self.learn_step_counter += 1

# sample batch transitions

sample_index = np.random.choice(MEMORY_CAPACITY, BATCH_SIZE)

b_memory = self.memory[sample_index, :]

b_s = Variable(torch.FloatTensor(b_memory[:, :N_STATES]))

b_a = Variable(torch.LongTensor(b_memory[:, N_STATES:N_STATES+1].astype(int)))

b_r = Variable(torch.FloatTensor(b_memory[:, N_STATES+1:N_STATES+2]))

b_s_ = Variable(torch.FloatTensor(b_memory[:, -N_STATES:]))

# q_eval w.r.t the action in experience

q_eval = self.eval_net(b_s).gather(1, b_a) # shape (batch, 1)

q_next = self.target_net(b_s_).detach() # detach from graph, don't backpropagate

q_target = b_r + GAMMA * q_next.max(1)[0] # shape (batch, 1)

loss = self.loss_func(q_eval, q_target)

self.optimizer.zero_grad()

loss.backward()

self.optimizer.step()

dqn = DQN()

'''

print('\nCollecting experience...')

for i_episode in range(400):

s = env.reset()

ep_r = 0

while True:

env.render()

a = dqn.choose_action(s)

# take action

s_, r, done, info = env.step(a)

# modify the reward

x, x_dot, theta, theta_dot = s_

r1 = (env.x_threshold - abs(x)) / env.x_threshold - 0.8

r2 = (env.theta_threshold_radians - abs(theta)) / env.theta_threshold_radians - 0.5

r = r1 + r2

dqn.store_transition(s, a, r, s_)

ep_r += r

if dqn.memory_counter > MEMORY_CAPACITY:

dqn.learn()

if done:

print('Ep: ', i_episode,

'| Ep_r: ', round(ep_r, 2))

if done:

break

s = s_

'''

Note : After Running this code you can find below output this will not open here you have to run in your own computer.because it will generate ikernel for this.¶

Generative Adversarial Network¶

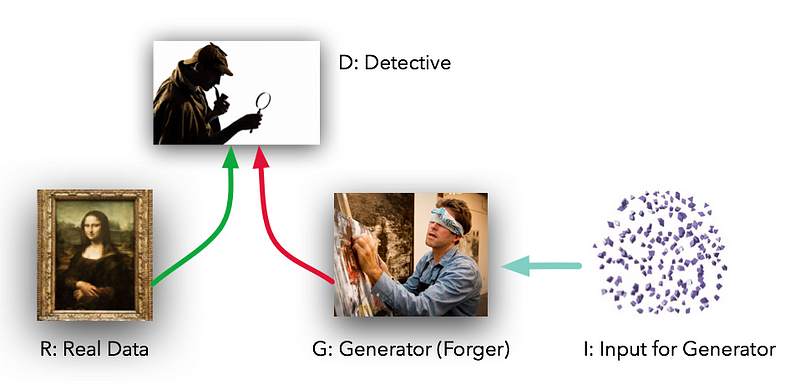

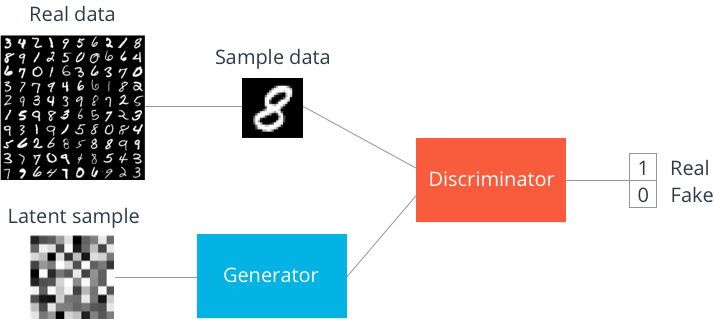

- In 2014, Ian Goodfellow and his colleagues at the University of Montreal published a stunning paper introducing the world to GANs, or generative adversarial networks. Through an innovative combination of computational graphs and game theory they showed that, given enough modeling power, two models fighting against each other would be able to co-train through plain old backpropagation.

The models play two distinct (literally, adversarial) roles. Given some real data set R, G is the generator, trying to create fake data that looks just like the genuine data, while D is the discriminator, getting data from either the real set or G and labeling the difference. Goodfellow’s metaphor (and a fine one it is) was that G was like a team of forgers trying to match real paintings with their output, while D was the team of detectives trying to tell the difference. (Except that in this case, the forgers G never get to see the original data — only the judgments of D. They’re like blind forgers.)

GANs or Generative Adversarial Networks are a kind of neural networks that is composed of 2 separate deep neural networks competing each other: the generator and the discriminator.

GAN to generate various things. It can generate realistic images, 3D-models, videos, and a lot more.Like this Example below

Idea of GAN¶

Architecture of GAN¶

1) Generator

1) Generator

2) Discriminator:

2) Discriminator:

Conceptual Diagram¶

- "The generator will try to generate fake images that fool the discriminator into thinking that they’re real. And the discriminator will try to distinguish between a real and a generated image as best as it could when an image is fed.”

- They both get stronger together until the discriminator cannot distinguish between the real and the generated images anymore. It could do nothing more than predicting real or fake with only 50% accuracy. This is no more useful than flipping a coin and guess.

- This inaccuracy of the discriminator occurs because the generator generates really realistic face images that it seems like they are actually real. So, it is normally expected that it wouldn’t be able to distinguish them. When that happens, the most educated guess would be as equally useful as an uneducated random guess.

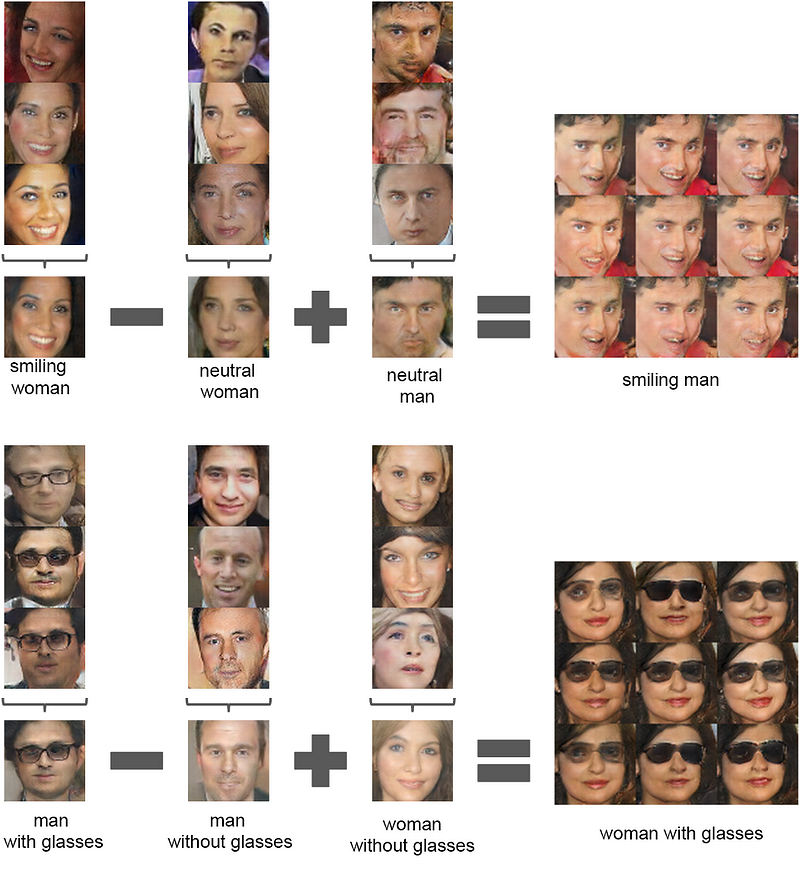

The optimal generator¶

- Intuitively, the Code vector that I shown earlier in the generator will represent things that are abstract. For example, if the Code vector has 100 dimensions, there might be a dimension that represents “face age” or “gender” automatically.

- Why would it learn such representation? Because knowing people ages and their gender helps you draw their face more properly!

The optimal discriminator¶

- When given an image, the discriminator must be looking for components of the face to be able to distinguish correctly.

- Intuitively, some of the discriminator’s hidden neurons will be excited when it sees things like eyes, mouths, hair, etc. These features are good for other purposes later like classification!

import torch

import torch.nn as nn

from torch.autograd import Variable

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

torch.manual_seed(1) # reproducible

np.random.seed(1)

Hyper Parameters¶

# Hyper Parameters

BATCH_SIZE = 64

LR_G = 0.0001 # learning rate for generator

LR_D = 0.0001 # learning rate for discriminator

N_IDEAS = 5 # think of this as number of ideas for generating an art work (Generator)

ART_COMPONENTS = 15 # it could be total point G can draw in the canvas

PAINT_POINTS = np.vstack([np.linspace(-1, 1, ART_COMPONENTS) for _ in range(BATCH_SIZE)])

# show our beautiful painting range

plt.figure(figsize=(20,6))

plt.plot(PAINT_POINTS[0], 2 * np.power(PAINT_POINTS[0], 2) + 1, c='#74BCFF', lw=3, label='upper bound')

plt.plot(PAINT_POINTS[0], 1 * np.power(PAINT_POINTS[0], 2) + 0, c='#FF9359', lw=3, label='lower bound')

plt.legend(loc='upper right')

plt.show()

def artist_works(): # painting from the famous artist (real target)

a = np.random.uniform(1, 2, size=BATCH_SIZE)[:, np.newaxis]

paintings = a * np.power(PAINT_POINTS, 2) + (a-1)

paintings = torch.from_numpy(paintings).float()

return Variable(paintings)

G = nn.Sequential( # Generator

nn.Linear(N_IDEAS, 128), # random ideas (could from normal distribution)

nn.ReLU(),

nn.Linear(128, ART_COMPONENTS), # making a painting from these random ideas

)

D = nn.Sequential( # Discriminator

nn.Linear(ART_COMPONENTS, 128), # receive art work either from the famous artist or a newbie like G

nn.ReLU(),

nn.Linear(128, 1),

nn.Sigmoid(), # tell the probability that the art work is made by artist

)

opt_D = torch.optim.Adam(D.parameters(), lr=LR_D)

opt_G = torch.optim.Adam(G.parameters(), lr=LR_G)

for step in range(800):

artist_paintings = artist_works() # real painting from artist

G_ideas = torch.randn(BATCH_SIZE, N_IDEAS) # random ideas

G_paintings = G(G_ideas) # fake painting from G (random ideas)

prob_artist0 = D(artist_paintings) # D try to increase this prob

prob_artist1 = D(G_paintings) # D try to reduce this prob

D_loss = - torch.mean(torch.log(prob_artist0) + torch.log(1. - prob_artist1))

G_loss = torch.mean(torch.log(1. - prob_artist1))

opt_D.zero_grad()

D_loss.backward(retain_graph=True) # reusing computational graph

opt_D.step()

opt_G.zero_grad()

G_loss.backward()

opt_G.step()

if step % 50 == 0: # plotting

plt.figure(figsize=(20,5))

plt.cla()

plt.plot(PAINT_POINTS[0], G_paintings.data.numpy()[0], c='#4AD631', lw=3, label='Generated painting',)

plt.plot(PAINT_POINTS[0], 2 * np.power(PAINT_POINTS[0], 2) + 1, c='#74BCFF', lw=3, label='upper bound')

plt.plot(PAINT_POINTS[0], 1 * np.power(PAINT_POINTS[0], 2) + 0, c='#FF9359', lw=3, label='lower bound')

plt.text(-.5, 2.3, 'D accuracy=%.2f (0.5 for D to converge)' % prob_artist0.data.numpy().mean(), fontdict={'size': 13})

plt.text(-.5, 2, 'D score= %.2f (-1.38 for G to converge)' % -D_loss.data.numpy(), fontdict={'size': 13})

plt.ylim((0, 3));plt.legend(loc='upper right', fontsize=10);plt.draw();plt.pause(0.01)

plt.ioff()

plt.show()

Conditional GAN¶

import torch

import torchvision

import torch.nn as nn

import torch.nn.functional as F

from torch.utils.data import DataLoader

from torchvision import datasets

from torchvision import transforms

from torchvision.utils import save_image

import numpy as np

import datetime

import scipy.misc

MODEL_NAME = 'ConditionalGAN'

DEVICE = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

def to_cuda(x):

return x.to(DEVICE)

def to_onehot(x, num_classes=10):

assert isinstance(x, int) or isinstance(x, (torch.LongTensor, torch.cuda.LongTensor))

if isinstance(x, int):

c = torch.zeros(1, num_classes).long()

c[0][x] = 1

else:

x = x.cpu()

c = torch.LongTensor(x.size(0), num_classes)

c.zero_()

c.scatter_(1, x, 1) # dim, index, src value

return c

def get_sample_image(G, n_noise=100):

"""

save sample 100 images

"""

for num in range(10):

c = to_cuda(to_onehot(num))

for i in range(10):

z = to_cuda(torch.randn(1, n_noise))

y_hat = G(z,c)

line_img = torch.cat((line_img, y_hat.view(28, 28)), dim=1) if i > 0 else y_hat.view(28, 28)

all_img = torch.cat((all_img, line_img), dim=0) if num > 0 else line_img

img = all_img.cpu().data.numpy()

return img

class Discriminator(nn.Module):

"""

Simple Discriminator w/ MLP

"""

def __init__(self, input_size=784, label_size=10, num_classes=1):

super(Discriminator, self).__init__()

self.layer1 = nn.Sequential(

nn.Linear(input_size+label_size, 200),

nn.ReLU(),

nn.Dropout(),

)

self.layer2 = nn.Sequential(

nn.Linear(200, 200),

nn.ReLU(),

nn.Dropout(),

)

self.layer3 = nn.Sequential(

nn.Linear(200, num_classes),

nn.Sigmoid(),

)

def forward(self, x, y):

x, y = x.view(x.size(0), -1), y.view(y.size(0), -1).float()

v = torch.cat((x, y), 1) # v: [input, label] concatenated vector

y_ = self.layer1(v)

y_ = self.layer2(y_)

y_ = self.layer3(y_)

return y_

class Generator(nn.Module):

"""

Simple Generator w/ MLP

"""

def __init__(self, input_size=100, label_size=10, num_classes=784):

super(Generator, self).__init__()

self.layer = nn.Sequential(

nn.Linear(input_size+label_size, 200),

nn.LeakyReLU(0.2),

nn.Linear(200, 200),

nn.LeakyReLU(0.2),

nn.Linear(200, num_classes),

nn.Tanh()

)

def forward(self, x, y):

x, y = x.view(x.size(0), -1), y.view(y.size(0), -1).float()

v = torch.cat((x, y), 1) # v: [input, label] concatenated vector

y_ = self.layer(v)

y_ = y_.view(x.size(0), 1, 28, 28)

return y_

D = to_cuda(Discriminator())

G = to_cuda(Generator())

transform = transforms.Compose([transforms.ToTensor(),

transforms.Normalize(mean=(0.5, 0.5, 0.5),

std=(0.5, 0.5, 0.5))]

)

mnist = datasets.MNIST(root='../input/mnist/mnist/', train=True, transform=transform, download=True)

batch_size = 64

condition_size = 10

data_loader = DataLoader(dataset=mnist, batch_size=batch_size, shuffle=True, drop_last=True)

criterion = nn.BCELoss()

D_opt = torch.optim.Adam(D.parameters())

G_opt = torch.optim.Adam(G.parameters())

max_epoch = 100 # need more than 200 epochs for training generator

step = 0

n_critic = 5 # for training more k steps about Discriminator

n_noise = 100

D_labels = to_cuda(torch.ones(batch_size)) # Discriminator Label to real

D_fakes = to_cuda(torch.zeros(batch_size)) # Discriminator Label to fake

for epoch in range(max_epoch):

for idx, (images, labels) in enumerate(data_loader):

step += 1

# Training Discriminator

x = to_cuda(images)

y = labels.view(batch_size, 1)

y = to_cuda(to_onehot(y))

x_outputs = D(x, y)

D_x_loss = criterion(x_outputs, D_labels)

z = to_cuda(torch.randn(batch_size, n_noise))

z_outputs = D(G(z, y), y)

D_z_loss = criterion(z_outputs, D_fakes)

D_loss = D_x_loss + D_z_loss

D.zero_grad()

D_loss.backward()

D_opt.step()

if step % n_critic == 0:

# Training Generator

z = to_cuda(torch.randn(batch_size, n_noise))

z_outputs = D(G(z, y), y)

G_loss = criterion(z_outputs, D_labels)

G.zero_grad()

G_loss.backward()

G_opt.step()

if step % 1000 == 0:

print('Epoch: {}/{}, Step: {}, D Loss: {}, G Loss: {}'.format(epoch, max_epoch, step, D_loss.data[0], G_loss.data[0]))

if epoch % 5 == 0:

G.eval()

img = get_sample_image(G)

scipy.misc.imsave('{}_epoch_{}_type1.jpg'.format(MODEL_NAME, epoch), img)

G.train()

def save_checkpoint(state, file_name='checkpoint.pth.tar'):

torch.save(state, file_name)

# Saving params.

# torch.save(D.state_dict(), 'D_c.pkl')

# torch.save(G.state_dict(), 'G_c.pkl')

save_checkpoint({'epoch': epoch + 1, 'state_dict':D.state_dict(), 'optimizer' : D_opt.state_dict()}, 'D_dc.pth.tar')

save_checkpoint({'epoch': epoch + 1, 'state_dict':G.state_dict(), 'optimizer' : G_opt.state_dict()}, 'G_dc.pth.tar')