Scraping StackOverflow¶

In this project, we will be scraping StackOverflow website and:

- Goal 1: List Most mentioned/tagged languages along with their tag counts

- Goal 2: List Most voted questions along with with their attributes (votes, summary, tags, number of votes, answers and views)

We will divide our project into the above mentioned two goals.

Before starting our project, we need to understand few basics regarding Web Scraping.

Web Scraping Basics¶

Before starting our project, we need to understand few basics regarding Web Pages and Web Scraping.

When we visit a page, our browser makes a request to a web server. Most of the times, this request is a GET Request. Our web browser then receives a bunch of files, typically (HTML, CSS, JavaScript). HTML contains the content, CSS & JavaScript tell browser how to render the webpage. So, we will be mainly interested in the HTML file.

HTML:¶

HTML has elements called tags, which help in differentiating different parts of a HTML Document. Different types of tags are:

html- all content is inside this taghead- contains title and other related filesbody- contains main cotent to be displayed on the webpagediv- division or area of a pagep- paragrapha- links

We will get our content inside the body tag and use p and a tags for getting paragraphs and links.

HTML also has class and id properties. These properties give HTML elements names and makes it easier for us to refer to a particular element. Class can be shared among multiple elements and an element can have moer then one class. Whereas, id needs to be unique for a given element and can be used just once in the document.

Requests¶

The requests module in python lets us easily download pages from the web.

We can request contents of a webpage by using requests.get(), passing in target link as a parameter. This will give us a response object.

Beautiful Soup¶

Beautiful Soup library helps us parse contents of the webpage in an easy to use manner. It provides us with some very useful methods and attributes like:

find(),select_one()- retuns first occurence of the tag object that matches our filterfind_all(),select()- retuns a list of the tag object that matches our filterchildren- provides list of direct nested tags of the given paramter/tag

These methods help us in extracting specific portions from the webpage.

*Tip: When Scraping, we try to find common properties shared among target objects. This helps us in extracting all of them in just one or two commands.*

For e.g. We want to scrap points of teams on a league table. In such a scenario, we can go to each element and extract its value. Or else, we can find a common thread (like same class, same parent + same element type) between all the points. And then, pass that common thread as an argument to BeautifulSoup. BeautifulSoup will then extract and return the elements to us.

Goal 1: Listing most tagged Languages¶

Now that we know the basics of Web Scraping, we will move towards our first goal.

In Goal 1, we have to list most tagged Languages along with their Tag Count. First, lets make a list of steps to follow:

Let's import all the required libraries and packages

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

import requests # Getting Webpage content

from bs4 import BeautifulSoup as bs # Scraping webpages

import matplotlib.pyplot as plt # Visualization

import matplotlib.style as style # For styling plots

from matplotlib import pyplot as mp # For Saving plots as images

# For displaying plots in jupyter notebook

%matplotlib inline

style.use('fivethirtyeight') # matplotlib Style

Downloading Tags page from StackOverflow¶

We will download the tags page from stackoverflow, where it has all the languages listed with their tag count.

# Using requests module for downloading webpage content

response = requests.get('https://stackoverflow.com/tags')

# Getting status of the request

# 200 status code means our request was successful

# 404 status code means that the resource you were looking for was not found

response.status_code

200

# Parsing html data using BeautifulSoup

soup = bs(response.content, 'html.parser')

# body

body = soup.find('body')

# printing the object type of body

type(body)

bs4.element.Tag

Extract Top Languages¶

In order to acheive this, we need to understand HTML structure of the document that we have. And then, narrow down to our element of interest.

One way of doing this would be manually searching the webpage (hint: print body variable from above and search through it).

Second method, is to use the browser's Developr Tools.

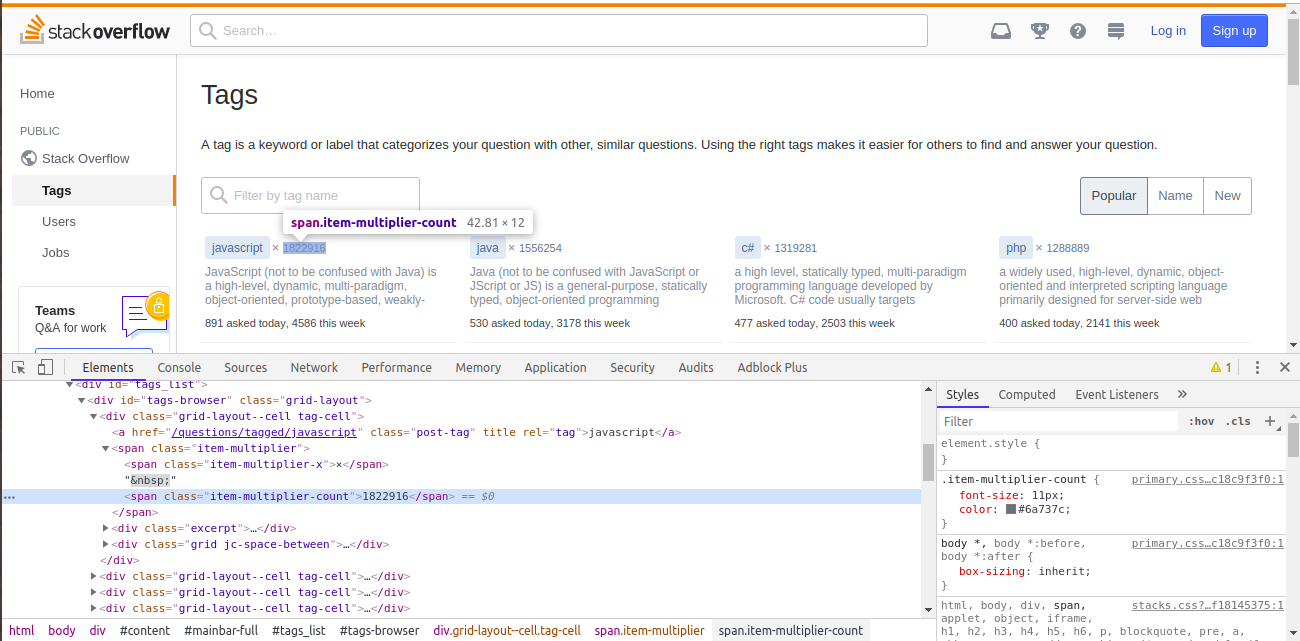

We will use this second one. On Chrome, open tags page and right-click on the language name (shown in top left) and choose Inspect.

We can see that the Language name is inside a tag, which in turn is inside a lot of div tags. This seems, difficult to extract. Here, the class and id, we spoke about earlier comes to our rescue.

If we look more closely in the image above, we can see that the a tag has a class of post-tag. Using this class along with a tag, we can extract all the language links in a list.

lang_tags = body.find_all('a', class_='post-tag')

lang_tags[:2]

[<a class="post-tag" href="/questions/tagged/javascript" rel="tag" title="show questions tagged 'javascript'">javascript</a>, <a class="post-tag" href="/questions/tagged/java" rel="tag" title="show questions tagged 'java'">java</a>]

Next, using list comprehension, we will extract all the language names.

languages = [i.text for i in lang_tags]

languages[:5]

['javascript', 'java', 'c#', 'php', 'android']

Extract Tag Counts¶

To extract tag counts, we will follow the same process.

On Chrome, open tags page and right-click on the tag count, next to the top language (shown in top left) and choose Inspect.

Here, the tag counts are inside span tag, with a class of item-multiplier-count. Using this class along with span tag, we will extract all the tag count spans in a list.

tag_counts = body.find_all('span', class_='item-multiplier-count')

tag_counts[:2]

[<span class="item-multiplier-count">1824582</span>, <span class="item-multiplier-count">1557391</span>]

Next, using list comprehension, we will extract all the Tag Counts.

no_of_tags = [int(i.text) for i in tag_counts]

no_of_tags[:5]

[1824582, 1557391, 1320273, 1289585, 1200130]

Put all code together and join the two lists¶

We will use Pandas.DataFrame to put the two lists together.

In order to make a DataFrame, we need to pass both the lists (in dictionary form) as argument to our function.

# Function to check, if there is any error in length of the extracted bs4 object

def error_checking(list_name, length):

if (len(list_name) != length):

print("Error in {} parsing, length not equal to {}!!!".format(list_name, length))

return -1

else:

pass

def get_top_languages(url):

# Using requests module for downloading webpage content

response = requests.get(url)

# Parsing html data using BeautifulSoup

soup = bs(response.content, 'html.parser')

body = soup.find('body')

# Extracting Top Langauges

lang_tags = body.find_all('a', class_='post-tag')

error_checking(lang_tags, 36) # Error Checking

languages = [i.text for i in lang_tags] # Languages List

# Extracting Tag Counts

tag_counts = body.find_all('span', class_='item-multiplier-count')

error_checking(tag_counts, 36) # Error Checking

no_of_tags = [int(i.text) for i in tag_counts] # Tag Counts List

# Putting the two lists together

df = pd.DataFrame({'Languages':languages,

'Tag Count':no_of_tags})

return df

URL1 = 'https://stackoverflow.com/tags'

df = get_top_languages(URL1)

df.head()

| Languages | Tag Count | |

|---|---|---|

| 0 | javascript | 1824582 |

| 1 | java | 1557391 |

| 2 | c# | 1320273 |

| 3 | php | 1289585 |

| 4 | android | 1200130 |

Now, we will plot the Top Languages along with their Tag Counts.

plt.figure(figsize=(8, 3))

plt.bar(height=df['Tag Count'][:10], x=df['Languages'][:10])

plt.xticks(rotation=90)

plt.xlabel('Languages')

plt.ylabel('Tag Counts')

plt.savefig('lang_vs_tag_counts.png', bbox_inches='tight')

plt.show()

Goal 2: Listing most voted Questions¶

Now that we have collected data using web scraping one time, it won't be difficult the next time.

In Goal 2 part, we have to list questions with most votes along with their attributes, like:

- Summary

- Tags

- Number of Votes

- Number of Answers

- Number of Views

I would suggest giving it a try on your own, then come here to see my solution.

Similar to previous step, we will make a list of steps to act upon:

Downloading Questions page from StackOverflow¶

We will download the questions page from stackoverflow, where it has all the top voted questions listed.

Here, I've appended ?sort=votes&pagesize=50 to the end of the defualt questions URL, to get a list of top 50 questions.

# Using requests module for downloading webpage content

response1 = requests.get('https://stackoverflow.com/questions?sort=votes&pagesize=50')

# Getting status of the request

# 200 status code means our request was successful

# 404 status code means that the resource you were looking for was not found

response1.status_code

200

A different Scraping Function¶

In this section, we will use select() and select_one() to return BeautifulSoup objects as per our requierment. While find_all uses tags, select uses CSS Selectors in the filter. I personally tend to use the latter one more.

For example:

p a— finds all a tags inside of a p tag.

soup.select('p a')

div.outer-text— finds all div tags with a class of outer-text.div#first— finds all div tags with an id of first.body p.outer-text— finds any p tags with a class of outer-text inside of a body tag.

# Parsing html data using BeautifulSoup

soup1 = bs(response1.content, 'html.parser')

# body

body1 = soup1.select_one('body')

# printing the object type of body

type(body1)

bs4.element.Tag

Extract Top Questions¶

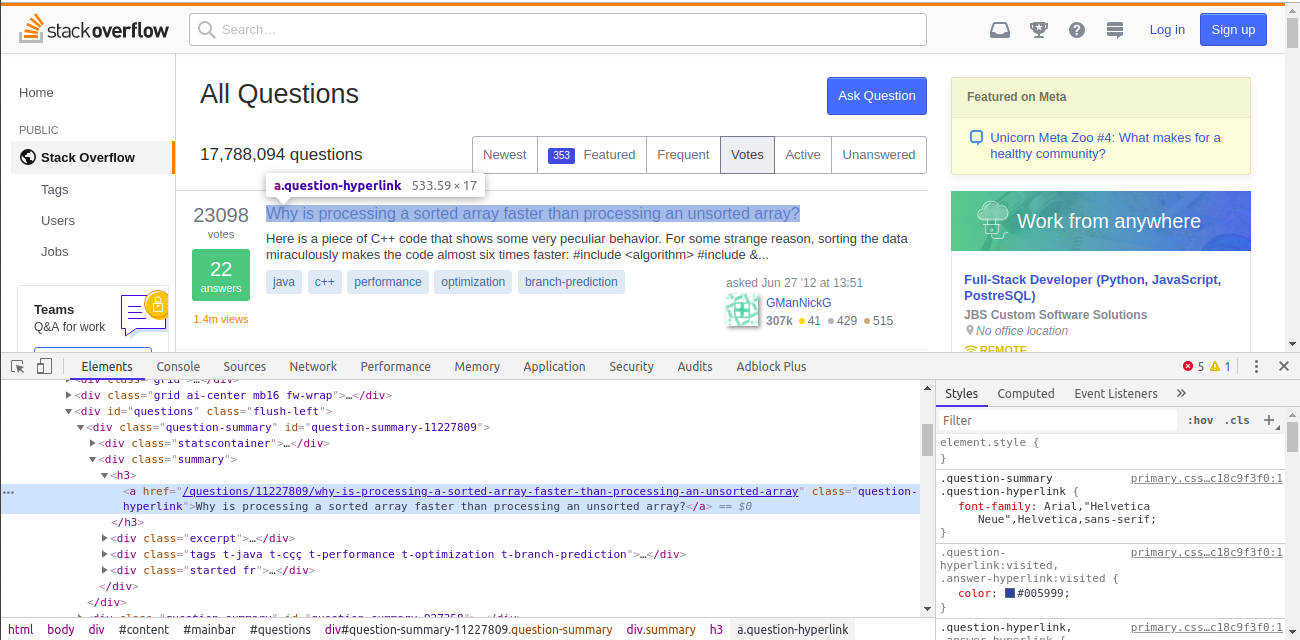

On Chrome, open questions page and right-click on the top question and choose Inspect.

We can see that the question is inside a tag, which has a class of question-hyperlink.

Taking cue from our previous Goal, we can use this class along with a tag, to extract all the question links in a list. However, there are more question hyperlinks in sidebar which will also be extracted in this case. To avoid this scenario, we can combine a tag, question-hyperlink class with their parent h3 tag. This will give us exactly 50 Tags.

question_links = body1.select("h3 a.question-hyperlink")

question_links[:2]

[<a class="question-hyperlink" href="/questions/11227809/why-is-processing-a-sorted-array-faster-than-processing-an-unsorted-array">Why is processing a sorted array faster than processing an unsorted array?</a>, <a class="question-hyperlink" href="/questions/927358/how-do-i-undo-the-most-recent-local-commits-in-git">How do I undo the most recent local commits in Git?</a>]

List comprehension, to extract all the questions.

questions = [i.text for i in question_links]

questions[:2]

['Why is processing a sorted array faster than processing an unsorted array?', 'How do I undo the most recent local commits in Git?']

Extract Summary¶

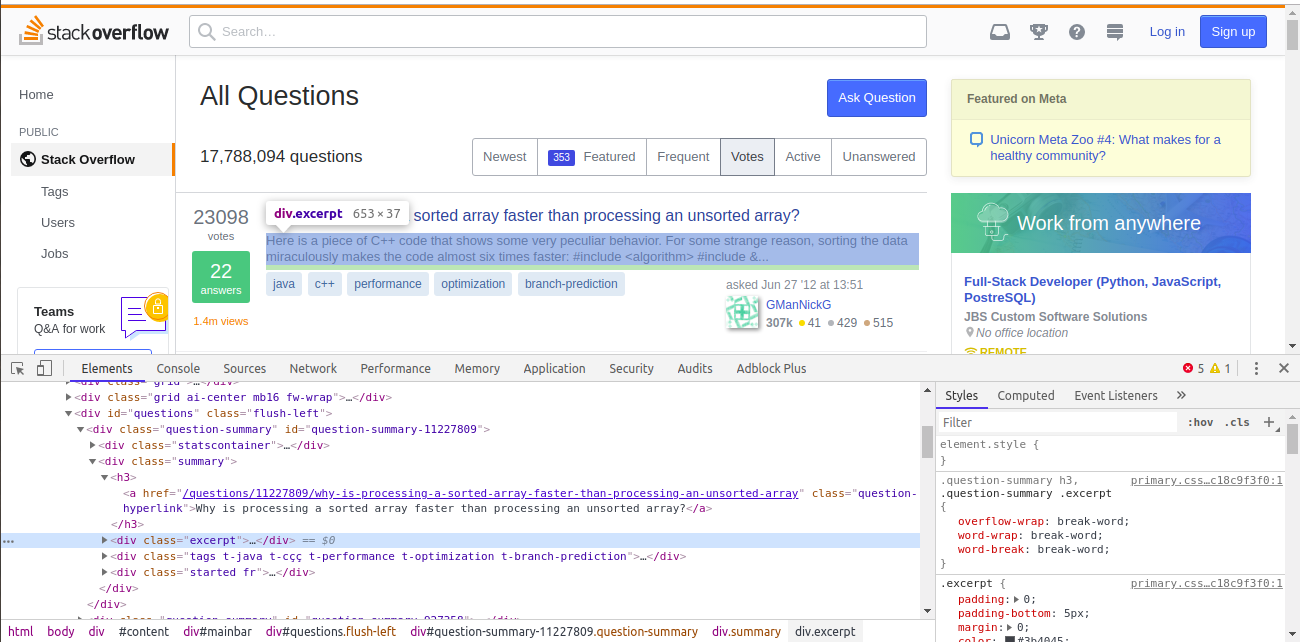

On Chrome, open questions page and right-click on summary of the top question and choose Inspect.

We can see that the question is inside div tag, which has a class of excerpt. Using this class along with div tag, we can extract all the question links in a list.

summary_divs = body1.select("div.excerpt")

print(summary_divs[0])

<div class="excerpt">

Here is a piece of C++ code that shows some very peculiar behavior. For some strange reason, sorting the data miraculously makes the code almost six times faster:

#include <algorithm>

#include &...

</div>

List comprehension, to extract all the questions.

Here, we will also use strip() method on each div's text. This is to remove both leading and trailing unwanted characters from a string.

summaries = [i.text.strip() for i in summary_divs]

summaries[0]

'Here is a piece of C++ code that shows some very peculiar behavior. For some strange reason, sorting the data miraculously makes the code almost six times faster:\n\n#include <algorithm>\n#include &...'

Extract Tags¶

On Chrome, open questions page and right-click on summary of the top question and choose Inspect.

Extracting tags per question is the most complex task in this post. Here, we cannot find unique class or id for each tag, and there are multiple tags per question that we n eed to store.

To extract tags per question, we will follow a multi-step process:

- As shown in figure, individual tags are in a third layer, under two nested div tags. With the upper div tag, only having unique class (

summary).- First, we will extract div with

summaryclass. - Now notice our target div is third child overall and second

divchild of the above extracted object. Here, we can usenth-of-type()method to extract this 2nddivchild. Usage of this method is very easy and few exmaples can be found here. This method will extract the 2nddivchild directly, without extractingsummary divfirst.

- First, we will extract div with

tags_divs = body1.select("div.summary > div:nth-of-type(2)")

tags_divs[0]

<div class="tags t-java t-cçç t-performance t-optimization t-branch-prediction"> <a class="post-tag" href="/questions/tagged/java" rel="tag" title="show questions tagged 'java'">java</a> <a class="post-tag" href="/questions/tagged/c%2b%2b" rel="tag" title="show questions tagged 'c++'">c++</a> <a class="post-tag" href="/questions/tagged/performance" rel="tag" title="show questions tagged 'performance'">performance</a> <a class="post-tag" href="/questions/tagged/optimization" rel="tag" title="show questions tagged 'optimization'">optimization</a> <a class="post-tag" href="/questions/tagged/branch-prediction" rel="tag" title="show questions tagged 'branch-prediction'">branch-prediction</a> </div>

- Now, we can use list comprehension to extract

atags in a list, grouped per question.

a_tags_list = [i.select('a') for i in tags_divs]

# Printing first question's a tags

a_tags_list[0]

[<a class="post-tag" href="/questions/tagged/java" rel="tag" title="show questions tagged 'java'">java</a>, <a class="post-tag" href="/questions/tagged/c%2b%2b" rel="tag" title="show questions tagged 'c++'">c++</a>, <a class="post-tag" href="/questions/tagged/performance" rel="tag" title="show questions tagged 'performance'">performance</a>, <a class="post-tag" href="/questions/tagged/optimization" rel="tag" title="show questions tagged 'optimization'">optimization</a>, <a class="post-tag" href="/questions/tagged/branch-prediction" rel="tag" title="show questions tagged 'branch-prediction'">branch-prediction</a>]

- Now we will run a for loop for going through each question and use list comprehension inside it, to extract the tags names.

tags = []

for a_group in a_tags_list:

tags.append([a.text for a in a_group])

tags[0]

['java', 'c++', 'performance', 'optimization', 'branch-prediction']

Extract Number of votes, answers and views¶

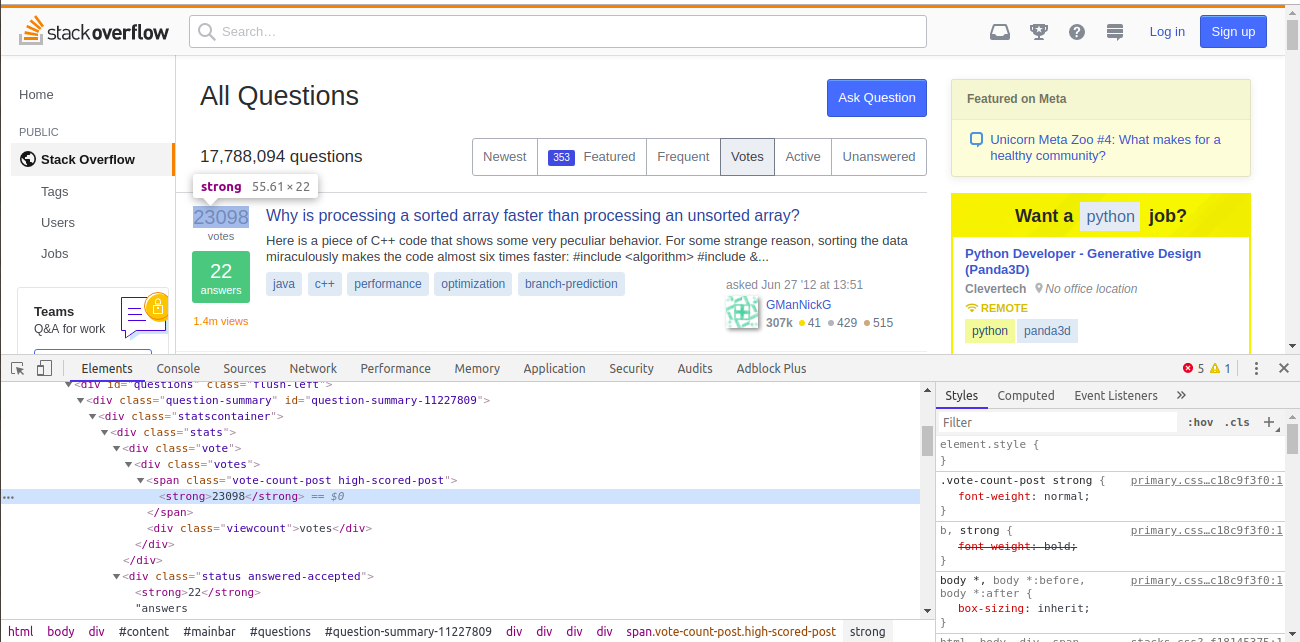

On Chrome, open questions page and inspect vote, answers and views for the topmost answer.

No. of Votes¶

- They can be found by using

spantag along withvote-count-postclass and nestedstrongtags

vote_spans = body1.select("span.vote-count-post strong")

print(vote_spans[:2])

[<strong>23111</strong>, <strong>19690</strong>]

List comprehension, to extract vote counts.

no_of_votes = [int(i.text) for i in vote_spans]

no_of_votes[:5]

[23111, 19690, 15321, 11030, 9718]

I'm not going to post images to extract last two attributes

No. of Answers¶

- They can be found by using

divtag along withstatusclass and nestedstrongtags. Here, we don't useanswered-acceptedbecause its not common among all questions, few of them (whose answer are not accepted) have the class -answered.

answer_divs = body1.select("div.status strong")

answer_divs[:2]

[<strong>22</strong>, <strong>78</strong>]

List comprehension, to extract answer counts.

no_of_answers = [int(i.text) for i in answer_divs]

no_of_answers[:5]

[22, 78, 38, 40, 34]

No. of Views¶

- For views, we can see two options. One is short form in number of millions and other is full number of views. We will extract the full version.

- They can be found by using

divtag along withsupernovaclass. Then we need to clean the string and convert it into integer format.

div_views = body1.select("div.supernova")

div_views[0]

<div class="views supernova" title="1,362,267 views">

1.4m views

</div>

List comprehension, to extract vote counts.

no_of_views = [i['title'] for i in div_views]

no_of_views = [i[:-6].replace(',', '') for i in no_of_views]

no_of_views = [int(i) for i in no_of_views]

no_of_views[:5]

[1362267, 7932952, 7011126, 2550002, 2490787]

def get_top_questions(url, question_count):

# WARNING: Only enter one of these 3 values [15, 30, 50].

# Since, stackoverflow, doesn't display any other size questions list

url = url + "?sort=votes&pagesize={}".format(question_count)

# Using requests module for downloading webpage content

response = requests.get(url)

# Parsing html data using BeautifulSoup

soup = bs(response.content, 'html.parser')

body = soup.find('body')

# Extracting Top Questions

question_links = body1.select("h3 a.question-hyperlink")

error_checking(question_links, question_count) # Error Checking

questions = [i.text for i in question_links] # questions list

# Extracting Summary

summary_divs = body1.select("div.excerpt")

error_checking(summary_divs, question_count) # Error Checking

summaries = [i.text.strip() for i in summary_divs] # summaries list

# Extracting Tags

tags_divs = body1.select("div.summary > div:nth-of-type(2)")

error_checking(tags_divs, question_count) # Error Checking

a_tags_list = [i.select('a') for i in tags_divs] # tag links

tags = []

for a_group in a_tags_list:

tags.append([a.text for a in a_group]) # tags list

# Extracting Number of votes

vote_spans = body1.select("span.vote-count-post strong")

error_checking(vote_spans, question_count) # Error Checking

no_of_votes = [int(i.text) for i in vote_spans] # votes list

# Extracting Number of answers

answer_divs = body1.select("div.status strong")

error_checking(answer_divs, question_count) # Error Checking

no_of_answers = [int(i.text) for i in answer_divs] # answers list

# Extracting Number of views

div_views = body1.select("div.supernova")

error_checking(div_views, question_count) # Error Checking

no_of_views = [i['title'] for i in div_views]

no_of_views = [i[:-6].replace(',', '') for i in no_of_views]

no_of_views = [int(i) for i in no_of_views] # views list

# Putting all of them together

df = pd.DataFrame({'question': questions,

'summary': summaries,

'tags': tags,

'no_of_votes': no_of_votes,

'no_of_answers': no_of_answers,

'no_of_views': no_of_views})

return df

URL2 = 'https://stackoverflow.com/questions'

df1 = get_top_questions(URL2, 50)

df1.head()

| question | summary | tags | no_of_votes | no_of_answers | no_of_views | |

|---|---|---|---|---|---|---|

| 0 | Why is processing a sorted array faster than p... | Here is a piece of C++ code that shows some ve... | [java, c++, performance, optimization, branch-... | 23111 | 22 | 1362267 |

| 1 | How do I undo the most recent local commits in... | I accidentally committed the wrong files to Gi... | [git, version-control, git-commit, undo] | 19690 | 78 | 7932952 |

| 2 | How do I delete a Git branch locally and remot... | I want to delete a branch both locally and rem... | [git, git-branch, git-remote] | 15321 | 38 | 7011126 |

| 3 | What is the difference between 'git pull' and ... | Moderator Note: Given that this question has a... | [git, git-pull, git-fetch] | 11030 | 40 | 2550002 |

| 4 | What is the correct JSON content type? | I've been messing around with JSON for some ti... | [json, http-headers, content-type] | 9718 | 34 | 2490787 |

f, ax = plt.subplots(3, 1, figsize=(12, 8))

ax[0].bar(df1.index, df1.no_of_votes)

ax[0].set_ylabel('No of Votes')

ax[1].bar(df1.index, df1.no_of_views)

ax[1].set_ylabel('No of Views')

ax[2].bar(df1.index, df1.no_of_answers)

ax[2].set_ylabel('No of Answers')

plt.xlabel('Question Number')

plt.savefig('votes_vs_views_vs_answers.png', bbox_inches='tight')

plt.show()

Here, we may observe that there is no collinearity between the votes, views and answers related to a question.

Useful Resources: