Harvesting data from the web: APIs

A first API

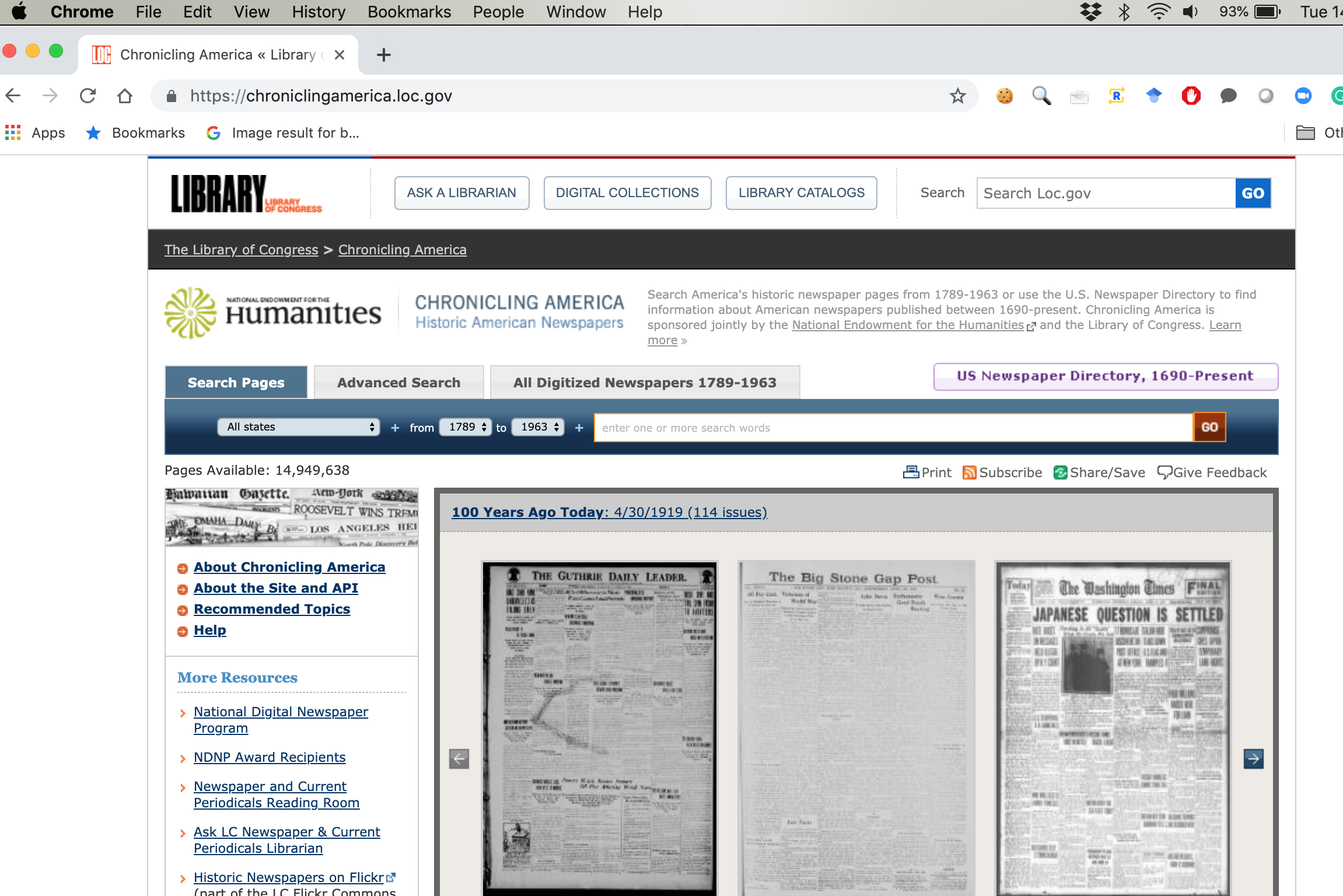

Chronicling America is a joint project of the National Endowment for the Humanities and the Library of Congress.

Search for articles that mention "lynching".

Your turn

Look at the URL. What happens if you change the word 'lynching' to 'murder'?

What happens to the URL when you go to the second page? Can you get to page 251?

Sample answer url

https://chroniclingamerica.loc.gov/search/pages/results/?andtext=murder&page=251&sort=relevance
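To see how a search URL like this one is put together, it can help to split it into its parts. The sketch below uses only Python's standard library and the sample answer URL above; nothing is sent over the network.

```python
from urllib.parse import urlsplit, parse_qs

url = ('https://chroniclingamerica.loc.gov/search/pages/results/'
       '?andtext=murder&page=251&sort=relevance')

# Split the URL into scheme, host, path and query string
parts = urlsplit(url)

# Turn the query string into a dictionary; each value is a list
# because a parameter may appear more than once in a URL
query = parse_qs(parts.query)

print(parts.netloc)
print(parts.path)
print(query)
```

Everything after the ? is a set of name=value pairs joined by &, which is why changing the search word or the page number only requires editing one value.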

What if we append &format=json to the end of the search URL?

http://chroniclingamerica.loc.gov/search/pages/results/?andtext=lynching&format=json

requests is a useful and commonly used HTTP library for Python. It is not part of the default installation, but it is included with the Anaconda Python distribution.

import requests

It would be possible to pass the API URL and parameters directly to the requests command, but the most likely scenario involves calling requests repeatedly as part of a loop, since a single search returns less than 1% of the results. For that reason, I store the strings first.

base_url = 'http://chroniclingamerica.loc.gov/search/pages/results/'

parameters = '?andtext=lynching&format=json'

url = base_url + parameters

print(url)

requests.get() is used for accessing both websites and APIs. The command can be modified by several arguments, but at a minimum, it requires the URL.

r = requests.get(base_url + parameters)

r is a requests Response object. Calling its .json() method parses any JSON returned by the server into Python objects.

r.json()

search_json = r.json()
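When the same request is made many times, it can be worth wrapping it in a small helper that sets a timeout and fails loudly on an HTTP error. The following is a minimal sketch, not part of the original notebook: fetch_json is a hypothetical name, the 30-second timeout is an arbitrary choice, and the get argument exists only so the function can be tried without a network connection.

```python
import requests


def fetch_json(url, params=None, timeout=30, get=requests.get):
    """Request a URL and return the parsed JSON.

    fetch_json is a hypothetical helper; `get` defaults to requests.get
    and can be swapped for a stand-in when working offline.
    """
    response = get(url, params=params, timeout=timeout)
    # Raise an exception for 4xx/5xx responses instead of
    # silently trying to parse an error page
    response.raise_for_status()
    return response.json()
```

With this helper, the two steps above could be written as search_json = fetch_json(base_url + parameters).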

Parsed JSON is a dictionary-like object, in that it has keys (think variable names) and values. .keys() returns a view of the keys.

search_json.keys()

You can return the value of any key by putting the key name in brackets.

search_json['totalItems']
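The same key lookups can be tried without calling the API. The sketch below parses a small, made-up JSON string that only mimics the shape of the response (the titles and counts are invented), and also shows .get(), which returns None instead of raising an error when a key is missing.

```python
import json

# A made-up response that imitates the structure of the real one
sample_text = ('{"totalItems": 2, "items": ['
               '{"title": "Example Gazette"}, {"title": "Example Herald"}]}')

# json.loads turns a JSON string into Python dictionaries and lists
sample = json.loads(sample_text)

print(type(sample))
print(list(sample.keys()))
print(sample['totalItems'])

# Bracket lookup raises KeyError for a missing key; .get() does not
print(sample.get('missing_key'))
```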

Sample answer code

search_json['items']

As is often the case with results from an API, most of the keys and values are metadata about either the search or what is being returned. These are useful for knowing if the search is returning what you want, which is particularly important when you are making multiple calls to the API.

The data I'm interested in is all in items.

type(search_json['items'])

len(search_json['items'])

items is a list with 20 items.

type(search_json['items'][3])

Each of the 20 items in the list is a dictionary.

first_item = search_json['items'][0]

first_item.keys()

Sample answer code

print(first_item['title'])

While a standard CSV file has a header row that describes the contents of each column, a JSON file has keys identifying the values found in each case. Importantly, these keys need not be the same for each item. Additionally, values don't have to be numbers or strings, but could be lists or dictionaries. For example, this JSON could have included a newspaper key that was a dictionary with all the metadata about the newspaper in which the article and issue were published, an article key that included the article-specific information as another dictionary, and a text key whose value was a string with the article text.
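The hypothetical layout described above can be sketched with two invented records. The key names newspaper, article and text follow the paragraph; all values are made up, and the second record deliberately lacks some keys to show how .get() copes with items that do not share the same structure.

```python
# Invented records using the hypothetical nested layout
articles = [
    {'newspaper': {'title': 'Example Gazette', 'state': 'Ohio'},
     'article': {'page': 1, 'subjects': ['crime', 'courts']},
     'text': 'First line.\nSecond line.'},
    {'newspaper': {'title': 'Example Herald'},
     'text': 'Only text here.'},
]

for item in articles:
    # Nested dictionaries are reached by chaining brackets
    title = item['newspaper']['title']
    # .get() supplies a default when a key is absent
    state = item['newspaper'].get('state', 'unknown')
    subjects = item.get('article', {}).get('subjects', [])
    print(title, state, subjects)

# The full set of top-level keys used by any item
all_keys = sorted(set().union(*(item.keys() for item in articles)))
print(all_keys)
```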

As before, we can examine the contents of a particular item, such as the OCR text of the page.

first_item['ocr_eng']

print will make things look a little cleaner, converting, for example, \n to a line break.

print(first_item['ocr_eng'])

print(first_item['ocr_eng'][:200])
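The difference between displaying a string and printing it can be seen on a short, invented string, along with the slicing used above to keep only the first characters.

```python
# An invented string with embedded line breaks
sample_text = 'THE EXAMPLE GAZETTE\nVol. 1\nNo. 1'

# repr() shows the string as Python stores it, with \n visible
print(repr(sample_text))

# print() renders each \n as an actual line break
print(sample_text)

# Slicing keeps the first N characters; splitlines() splits on line breaks
first_chars = sample_text[:11]
first_line = sample_text.splitlines()[0]
print(first_chars)
print(first_line)
```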

The easiest way to view or analyze this data is to convert it to a dataset-like structure. While Python does not have a built-in dataframe type, the popular pandas library does. By convention, it is imported as pd.

import pandas as pd

# Make sure all columns are displayed

pd.set_option("display.max_columns",101)

pandas is pretty smart about importing different JSON-type objects and converting them to dataframes with its .DataFrame() constructor.

df = pd.DataFrame(search_json['items'])

df.head(6)

Note that I converted search_json['items'] to a dataframe and not the entire JSON file. This is because I wanted each row to be an article.
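A small offline sketch of the same conversion, using invented records, shows what pandas does when the dictionaries in the list do not all have the same keys: each dictionary becomes a row, each key becomes a column, and missing values are filled with NaN.

```python
import pandas as pd

# Invented data that imitates the shape of the search results
sample_json = {
    'totalItems': 3,
    'items': [
        {'title': 'Example Gazette', 'state': 'Ohio', 'date': '19000101'},
        {'title': 'Example Herald', 'state': 'Texas'},
        {'title': 'Example Tribune', 'date': '19051231'},
    ],
}

# Pass only the list of items, so that each item becomes one row
sample_df = pd.DataFrame(sample_json['items'])

print(sample_df.shape)
print(sample_df)

# Count the missing values in each column
print(sample_df.isna().sum())
```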

If this dataframe contained all the items that you were looking for, it would be easy to save this to a csv file for storage and later analysis.

df.to_csv('lynching_articles.csv', index = False)

On a Mac, you can use the head command to preview the first few lines of the file.

!head lynching_articles.csv

df.to_json('lynching_articles.json', orient='records')

!head lynching_articles.json
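The effect of index = False is easiest to see on a tiny, invented dataframe. When no filename is given, to_csv() returns the CSV text as a string, so the sketch below writes nothing to disk.

```python
import io

import pandas as pd

# A tiny invented dataframe
small_df = pd.DataFrame({'title': ['Example Gazette', 'Example Herald'],
                         'page': [1, 4]})

# With no path, to_csv() returns the CSV text instead of writing a file
with_index = small_df.to_csv()
without_index = small_df.to_csv(index=False)

# The default output starts with an unnamed column holding the row index
print(with_index.splitlines()[0])
print(without_index.splitlines()[0])

# Reading the text back recovers the original columns
round_trip = pd.read_csv(io.StringIO(without_index))
print(round_trip)
```

Leaving the index out avoids an extra unnamed column appearing when the file is read back in later.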

Your Turn

Conduct your own search of the API. Store the results in a dataframe.

Sample answer code

search_word = 'robbery'

base_url = 'http://chroniclingamerica.loc.gov/search/pages/results/'

parameters = '?andtext=' + search_word + '&format=json'

r = requests.get(base_url + parameters)

search_json = r.json()

df = pd.DataFrame(search_json['items'])
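Because each call returns only 20 items, collecting every result means requesting one page at a time. The sketch below only builds the URLs for such a loop. It assumes that the page parameter seen in the browser URL can be combined with format=json, and the total of 45 items is made up; page_urls is a hypothetical helper name.

```python
import math
from urllib.parse import urlencode

base_url = 'http://chroniclingamerica.loc.gov/search/pages/results/'


def page_urls(search_word, total_items, items_per_page=20):
    """Build one search URL per page of results (hypothetical helper)."""
    n_pages = math.ceil(total_items / items_per_page)
    urls = []
    for page in range(1, n_pages + 1):
        # Assumption: page and format=json can be used together
        query = urlencode({'andtext': search_word,
                           'format': 'json',
                           'page': page})
        urls.append(base_url + '?' + query)
    return urls


# 45 is an invented total; in practice it would come from totalItems
urls = page_urls('robbery', total_items=45)
for url in urls:
    print(url)
```

Each URL could then be passed to requests.get(), with the items from every response appended to a single list before building the dataframe. Pausing between calls, for example with time.sleep(), is a common courtesy to the server.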

A different API

r = requests.get('https://api.fbi.gov/wanted/v1/list?pageSize=20&page=1&sort_on=modified')

r.json()

r.json().keys()

r.json()['total']

df = pd.DataFrame(r.json()['items'])

df

Your turn

Conduct your own search of the API. Change the page size to 200. Store the results in a csv file.

Sample answer code

r = requests.get('https://api.fbi.gov/wanted/v1/list?pageSize=200&page=1&sort_on=modified')

mw_df = pd.DataFrame(r.json()['items'])

mw_df.to_csv('mw.csv')

base_url = 'https://api.fbi.gov/wanted/v1/list'

parameters = {'pageSize' : 20}

r = requests.get(base_url,
                 params = parameters)

r.url

pd.DataFrame(r.json()['items'])
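Passing a dictionary through params lets requests assemble the query string. One way to inspect the URL it would build, without sending anything, is to prepare the request by hand. This is a minimal sketch; the search phrase 'train robbery' is an invented example.

```python
import requests

fbi_url = 'https://api.fbi.gov/wanted/v1/list'
fbi_params = {'pageSize': 20, 'sort_on': 'modified'}

# Build the request without sending it, then look at the final URL
prepared = requests.Request('GET', fbi_url, params=fbi_params).prepare()
print(prepared.url)

# Values are URL-encoded automatically, e.g. the space in a phrase
ca_url = 'http://chroniclingamerica.loc.gov/search/pages/results/'
ca_params = {'andtext': 'train robbery', 'format': 'json'}
prepared_ca = requests.Request('GET', ca_url, params=ca_params).prepare()
print(prepared_ca.url)
```

Compared with pasting strings together, the dictionary keeps each parameter separate, which makes it easier to change one value inside a loop.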

Your turn

Set a page parameter to "2" to get the second page of results.

Sample answer code

base_url = 'https://api.fbi.gov/wanted/v1/list'

parameters = {'pageSize' : 20,
              'page' : 2}

r = requests.get(base_url,
                 params = parameters)

pd.DataFrame(r.json()['items'])

In a group

Read about the API on the website. Then create a dataframe that contains crimes that took place in a residence. Run some descriptive statistics on your data.

Sample answer code

base_url = 'https://data.cityofchicago.org/resource/ijzp-q8t2.json'

parameters = {'location_description' : 'RESIDENCE'}

r = requests.get(base_url,
                 params = parameters)

df = pd.DataFrame(r.json())

df['primary_type'].value_counts()

df['arrest'].value_counts()

pd.crosstab(df['primary_type'] ,df['arrest'], normalize='index')
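To make the normalize='index' argument concrete, here is the same crosstab on six invented rows. The column names match the ones used above; the values are made up.

```python
import pandas as pd

# Six invented incidents
toy = pd.DataFrame({
    'primary_type': ['THEFT', 'THEFT', 'THEFT', 'THEFT',
                     'BATTERY', 'BATTERY'],
    'arrest': [False, False, False, True, True, False],
})

# Raw counts of each crime type by arrest status
counts = pd.crosstab(toy['primary_type'], toy['arrest'])

# normalize='index' divides each row by its total,
# so every row sums to 1
shares = pd.crosstab(toy['primary_type'], toy['arrest'],
                     normalize='index')

print(counts)
print(shares)
```

Row proportions make it possible to compare arrest rates across crime types even when some types are far more common than others.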