import numpy as np

import random

import import_ipynb

from q1_softmax import softmax

from q2_gradcheck import gradcheck_naive

from q2_sigmoid import sigmoid, sigmoid_grad

importing Jupyter notebook from q1_softmax.ipynb importing Jupyter notebook from q2_gradcheck.ipynb importing Jupyter notebook from q2_sigmoid.ipynb

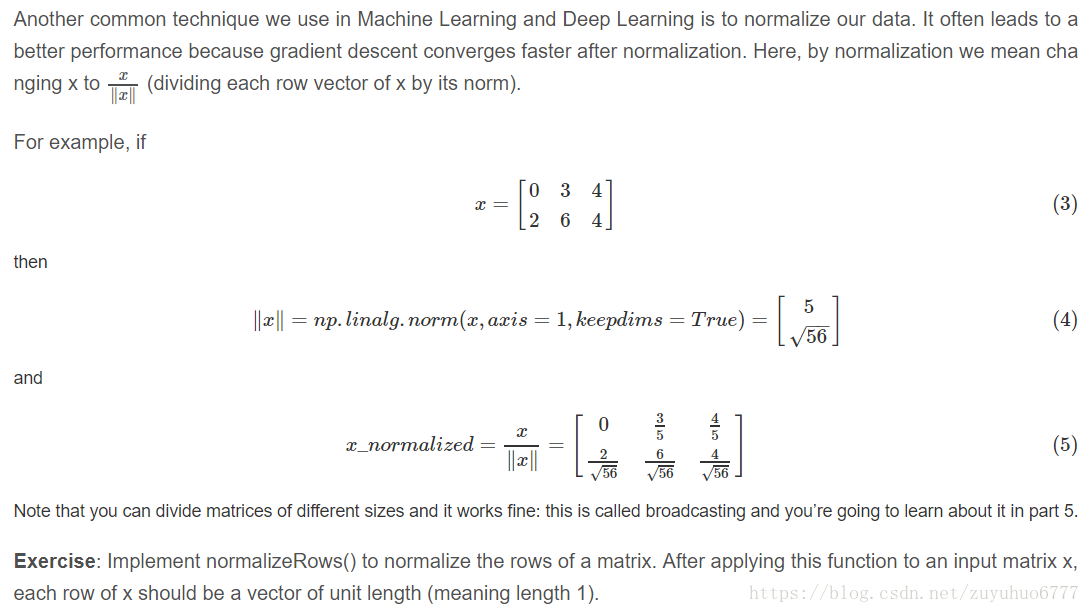

normalizeRows¶

def normalizeRows(x):

""" Row normalization function

Implement a function that normalizes each row of a matrix to have

unit length.

"""

### YOUR CODE HERE

denom = np.linalg.norm(x,axis=1,keepdims=True)

x = x/denom

### END YOUR CODE

return x

def test_normalize_rows():

print("Testing normalizeRows...")

x = normalizeRows(np.array([[3.0,4.0],[1, 2]]))

print(x)

ans = np.array([[0.6,0.8],[0.4472136,0.89442719]])

assert np.allclose(x, ans, rtol=1e-05, atol=1e-06)

print("")

softmaxCostAndGradient¶

- $\begin{align} \hat{\boldsymbol{y}}{o} = p(\boldsymbol{o} \vert \boldsymbol{c}) =\frac{exp(\boldsymbol{u}{0}^{T} \boldsymbol{v}{c})}{\sum\limits{w=1}^{W} exp(\boldsymbol{u}{w}^{T} \boldsymbol{v}{c})}

\end{align}$ 计算得到 Pred

$\frac{\partial J}{\partial{v_c}} =\frac{\partial J}{\partial \boldsymbol{z}} \frac{\partial z}{\partial v_c} = U(\hat{\boldsymbol{y}} -\boldsymbol{y})$

$\frac{\partial J}{\partial{U}} =\frac{\partial J}{\partial \boldsymbol{z}} \frac{\partial z}{\partial U} = v_c(\hat{\boldsymbol{y}} -\boldsymbol{y})^{T}$

def softmaxCostAndGradient(predicted, target, outputVectors, dataset):

""" Softmax cost function for word2vec models

Implement the cost and gradients for one predicted word vector

and one target word vector as a building block for word2vec

models, assuming the softmax prediction function and cross

entropy loss.

Arguments:

predicted -- numpy ndarray, predicted word vector (\hat{v} in

the written component)

target -- integer, the index of the target word

outputVectors -- "output" vectors (as rows) for all tokens

dataset -- needed for negative sampling, unused here.

Return:

cost -- cross entropy cost for the softmax word prediction

gradPred -- the gradient with respect to the predicted word

vector

grad -- the gradient with respect to all the other word

vectors

We will not provide starter code for this function, but feel

free to reference the code you previously wrote for this

assignment!

"""

### YOUR CODE HERE

# target是指公式中下标为o的那个,在skipgram

v_hat = predicted

#注意到每行代表一个词向量

Pred = softmax(np.dot(outputVectors, v_hat))

cost = -np.log(Pred[target])

# \hat{y} - y 的实现

Pred[target] -= 1.

# 关于V的梯度

gradPred = np.dot(outputVectors.T, Pred)

# 关于U的梯度,pred和v_hat都是向量,扩充为矩阵。

grad = np.outer(Pred, v_hat)

### END YOUR CODE

return cost, gradPred, grad

def getNegativeSamples(target, dataset, K):

""" Samples K indexes which are not the target """

indices = [None] * K

for k in range(K):

newidx = dataset.sampleTokenIdx()

while newidx == target:

newidx = dataset.sampleTokenIdx()

indices[k] = newidx

return indices

negSamplingCostAndGradient¶

$\begin{align} \frac{\partial J}{\partial v_c}&=\left(\sigma(u_o^Tv_c)-1\right)u_o-\sum_{k=1}^K\left(\sigma(-u_k^Tv_c)-1\right)u_k\\ \frac{\partial J}{\partial u_o}&=\left(\sigma(u_o^Tv_c)-1\right)v_c\\ \frac{\partial J}{\partial u_k}&=-\left(\sigma(-u_k^Tv_c)-1\right)v_c\\ \end{align}$

def negSamplingCostAndGradient(predicted, target, outputVectors, dataset,

K=10):

""" Negative sampling cost function for word2vec models

Implement the cost and gradients for one predicted word vector

and one target word vector as a building block for word2vec

models, using the negative sampling technique. K is the sample

size.

Note: See test_word2vec below for dataset's initialization.

Arguments/Return Specifications: same as softmaxCostAndGradient

"""

# Sampling of indices is done for you. Do not modify this if you

# wish to match the autograder and receive points!

indices = [target]

indices.extend(getNegativeSamples(target, dataset, K))

### YOUR CODE HERE

grad = np.zeros(outputVectors.shape)

gradPred =np.zeros(predicted.shape)

cost = 0

z = sigmoid(np.dot(outputVectors[target], predicted))

cost -= np.log(z)

grad[target] += predicted * (z - 1.0)

gradPred += outputVectors[target] * (z-1.0)

for k in range(K):

sample = indices[k + 1]

z = sigmoid(np.dot(outputVectors[sample], predicted))

# sigmoid(x) = 1 - sigmoid(-x)

cost -= np.log(1.0 - z)

# sigmoid(-x) -1 = -sigmoid(x)

grad[sample] += predicted * z

gradPred += outputVectors[sample] * z

### END YOUR CODE

return cost, gradPred, grad

Skip-gram¶

- 给定中间词,寻找周围的词。

- 获得cost和梯度,更新词向量。即,把每一个词的梯度,都加起来。

$\begin{align} \frac{J_{skip-gram}(word_{c-m \dots c+m})}{\partial \boldsymbol{U}} &= \sum\limits_{-m \leq j \leq m, j \ne 0} \frac{\partial F(\boldsymbol{w}_{c+j}, \boldsymbol{v}_{c})}{\partial \boldsymbol{U}} \nonumber \\ \frac{J_{skip-gram}(word_{c-m \dots c+m})}{\partial \boldsymbol{v}_{c}} &= \sum\limits_{-m \leq j \leq m, j \ne 0} \frac{\partial F(\boldsymbol{w}_{c+j}, \boldsymbol{v}_{c})}{\partial \boldsymbol{v}_{c}} \nonumber \\ \frac{J_{skip-gram}(word_{c-m \dots c+m})}{\partial \boldsymbol{v}_{j}} &= 0, \forall j\ne c \nonumber\end{align}$

def skipgram(currentWord, C, contextWords, tokens, inputVectors, outputVectors,

dataset, word2vecCostAndGradient=softmaxCostAndGradient):

""" Skip-gram model in word2vec

Implement the skip-gram model in this function.

Arguments:

currrentWord -- a string of the current center word

C -- integer, context size

contextWords -- list of no more than 2*C strings, the context words

tokens -- a dictionary that maps words to their indices in

the word vector list

inputVectors -- "input" word vectors (as rows) for all tokens

outputVectors -- "output" word vectors (as rows) for all tokens

word2vecCostAndGradient -- the cost and gradient function for

a prediction vector given the target

word vectors, could be one of the two

cost functions you implemented above.

Return:

cost -- the cost function value for the skip-gram model

grad -- the gradient with respect to the word vectors

"""

cost = 0.0

gradIn = np.zeros(inputVectors.shape)

gradOut = np.zeros(outputVectors.shape)

### YOUR CODE HERE

cword_index = tokens[currentWord]

vhat = inputVectors[cword_index]

for j in contextWords:

u_index = tokens[j] # target

c_cost, c_grad_in, c_grad_out = \

word2vecCostAndGradient(vhat, u_index, outputVectors, dataset)

cost += c_cost

gradIn[cword_index] += c_grad_in

gradOut += c_grad_out

### END YOUR CODE

return cost, gradIn, gradOut

CBOW¶

- 给定周围的字,发现中间的字。

- 策略:把周围字的词向量加起来(为什么不是平均?),得到推测的中间字向量,与当前的中间字向量做更新。

$\begin{align} \frac{J_{CBOW}(word_{c-m \dots c+m})}{\partial \boldsymbol{U}}& = \frac{\partial F(\boldsymbol{w}_{c}, \hat{\boldsymbol{v}})}{\partial \boldsymbol{U}} \nonumber \\ \frac{J_{CBOW}(word_{c-m \dots c+m})}{\partial \boldsymbol{v}_{j}} &= \frac{\partial F(\boldsymbol{w}_{c}, \hat{\boldsymbol{v}})}{\partial \hat{\boldsymbol{v}}}, \forall (j \ne c) \in \{c-m \dots c+m\} \nonumber \\ \frac{J_{CBOW}(word_{c-m \dots c+m})}{\partial \boldsymbol{v}_{j}} &= 0, \forall (j \ne c) \notin \{c-m \dots c+m\} \nonumber\end{align}$

def cbow(currentWord, C, contextWords, tokens, inputVectors, outputVectors,

dataset, word2vecCostAndGradient=softmaxCostAndGradient):

"""CBOW model in word2vec

Implement the continuous bag-of-words model in this function.

Arguments/Return specifications: same as the skip-gram model

Extra credit: Implementing CBOW is optional, but the gradient

derivations are not. If you decide not to implement CBOW, remove

the NotImplementedError.

"""

cost = 0.0

gradIn = np.zeros(inputVectors.shape)

gradOut = np.zeros(outputVectors.shape)

### YOUR CODE HERE

predicted_indices = [tokens[word] for word in contextWords]

predicted_vectors = inputVectors[predicted_indices]

# 我记得笔记中提到的是做平均,这里待定。

predicted = np.sum(predicted_vectors, axis=0)

target = tokens[currentWord]

cost,gradIn_predicted, gradOut = \

word2vecCostAndGradient(predicted, target, outputVectors, dataset)

#注意下面是加,而不是赋值,因为同一个样本重复出现,山下文中可能出现相同的词汇

for i in predicted_indices:

gradIn[i] += gradIn_predicted

### END YOUR CODE

return cost, gradIn, gradOut

#############################################

# Testing functions below. DO NOT MODIFY! #

#############################################

def word2vec_sgd_wrapper(word2vecModel, tokens, wordVectors, dataset, C,

word2vecCostAndGradient=softmaxCostAndGradient):

batchsize = 50

cost = 0.0

grad = np.zeros(wordVectors.shape)

N = wordVectors.shape[0]

inputVectors = wordVectors[:int(N/2),:]

outputVectors = wordVectors[int(N/2):,:]

for i in range(batchsize):

C1 = random.randint(1,C)

centerword, context = dataset.getRandomContext(C1)

if word2vecModel == skipgram:

denom = 1

else:

denom = 1

c, gin, gout = word2vecModel(

centerword, C1, context, tokens, inputVectors, outputVectors,

dataset, word2vecCostAndGradient)

cost += c / batchsize / denom

grad[:int(N/2), :] += gin / batchsize / denom

grad[int(N/2):, :] += gout / batchsize / denom

return cost, grad

def test_word2vec():

""" Interface to the dataset for negative sampling """

dataset = type('dummy', (), {})()

def dummySampleTokenIdx():

return random.randint(0, 4)

def getRandomContext(C):

tokens = ["a", "b", "c", "d", "e"]

return tokens[random.randint(0,4)], \

[tokens[random.randint(0,4)] for i in range(2*C)]

dataset.sampleTokenIdx = dummySampleTokenIdx

dataset.getRandomContext = getRandomContext

random.seed(31415)

np.random.seed(9265)

dummy_vectors = normalizeRows(np.random.randn(10,3))

dummy_tokens = dict([("a",0), ("b",1), ("c",2),("d",3),("e",4)])

print ("==== Gradient check for skip-gram ====")

gradcheck_naive(lambda vec: word2vec_sgd_wrapper(

skipgram, dummy_tokens, vec, dataset, 5, softmaxCostAndGradient),

dummy_vectors)

gradcheck_naive(lambda vec: word2vec_sgd_wrapper(

skipgram, dummy_tokens, vec, dataset, 5, negSamplingCostAndGradient),

dummy_vectors)

print ("\n==== Gradient check for CBOW ====")

gradcheck_naive(lambda vec: word2vec_sgd_wrapper(

cbow, dummy_tokens, vec, dataset, 5, softmaxCostAndGradient),

dummy_vectors)

gradcheck_naive(lambda vec: word2vec_sgd_wrapper(

cbow, dummy_tokens, vec, dataset, 5, negSamplingCostAndGradient),

dummy_vectors)

print ("\n=== Results ===")

print (skipgram("c", 3, ["a", "b", "e", "d", "b", "c"],

dummy_tokens, dummy_vectors[:5,:], dummy_vectors[5:,:], dataset))

print (skipgram("c", 1, ["a", "b"],

dummy_tokens, dummy_vectors[:5,:], dummy_vectors[5:,:], dataset,

negSamplingCostAndGradient))

print (cbow("a", 2, ["a", "b", "c", "a"],

dummy_tokens, dummy_vectors[:5,:], dummy_vectors[5:,:], dataset))

print (cbow("a", 2, ["a", "b", "a", "c"],

dummy_tokens, dummy_vectors[:5,:], dummy_vectors[5:,:], dataset,

negSamplingCostAndGradient))

if __name__ == "__main__":

test_normalize_rows()

test_word2vec()

Testing normalizeRows...

[[0.6 0.8 ]

[0.4472136 0.89442719]]

==== Gradient check for skip-gram ====

Gradient check passed!

Gradient check passed!

==== Gradient check for CBOW ====

Gradient check passed!

Gradient check passed!

=== Results ===

(11.16610900153398, array([[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[-1.26947339, -1.36873189, 2.45158957],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ]]), array([[-0.41045956, 0.18834851, 1.43272264],

[ 0.38202831, -0.17530219, -1.33348241],

[ 0.07009355, -0.03216399, -0.24466386],

[ 0.09472154, -0.04346509, -0.33062865],

[-0.13638384, 0.06258276, 0.47605228]]))

(14.093692760899629, array([[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ],

[-3.86802836, -1.12713967, -1.52668625],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ]]), array([[-0.11265089, 0.05169237, 0.39321163],

[-0.22716495, 0.10423969, 0.79292674],

[-0.79674766, 0.36560539, 2.78107395],

[-0.31602611, 0.14501561, 1.10309954],

[-0.80620296, 0.36994417, 2.81407799]]))

(0.7989958010906648, array([[ 0.23330542, -0.51643128, -0.8281311 ],

[ 0.11665271, -0.25821564, -0.41406555],

[ 0.11665271, -0.25821564, -0.41406555],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ]]), array([[ 0.80954933, 0.21962514, -0.54095764],

[-0.03556575, -0.00964874, 0.02376577],

[-0.13016109, -0.0353118 , 0.08697634],

[-0.1650812 , -0.04478539, 0.11031068],

[-0.47874129, -0.1298792 , 0.31990485]]))

(7.89559320359914, array([[-2.98873309, -3.38440688, -2.62676289],

[-1.49436655, -1.69220344, -1.31338145],

[-1.49436655, -1.69220344, -1.31338145],

[ 0. , 0. , 0. ],

[ 0. , 0. , 0. ]]), array([[ 0.21992784, 0.0596649 , -0.14696034],

[-1.37825047, -0.37390982, 0.92097553],

[-0.77702167, -0.21080061, 0.51922198],

[-2.58955401, -0.7025281 , 1.73039366],

[-2.36749007, -0.64228369, 1.58200593]]))