This tutorial is based on this blog article about the same topic.

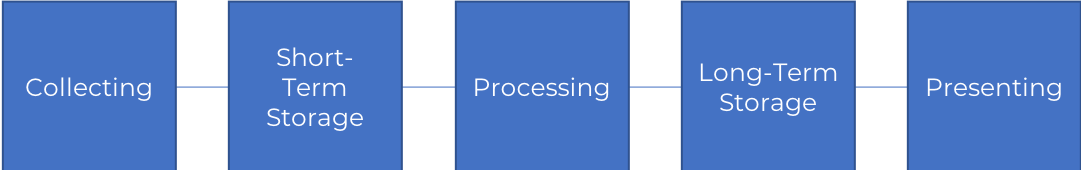

Data storage a crucial part of any data system. It is important to understand how your data can be stored and accessed. Data engineers build architectures for data platforms enabling data scientists to query their data. They are responsible for building data pipelines that connect the pieces of the data ecosystem. They are familiar with:

- Working with large datasets

- Automation of intensive queries

- The architecture of robust data platforms

Each part of the pipeline below is built/maintained by a data engineer.

In this notebook I will describe one example relational databases, PostgreSQL more specifically. PostgreSQL is one of the main open source relational databases. It has advantages such as:

- It is easily accessible in cloud providers such as AWS

- It is highly stable

- It is open source

The Python library psycopg2 one can create tables and load data into a local running PostgreSQL servers.

!pip install psycopg2

Requirement already satisfied: psycopg2 in /Library/Frameworks/Python.framework/Versions/2.7/lib/python2.7/site-packages (2.7.6.1)

SQLite is a SQL engine where all the data is saved onto a one file. Since SQLite only allows a single process to write to the database and therefore it is not built for multiple connections, it has limited usefulness in a data production systems. PostgreSQL is implemented as a server rather than a single file hence it accepts connections from any clients who can request e.g. SELECT or any other query. PostgreSQL has a client-server model design (clients can interact with the server).

According to this blog:

Anytime you are accessing a website, your browser (the client) will continually be requesting website data from the server

When using PostgreSQL, the connecting client will use database=specific requests following a defined protocol"

A protocol is the language that both the client and server will use when the client requests data and the server responds with data.

Connecting to PostgreSQL¶

psycopg2 is type of client that "speaks the database protocol" described earlier. In the code below, the database name dbname and user are chosen.

Because of the multiple connections, Postgres uses multiple users and databases as a way to improve security and division of data.

import psycopg2

conn = psycopg2.connect(dbname="marcotavora", user="marcotavora")

The return value of the connect() method is a Connection object.

print(conn)

<connection object at 0x1072bfbd0; dsn: 'dbname=marcotavora user=marcotavora', closed: 0>

Interacting with the database¶

The connection object conn

creates a client session with the database server that instantiates a persistant client to speak with. To issue commands against the database, you will also need to create another object called the

Cursorobject.Cursoris created by theConnectionobject and using theCursorobject we will be able to execute our commands. To execute commands on the Postgres database, you call the execute method on the Cursor object with a stringified SQL command.

To get the returned values from the query, we need fetchone() or fetchall(). The former returns the first row result and the latter returns a list of each row in the table.

import psycopg2

conn = psycopg2.connect(dbname="marcotavora",

user="marcotavora")

cur = conn.cursor()

cur.execute('SELECT * FROM users')

one = cur.fetchone()

all = cur.fetchall()

print(' The first element of the list all is:\n\n',one,'\n')

print(' The second element of the list all is:\n\n',all[0],'\n')

print(' The third element of the list all is:\n\n',all[1])

The first element of the list all is: (0, 'cbenjamin@yahoo.com', 'Joseph Kirby', '3594 Fox Ford Apt. 192 West Kristen GA 22838-8977') The second element of the list all is: (1, 'morganlopez@matthews-hickman.com', 'Erin Figueroa', '64763 Li Meadows Apt. 554 New Marcoton MA 99016-6159') The third element of the list all is: (2, 'ypark@russo.biz', 'Leon Matthews', '91144 Hamilton Manors Suite 421 Ronaldland WA 98705')

CREATE TABLE tableName(

column1 dataType1 PRIMARY KEY,

column2 dataType2,

column3 dataType3,

...

);

Each column is a placeholder for the column name, dataType is the data type you want to store for that column, and PRIMARY KEY is an example of an optional parameter to add on to the table. In Postgres, every table requires at least one PRIMARY KEY column that contains a unique set of values. Let's now take a look at the CSV file we wish to load into the database (note that the CSV does not contain real users but are randomly generated users using a Python library called faker).

import psycopg2

conn = psycopg2.connect(dbname="marcotavora",

user="marcotavora")

cur = conn.cursor()

cur.execute("""CREATE TABLE new_users_table(id integer PRIMARY KEY, email text, name text, address text)""")

cur

<cursor object at 0x109c03dd8; closed: 0>

Inserting the data¶

Let us load the csv file into the database issuing an INSERT command on the table. Using the INSERT command, we can insert into the new_users_table table using pyscopg2. In more details:

- Write a string

INSERTSQL command for theexecute()method. - Format the string with all the values

import csv

with open('user_accounts_new.csv', 'r') as f:

next(f)

reader = csv.reader(f)

lst = [row for row in reader]

n = 2

print('First {} rows (the first one was skipped):\n'.format(n))

lst[0:n]

'id,email,name,address\n'

First 2 rows (the first one was skipped):

[['0', 'cbenjamin@yahoo.com', 'Joseph Kirby', '3594 Fox Ford Apt. 192 West Kristen GA 22838-8977'], ['1', 'morganlopez@matthews-hickman.com', 'Erin Figueroa', '64763 Li Meadows Apt. 554 New Marcoton MA 99016-6159']]

import csv

import psycopg2

conn = psycopg2.connect(dbname="marcotavora", user="marcotavora")

cur = conn.cursor()

with open('user_accounts_new.csv', 'r') as f:

reader = csv.reader(f)

next(reader)

for row in reader:

cur.execute("INSERT INTO new_users_table VALUES (%s, %s, %s, %s)",row)

conn.commit()

This is inefficient since we had to loop through every row from the csv. There is a better way.

Copying the data¶

The copy_from method loads a file directly into a table and like execute() method, it is attached to the Cursor object. The copy_from arguments requires:

- a file to load (excluding the header)

- the

tablenameit should load into - a delimiter (the key argument sep)

- running

commit()transfers the file

import psycopg2

# conn = psycopg2.connect(dbname="marcotavora",

# user="marcotavora")

# cur = conn.cursor()

with open('user_accounts_new.csv', 'r') as f:

next(f)

cur.copy_from(f,

'new_users_table', sep=',')

conn.commit()

'id,email,name,address\n'

We finished loading the user_accounts_new.csv file into our table. The summary to Dataquest follows:

import psycopg2

# conn = psycopg2.connect(dbname="marcotavora",

# user="marcotavora")

# cur = conn.cursor()

cur.execute('SELECT * FROM new_users_table')

one = cur.fetchone()

all = cur.fetchall()

print(' The first element of the list all is:\n\n',one,'\n')

print(' The second element of the list all is:\n\n',all[0],'\n')

print(' The third element of the list all is:\n\n',all[1])

The first element of the list all is: (0, 'cbenjamin@yahoo.com', 'Joseph Kirby', '3594 Fox Ford Apt. 192 West Kristen GA 22838-8977') The second element of the list all is: (1, 'morganlopez@matthews-hickman.com', 'Erin Figueroa', '64763 Li Meadows Apt. 554 New Marcoton MA 99016-6159') The third element of the list all is: (2, 'ypark@russo.biz', 'Leon Matthews', '91144 Hamilton Manors Suite 421 Ronaldland WA 98705')

Summary¶

- Postgres uses the client-server model to enable multiple connections to the database.

- Using the popular

psycopg2library, we can use Python to connect to PostgreSQL. - Postgres is type sensitive so we have to declare types on each of our columns.

- Postgres uses SQL transactions to save the state of the database.

- The most efficient way to load files into Postgres tables is to use COPY, or the

psycopg2.copy_from()method.