Hydrological modeling with a simple LSTM network of 30 units¶

Abstract¶

Are neural networks the future of hydrological modeling? Maybe. This simple LSTM is in many ways a poor model, yet it performs quite well. On the small 15-year dataset included, it achieves a Nash–Sutcliffe efficiency (R2) of 0.74 on the 3-year validation part (where 0.00 is the trivial mean prediction and 1.00 is a perfect prediction). On the same validation set, my own HBV implementation, SwiftHBV, achieves 0.78. There is still much left before it beats HBV, especially in robustness, but perhaps a longer dataset and a new RNN architecture without LSTM's drawbacks would be able to.

Introduction¶

Several papers have tried to model rainfall-runoff, and sometimes snow storage, with LSTM neural networks. For instance, Kratzert et al. (2018) achieve a median Nash–Sutcliffe efficiency (R2) of 0.65 on 250 catchments in the US, 0.03 lower than a traditional SAC-SMA+SNOW17 model. The results seem good, but not yet as good as old-fashioned models like SAC-SMA+SNOW17 or HBV, which are also more robust.

This is a quick model I threw together to see for myself how well these models perform compared to HBV. Unlike HBV, this model has no catchment parameters and generally makes no assumptions about the world. It should therefore be harder to fit than HBV, especially on small datasets like the one I used. On the other hand, it is not constrained by HBV's simplistic model of the world.

A poor model¶

In many ways, LSTM networks are a poor model for hydrology. LSTM was created to model fuzzier, more binary problems like natural language processing. While the memory cells can hold arbitrary values, the inputs to and outputs from them pass through tanh activation functions and are constrained to the [-1, 1] range. To be able to handle rare floods, hydrological models should not constrain large inputs or outputs.
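To make the saturation point concrete, here is a minimal sketch in plain Python (no TensorFlow) of how the output of one LSTM unit, h = o · tanh(c), stays inside (-1, 1) however large the cell state c grows. The function name `lstm_unit_output` and the sample values are illustrative assumptions, not part of the notebook.

```python
import math

def lstm_unit_output(cell_state, output_gate=1.0):
    """Output of a single LSTM unit: h = o * tanh(c), with the gate o in [0, 1]."""
    return output_gate * math.tanh(cell_state)

# Hypothetical cell states, e.g. stored water in arbitrary units.
for c in (0.5, 1.0, 2.0, 5.0, 50.0):
    print(f"c = {c:5.1f} -> h = {lstm_unit_output(c):.4f}")
```

Raising the cell state tenfold, from 5 to 50, changes the output by less than 0.0001, so any scaling of large peaks has to come from the layer after the LSTM.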

The second problem is that LSTM has forget gates and add/subtract gates. Generally, we would like to have models where water is passed around from input, to different internal (storage) cells, between (storage) cells and then to output, without water being created or lost, except for the correction of precipitation at the input and a separate evaporation output that could be monitored.

These problems do not make LSTM inherently unusable. LSTMs are general computation machines that should theoretically be able to compute anything, given enough units. The problem is just that they might be unnecessarily hard to train.

Method¶

Model¶

The model is a simple LSTM with one layer and 30 units, plus one output layer with ReLU activation.

Optimization¶

- Adam is used for optimization, with a learning rate of 0.00003 (as set in the model code below).

- The sequence length is 30 time steps (days) and the batch size is 1. Thus the error is backpropagated through 30 time steps, and parameters are updated after every sequence, without batching.

- The internal state is only reset at the start of every epoch (12 years), not between sequences. The model can therefore carry state across sequence boundaries and pick up dependencies longer than 30 days.

- The loss function is mean squared error on the discharge. There is no explicit optimization of the accumulated discharge.

Dataset¶

I used a short 15-year dataset from Hagabru, a discharge station in the Gaula river system south of Trondheim, Norway. The catchment is large, 3059.5 km2, and has no lakes to dampen responses. The first 12 years are used as the training set and the last 3 years as the validation set.

Results¶

Training experience¶

- Normalizing inputs and outputs to the 0.0–1.0 range seems to have a big effect on how fast optimization converges, but not on prediction performance. Since the LSTM layer's outputs are between -1 and +1, the dense layer has to increase its weights, and it seems to take a long time before these weights are fully learned.

- Increasing the number of LSTM units beyond 30 seems to have only a modest effect on performance on this small dataset.

- Two LSTM layers gave the same fit on the training set and a slightly worse fit on the validation set. The fit might improve a bit with a bigger dataset, but I don't think many layers are very beneficial for hydrological models.

- Plain SGD with Nesterov momentum seems to converge extremely poorly compared with Adam. I have no explanation for why.

- I tried to include accumulated discharge in the loss function, but this just led to models that cap peaks and smooth the discharge rate. R2 dropped to 0.54 on the validation set.

- I tried ReLU as the LSTM activation function, but convergence failed and the outputs became NaN. I am not sure why, but ReLUs are uncommon in LSTMs, so it might be that they make values explode and convergence unstable.

Performance¶

R2/Nash-Sutcliffe efficiency¶

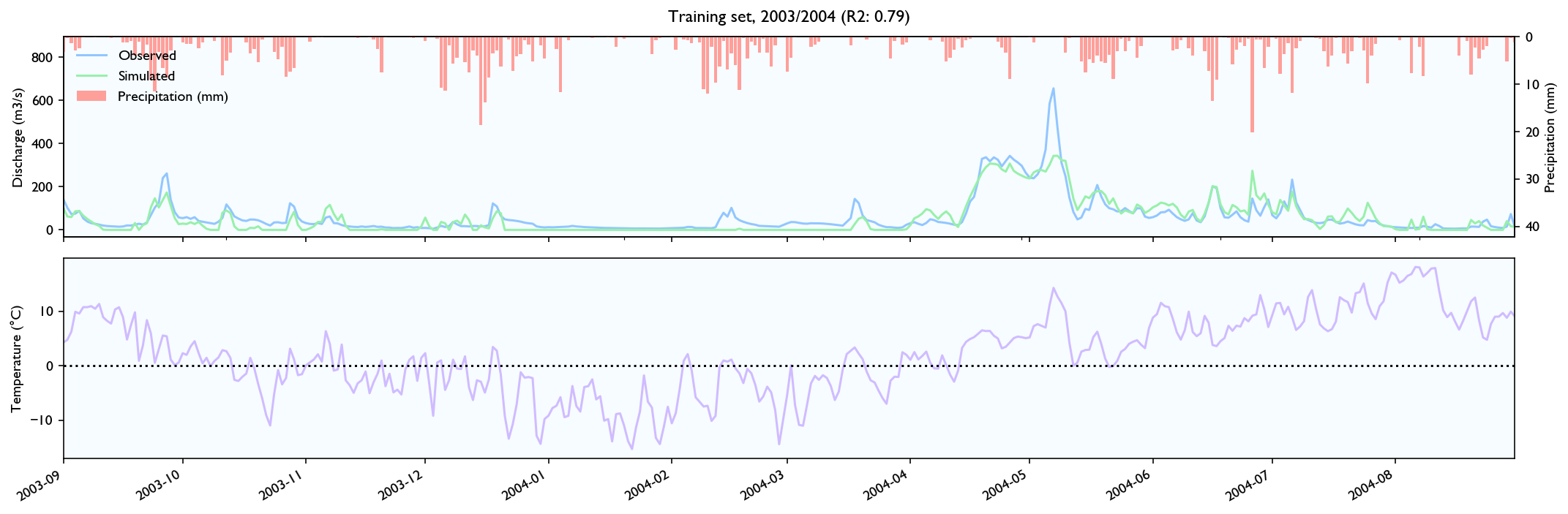

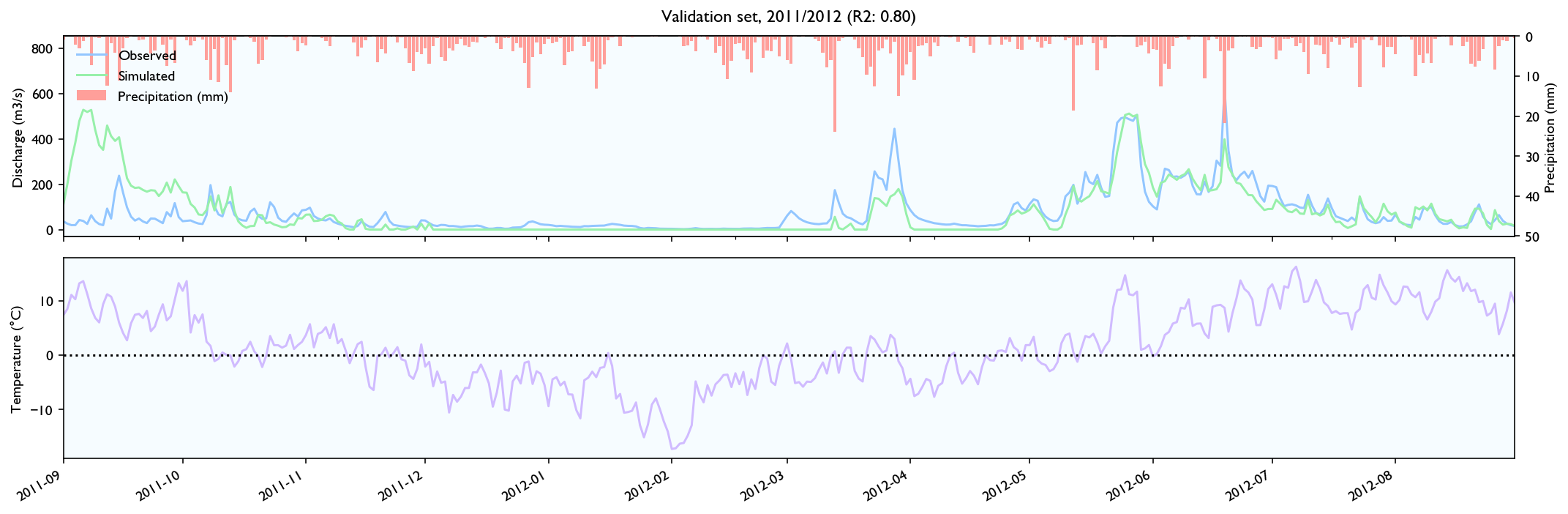

Training set: 0.82 (HBV: 0.78)

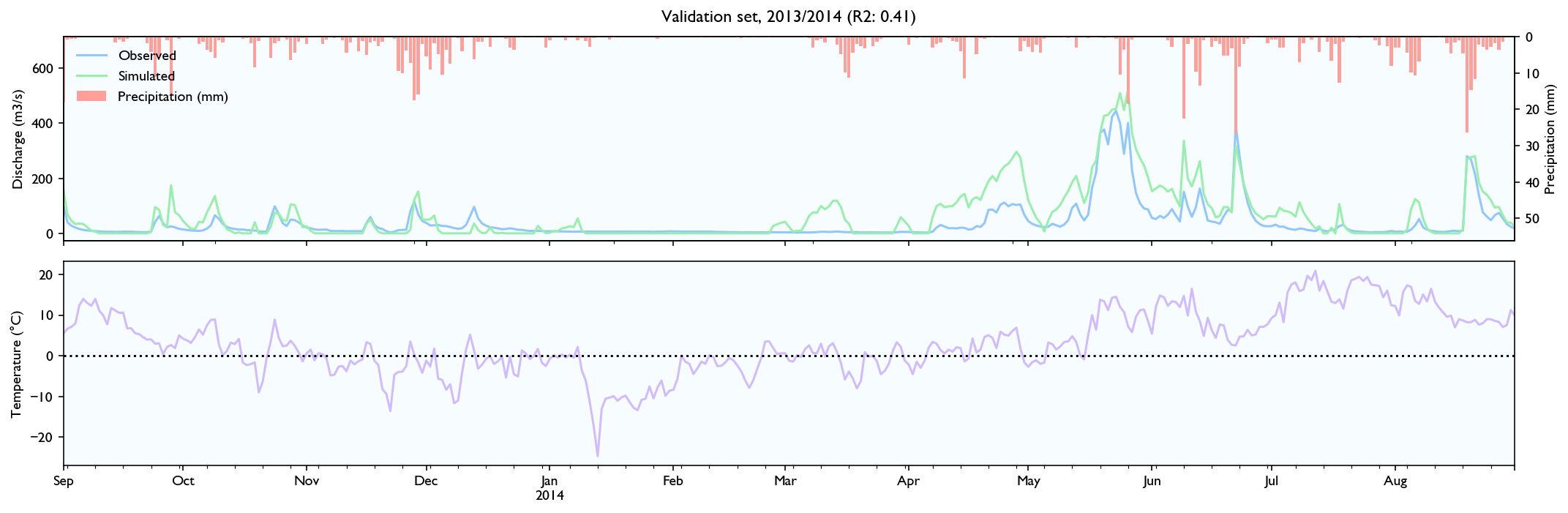

Validation set: 0.74 (HBV: 0.78)

(Where 0.0 is the trivial mean prediction and 1.0 is perfect prediction.)

The LSTM network is not far behind the HBV model. On the validation set it scores four percentage points lower, which corresponds to roughly 20% more unexplained variance (0.26 versus 0.22). However, the LSTM model is less robust: HBV performs equally well on the validation set and the training set, while the LSTM model performs 8 percentage points worse on the validation set.
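For reference, the Nash–Sutcliffe efficiency can be computed in a few lines. The sketch below uses plain Python and made-up discharge values (the function name `nash_sutcliffe` and the sample series are assumptions for illustration), and then derives the ratio of unexplained variance from the two validation scores reported above.

```python
def nash_sutcliffe(observed, simulated):
    """NSE = 1 - sum((obs - sim)^2) / sum((obs - mean(obs))^2)."""
    mean_obs = sum(observed) / len(observed)
    residual = sum((o - s) ** 2 for o, s in zip(observed, simulated))
    variance = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - residual / variance

# Made-up discharge values (m3/s), only to exercise the function.
observed = [10.0, 12.0, 30.0, 22.0, 15.0]
simulated = [11.0, 13.0, 26.0, 21.0, 16.0]
mean_only = [sum(observed) / len(observed)] * len(observed)

print("NSE of the simulation:", round(nash_sutcliffe(observed, simulated), 3))
print("NSE of the mean prediction:", nash_sutcliffe(observed, mean_only))

# Unexplained variance implied by the validation scores in the text.
unexplained_lstm = 1.0 - 0.74
unexplained_hbv = 1.0 - 0.78
print("Ratio of unexplained variance:", round(unexplained_lstm / unexplained_hbv, 2))
```

Predicting the observed mean everywhere gives exactly 0, which is why 0.0 is called the trivial prediction. For a single series this is the same quantity that `sklearn.metrics.r2_score` returns.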

Rainfall events¶

- It seems to estimate the peak size after rainfall events quite well, perhaps slightly better than HBV.

- The discharge recedes in an odd way. It seems to fall linearly with lots of breaks, whereas it should fall like an exponential decay, with faster decay after big rainfall events.

- The model seems to have a maximum discharge rate. Large peaks are underestimated, and the simulated peaks all have approximately the same value.
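As a point of comparison for the recession shape, a single linear reservoir gives the smooth exponential-style decay mentioned above. This is a minimal sketch of a textbook linear reservoir, not the HBV formulation used by SwiftHBV; the function name and the parameter values are assumptions for illustration.

```python
def linear_reservoir_recession(initial_storage, k, n_days):
    """Drain a single linear reservoir with no inflow: outflow Q = k * S each day."""
    storage = initial_storage
    discharge = []
    for _ in range(n_days):
        q = k * storage      # outflow is proportional to the stored water
        storage -= q         # the same amount leaves the store, so mass is conserved
        discharge.append(q)
    return discharge

# Hypothetical values: 100 mm stored, 20 % of the storage drained per day.
q = linear_reservoir_recession(initial_storage=100.0, k=0.2, n_days=10)
for day, value in enumerate(q):
    print(day, round(value, 2))
```

Each day's discharge is a fixed fraction (1 - k) of the previous day's, so the curve has no breaks, and the total outflow can never exceed the initial storage.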

Snowmelt¶

- The model struggles with spring melt when the temperature is near zero, for instance in March and April 2014 and in March 2012. When the temperature is high, as in June 2012, the estimates are good. Snowmelt around zero is something all hydrological models struggle with, because snowmelt is affected not only by temperature but also by wind, humidity and incoming and outgoing radiation (e.g. clear nights). The LSTM still performs worse than HBV, but HBV is configured with the elevation distribution of the catchment and the estimated temperature at different elevations, while the LSTM network has to learn this, so it is expected to perform worse.

- It seems to be able to learn to store snow, but it is hard to know how accurately it records and stores snow levels. The model seems to output more water after winters with more precipitation, and it looks like the accumulated discharge is neither severely over- nor underestimated during spring melt in the first two years of the validation set. However, in 2014 the discharge is far too high over time.

Discussion¶

- The performance looks quite good considering the simple, poor model and the short time series. It is quite amazing how well it behaves after being trained on only 4380 time steps, and that it learns quite well to store snow over long periods.

- The reason it underestimates peaks is probably the tanh activation functions. It is not the biggest problem, but it probably makes LSTM unusable for estimating (rare) flood events. A different RNN architecture could perhaps solve this.

- It is hard to know how accurately the model stores snow levels. However, it is probably far from HBV. The model would likely benefit from a more constrained design when it comes to adding and subtracting water from memory cells.

- The problem that discharge rates do not decay smoothly after rainfall events might be an artifact of how LSTM networks work. LSTM networks should be able to model exponential decay. However, this requires several things at once: the cell states must have values around 0.5 (so that they are neither squashed by the tanh activation functions nor so close to zero that they produce small outputs); the layer feeding the gates must produce values near 0, so that the sigmoids output about 0.5 and give decent decay factors; and the outputs must feed into the forget gates at the next time step and remove the same amount of water from the cell states that was output. It is a lot to demand that an LSTM network learns this accurately, and the fact that it does not appear to do so might mean that LSTM networks are poor at accurately modeling snow levels and at producing proper decaying outflow from storage cells.

- Even if the LSTM model had performed as well as HBV, the HBV model would be preferred. It is, of course, a lot more robust, and it performs almost equally well on the validation set and the training set.
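The decay argument in the list above can be illustrated with a single LSTM cell whose gates are held fixed. This is a simplified sketch under stated assumptions: zero input, constant forget and output gates, and hypothetical values chosen for illustration. In a trained network the gates vary with input and state.

```python
import math

def lstm_cell_recession(c0, forget_gate, output_gate, n_steps):
    """One LSTM cell with fixed gates and zero input: c_t = f * c_{t-1}, h_t = o * tanh(c_t)."""
    c = c0
    rows = []
    for _ in range(n_steps):
        c_next = forget_gate * c
        removed = c - c_next                 # "water" dropped from the cell state
        h = output_gate * math.tanh(c_next)  # what the unit actually emits
        rows.append((c_next, removed, h))
        c = c_next
    return rows

# Assumed gate values; compare a small and a large initial cell state.
for c0 in (0.5, 5.0):
    print(f"initial cell state {c0}")
    for c, removed, h in lstm_cell_recession(c0, forget_gate=0.8, output_gate=0.5, n_steps=5):
        print(f"  c = {c:.3f}  removed = {removed:.3f}  output = {h:.3f}")
```

The cell state itself decays geometrically in both cases. With an initial state of 5.0, however, the first step removes 1.0 from the cell while the unit emits less than 0.5, so the water removed and the water output only match if the network keeps its states in the near-linear range of tanh and tunes the gates accordingly.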

Conclusion¶

This dataset is too small to give a decent overview of the LSTM's performance. However, on this dataset it performs quite well. It is not too far behind HBV, although its predictions are a lot less robust and trustworthy than HBV's. The LSTM model fails in some places in ways that might indicate that the architecture is not the best fit, such as the rough decay after rainfall events and the capped peaks. It would be interesting to look at other RNN architectures that are more suitable for hydrological modeling.

Is it possible to create a better model?¶

For hydrology, we probably want an RNN with ReLU gates in which water is neither lost nor created. However, designing new RNN architectures is not the easiest task in the world. LSTM (and the similar GRU) has been the default architecture because it is difficult to create RNN models. LSTM was carefully designed to make training stable, avoid the vanishing gradient problem and propagate errors over long time frames. I have tried to think of a better model, but I probably need to think about it more seriously to have a chance.

It would also be interesting to implement HBV, or something similar, in TensorFlow and train it with gradient descent like a neural network. As far as I can see, the only strictly non-differentiable parts of HBV are the min/max functions, and they are differentiable in practice.
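To illustrate why a max function is harmless for gradient descent, here is a small sketch of a degree-day snowmelt term of the kind used in HBV-style snow routines. The function names and parameter values are hypothetical, and the code is plain Python rather than TensorFlow. It compares a hand-written slope with a finite-difference estimate.

```python
def degree_day_melt(temperature, threshold, melt_factor):
    """Degree-day snowmelt: melt = melt_factor * max(temperature - threshold, 0)."""
    return melt_factor * max(temperature - threshold, 0.0)

def melt_gradient_wrt_threshold(temperature, threshold, melt_factor):
    """Slope of the melt with respect to the threshold temperature."""
    # Above the threshold the slope is -melt_factor; below it the slope is 0.
    # Exactly at the kink either one-sided slope is a valid choice; 0 is used here.
    return -melt_factor if temperature > threshold else 0.0

# Hypothetical parameter values, only for illustration.
threshold, melt_factor, eps = 0.0, 3.0, 1e-6
for temperature in (-2.0, 1.5, 4.0):
    numeric = (degree_day_melt(temperature, threshold + eps, melt_factor)
               - degree_day_melt(temperature, threshold - eps, melt_factor)) / (2 * eps)
    analytic = melt_gradient_wrt_threshold(temperature, threshold, melt_factor)
    print(temperature, round(numeric, 3), analytic)
```

The slope is well defined everywhere except at the single point where the temperature equals the threshold, which measured data will almost never hit exactly.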

However, a pure HBV trained with gradient descent might get stuck in local minima. Local minima are usually not a problem for neural networks because they have lots of parameters; the chance that all second derivatives are positive at once is therefore small. HBV has only 16 parameters and is therefore more likely to get stuck in local minima if it is not trained with algorithms that tackle them.

The ideal model might therefore be something between HBV and LSTM: a network based on HBV's tank model, but with more parameters, more freedom, and less linearity. The error in storage cells should also be able to flow freely back in time without degradation, so that long-term dependencies like snow storage over the winter can be trained.

References¶

- Kratzert, Frederik, Daniel Klotz, Claire Brenner, Karsten Schulz, and Mathew Herrnegger. “Rainfall–Runoff Modelling Using Long Short-Term Memory (LSTM) Networks.” Hydrology and Earth System Sciences 22, no. 11 (November 22, 2018): 6005–22. https://doi.org/10.5194/hess-22-6005-2018.

Setup¶

Imports and functions

import tensorflow as tf
import matplotlib as mpl
import matplotlib.pyplot as plt
import numpy as np
import os
import pandas as pd
import sklearn.metrics
import plotly
import plotly.graph_objects

pd.plotting.register_matplotlib_converters()
mpl.rcParams['figure.figsize'] = (8, 6)
mpl.rcParams['axes.grid'] = False
tf.__version__

# Matplotlib style sheet
# On matplotlib >= 3.6 the seaborn styles are named 'seaborn-v0_8-pastel' etc.
plt.style.use('seaborn-pastel')
# 'jont' is a custom (non-built-in) style sheet
plt.style.use('jont')
%config InlineBackend.figure_format = 'retina'

tf.random.set_seed(13)

# Data preprocessing functions
def hydrological_year(df, i):
    """Return the starting year of the hydrological year (Sep-Aug) for row i."""
    date = df.index[i]
    if date.month >= 9:
        return date.year
    else:
        return date.year-1

# TensorFlow time series preprocessing functions
def multivariate_data(x, y, sequence_length, start_index=0, end_index=None, step=1):
    """Cut x and y into windows of sequence_length time steps, one window every step steps."""
    start_index = start_index + sequence_length
    if end_index is None:
        end_index = len(x) - sequence_length
    number_of_sequences = int((end_index - start_index)/step)
    number_of_features = len(x[0])

    new_x = np.zeros(shape=[number_of_sequences, sequence_length, number_of_features])
    new_y = np.zeros(shape=[number_of_sequences, sequence_length])
    for i in range(number_of_sequences):
        # Windows are placed step steps apart (step == sequence_length in this notebook,
        # so this matches the original i*sequence_length indexing).
        i_dataset = start_index + i*step
        slice_d = slice(i_dataset-sequence_length, i_dataset)
        # Incomplete windows are skipped and stay zero-filled
        if x[slice_d].shape == new_x[i].shape:
            new_x[i] = x[slice_d]
        if y[slice_d].shape == new_y[i].shape:
            new_y[i] = y[slice_d]
    return new_x, new_y

# Get estimated values from model
def predict(model, x_test, y_test, SEQUENCE_LENGTH, INPUT_d):
    """Predict window by window, keeping the LSTM state between windows."""
    model.reset_states()
    y_test_hat = y_test.copy()
    n_windows = int(len(x_test)/SEQUENCE_LENGTH)
    for i in range(n_windows):
        x_test_sequence = x_test.iloc[i*SEQUENCE_LENGTH:(i+1)*SEQUENCE_LENGTH]
        x_test_sequence = x_test_sequence.values.reshape([1, SEQUENCE_LENGTH, INPUT_d])
        y_test_hat_sequence = model.predict(x_test_sequence).flatten()
        y_test_hat[i*SEQUENCE_LENGTH:(i+1)*SEQUENCE_LENGTH] = y_test_hat_sequence
    return y_test_hat

def plot_train_history(history, title):
    """Plot training and validation loss per epoch."""
    loss = history.history['loss']
    val_loss = history.history['val_loss']
    epochs = range(len(loss))

    plt.figure()
    plt.plot(epochs, loss, label='Training loss')
    plt.plot(epochs, val_loss, label='Validation loss')
    plt.title(title)
    plt.legend()
    plt.show()

# Result plot functions
def plot_data_with_temp_precip(x, y, y_hat, title="", init_timesteps=0):
    """Plot observed and simulated discharge with precipitation, and temperature below."""
    plt.rcParams["axes.spines.top"] = True
    plt.rcParams["axes.spines.right"] = True
    fig, axes = plt.subplots(nrows=2, ncols=1, figsize=(15, 5))

    year_from = y.index.year[0]
    year_to = y.index.year[-1]
    r2 = sklearn.metrics.r2_score(y.values[init_timesteps:], y_hat.values[init_timesteps:])
    if title:
        title = title + ", "
    if year_to == year_from+1:
        title = title + "%i/%i (R2: %.2f)" % (year_from, year_to, r2)
    else:
        title = title + "%i–%i (R2: %.2f)" % (year_from, year_to, r2)

    ax1 = axes[0]
    ax1.plot(y.index, y, label="Observed", linestyle="-")
    ax1.plot(y_hat.index, y_hat, label="Simulated", linestyle="-")
    ax1.set_xlabel("")
    ax1.set_ylabel("Discharge (m3/s)")
    ax1.set_title(title)
    ax1.set_xlim(y.index[0], y.index[-1])
    ax1.set_ylim(ax1.get_ylim()[0], 1.3*ax1.get_ylim()[1])

    # Private pandas helper for the default color cycle; may change between pandas versions
    colors = getattr(getattr(pd.plotting, '_matplotlib').style, '_get_standard_colors')(num_colors=4)

    # Precipitation
    precipitation = x[x.columns[0]]
    ax2 = ax1.twinx()
    ax2.spines['right'].set_position(('axes', 1.0))
    ax2.bar(precipitation.index, precipitation, label="Precipitation (mm)", color=colors[2], width=0.8)
    ax2.set_ylabel("Precipitation (mm)")
    ax2.invert_yaxis()
    ax2.set_ylim(2.0*ax2.get_ylim()[0], ax2.get_ylim()[1])

    # ask matplotlib for the plotted objects and their labels
    lines, labels = ax1.get_legend_handles_labels()
    lines2, labels2 = ax2.get_legend_handles_labels()
    ax2.legend(lines + lines2, labels + labels2, loc="upper left", framealpha=0.5)

    # Temperature
    temp = x[x.columns[1]]
    ax3 = axes[1]
    #ax3.plot(temp.index, temp, color=colors[3])
    temp.plot(ax=ax3, color=colors[3])
    ax3.set_xlabel("")
    ax3.set_ylabel("Temperature (°C)")
    ax3.axhline(0.0, color="black", linestyle="dotted")

    fig.tight_layout()
    return fig

def plot_data(y_val, y_val_hat, title=""):
    """Plot observed and simulated discharge."""
    plt.rcParams["axes.spines.top"] = False
    plt.rcParams["axes.spines.right"] = False
    ax = pd.DataFrame({
        "Observed": y_val,
        "Simulated": y_val_hat
    }).plot(style=["-", "-"], figsize=(15, 5))
    ax.set_xlabel("")
    ax.set_ylabel("Discharge (m3/s)")
    ax.set_title(title)
    ax.legend(loc="upper right")
    ax.get_figure().tight_layout()
    return ax

def plot_accumulated(y_val, y_val_hat, title=""):
    """Plot accumulated discharge, scaled so that the total observed discharge is 1.0."""
    plt.rcParams["axes.spines.top"] = False
    plt.rcParams["axes.spines.right"] = False
    max_observed = sum(y_val)
    ax = pd.DataFrame({
        "Observed": y_val.cumsum() / max_observed,
        "Simulated": y_val_hat.cumsum() / max_observed
    }).plot(style=["-", "-"], figsize=(15, 3))
    ax.set_xlabel("")
    ax.set_ylabel("Accumulated discharge\n1.0=total observed")
    ax.set_title(title)
    ax.legend(loc="lower right")
    ax.get_figure().tight_layout()
    return ax

Preprocess dataset¶

- Min and max temperature are identical in this dataset, so drop the min column.

- Divide the dataset into hydrological years (Sep–Aug)

- Set 2000–2011 as training set and 2011–2014 as validation set

- Normalize inputs and outputs to be between 0.0–1.0
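The two less obvious steps in the list above, the hydrological-year split and the normalization, can be sketched without pandas. The helper names below are hypothetical, and the scaling divides by the maximum, which is an assumption that mirrors the notebook's `normalizeX` and `normalizeY` functions.

```python
from datetime import date

def hydrological_year_start(day):
    """Calendar year in which the hydrological year (Sep-Aug) containing day begins."""
    return day.year if day.month >= 9 else day.year - 1

def normalize_by_max(values):
    """Scale values by their maximum so the largest value becomes 1.0."""
    maximum = max(values)
    return [v / maximum for v in values]

print(hydrological_year_start(date(2000, 4, 1)))   # April belongs to the year that began in Sep 1999
print(hydrological_year_start(date(2011, 9, 1)))   # first day of hydrological year 2011
print(normalize_by_max([26.79, 24.96, 22.11]))     # made-up subset of discharge values
print(normalize_by_max([-7.99, 0.71, 20.92]))      # temperatures below zero stay negative
```

Dividing by the maximum only yields the 0.0–1.0 range for non-negative series such as precipitation and discharge. Temperatures below zero map to negative values.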

# Load dataset
filename = "data/Hagabru_input.txt"
df = pd.read_csv(filename, sep="\t")
df = df[1:] # Drop first row
df.index = pd.to_datetime(df["Dato"] + " " + df["Time"], format='%d.%m.%Y %H:%M:%S')
df = df.drop(columns=["Dato", "Time"])

# min/max temp is equal in this dataset, so drop one and rename other
df = df.rename(columns={"T_maxC_738moh": "T_C_738moh"}).drop(columns=["T_minC_738moh"])
df = df.astype("float")

# Add hydrological years
df["hydrological_year_from"] = [hydrological_year(df, i) for i in range(len(df))]
df["hydrological_year_to"] = [hydrological_year(df, i)+1 for i in range(len(df))]
df

| | P_mm_738moh | T_C_738moh | Q_Hagabru | hydrological_year_from | hydrological_year_to |
|---|---|---|---|---|---|
| 2000-04-01 | 1.42 | -1.77 | 26.79 | 1999 | 2000 |
| 2000-04-02 | 0.15 | -6.05 | 24.96 | 1999 | 2000 |
| 2000-04-03 | 1.96 | -6.08 | 22.11 | 1999 | 2000 |
| 2000-04-04 | 0.80 | -7.14 | 19.44 | 1999 | 2000 |
| 2000-04-05 | 1.53 | -7.99 | 17.62 | 1999 | 2000 |
| ... | ... | ... | ... | ... | ... |
| 2015-12-27 | 1.94 | -9.26 | 14.25 | 2015 | 2016 |
| 2015-12-28 | 0.00 | -11.26 | 11.91 | 2015 | 2016 |
| 2015-12-29 | 0.00 | -7.13 | 10.87 | 2015 | 2016 |
| 2015-12-30 | 0.01 | -3.12 | 11.91 | 2015 | 2016 |
| 2015-12-31 | 0.00 | 0.71 | 11.39 | 2015 | 2016 |

5753 rows × 5 columns

train_from_hyd_year = 2000
train_to_hyd_year = 2010
test_from_hyd_year = 2011
test_to_hyd_year = 2013

X_columns = ["P_mm_738moh", "T_C_738moh"]
Y_columns = "Q_Hagabru"

# Drop leap days so that every year has 365 days
df_not_feb_29 = df[~((df.index.month == 2) & (df.index.day == 29))]
df_train = df_not_feb_29[(train_from_hyd_year <= df_not_feb_29["hydrological_year_from"]) & (df_not_feb_29["hydrological_year_from"] <= train_to_hyd_year)]
df_test = df_not_feb_29[(test_from_hyd_year <= df_not_feb_29["hydrological_year_from"]) & (df_not_feb_29["hydrological_year_from"] <= test_to_hyd_year)]

X_train, Y_train = df_train[X_columns], df_train[Y_columns]
X_test, Y_test = df_test[X_columns], df_test[Y_columns]

# Scale discharge by the maximum over the whole dataset
Y_max = df[Y_columns].max()
print("Y max: %.2f" % (Y_max,))

def normalizeY(Y):
    return Y/Y_max

def denormalizeY(normalized_Y):
    return normalized_Y * Y_max

Y_train = normalizeY(Y_train)
Y_test = normalizeY(Y_test)

Y max: 959.68

# Scale each input column by its maximum over the whole dataset
X_max = df[X_columns].max()
print("X max:\n %s" % (X_max,))

def normalizeX(X):
    return X/X_max

def denormalizeX(normalized_X):
    return normalized_X * X_max

X_train = normalizeX(X_train)
X_test = normalizeX(X_test)

X max:
P_mm_738moh    44.45
T_C_738moh     20.92
dtype: float64

# Variance
print("Variance training set: %.5f" % Y_train.var())
print("Variance validation set: %.5f" % Y_test.var())

Variance training set: 0.00957
Variance validation set: 0.00871

Prepare dataset for TensorFlow¶

Divide the dataset into distinct sequences of 30 days/time steps, but only reset the state of the LSTM after each epoch (the full time series). Batch size = 1.

SEQUENCE_LENGTH = 30 # days
BATCH_SIZE = 1
STEP = 30
INPUT_d = 2
OUTPUT_d = 1

x_train, y_train = multivariate_data(
    X_train.values, Y_train.values, SEQUENCE_LENGTH, start_index=0, end_index=None, step=STEP)
x_val, y_val = multivariate_data(
    X_test.values, Y_test.values, SEQUENCE_LENGTH, start_index=0, end_index=None, step=STEP)

train_data = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(BATCH_SIZE)
val_data = tf.data.Dataset.from_tensor_slices((x_val, y_val)).batch(BATCH_SIZE)

print(x_train.shape, y_train.shape)
print(train_data)

(131, 30, 2) (131, 30)
<BatchDataset shapes: ((None, 30, 2), (None, 30)), types: (tf.float64, tf.float64)>

# Alternative dataset with one-year sequences
# (overwrites SEQUENCE_LENGTH, STEP, x_train, y_train, x_val and y_val from the cell above)
SEQUENCE_LENGTH = 365 # days
BATCH_SIZE = 1
STEP = 365
INPUT_d = 2
OUTPUT_d = 1

x_train, y_train = multivariate_data(
    X_train.values, Y_train.values, SEQUENCE_LENGTH, start_index=0, end_index=None, step=STEP)
x_val, y_val = multivariate_data(
    X_test.values, Y_test.values, SEQUENCE_LENGTH, start_index=0, end_index=None, step=STEP)

train_data2 = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(BATCH_SIZE)
val_data2 = tf.data.Dataset.from_tensor_slices((x_val, y_val)).batch(BATCH_SIZE)

print(x_train.shape, y_train.shape)
# Note: this prints train_data (the 30-day dataset), not train_data2,
# which is why the shapes in the output below still show 30 time steps
print(train_data)

(9, 365, 2) (9, 365)
<BatchDataset shapes: ((None, 30, 2), (None, 30)), types: (tf.float64, tf.float64)>

Model¶

A simple LSTM model with 1 layer and 30 units, and one additional ReLU layer to combine the units. The LSTM output is limited to the range -1.0 to 1.0.

Optimized with Adam.

klayers = tf.keras.layers

model = tf.keras.models.Sequential()
# stateful=True keeps the LSTM state between batches until reset_states() is called
model.add(klayers.LSTM(30, return_sequences=True, stateful=True,
                       input_shape=(None, INPUT_d,),
                       batch_input_shape=(BATCH_SIZE, None, INPUT_d)))
model.add(klayers.Dense(OUTPUT_d, activation="relu"))
model.compile(optimizer=tf.keras.optimizers.Adam(0.00003), loss='mse', metrics=['mae'])
print(model.summary())

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
lstm (LSTM)                  (1, None, 30)             3960
_________________________________________________________________
dense (Dense)                (1, None, 1)              31
=================================================================
Total params: 3,991
Trainable params: 3,991
Non-trainable params: 0
_________________________________________________________________
None

#model.load_weights('3 - LSTM statefull')

# Define callbacks
class ResetStateBetweenEpochs(tf.keras.callbacks.Callback):
    def on_epoch_begin(self, epoch, logs=None):
        self.model.reset_states()

class ResetStateBetweenBatches(tf.keras.callbacks.Callback):
    def on_train_batch_begin(self, batch, logs=None):
        self.model.reset_states()

# Stop after 15 epochs with no improvement in validation loss
earlyStopping = tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=15)

Training with a sequence length of 30 days seems to work best. One-year sequences overfit the data, and shorter sequences are slower to train. The overfitting seems to be serious if val_loss hasn't improved in 50 epochs. With fewer than 20, training terminates too early.

#model.fit(train_data, validation_data=val_data, epochs=20, callbacks=[ResetStateBetweenBatches(), earlyStopping])
#model.fit(train_data2, validation_data=val_data2, epochs=10, callbacks=[ResetStateBetweenEpochs(), earlyStopping])

history = model.fit(
    train_data,
    validation_data=val_data,
    epochs=2000,
    callbacks=[ResetStateBetweenEpochs(), earlyStopping]
)

Epoch 1/2000 131/131 [==============================] - 11s 83ms/step - loss: 0.0090 - mae: 0.0496 - val_loss: 0.0000e+00 - val_mae: 0.0000e+00
Epoch 2/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0080 - mae: 0.0505 - val_loss: 0.0068 - val_mae: 0.0445
Epoch 3/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0078 - mae: 0.0518 - val_loss: 0.0067 - val_mae: 0.0460
Epoch 4/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0077 - mae: 0.0525 - val_loss: 0.0067 - val_mae: 0.0470
Epoch 5/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0076 - mae: 0.0529 - val_loss: 0.0067 - val_mae: 0.0476
Epoch 6/2000 131/131 [==============================] - 3s 24ms/step - loss: 0.0076 - mae: 0.0531 - val_loss: 0.0066 - val_mae: 0.0480
Epoch 7/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0075 - mae: 0.0532 - val_loss: 0.0066 - val_mae: 0.0483
Epoch 8/2000 131/131 [==============================] - 3s 24ms/step - loss: 0.0075 - mae: 0.0532 - val_loss: 0.0066 - val_mae: 0.0485
Epoch 9/2000 131/131 [==============================] - 3s 27ms/step - loss: 0.0074 - mae: 0.0532 - val_loss: 0.0066 - val_mae: 0.0486
Epoch 10/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0074 - mae: 0.0531 - val_loss: 0.0065 - val_mae: 0.0486
Epoch 11/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0073 - mae: 0.0530 - val_loss: 0.0065 - val_mae: 0.0486
Epoch 12/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0072 - mae: 0.0529 - val_loss: 0.0065 - val_mae: 0.0486
Epoch 13/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0072 - mae: 0.0527 - val_loss: 0.0064 - val_mae: 0.0486
Epoch 14/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0071 - mae: 0.0525 - val_loss: 0.0064 - val_mae: 0.0485
Epoch 15/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0071 - mae: 0.0523 - val_loss: 0.0063 - val_mae: 0.0484
Epoch 16/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0070 - mae: 0.0521 - val_loss: 0.0063 - val_mae: 0.0483
Epoch 17/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0070 - mae: 0.0519 - val_loss: 0.0063 - val_mae: 0.0482
Epoch 18/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0069 - mae: 0.0517 - val_loss: 0.0062 - val_mae: 0.0481
Epoch 19/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0068 - mae: 0.0514 - val_loss: 0.0062 - val_mae: 0.0480
Epoch 20/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0068 - mae: 0.0511 - val_loss: 0.0061 - val_mae: 0.0478
Epoch 21/2000 131/131 [==============================] - 3s 24ms/step - loss: 0.0067 - mae: 0.0509 - val_loss: 0.0061 - val_mae: 0.0477
Epoch 22/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0066 - mae: 0.0505 - val_loss: 0.0060 - val_mae: 0.0475
Epoch 23/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0066 - mae: 0.0502 - val_loss: 0.0059 - val_mae: 0.0473
Epoch 24/2000 131/131 [==============================] - 4s 31ms/step - loss: 0.0065 - mae: 0.0499 - val_loss: 0.0059 - val_mae: 0.0471
Epoch 25/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0064 - mae: 0.0495 - val_loss: 0.0058 - val_mae: 0.0469
Epoch 26/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0063 - mae: 0.0491 - val_loss: 0.0057 - val_mae: 0.0466
Epoch 27/2000 131/131 [==============================] - 4s 28ms/step - loss: 0.0062 - mae: 0.0487 - val_loss: 0.0056 - val_mae: 0.0464
Epoch 28/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0061 - mae: 0.0483 - val_loss: 0.0056 - val_mae: 0.0461
Epoch 29/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0060 - mae: 0.0478 - val_loss: 0.0055 - val_mae: 0.0458
Epoch 30/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0059 - mae: 0.0473 - val_loss: 0.0054 - val_mae: 0.0456
Epoch 31/2000 131/131 [==============================] - 4s 31ms/step - loss: 0.0058 - mae: 0.0468 - val_loss: 0.0053 - val_mae: 0.0453
Epoch 32/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0057 - mae: 0.0462 - val_loss: 0.0052 - val_mae: 0.0450
Epoch 33/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0056 - mae: 0.0456 - val_loss: 0.0051 - val_mae: 0.0448
Epoch 34/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0055 - mae: 0.0451 - val_loss: 0.0050 - val_mae: 0.0445
Epoch 35/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0054 - mae: 0.0445 - val_loss: 0.0049 - val_mae: 0.0442
Epoch 36/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0053 - mae: 0.0439 - val_loss: 0.0048 - val_mae: 0.0439
Epoch 37/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0052 - mae: 0.0434 - val_loss: 0.0047 - val_mae: 0.0436
Epoch 38/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0051 - mae: 0.0429 - val_loss: 0.0046 - val_mae: 0.0433
Epoch 39/2000 131/131 [==============================] - 3s 24ms/step - loss: 0.0050 - mae: 0.0423 - val_loss: 0.0045 - val_mae: 0.0430
Epoch 40/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0049 - mae: 0.0418 - val_loss: 0.0044 - val_mae: 0.0427
Epoch 41/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0048 - mae: 0.0414 - val_loss: 0.0043 - val_mae: 0.0424
Epoch 42/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0048 - mae: 0.0409 - val_loss: 0.0043 - val_mae: 0.0421
Epoch 43/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0047 - mae: 0.0405 - val_loss: 0.0042 - val_mae: 0.0418
Epoch 44/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0046 - mae: 0.0401 - val_loss: 0.0041 - val_mae: 0.0415
Epoch 45/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0046 - mae: 0.0397 - val_loss: 0.0041 - val_mae: 0.0412
Epoch 46/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0045 - mae: 0.0393 - val_loss: 0.0040 - val_mae: 0.0409
Epoch 47/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0044 - mae: 0.0389 - val_loss: 0.0039 - val_mae: 0.0406
Epoch 48/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0044 - mae: 0.0385 - val_loss: 0.0039 - val_mae: 0.0402
Epoch 49/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0043 - mae: 0.0381 - val_loss: 0.0038 - val_mae: 0.0399
Epoch 50/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0042 - mae: 0.0378 - val_loss: 0.0038 - val_mae: 0.0396
Epoch 51/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0042 - mae: 0.0374 - val_loss: 0.0037 - val_mae: 0.0393
Epoch 52/2000 131/131 [==============================] - 5s 35ms/step - loss: 0.0041 - mae: 0.0370 - val_loss: 0.0037 - val_mae: 0.0390
Epoch 53/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0040 - mae: 0.0367 - val_loss: 0.0036 - val_mae: 0.0387
Epoch 54/2000 131/131 [==============================] - 4s 32ms/step - loss: 0.0040 - mae: 0.0363 - val_loss: 0.0036 - val_mae: 0.0384
Epoch 55/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0039 - mae: 0.0360 - val_loss: 0.0035 - val_mae: 0.0381
Epoch 56/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0039 - mae: 0.0356 - val_loss: 0.0035 - val_mae: 0.0379
Epoch 57/2000 131/131 [==============================] - 3s 27ms/step - loss: 0.0038 - mae: 0.0353 - val_loss: 0.0035 - val_mae: 0.0376
Epoch 58/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0037 - mae: 0.0350 - val_loss: 0.0034 - val_mae: 0.0374
Epoch 59/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0037 - mae: 0.0346 - val_loss: 0.0034 - val_mae: 0.0371
Epoch 60/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0036 - mae: 0.0343 - val_loss: 0.0034 - val_mae: 0.0369
Epoch 61/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0036 - mae: 0.0341 - val_loss: 0.0033 - val_mae: 0.0367
Epoch 62/2000 131/131 [==============================] - 3s 27ms/step - loss: 0.0036 - mae: 0.0338 - val_loss: 0.0033 - val_mae: 0.0365
Epoch 63/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0035 - mae: 0.0335 - val_loss: 0.0033 - val_mae: 0.0364
Epoch 64/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0035 - mae: 0.0333 - val_loss: 0.0033 - val_mae: 0.0362
Epoch 65/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0034 - mae: 0.0331 - val_loss: 0.0032 - val_mae: 0.0360
Epoch 66/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0034 - mae: 0.0329 - val_loss: 0.0032 - val_mae: 0.0359
Epoch 67/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0034 - mae: 0.0327 - val_loss: 0.0032 - val_mae: 0.0358
Epoch 68/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0033 - mae: 0.0326 - val_loss: 0.0032 - val_mae: 0.0357
Epoch 69/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0033 - mae: 0.0324 - val_loss: 0.0032 - val_mae: 0.0356
Epoch 70/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0033 - mae: 0.0323 - val_loss: 0.0031 - val_mae: 0.0355
Epoch 71/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0033 - mae: 0.0322 - val_loss: 0.0031 - val_mae: 0.0354
Epoch 72/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0033 - mae: 0.0321 - val_loss: 0.0031 - val_mae: 0.0353
Epoch 73/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0032 - mae: 0.0320 - val_loss: 0.0031 - val_mae: 0.0352
Epoch 74/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0032 - mae: 0.0319 - val_loss: 0.0031 - val_mae: 0.0352
Epoch 75/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0032 - mae: 0.0318 - val_loss: 0.0031 - val_mae: 0.0351
Epoch 76/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0032 - mae: 0.0318 - val_loss: 0.0031 - val_mae: 0.0350
Epoch 77/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0032 - mae: 0.0317 - val_loss: 0.0031 - val_mae: 0.0350
Epoch 78/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0032 - mae: 0.0316 - val_loss: 0.0031 - val_mae: 0.0349
Epoch 79/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0031 - mae: 0.0316 - val_loss: 0.0031 - val_mae: 0.0349
Epoch 80/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0031 - mae: 0.0315 - val_loss: 0.0030 - val_mae: 0.0348
Epoch 81/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0031 - mae: 0.0314 - val_loss: 0.0030 - val_mae: 0.0348
Epoch 82/2000 131/131 [==============================] - 4s 28ms/step - loss: 0.0031 - mae: 0.0314 - val_loss: 0.0030 - val_mae: 0.0347
Epoch 83/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0031 - mae: 0.0313 - val_loss: 0.0030 - val_mae: 0.0347
Epoch 84/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0031 - mae: 0.0313 - val_loss: 0.0030 - val_mae: 0.0346
Epoch 85/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0031 - mae: 0.0312 - val_loss: 0.0030 - val_mae: 0.0346
Epoch 86/2000 131/131 [==============================] - 3s 27ms/step - loss: 0.0031 - mae: 0.0312 - val_loss: 0.0030 - val_mae: 0.0345
Epoch 87/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0031 - mae: 0.0311 - val_loss: 0.0030 - val_mae: 0.0345
Epoch 88/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0031 - mae: 0.0311 - val_loss: 0.0030
- val_mae: 0.0344 Epoch 89/2000 131/131 [==============================] - 4s 28ms/step - loss: 0.0030 - mae: 0.0311 - val_loss: 0.0030 - val_mae: 0.0344 Epoch 90/2000 131/131 [==============================] - 4s 28ms/step - loss: 0.0030 - mae: 0.0310 - val_loss: 0.0030 - val_mae: 0.0343 Epoch 91/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0030 - mae: 0.0310 - val_loss: 0.0030 - val_mae: 0.0343 Epoch 92/2000 131/131 [==============================] - 4s 28ms/step - loss: 0.0030 - mae: 0.0309 - val_loss: 0.0030 - val_mae: 0.0342 Epoch 93/2000 131/131 [==============================] - 3s 27ms/step - loss: 0.0030 - mae: 0.0309 - val_loss: 0.0029 - val_mae: 0.0342 Epoch 94/2000 131/131 [==============================] - 4s 34ms/step - loss: 0.0030 - mae: 0.0309 - val_loss: 0.0029 - val_mae: 0.0341 Epoch 95/2000 131/131 [==============================] - 4s 31ms/step - loss: 0.0030 - mae: 0.0308 - val_loss: 0.0029 - val_mae: 0.0341 Epoch 96/2000 131/131 [==============================] - 4s 28ms/step - loss: 0.0030 - mae: 0.0308 - val_loss: 0.0029 - val_mae: 0.0340 Epoch 97/2000 131/131 [==============================] - 4s 31ms/step - loss: 0.0030 - mae: 0.0308 - val_loss: 0.0029 - val_mae: 0.0340 Epoch 98/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0030 - mae: 0.0307 - val_loss: 0.0029 - val_mae: 0.0340 Epoch 99/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0030 - mae: 0.0307 - val_loss: 0.0029 - val_mae: 0.0339 Epoch 100/2000 131/131 [==============================] - 5s 35ms/step - loss: 0.0030 - mae: 0.0307 - val_loss: 0.0029 - val_mae: 0.0339 Epoch 101/2000 131/131 [==============================] - 6s 44ms/step - loss: 0.0030 - mae: 0.0306 - val_loss: 0.0029 - val_mae: 0.0338 Epoch 102/2000 131/131 [==============================] - 4s 31ms/step - loss: 0.0029 - mae: 0.0306 - val_loss: 0.0029 - val_mae: 0.0338 Epoch 103/2000 131/131 [==============================] - 4s 30ms/step - 
loss: 0.0029 - mae: 0.0306 - val_loss: 0.0029 - val_mae: 0.0338 Epoch 104/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0029 - mae: 0.0305 - val_loss: 0.0029 - val_mae: 0.0337 Epoch 105/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0029 - mae: 0.0305 - val_loss: 0.0029 - val_mae: 0.0337 Epoch 106/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0029 - mae: 0.0305 - val_loss: 0.0029 - val_mae: 0.0336 Epoch 107/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0029 - mae: 0.0304 - val_loss: 0.0029 - val_mae: 0.0336 Epoch 108/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0029 - mae: 0.0304 - val_loss: 0.0028 - val_mae: 0.0336 Epoch 109/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0029 - mae: 0.0304 - val_loss: 0.0028 - val_mae: 0.0335 Epoch 110/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0029 - mae: 0.0304 - val_loss: 0.0028 - val_mae: 0.0335 Epoch 111/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0029 - mae: 0.0303 - val_loss: 0.0028 - val_mae: 0.0334 Epoch 112/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0029 - mae: 0.0303 - val_loss: 0.0028 - val_mae: 0.0334 Epoch 113/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0029 - mae: 0.0303 - val_loss: 0.0028 - val_mae: 0.0334 Epoch 114/2000 131/131 [==============================] - 4s 32ms/step - loss: 0.0029 - mae: 0.0302 - val_loss: 0.0028 - val_mae: 0.0333 Epoch 115/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0029 - mae: 0.0302 - val_loss: 0.0028 - val_mae: 0.0333 Epoch 116/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0029 - mae: 0.0302 - val_loss: 0.0028 - val_mae: 0.0333 Epoch 117/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0028 - mae: 0.0301 - val_loss: 0.0028 - val_mae: 0.0332 Epoch 118/2000 
131/131 [==============================] - 3s 26ms/step - loss: 0.0028 - mae: 0.0301 - val_loss: 0.0028 - val_mae: 0.0332 Epoch 119/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0028 - mae: 0.0301 - val_loss: 0.0028 - val_mae: 0.0332 Epoch 120/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0028 - mae: 0.0301 - val_loss: 0.0028 - val_mae: 0.0331 Epoch 121/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0028 - mae: 0.0300 - val_loss: 0.0028 - val_mae: 0.0331 Epoch 122/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0028 - mae: 0.0300 - val_loss: 0.0028 - val_mae: 0.0331 Epoch 123/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0028 - mae: 0.0300 - val_loss: 0.0028 - val_mae: 0.0331 Epoch 124/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0028 - mae: 0.0299 - val_loss: 0.0027 - val_mae: 0.0330 Epoch 125/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0028 - mae: 0.0299 - val_loss: 0.0027 - val_mae: 0.0330 Epoch 126/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0028 - mae: 0.0299 - val_loss: 0.0027 - val_mae: 0.0330 Epoch 127/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0028 - mae: 0.0299 - val_loss: 0.0027 - val_mae: 0.0329 Epoch 128/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0028 - mae: 0.0298 - val_loss: 0.0027 - val_mae: 0.0329 Epoch 129/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0028 - mae: 0.0298 - val_loss: 0.0027 - val_mae: 0.0329 Epoch 130/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0028 - mae: 0.0298 - val_loss: 0.0027 - val_mae: 0.0328 Epoch 131/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0028 - mae: 0.0298 - val_loss: 0.0027 - val_mae: 0.0328 Epoch 132/2000 131/131 [==============================] - 4s 28ms/step - loss: 0.0028 - mae: 
0.0297 - val_loss: 0.0027 - val_mae: 0.0328 Epoch 133/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0028 - mae: 0.0297 - val_loss: 0.0027 - val_mae: 0.0328 Epoch 134/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0028 - mae: 0.0297 - val_loss: 0.0027 - val_mae: 0.0327 Epoch 135/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0027 - mae: 0.0297 - val_loss: 0.0027 - val_mae: 0.0327 Epoch 136/2000 131/131 [==============================] - 5s 35ms/step - loss: 0.0027 - mae: 0.0296 - val_loss: 0.0027 - val_mae: 0.0327 Epoch 137/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0027 - mae: 0.0296 - val_loss: 0.0027 - val_mae: 0.0327 Epoch 138/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0027 - mae: 0.0296 - val_loss: 0.0027 - val_mae: 0.0326 Epoch 139/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0027 - mae: 0.0296 - val_loss: 0.0027 - val_mae: 0.0326 Epoch 140/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0027 - mae: 0.0295 - val_loss: 0.0027 - val_mae: 0.0326 Epoch 141/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0027 - mae: 0.0295 - val_loss: 0.0027 - val_mae: 0.0326 Epoch 142/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0027 - mae: 0.0295 - val_loss: 0.0027 - val_mae: 0.0325 Epoch 143/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0027 - mae: 0.0295 - val_loss: 0.0027 - val_mae: 0.0325 Epoch 144/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0027 - mae: 0.0294 - val_loss: 0.0027 - val_mae: 0.0325 Epoch 145/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0027 - mae: 0.0294 - val_loss: 0.0027 - val_mae: 0.0325 Epoch 146/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0027 - mae: 0.0294 - val_loss: 0.0026 - val_mae: 0.0324 Epoch 147/2000 131/131 
[==============================] - 3s 25ms/step - loss: 0.0027 - mae: 0.0294 - val_loss: 0.0026 - val_mae: 0.0324 Epoch 148/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0027 - mae: 0.0293 - val_loss: 0.0026 - val_mae: 0.0324 Epoch 149/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0027 - mae: 0.0293 - val_loss: 0.0026 - val_mae: 0.0324 Epoch 150/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0027 - mae: 0.0293 - val_loss: 0.0026 - val_mae: 0.0324 Epoch 151/2000 131/131 [==============================] - 4s 34ms/step - loss: 0.0027 - mae: 0.0293 - val_loss: 0.0026 - val_mae: 0.0324 Epoch 152/2000 131/131 [==============================] - 4s 31ms/step - loss: 0.0027 - mae: 0.0292 - val_loss: 0.0026 - val_mae: 0.0323 Epoch 153/2000 131/131 [==============================] - 5s 41ms/step - loss: 0.0027 - mae: 0.0292 - val_loss: 0.0026 - val_mae: 0.0323 Epoch 154/2000 131/131 [==============================] - 5s 35ms/step - loss: 0.0027 - mae: 0.0292 - val_loss: 0.0026 - val_mae: 0.0323 Epoch 155/2000 131/131 [==============================] - 5s 35ms/step - loss: 0.0027 - mae: 0.0292 - val_loss: 0.0026 - val_mae: 0.0323 Epoch 156/2000 131/131 [==============================] - 4s 31ms/step - loss: 0.0026 - mae: 0.0291 - val_loss: 0.0026 - val_mae: 0.0323 Epoch 157/2000 131/131 [==============================] - 4s 32ms/step - loss: 0.0026 - mae: 0.0291 - val_loss: 0.0026 - val_mae: 0.0322 Epoch 158/2000 131/131 [==============================] - 3s 27ms/step - loss: 0.0026 - mae: 0.0291 - val_loss: 0.0026 - val_mae: 0.0322 Epoch 159/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0026 - mae: 0.0291 - val_loss: 0.0026 - val_mae: 0.0322 Epoch 160/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0026 - mae: 0.0290 - val_loss: 0.0026 - val_mae: 0.0322 Epoch 161/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0026 - mae: 0.0290 - 
val_loss: 0.0026 - val_mae: 0.0322 Epoch 162/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0026 - mae: 0.0290 - val_loss: 0.0026 - val_mae: 0.0321 Epoch 163/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0026 - mae: 0.0290 - val_loss: 0.0026 - val_mae: 0.0321 Epoch 164/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0026 - mae: 0.0289 - val_loss: 0.0026 - val_mae: 0.0321 Epoch 165/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0026 - mae: 0.0289 - val_loss: 0.0026 - val_mae: 0.0321 Epoch 166/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0026 - mae: 0.0289 - val_loss: 0.0026 - val_mae: 0.0321 Epoch 167/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0026 - mae: 0.0289 - val_loss: 0.0026 - val_mae: 0.0320 Epoch 168/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0026 - mae: 0.0289 - val_loss: 0.0026 - val_mae: 0.0320 Epoch 169/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0026 - mae: 0.0288 - val_loss: 0.0026 - val_mae: 0.0320 Epoch 170/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0026 - mae: 0.0288 - val_loss: 0.0026 - val_mae: 0.0320 Epoch 171/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0026 - mae: 0.0288 - val_loss: 0.0026 - val_mae: 0.0320 Epoch 172/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0026 - mae: 0.0288 - val_loss: 0.0026 - val_mae: 0.0319 Epoch 173/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0026 - mae: 0.0288 - val_loss: 0.0026 - val_mae: 0.0319 Epoch 174/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0026 - mae: 0.0287 - val_loss: 0.0026 - val_mae: 0.0319 Epoch 175/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0026 - mae: 0.0287 - val_loss: 0.0026 - val_mae: 0.0319 Epoch 176/2000 131/131 
[==============================] - 4s 30ms/step - loss: 0.0026 - mae: 0.0287 - val_loss: 0.0025 - val_mae: 0.0319 Epoch 177/2000 131/131 [==============================] - 4s 31ms/step - loss: 0.0026 - mae: 0.0287 - val_loss: 0.0025 - val_mae: 0.0319 Epoch 178/2000 131/131 [==============================] - 4s 30ms/step - loss: 0.0026 - mae: 0.0287 - val_loss: 0.0025 - val_mae: 0.0318 Epoch 179/2000 131/131 [==============================] - 4s 32ms/step - loss: 0.0026 - mae: 0.0286 - val_loss: 0.0025 - val_mae: 0.0318 Epoch 180/2000 131/131 [==============================] - 4s 33ms/step - loss: 0.0025 - mae: 0.0286 - val_loss: 0.0025 - val_mae: 0.0318 Epoch 181/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0025 - mae: 0.0286 - val_loss: 0.0025 - val_mae: 0.0318 Epoch 182/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0025 - mae: 0.0286 - val_loss: 0.0025 - val_mae: 0.0318 Epoch 183/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0025 - mae: 0.0286 - val_loss: 0.0025 - val_mae: 0.0318 Epoch 184/2000 131/131 [==============================] - 3s 24ms/step - loss: 0.0025 - mae: 0.0285 - val_loss: 0.0025 - val_mae: 0.0318 Epoch 185/2000 131/131 [==============================] - 3s 27ms/step - loss: 0.0025 - mae: 0.0285 - val_loss: 0.0025 - val_mae: 0.0317 Epoch 186/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0025 - mae: 0.0285 - val_loss: 0.0025 - val_mae: 0.0317 Epoch 187/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0025 - mae: 0.0285 - val_loss: 0.0025 - val_mae: 0.0317 Epoch 188/2000 131/131 [==============================] - 3s 24ms/step - loss: 0.0025 - mae: 0.0285 - val_loss: 0.0025 - val_mae: 0.0317 Epoch 189/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0025 - mae: 0.0284 - val_loss: 0.0025 - val_mae: 0.0317 Epoch 190/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0025 - mae: 0.0284 - 
val_loss: 0.0025 - val_mae: 0.0317 Epoch 191/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0025 - mae: 0.0284 - val_loss: 0.0025 - val_mae: 0.0316 Epoch 192/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0025 - mae: 0.0284 - val_loss: 0.0025 - val_mae: 0.0316 Epoch 193/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0025 - mae: 0.0284 - val_loss: 0.0025 - val_mae: 0.0316 Epoch 194/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0025 - mae: 0.0284 - val_loss: 0.0025 - val_mae: 0.0316 Epoch 195/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0025 - mae: 0.0283 - val_loss: 0.0025 - val_mae: 0.0316 Epoch 196/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0025 - mae: 0.0283 - val_loss: 0.0025 - val_mae: 0.0316 Epoch 197/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0025 - mae: 0.0283 - val_loss: 0.0025 - val_mae: 0.0316 Epoch 198/2000 131/131 [==============================] - 4s 28ms/step - loss: 0.0025 - mae: 0.0283 - val_loss: 0.0025 - val_mae: 0.0316 Epoch 199/2000 131/131 [==============================] - 4s 28ms/step - loss: 0.0025 - mae: 0.0283 - val_loss: 0.0025 - val_mae: 0.0316 Epoch 200/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0025 - mae: 0.0283 - val_loss: 0.0025 - val_mae: 0.0315 Epoch 201/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0025 - mae: 0.0282 - val_loss: 0.0025 - val_mae: 0.0315 Epoch 202/2000 131/131 [==============================] - 3s 27ms/step - loss: 0.0025 - mae: 0.0282 - val_loss: 0.0025 - val_mae: 0.0315 Epoch 203/2000 131/131 [==============================] - 4s 29ms/step - loss: 0.0025 - mae: 0.0282 - val_loss: 0.0025 - val_mae: 0.0315 Epoch 204/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0025 - mae: 0.0282 - val_loss: 0.0025 - val_mae: 0.0315 Epoch 205/2000 131/131 
[==============================] - 3s 26ms/step - loss: 0.0025 - mae: 0.0282 - val_loss: 0.0025 - val_mae: 0.0315 Epoch 206/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0025 - mae: 0.0282 - val_loss: 0.0025 - val_mae: 0.0315 Epoch 207/2000 131/131 [==============================] - 4s 33ms/step - loss: 0.0025 - mae: 0.0281 - val_loss: 0.0025 - val_mae: 0.0315 Epoch 208/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0025 - mae: 0.0281 - val_loss: 0.0025 - val_mae: 0.0314 Epoch 209/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0024 - mae: 0.0281 - val_loss: 0.0025 - val_mae: 0.0314 Epoch 210/2000 131/131 [==============================] - 4s 32ms/step - loss: 0.0024 - mae: 0.0281 - val_loss: 0.0025 - val_mae: 0.0314 Epoch 211/2000 131/131 [==============================] - 4s 34ms/step - loss: 0.0024 - mae: 0.0281 - val_loss: 0.0025 - val_mae: 0.0314 Epoch 212/2000 131/131 [==============================] - 4s 28ms/step - loss: 0.0024 - mae: 0.0281 - val_loss: 0.0025 - val_mae: 0.0314 Epoch 213/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0024 - mae: 0.0281 - val_loss: 0.0025 - val_mae: 0.0314 Epoch 214/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0024 - mae: 0.0280 - val_loss: 0.0025 - val_mae: 0.0314 Epoch 215/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0024 - mae: 0.0280 - val_loss: 0.0025 - val_mae: 0.0314 Epoch 216/2000 131/131 [==============================] - 3s 19ms/step - loss: 0.0024 - mae: 0.0280 - val_loss: 0.0025 - val_mae: 0.0313 Epoch 217/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0024 - mae: 0.0280 - val_loss: 0.0025 - val_mae: 0.0313 Epoch 218/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0024 - mae: 0.0280 - val_loss: 0.0025 - val_mae: 0.0313 Epoch 219/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0024 - mae: 0.0280 - 
val_loss: 0.0025 - val_mae: 0.0313 Epoch 220/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0024 - mae: 0.0280 - val_loss: 0.0025 - val_mae: 0.0313 Epoch 221/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0024 - mae: 0.0279 - val_loss: 0.0025 - val_mae: 0.0313 Epoch 222/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0024 - mae: 0.0279 - val_loss: 0.0024 - val_mae: 0.0313 Epoch 223/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0024 - mae: 0.0279 - val_loss: 0.0024 - val_mae: 0.0313 Epoch 224/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0024 - mae: 0.0279 - val_loss: 0.0024 - val_mae: 0.0313 Epoch 225/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0024 - mae: 0.0279 - val_loss: 0.0024 - val_mae: 0.0313 Epoch 226/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0024 - mae: 0.0279 - val_loss: 0.0024 - val_mae: 0.0312 Epoch 227/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0024 - mae: 0.0279 - val_loss: 0.0024 - val_mae: 0.0312 Epoch 228/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0024 - mae: 0.0279 - val_loss: 0.0024 - val_mae: 0.0312 Epoch 229/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0024 - mae: 0.0278 - val_loss: 0.0024 - val_mae: 0.0312 Epoch 230/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0024 - mae: 0.0278 - val_loss: 0.0024 - val_mae: 0.0312 Epoch 231/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0024 - mae: 0.0278 - val_loss: 0.0024 - val_mae: 0.0312 Epoch 232/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0024 - mae: 0.0278 - val_loss: 0.0024 - val_mae: 0.0312 Epoch 233/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0024 - mae: 0.0278 - val_loss: 0.0024 - val_mae: 0.0312 Epoch 234/2000 131/131 
[==============================] - 3s 21ms/step - loss: 0.0024 - mae: 0.0278 - val_loss: 0.0024 - val_mae: 0.0312 Epoch 235/2000 131/131 [==============================] - 5s 35ms/step - loss: 0.0024 - mae: 0.0278 - val_loss: 0.0024 - val_mae: 0.0312 Epoch 236/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0024 - mae: 0.0278 - val_loss: 0.0024 - val_mae: 0.0311 Epoch 237/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0024 - mae: 0.0278 - val_loss: 0.0024 - val_mae: 0.0311 Epoch 238/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0024 - mae: 0.0277 - val_loss: 0.0024 - val_mae: 0.0311 Epoch 239/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0024 - mae: 0.0277 - val_loss: 0.0024 - val_mae: 0.0311 Epoch 240/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0024 - mae: 0.0277 - val_loss: 0.0024 - val_mae: 0.0311 Epoch 241/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0024 - mae: 0.0277 - val_loss: 0.0024 - val_mae: 0.0311 Epoch 242/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0024 - mae: 0.0277 - val_loss: 0.0024 - val_mae: 0.0311 Epoch 243/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0024 - mae: 0.0277 - val_loss: 0.0024 - val_mae: 0.0311 Epoch 244/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0023 - mae: 0.0277 - val_loss: 0.0024 - val_mae: 0.0311 Epoch 245/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0023 - mae: 0.0277 - val_loss: 0.0024 - val_mae: 0.0311 Epoch 246/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0023 - mae: 0.0276 - val_loss: 0.0024 - val_mae: 0.0311 Epoch 247/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0023 - mae: 0.0276 - val_loss: 0.0024 - val_mae: 0.0310 Epoch 248/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0023 - mae: 0.0276 - 
val_loss: 0.0024 - val_mae: 0.0310 Epoch 249/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0023 - mae: 0.0276 - val_loss: 0.0024 - val_mae: 0.0310 Epoch 250/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0023 - mae: 0.0276 - val_loss: 0.0024 - val_mae: 0.0310 Epoch 251/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0276 - val_loss: 0.0024 - val_mae: 0.0310 Epoch 252/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0023 - mae: 0.0276 - val_loss: 0.0024 - val_mae: 0.0310 Epoch 253/2000 131/131 [==============================] - 3s 19ms/step - loss: 0.0023 - mae: 0.0276 - val_loss: 0.0024 - val_mae: 0.0310 Epoch 254/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0023 - mae: 0.0276 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 255/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0023 - mae: 0.0276 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 256/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0275 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 257/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0023 - mae: 0.0275 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 258/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0023 - mae: 0.0275 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 259/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0275 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 260/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0275 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 261/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0275 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 262/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0275 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 263/2000 131/131 
[==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0275 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 264/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0275 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 265/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0275 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 266/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0275 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 267/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0274 - val_loss: 0.0024 - val_mae: 0.0309 Epoch 268/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0274 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 269/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0274 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 270/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0023 - mae: 0.0274 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 271/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0274 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 272/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0023 - mae: 0.0274 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 273/2000 131/131 [==============================] - 3s 24ms/step - loss: 0.0023 - mae: 0.0274 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 274/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0023 - mae: 0.0274 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 275/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0023 - mae: 0.0274 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 276/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0023 - mae: 0.0274 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 277/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0023 - mae: 0.0274 - 
val_loss: 0.0024 - val_mae: 0.0308 Epoch 278/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0274 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 279/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 280/2000 131/131 [==============================] - 3s 19ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 281/2000 131/131 [==============================] - 4s 27ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 282/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 283/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 284/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 285/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 286/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 287/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 288/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 289/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 290/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 291/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 292/2000 131/131 
[==============================] - 3s 21ms/step - loss: 0.0023 - mae: 0.0273 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 293/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 294/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 295/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 296/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 297/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 298/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 299/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 300/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 301/2000 131/131 [==============================] - 3s 19ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 302/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 303/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 304/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 305/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 306/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0272 - 
val_loss: 0.0024 - val_mae: 0.0307 Epoch 307/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0272 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 308/2000 131/131 [==============================] - 2s 17ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 309/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 310/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 311/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 312/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 313/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 314/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 315/2000 131/131 [==============================] - 2s 17ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 316/2000 131/131 [==============================] - 2s 17ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 317/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 318/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 319/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 320/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 321/2000 131/131 
[==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 322/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 323/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 324/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0022 - mae: 0.0271 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 325/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 326/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 327/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 328/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 329/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 330/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 331/2000 131/131 [==============================] - 3s 19ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 332/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 333/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 334/2000 131/131 [==============================] - 3s 24ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 335/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0022 - mae: 0.0270 - 
val_loss: 0.0024 - val_mae: 0.0307 Epoch 336/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 337/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 338/2000 131/131 [==============================] - 3s 19ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 339/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 340/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 341/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 342/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 343/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 344/2000 131/131 [==============================] - 3s 19ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 345/2000 131/131 [==============================] - 3s 25ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 346/2000 131/131 [==============================] - 3s 24ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 347/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 348/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 349/2000 131/131 [==============================] - 3s 26ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 350/2000 131/131 
[==============================] - 3s 21ms/step - loss: 0.0022 - mae: 0.0270 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 351/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 352/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 353/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 354/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 355/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 356/2000 131/131 [==============================] - 3s 24ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 357/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 358/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 359/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 360/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 361/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 362/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 363/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 364/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0022 - mae: 0.0269 - 
val_loss: 0.0024 - val_mae: 0.0307 Epoch 365/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 366/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0022 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 367/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0307 Epoch 368/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 369/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 370/2000 131/131 [==============================] - 3s 24ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 371/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 372/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 373/2000 131/131 [==============================] - 3s 22ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 374/2000 131/131 [==============================] - 3s 21ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 375/2000 131/131 [==============================] - 3s 23ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 376/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 377/2000 131/131 [==============================] - 2s 18ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 378/2000 131/131 [==============================] - 2s 19ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 379/2000 131/131 
[==============================] - 3s 22ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 380/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308 Epoch 381/2000 131/131 [==============================] - 3s 20ms/step - loss: 0.0021 - mae: 0.0269 - val_loss: 0.0024 - val_mae: 0.0308

plot_train_history(history, 'Training and validation loss')

#model.save_weights('3 - LSTM statefull')

# Evaluate the trained model on the training set.
x = df_train[X_columns]
y = df_train[Y_columns]
x_norm = normalizeX(x)
y_norm = normalizeY(y)
y_hat_norm = predict(model, x_norm, y_norm, SEQUENCE_LENGTH, INPUT_d)
y_hat = denormalizeY(y_hat_norm)

# Exclude the first 40 time steps from the scores.
init_timesteps = 40
print("R2: %.2f of 1.0" % sklearn.metrics.r2_score(y.values[init_timesteps:], y_hat.values[init_timesteps:]))
print("MAE: %.2f m3/s" % sklearn.metrics.mean_absolute_error(y.values[init_timesteps:], y_hat.values[init_timesteps:]))
plot_data_with_temp_precip(x, y, y_hat, title="Training set", init_timesteps=init_timesteps);

# Plot the hydrological years 2001, 2002 and 2003 one by one.
# Only the first year skips the initial time steps.
for year in range(2001, 2004):
    s = df_train.hydrological_year_from == year
    if year == 2001:
        init_timesteps = 40
    else:
        init_timesteps = 0
    plot_data_with_temp_precip(x[s], y[s], y_hat[s], title="Training set", init_timesteps=init_timesteps);

# Accumulated observed vs. predicted discharge over the whole training set.
init_timesteps = 40
plot_accumulated(y[init_timesteps:], y_hat[init_timesteps:], title="%i–%i" % (2001, 2011));

R2: 0.83 of 1.0
MAE: 23.75 m3/s

Validation set

# Evaluate the trained model on the validation set.
x = df_test[X_columns]
y = df_test[Y_columns]
x_norm = normalizeX(x)
y_norm = normalizeY(y)
y_hat_norm = predict(model, x_norm, y_norm, SEQUENCE_LENGTH, INPUT_d)
y_hat = denormalizeY(y_hat_norm)

# Exclude the first 40 time steps from the scores.
init_timesteps = 40
print("R2: %.2f of 1.0" % sklearn.metrics.r2_score(y.values[init_timesteps:], y_hat.values[init_timesteps:]))
print("MAE: %.2f m3/s" % sklearn.metrics.mean_absolute_error(y.values[init_timesteps:], y_hat.values[init_timesteps:]))
plot_data_with_temp_precip(x, y, y_hat, title="Validation set", init_timesteps=init_timesteps);

# Plot the hydrological years 2011, 2012 and 2013 one by one.
# Only the first year skips the initial time steps.
for year in range(2011, 2014):
    s = df_test.hydrological_year_from == year
    if year == 2011:
        init_timesteps = 40
    else:
        init_timesteps = 0
    plot_data_with_temp_precip(x[s], y[s], y_hat[s], title="Validation set", init_timesteps=init_timesteps);

# Accumulated observed vs. predicted discharge over the whole validation set.
init_timesteps = 40
plot_accumulated(y[init_timesteps:], y_hat[init_timesteps:], title="%i–%i" % (2011, 2014));

R2: 0.74 of 1.0
MAE: 29.23 m3/s
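
The R2 score printed by sklearn.metrics.r2_score uses the same formula as the Nash–Sutcliffe efficiency: one minus the sum of squared errors divided by the sum of squared deviations of the observations from their mean. The following is a minimal NumPy sketch of that formula, not code from this notebook. The function name nash_sutcliffe_efficiency and the discharge values are made up for illustration.

```python
import numpy as np


def nash_sutcliffe_efficiency(observed, simulated):
    """Return 1 - SS_res / SS_tot for two equally long series."""
    observed = np.asarray(observed, dtype=float).ravel()
    simulated = np.asarray(simulated, dtype=float).ravel()
    if observed.shape != simulated.shape:
        raise ValueError("observed and simulated must have the same length")
    # Sum of squared prediction errors.
    residual_sum = np.sum((observed - simulated) ** 2)
    # Sum of squared deviations of the observations from their own mean.
    total_sum = np.sum((observed - observed.mean()) ** 2)
    return 1.0 - residual_sum / total_sum


# Made-up discharge values in m3/s, for illustration only.
q_obs = [10.0, 20.0, 30.0, 40.0]
print(nash_sutcliffe_efficiency(q_obs, q_obs))       # perfect prediction: 1.0
print(nash_sutcliffe_efficiency(q_obs, [25.0] * 4))  # mean prediction: 0.0
```

The two printed values correspond to the reference points mentioned in the abstract: 1.00 for a perfect prediction and 0.00 for always predicting the observed mean.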

TODO

- Repeat the training 10 times and calculate the mean and standard deviation of the performance

- Implement a new RNN architecture
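
The first item could be sketched roughly as follows. This is only an outline under assumptions: summarize_runs and fake_train_and_score are hypothetical names, and the stand-in scoring function returns placeholder numbers rather than real results. In practice it would be replaced by a function that seeds the random generators, builds and fits the model, and returns the validation R2.

```python
import statistics


def summarize_runs(train_and_score, n_runs=10):
    """Call train_and_score(seed) n_runs times; return (mean, std, scores)."""
    scores = [train_and_score(seed) for seed in range(n_runs)]
    # statistics.stdev is the sample standard deviation (n - 1 denominator).
    return statistics.mean(scores), statistics.stdev(scores), scores


def fake_train_and_score(seed):
    # Placeholder only: a real version would train the LSTM with this seed
    # and return the R2 on the validation set.
    return 0.70 + 0.01 * (seed % 5)


mean_r2, std_r2, scores = summarize_runs(fake_train_and_score)
print("R2: %.2f ± %.2f over %i runs" % (mean_r2, std_r2, len(scores)))
```

Passing the seed into the training function keeps each run reproducible, so a single outlier can be re-run and inspected later.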