Tutorial 7 - The EU's Labour Market

Table of Contents

2.1 Labour market states

2.2 Transition rates

2.3 Steady state

2.4 Limitations

3. Data and methodology

3.1 Data

3.2 Technical setup

4. Data handling

4.1 Introduction to pandas

4.2 Technical toolkit

4.2.1 Audit

4.2.2 Cleaning

4.2.3 Transformation

4.2.4 Controlling

4.3 Regression datasets

4.3.1 GDP growth on unemployment rates of age groups

4.3.2 GDP growth on unemployment rates of education levels

4.3.3 Job finding probability on GDP per capita of age groups

5. Data visualization

5.1 Introduction to plotting libraries

5.1.1 Matplotlib

5.1.2 Seaborn

5.2 Plotting

5.2.1 Correlation

5.2.2 Divergence

5.2.3 Ranking

5.2.4 Composition

5.2.5 Time evolution

5.2.6 Advanced plots

6. Statistical analysis

6.1 Introduction to statsmodels

6.2 Simple linear OLS regression

6.2.1 GDP growth on unemployment rates of age groups

6.2.2 GDP growth on unemployment rates of educational attainment levels

6.2.3 Job finding probability on GDP per capita of age groups

6.3 Multiple regression

6.3.1 Unemployment rates on transition rates

6.3.2 Model performance on transition rates

6.4 Discussion

7. Conclusion

Introduction¶

Hello and we welcome you to this tutorial! The aim of this tutorial is to present the features of the European labor market in Python consistently and with commentary. From a programming perspecitve, the main objective of the tutorial is to learn how to prepare and analyse data. This entails several subprocesses such as the data import, cleaning, transformation, visualization and inspection. From an economic point of view, the main objective is to construct a simple but useful model of a labour market while characterizing it's equilibrium. As it will become clear, using Python to implement an economic model allows us to demonstrate features of the model that would otherwise be more difficult to understand. Before starting, let's not forget that the focus of this series of tutorials is mainly to transmit knowledge regarding programming with Python, and not pure economic theory. We invite you have a look at the following agenda, which will provide you with an overview of what you can expect from this tutorial.

Structure and content of this tutorial

- Theoretical framework: First things first!

Setting-up the theoretical background underlying this tutorial. We construct step by step a simple model for a labour market and explain concepts such as transition rates and steady state equilibria

- Data and methodology: With great data comes great responsibility!

A mindful introduction to the database that will be used throughout the tutorial.

- Data handling: Let's get to programming!

The introduction to the always forgotten, but most important programming apsect, the data handling process. This process audits the data and ensures that it is clean and well-transformed, thus ensuring it's integrity and hence its usfulness for statistical analysis.

- Data visualization: Gain the ability to build nice and neat plots!

Multiple visualization methods in Python exist, we present and explain a subset of the most essential data visualizations. This section will equip you with the ability to construct nice and neat plots in an efficient and effective manner. Don't forget as this will also be extremely useful to qualitatively discover the patterns in your data.

- Statistical analysis: Let's examine the data patterns on a statistical level!

After having cleaned and visualized the data, we will perform statistical models on it. More specifically, we will run simple and multiple OLS regressions to tease out causal relationships between indicators of the labour market and macroeconomic variables.

- Summary and discussion: We hope that you learned a lot!

This is the conclusion of our tutorial. This section will provide you with a summary and the main take-aways from what you have learned. We will provide you with additional resources where you can put theory into practice. We thereby hope that this will support you on your learning process and bring you even further with your programming skills.

We really hope that this tutorial will provide you with a solid economic foundation and a technical toolkit in methods for quantitative analysis with Python.

Enjoy and have a great journey!

Theoretical framework¶

The following section provides and explains essential theoretical concepts that are required in order to understand the mechanics of the labour market model. First, this section presents the notion labour market states. Second, we will show how we can model the transition between these states. Third, we will introduce the economic equilibrium of the labour market model, the steady state. Finally, we will present the essential assumptions on which the model is based. This will give you a critical view on the applicability and limitations of the model.

Labour market states¶

Most countries in the world have established a system and infrastructure to record and approximate the employment and unemployment rates of the labour force as accurately as possible. However, the methodology for calculating these rates often varies among countries. Different definitions of employment and unemployment, as well as different data sources can lead to different results. Here, we will agree on a clear definition of these concepts following the perception of the statistical office of the European Union. Thereby, the labour market model assigns each individual of a population to one of the following three labour market states.

- Employed ($E$): The number of people engaged in productive activities in an economy

- Unemployed ($U$): The number of people not engaging in productive activites but available and actively seeking to

- Inactive ($I$): The number of people not being part of the labour force

Each of these states, is expressed by an absolute number and describes a labour market as of a specific point in time. For this reason, those indicators are so-called stock variables. We can further use those variables, in order to construct more meaningful indicators that characterize a labor market.

Employment Rate

The percentage of employed individuals in relation to the comparable population

Unemployment Rate

The percentage of unemployed individuals in relation to the comparable labour force

Participation Rate

The percentage of individuals in the labout force in relation to the comparable population

The employment rate, the unemployment rate and the participation rate are important indicators for understanding a labour market. You have probably noticed that there is always a comparable population or labour force for which this indicator holds. This is because those rates can be expressed either for geographical areas or even for particular age groups or educational attainment levels. Therefore, an important characteristic of the labour market model is that its structure allows for age- and skill-specific segmentation of labour markets and their indicators. This feature will become useful when we will try to understand the distribution of labour market indicators within specific segments.

Finally, note the difference for the computation labour market rates. While we put into relation employment and participation with the population, we compare unemployment only to the labour force. The next section explains and illustrates the definition of the labour force. For instance, unemployment is defined as having the desire and availability to work while having actively sought work within the past four weeks. This excludes for example prisoners or disabled people, who are not considered unenmployed but rather out of the labor force. For a complete definition of each labor market state we encourage you to visit the 'Main Concepts' section on the webpage of the statistical office. The following figure illustrates the categorization of a population into mutually exclusive and collectively exhaustive labour market states with main characteristics defined correspondingly.

Transition rates¶

Having introduced a labour market model, which assigns individuals to distinct states, we can further model the transition of individuals between labour market states. For example, if some unempolyed people find employment over time, the unenployment rate will decrease while the employment rate will increase. The flows of people from one state to another are used to compoute the so-called transition rates. Those variables are important indicators for understanding the development of a labour market under study. They express the evolvement of a labour market as of a specific time period. For this reason, those variables are so-called flow variables. Transition rates tell us how likely it is for an individual to move from one state to another. Since there are three possible states a person can belong to ($E$, $U$, $I$), there are in total nine possible transitions that can occurr within a marginal time period (from $t$ to $t+1$).

| t / t+1 | E | U | I |

|---|---|---|---|

| E | EE | EU | EI |

| U | UE | UU | UI |

| I | IE | IU | II |

For example, the transition rate from unemployment to unemployment ($UE$) of 5% indicates the percentage of people unemployed at the beginning of the period that eventually found employment by the end of the period, thus number of migrating individuals from unemployed to employed as percentage uf unemployed individuals. We are now able to formulate a general formula for computing transition rates.

$$Transition Rate = \frac{S_{t}S_{t+1}}{Stock_{S_{t}}}\tag{4}$$For instance, if we would like to compute the transition rate from unemployment to employment, we need to divide the absolute number of transitioning individuals (from unemployment to employment) by total stock of unemployed people in the initial period. In summary, to compute transition rates we need to follow the following computation steps:

- We compute the stock of all individuals in each state ($E$, $U$, $I$) at time $t$

- We compute the stock of all individuals in each state ($E$, $U$, $I$) at time $t + 1$

- We compute for each combination of states how many individuals migrated from a state ($S_{t}$) to another ($S_{t+1}$). We denote all combinations by $SS$

- We divide $SS$ by the stock of individuals in the original state

As you will progress in this tutorial, you will encounter this theoretical knowledge again in section 4.2. Transition rates as we will compute transitions rates with real-world data using exactly this formula! As we are now able to calculate those variables, we can use transition rates to develop two other useful indicators for a labor market.

Rate of Job Finding

The percentage of individuals entering the employment state

Rate of Job Separation

The percentage of individuals entering the unemployment state

Intuitively, $f$ describes the (constant) probability for an unemployed individual to find a job and $s$ describes the (constant) probability for an employed person to lose her/his job. These two equations are extremely useful, because we can now describe the dynamics of our labour market model. Lastly, as the dynamics of the transition rates depend on each other, we can identify a determinisic and stable pattern. At this point, we will introduce an equilibrium of the labour market model, the steady state.

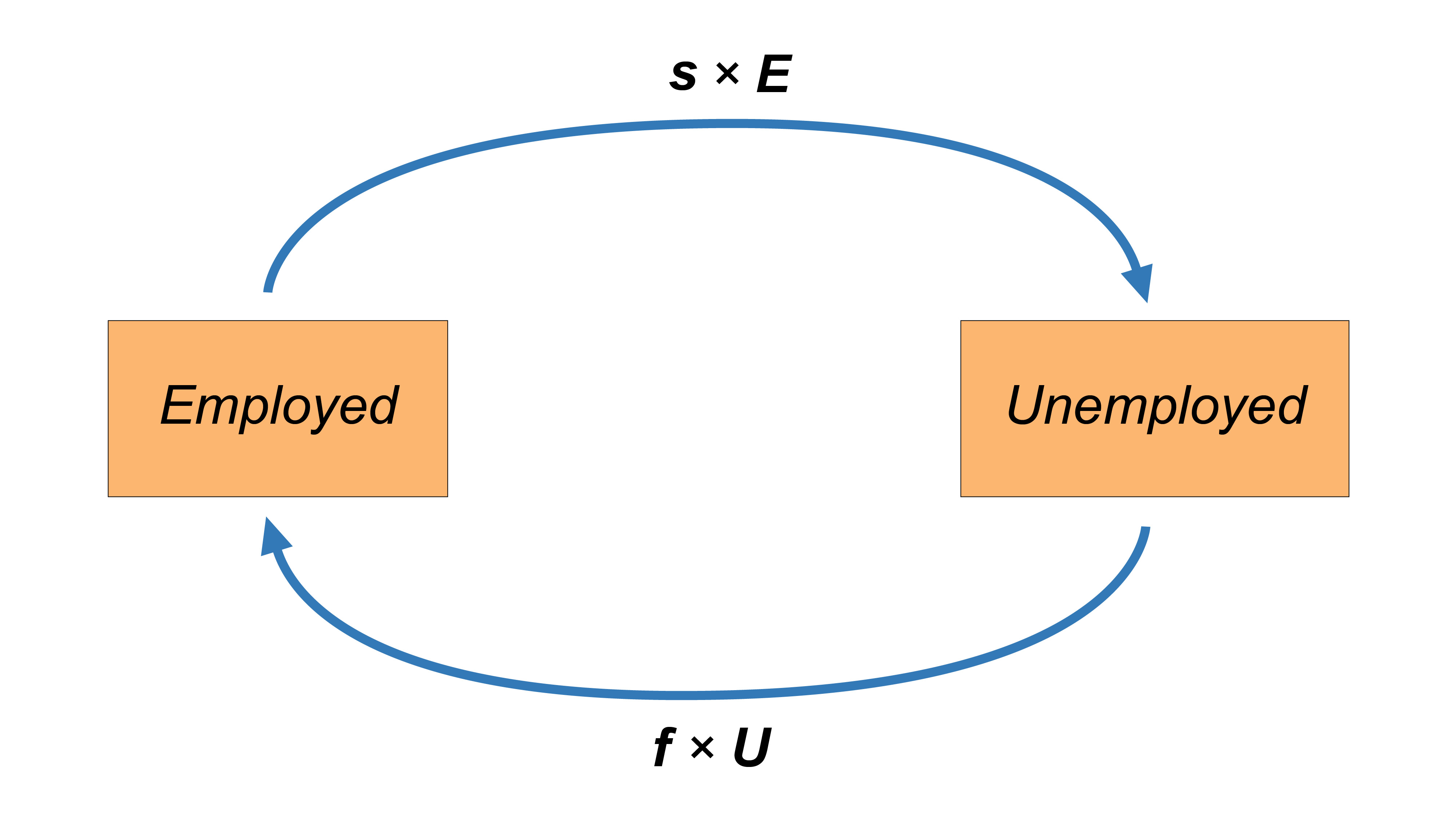

Steady state¶

In economics, a system or a process is said to be in steady state if the variables which define the behavior of the system or the process are unchanging in time. In economic theory, studying steady states is highly important, because if we assume that an economy converges to a steady state given certain factors, then it becomes interesting to evaluate the dynamics and to understand how the economy converges to that steady state. We will see that understanding those dynamics are crucial in order to formulate useful policy recommendations. In this tutorial we will pay particular attention to the steady state of the unemployment rate. The unemployment rate can possess the characteristics of a steady state if and only if the number of individuals entering the unemployment state and the number of individuals exiting the unemployment state are equal. The following figure illustrates a situation where the antagonist flow variables cancel each other out.

We will now apply the concept of steady state to our labour market model. Formally, a labour market is said to possess an unemployment rate persisting in a steady state if the fraction of unemployed people finding a job ($f \times U$) and the fraction of employed individuals losing their job ($s \times E$) is equal. Therefore, the condition for a steady state such that the unemployment rate stays constant from period $t$ to period $t+1$ is given by the following equation.

$$fU=sE\tag{7}$$Substituting some terms and re-arranging we can easily compute the formula for the steady steate unenmployment rate in two simple steps:

- We exploit the fact that $E = N - U$, i.e. the number of employed people equals labor force minus the number of unemployed persons:

$ \hspace{12.2cm} \begin{align} fU & = sE \\ & = s(N-U) \\ & = sN - sU \end{align} $

- We re-arrange and solve for $\frac{U}{N}$:

The steady state unemployment rate is then given by:

$$ UR_{ss} = \frac{U}{N} = \frac{s}{s + f} = \frac{1}{1 + \frac{s}{f}}\tag{8} $$In section [4.3. Steady state](#steady state) of this tutorial we will focus on computing the steady state unemployment rates with real world data. This will allow us to see the predictive power of our model when put into practice in empirical research. To understand how useful equation $(8)$ is and how this understanding is essential for formulating policy reccomendations, let's look at an example. Assume an economy in which each quarter 20% of unemployed individuals find a job and 2% of employed individuals lose their job, hence:

- $f$ = 0.2

- $s$ = 0.02

The steady state unemployment rate is then given by:

$$ \frac{s}{s + f} = \frac{0.02}{0.02 + 0.2} \approx 0.09, \text{or} \; 9\% $$It becomes clear that a policy aimed at reducing this unemployment rate, will only succeed one of the following options. First, steady state unemployment rate decreases if a the policy manages to increase $f$, i.e. the probability of finding a job. This could be done by investing in education and job placement programs. Second, steady state unemployment rate decreases if the policy manages to decrease $s$, i.e. the probability of losing a job. This could be done by ensuring a slow and sustainable growth of the overall economy, without major recessions. In next and last part of this section, will sensitize on the assumptions underlying the labour market model as well as its practical limitations.

Limitations¶

The labour market model is build on the assumption that transition can happen within a specified time period (from $t$ to $t+1$). Hence, the rate of job finding and the rate of job separation do not exactly correspond but only approximate the probability to move from one state to another. In fact, the labour market model assumes transitions in discrete time. In reality, an individual can change the state several times within a time period. Hence, individuals move across states in a continuous way and the computed transition rates will not fully correspond to the true probability to move acrosss states. But clearly, under the assumption of the law of large numbers, we can expect computed transition rates to be more accurate the more often they are calculated and therefore they will approximate the instantaneuous probabilities to move across states. Instantaneuos transition probabilities can be seen as mathematical adjustments to the computation of transition rates we saw so far. With instantaneous probabilities, the steady-state unemployment rate can also be rewritten as:

$$UR_{SS} = \frac{\pi^{EN}\pi^{NU}+\pi^{NE}\pi^{EU}+\pi^{NU}\pi^{EU}}{(\pi^{UN}\pi^{NE}+\pi^{NU}\pi^{UE}+\pi^{NE}\pi^{UE})+(\pi^{EN}\pi^{NU}+\pi^{NE}\pi^{EU}+\pi^{NU}\pi^{EU})}\tag{10}$$where $\pi^{SS}$ are the instantaneous probabilities of transitioning from one state to the other. For the purpose of our tutorial, we will make use of transition rates as an approximation for these instantanaeous probabilities, hence we assume that the following equation hold:

$$s = {\pi^{EN}\pi^{NU}+\pi^{NE}\pi^{EU}+\pi^{NU}\pi^{EU}}\tag{11}$$$$f = {\pi^{UN}\pi^{NE}+\pi^{NU}\pi^{UE}+\pi^{NE}\pi^{UE}}\tag{12}$$Altough this setting allows us to build a model that resembles more closely to reality (were changes happen rather in a continuous way than in discrete time) throughout the turorial we will use the classical transition rates discussed in the previous section, since they are easier to deal with and confer nontheless great explantory power to the model.

Data and methodology¶

Having laid down a theoretical foundation of the labour market model, it is now time to devote the attention to the transition from theory to practice. This transition can be seen as successful if we as the researchers are not interrupted within our data analysis. Such an interruption can either happen because the data integrity becomes questionable or because there are technical issues which have not been adressed before starting the programming. Therefore, the following section focuses on providing information about the data source, its provider, and about the data collection process. The latter part of this section is designated to set the stage and provide the technical prerequisites for the programming part.

Data¶

Data source

In order to compare the different dynamics of countries related to transition rates and unemployment rates, data needs to be collected systematically and in a reliable manner. All data used in this tutorial is extracted from Eurostat which is an adminitrative branch of the European Commission located in Luxembourg. Its responsability is to provide statistical information to the institutions of the European Union and to encourage the harmonisation of statistical methods in order to ease comparison between data. In this section, we will discuss how Eurostat gather data and the degree of relability of its operations. Eurostat publishes its statistical database online for free on its website.

Data collection

The data that will interest us in this tutorial are the one related to the European labor market.

The European Labor Force Survey is a survey conducted by Eurostat in order to find those data. The latter are obtained by interviewing a large sample of individuals directly. This data collection takes place over on a monthly, quarterly and annually basis.

The European Labor Force Survey collects data by four manners:

- Personal visits

- Telephone interviews

- Web interviews

- Self-administered questionnaires (questionnaire that has been designed specifically to be completed by a respondent without intervention of the researchers)

Data integrity

For the sake of this tutorial, there are factors to be considered that could possibly affect the outcome of the analysis:

- Population adjustments are revised at fixed time intervals on the basis of new population censuses

- Reference periods may not have remained the same for a given country due to the transition to a quarterly continuous survey

- Countries may have modified sample designs

- Countries may have modified the content or order of their questionnaire

Since 1983 however, the statistical office of the European Union has endeavored to establish a greater comparability between the results of successive surveys. This has been achieved mainly through increased harmonisation, greater stability of content and higher frequency of surveys. Furthermore, Eurostat makes considerable efforts to perform a structured approach to data validation. It thereby defines common standards for validation, providing common validation tools or services to be used. Validation rules are jointly designed and agreed upon and the resulting reglement is documented using common cross-domain standards, with clear validation responsibilities assigned to the different groups participating in the production process of European statistics. A more detailed perspective on the data validation process can be gathered on their website.

Metadata of datasets

As this tutorial will introduce a programmatic access to the datasets, it is not required to download any local files to follow the analysis. In the following sections, the tutorial will use three datasets provided by Eurostat, namely for unemployment rates, for the transitions and for GDP data. Each of these datasets contain observations for different European countries accross sex, age and citizenship. The following table presents the metadata of the datasets that is valid as of the date of submission of this tutorial.

| Unemployment rates | Labour market transitions | Real GDP growth rates | |

|---|---|---|---|

| File | 'lfsq_urgan' | 'lfsi_long_q' | 'tec00115' |

| Time coverage | 1998Q1 - 2020Q4 | 2010Q2 - 2020Q4 | 2009 - 2020 |

| Number of values | 1,632,066 | 293,058 | 949 |

| Last data update | 13-04-2021 | 13-04-2021 | 23-04-2021 |

Variables:

unit: Data format, either percentage (PC) or absolute in thousands (THS)sex: Sex, either male (M), female (F) or total (T)age: Age group, from 15 to 74 years, in different ranges, not mutually exclusivecitizen: Country of citizenship, e.g. from EU28 countries (EU28_FOR) or total (TOTAL)geo\time: ISO alpha two-letter country codes (e.g. CH)na_item:s_adj: Flag for whether data is seasonally adjusted (SA) or not (NSA)indic_em: Employment indicator of transition (e.g. U_E)

As it will be shown in section 4.2 Data Import, we will access these three databases through an API. An API (application programming interface) allows interactions such as data transmission between multiple software applications. In our case, we will connect to the API of Eurostat, and by interacting with it we will be able to retrieve the three datasets directly here in Python.

Technical setup¶

Package management

Python is considered a "batteries included" language which means that its rich and versatile library is immediately available without the user being forced to download a large amount of packages. At the same time, Python has an active community that contributes to the development of an even bigger set of packages. Many of these packages enhance the simplicity and the computational power of the code. In order to be able to access these sets of powerful modules, it is neccessary to install the packages and load them into the memory. For the installation of a package, one can use the standard package manager of python, named pip. The simple terminal or jupyter command

pip install <package>

will do the work and intialize a number of subprocesses, namely the identification of base requirements, the resolvement of existent environemnt dependencies and finally, the installation of the desired package. For further information about the functionality and features of pip one can consult the website. For now, let's install some packages that you will need for following this tutorial. If you should have problems in importing other packages in the second cell, you can just add them to the first cell and install them.

# You can skip this cell if you have the packages already installed in your environment

pip install eurostat

pip install geopandas

pip install pycountry

pip install squarify

Module import

For the sake of this tutorial, the required libraries are imported below. Note that the interpreter will raise a ModuleNotFoundError if a package has not been installed yet. The tutorial will address each library in detail, but for now, we simply run the below cell to set the stage for the analysis.

import eurostat

import geopandas as gpd

import math

import matplotlib

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import pycountry as pyc

import statsmodels.api as sm

import datetime

import seaborn as sns

import matplotlib.lines as mlines

import squarify

import random

Data handling¶

The data handling process ensures that the information can be presented in a way that is useful to analyze. This includes the development of policies and procedures to manage data with regards to its storage, audit, cleaning and controlling. Most importantly, the data handling process is an iterative process that has to be adapted to the needs of the current task. The following section is designated to load the datasets and to conduct an extensive amount of inspection that ensures the integrity and hence its usfulness for the analysis. In order to programmatically address the process of data handling, this tutorial introduces one of the most popular and most used python libraries.

Introduction to Pandas¶

Environment

The pandas library takes its name from an acronym of "panel data", which refers to the tabular format of its data structure. The previous tutorials have introduced already built-in data structures such as lists, sets, tuples and dicitionaries. With pandas, we introduce two new data structures, namely pandas.Series for one-dimensional arrays and the pandas.DataFrame for two-dimensional tabular structures. The pandas library shares many similarities with numpy as it adopts its style of array-based computing. The biggest difference however is that pandas is designed for working with tabular and heterogeneous data. The numpy library, by contrast, is best suited for working with homogeneous and numerical data. In order to simplify the code that will follow it is helpful to import pandas as pd such that we can reference to the library and its modules with a shorter name. Indeed, you can check in the previous section that we imported pandas and many other libraries using the as command, in order to more easily reference to each library in the future.

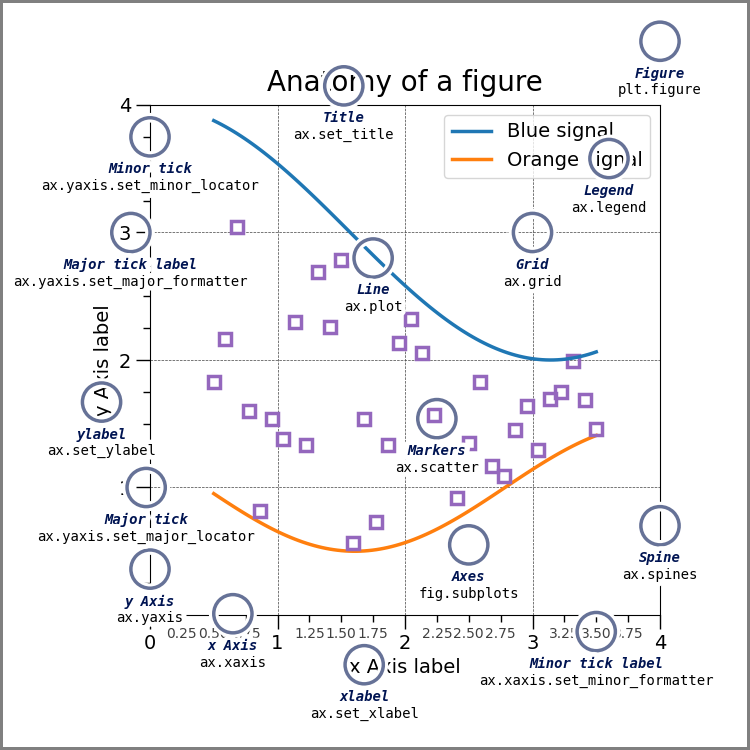

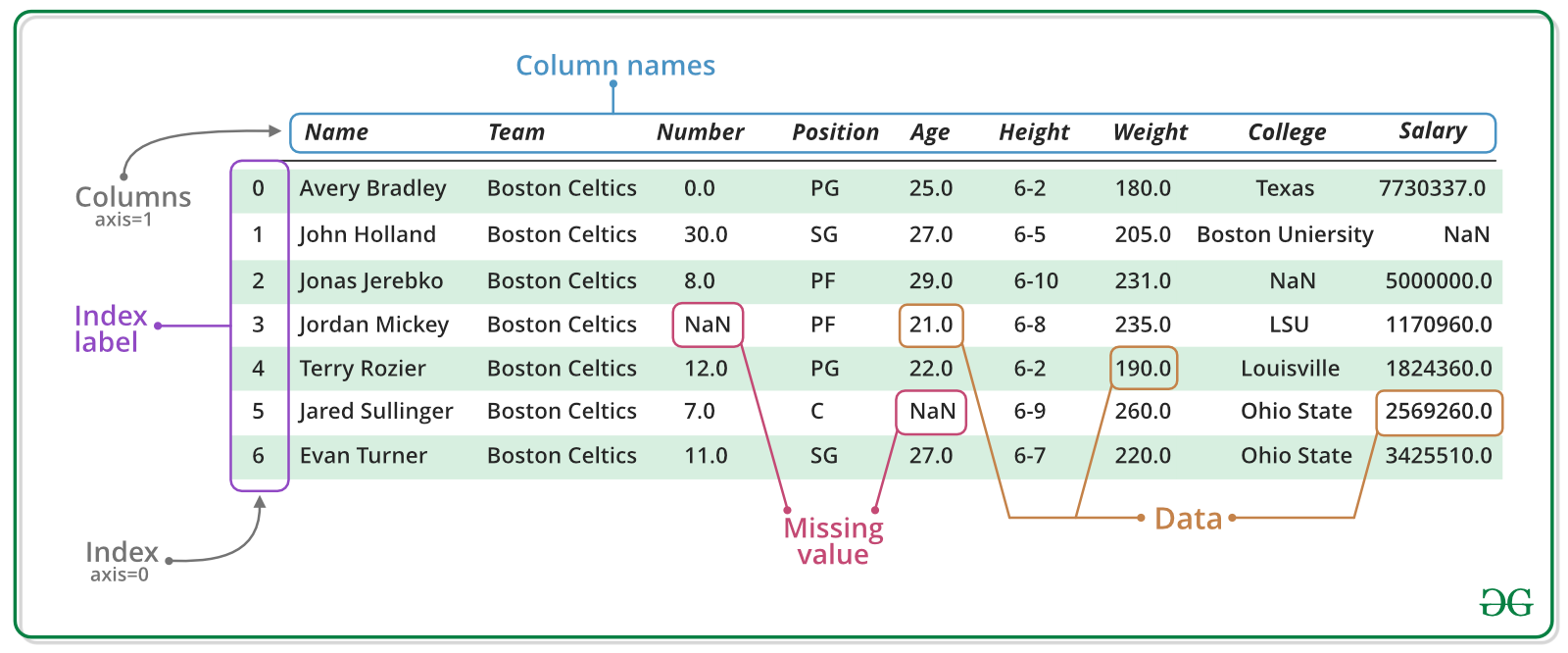

Data structures

The pd.Series is an object of the pandas library designed to represent one-dimensional and heterogeneos data structures. The array is characterized by its name, its values and and index. Similarily, the pd.DataFrame is a two-dimensional data structure consisting of a concatenation of pd.Series. As the tabular format can be thought of a spreadsheet, the columns must be of the same length, however they can accommodate heterogeneous data types. The pd.DataFrame is characterized by its column names, its column values and its row indices. The ability of indexing is crucially important as it enables to filter the data or to access specific data points in order to read them or to mutate them in place. The following figure should summarize and illustrate the anatomy of the introduced data structures related to pandas.

Instantation

The pd.DataFrame can be created in two different ways. First, this can be achieved by passing suitable data structure as an argument in the object caller. Suitable data structures are numpy.arrays, or a combination of dicitionaries and other data structures such as lists or pd.Series. Second, a pd.DataFrame can be created by reading suitable data files. An overview of the possible writer functions can be found here. The following cells showcase the different ways in which a pd.DataFrame can be created by using the object caller. Note that the resulting table will always be the same.

# Creation of a DataFrame using a two-dimensional numpy.array

np_array = np.array([[1, 2, 3],

[4, 5, 6],

[7, 8, 9]])

df = pd.DataFrame(np_array, columns=['Employed', 'Unemployed', 'Inactive'], index=['CH', 'FR', 'DE'])

df

| Employed | Unemployed | Inactive | |

|---|---|---|---|

| CH | 1 | 2 | 3 |

| FR | 4 | 5 | 6 |

| DE | 7 | 8 | 9 |

# Creation of a DataFrame using a dictionary of dictionaries

dic = {'Employed' : {'CH':1, 'FR':4, 'DE':7},

'Unemployed' : {'CH':2, 'FR':5, 'DE':8},

'Inactive' : {'CH':3, 'FR':6, 'DE':9}}

df = pd.DataFrame(dic, index = ['CH', 'FR', 'DE'])

df

| Employed | Unemployed | Inactive | |

|---|---|---|---|

| CH | 1 | 2 | 3 |

| FR | 4 | 5 | 6 |

| DE | 7 | 8 | 9 |

# Creation of a DataFrame using a dictionary of lists

dic = {'Employed' : [1, 4, 7],

'Unemployed' : [2, 5, 8],

'Inactive' : [3, 6, 9]}

df = pd.DataFrame(dic, index = ['CH', 'FR', 'DE'])

df

| Employed | Unemployed | Inactive | |

|---|---|---|---|

| CH | 1 | 2 | 3 |

| FR | 4 | 5 | 6 |

| DE | 7 | 8 | 9 |

Pandas functionalities

Some other useful functions for dataframes in pandas are:

df['Employed']: Selecting a column e.g. column 'Employed'df.iloc: Accessing the data via index referencedf.loc: Accessing the data via label referencedf.drop: Dropping columnsdf.T: Transposing the dataframedf.sort_index: Sorting the data by axisdf.sort_values: Sorting by specific columnsdf.mean: Calculating the mean row or column wisepd.merge: Merging two dataframesdf.append: Appending dataframes to one anotherpd.concat: Concatenation of two dataframes

The pandas documentation provides a very good description of what you can do with dataframes and if there is something that interests you beyond the application of this tutorial, it may very likely to be found there. Having introduced pandas as a useful library for data handling, we can start to put theory into practice. In the next step, we will be making queries to the REST API of Eurostat and as a response, we will receive a pd.DataFrame.

Technical toolkit¶

The statistical office of the European Union offers the access of a data base through the REST API. One python package that enables to access this interface is the eurostat library. Its website provides useful documentation of the functionalities of the package. For the sake of this tutorial, we can use the get_data_df method by passing as arguments the filename of the dataset.

The following cells showcase a structured approach to the data handling process. For the sake of a better readability of this notebook, we will insert descriptive comments in-line. The cell bellow calls the functions to store the datasets by specifying the filenames for the unemployment rates, the labour market transitions and the real GDP growth rates. As Eurostat flags missing values with a colon, we can pass the argument flags=False to replace those values with a np.nan object which in data science represents a common placeholder for missing datapoints. The function returns the corresponding datasets as pd.DataFrame and, as you will see, we will store them into the variables udf, tdf and gdf.

udf = eurostat.get_data_df('lfsq_urgan', flags=False) # Unemployment rates

tdf = eurostat.get_data_df('lfsi_long_q', flags=False) # Labour market transitions

gdf = eurostat.get_data_df('tec00115', flags=False) # Real GDP growth rates

# Job finding prob inputs

age_UE = eurostat.get_data_df('lfsi_long_e01', flags=False) # Labour market transitions

age_IE = eurostat.get_data_df('lfsi_long_e06', flags=False) # Labour market transitions

age_U = eurostat.get_data_df('une_rt_a', flags=False) # Labour market transitions

Audit¶

In order to make a first verfication of the datasets, we will perform an audit. The goal of our audit is to examine the data with regards to its structure and its content. Thereby we can identify how we have to clean and transform the datasets and we can anticipate if we will encounter issues by doing so. The pandas library provides us with useful methods to perform a first inspection. Below we will focus on inspecting the unemployment rates. We invite you to do the same with the other datasets.

udf.shape # Returns the shape: (rows, columns)

(34736, 97)

udf.columns # Returns the column names

Index(['unit', 'sex', 'age', 'citizen', 'geo\time', '2020Q4', '2020Q3',

'2020Q2', '2020Q1', '2019Q4', '2019Q3', '2019Q2', '2019Q1', '2018Q4',

'2018Q3', '2018Q2', '2018Q1', '2017Q4', '2017Q3', '2017Q2', '2017Q1',

'2016Q4', '2016Q3', '2016Q2', '2016Q1', '2015Q4', '2015Q3', '2015Q2',

'2015Q1', '2014Q4', '2014Q3', '2014Q2', '2014Q1', '2013Q4', '2013Q3',

'2013Q2', '2013Q1', '2012Q4', '2012Q3', '2012Q2', '2012Q1', '2011Q4',

'2011Q3', '2011Q2', '2011Q1', '2010Q4', '2010Q3', '2010Q2', '2010Q1',

'2009Q4', '2009Q3', '2009Q2', '2009Q1', '2008Q4', '2008Q3', '2008Q2',

'2008Q1', '2007Q4', '2007Q3', '2007Q2', '2007Q1', '2006Q4', '2006Q3',

'2006Q2', '2006Q1', '2005Q4', '2005Q3', '2005Q2', '2005Q1', '2004Q4',

'2004Q3', '2004Q2', '2004Q1', '2003Q4', '2003Q3', '2003Q2', '2003Q1',

'2002Q4', '2002Q3', '2002Q2', '2002Q1', '2001Q4', '2001Q3', '2001Q2',

'2001Q1', '2000Q4', '2000Q3', '2000Q2', '2000Q1', '1999Q4', '1999Q3',

'1999Q2', '1999Q1', '1998Q4', '1998Q3', '1998Q2', '1998Q1'],

dtype='object')

udf.head(10) # Returns first 10 rows

| unit | sex | age | citizen | geo\time | 2020Q4 | 2020Q3 | 2020Q2 | 2020Q1 | 2019Q4 | ... | 2000Q2 | 2000Q1 | 1999Q4 | 1999Q3 | 1999Q2 | 1999Q1 | 1998Q4 | 1998Q3 | 1998Q2 | 1998Q1 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | PC | F | Y15-19 | EU15_FOR | AT | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 1 | PC | F | Y15-19 | EU15_FOR | BE | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 2 | PC | F | Y15-19 | EU15_FOR | BG | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 3 | PC | F | Y15-19 | EU15_FOR | CH | NaN | NaN | NaN | NaN | 11.9 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 4 | PC | F | Y15-19 | EU15_FOR | CY | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 5 | PC | F | Y15-19 | EU15_FOR | DE | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 6 | PC | F | Y15-19 | EU15_FOR | DK | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 7 | PC | F | Y15-19 | EU15_FOR | EA19 | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 8 | PC | F | Y15-19 | EU15_FOR | EE | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 9 | PC | F | Y15-19 | EU15_FOR | EL | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

10 rows × 97 columns

udf.tail(10) # Returns last 10 rows

| unit | sex | age | citizen | geo\time | 2020Q4 | 2020Q3 | 2020Q2 | 2020Q1 | 2019Q4 | ... | 2000Q2 | 2000Q1 | 1999Q4 | 1999Q3 | 1999Q2 | 1999Q1 | 1998Q4 | 1998Q3 | 1998Q2 | 1998Q1 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 34726 | PC | T | Y70-74 | TOTAL | NO | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 34727 | PC | T | Y70-74 | TOTAL | PL | NaN | NaN | NaN | NaN | NaN | ... | 4.2 | 4.9 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 34728 | PC | T | Y70-74 | TOTAL | PT | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 34729 | PC | T | Y70-74 | TOTAL | RO | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 34730 | PC | T | Y70-74 | TOTAL | RS | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 34731 | PC | T | Y70-74 | TOTAL | SE | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 34732 | PC | T | Y70-74 | TOTAL | SI | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 34733 | PC | T | Y70-74 | TOTAL | SK | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 34734 | PC | T | Y70-74 | TOTAL | TR | 3.0 | 1.6 | 1.0 | 0.9 | 2.2 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 34735 | PC | T | Y70-74 | TOTAL | UK | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

10 rows × 97 columns

udf.info() # Prints the summary of the dataset

<class 'pandas.core.frame.DataFrame'> RangeIndex: 34736 entries, 0 to 34735 Data columns (total 97 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 unit 34736 non-null object 1 sex 34736 non-null object 2 age 34736 non-null object 3 citizen 34736 non-null object 4 geo\time 34736 non-null object 5 2020Q4 8424 non-null float64 6 2020Q3 8912 non-null float64 7 2020Q2 8686 non-null float64 8 2020Q1 8505 non-null float64 9 2019Q4 13764 non-null float64 10 2019Q3 13683 non-null float64 11 2019Q2 13790 non-null float64 12 2019Q1 13875 non-null float64 13 2018Q4 13883 non-null float64 14 2018Q3 13831 non-null float64 15 2018Q2 14077 non-null float64 16 2018Q1 14466 non-null float64 17 2017Q4 14236 non-null float64 18 2017Q3 14133 non-null float64 19 2017Q2 14219 non-null float64 20 2017Q1 14705 non-null float64 21 2016Q4 14560 non-null float64 22 2016Q3 14756 non-null float64 23 2016Q2 14833 non-null float64 24 2016Q1 14979 non-null float64 25 2015Q4 14703 non-null float64 26 2015Q3 14700 non-null float64 27 2015Q2 14966 non-null float64 28 2015Q1 15146 non-null float64 29 2014Q4 14678 non-null float64 30 2014Q3 14797 non-null float64 31 2014Q2 14889 non-null float64 32 2014Q1 15270 non-null float64 33 2013Q4 14976 non-null float64 34 2013Q3 14726 non-null float64 35 2013Q2 14954 non-null float64 36 2013Q1 15064 non-null float64 37 2012Q4 14623 non-null float64 38 2012Q3 14391 non-null float64 39 2012Q2 14526 non-null float64 40 2012Q1 14509 non-null float64 41 2011Q4 14251 non-null float64 42 2011Q3 13966 non-null float64 43 2011Q2 14231 non-null float64 44 2011Q1 14179 non-null float64 45 2010Q4 13900 non-null float64 46 2010Q3 13644 non-null float64 47 2010Q2 14001 non-null float64 48 2010Q1 13920 non-null float64 49 2009Q4 12927 non-null float64 50 2009Q3 12826 non-null float64 51 2009Q2 13350 non-null float64 52 2009Q1 12633 non-null float64 53 2008Q4 11780 non-null float64 54 2008Q3 11487 non-null float64 55 2008Q2 11965 non-null float64 56 2008Q1 11395 non-null float64 57 2007Q4 11001 non-null float64 58 2007Q3 11030 non-null float64 59 2007Q2 11710 non-null float64 60 2007Q1 11242 non-null float64 61 2006Q4 11464 non-null float64 62 2006Q3 11371 non-null float64 63 2006Q2 12033 non-null float64 64 2006Q1 11662 non-null float64 65 2005Q4 7806 non-null float64 66 2005Q3 7716 non-null float64 67 2005Q2 8231 non-null float64 68 2005Q1 7931 non-null float64 69 2004Q4 5839 non-null float64 70 2004Q3 5811 non-null float64 71 2004Q2 8329 non-null float64 72 2004Q1 5635 non-null float64 73 2003Q4 5519 non-null float64 74 2003Q3 5509 non-null float64 75 2003Q2 8232 non-null float64 76 2003Q1 5571 non-null float64 77 2002Q4 4998 non-null float64 78 2002Q3 4912 non-null float64 79 2002Q2 5908 non-null float64 80 2002Q1 5310 non-null float64 81 2001Q4 4603 non-null float64 82 2001Q3 4205 non-null float64 83 2001Q2 5482 non-null float64 84 2001Q1 4659 non-null float64 85 2000Q4 4274 non-null float64 86 2000Q3 3918 non-null float64 87 2000Q2 5660 non-null float64 88 2000Q1 4490 non-null float64 89 1999Q4 3337 non-null float64 90 1999Q3 3042 non-null float64 91 1999Q2 5186 non-null float64 92 1999Q1 3204 non-null float64 93 1998Q4 1796 non-null float64 94 1998Q3 1500 non-null float64 95 1998Q2 5192 non-null float64 96 1998Q1 2088 non-null float64 dtypes: float64(92), object(5) memory usage: 25.7+ MB

udf.describe() # Returns descriptive statistics

| 2020Q4 | 2020Q3 | 2020Q2 | 2020Q1 | 2019Q4 | 2019Q3 | 2019Q2 | 2019Q1 | 2018Q4 | 2018Q3 | ... | 2000Q2 | 2000Q1 | 1999Q4 | 1999Q3 | 1999Q2 | 1999Q1 | 1998Q4 | 1998Q3 | 1998Q2 | 1998Q1 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| count | 8424.000000 | 8912.000000 | 8686.000000 | 8505.000000 | 13764.000000 | 13683.000000 | 13790.000000 | 13875.000000 | 13883.000000 | 13831.000000 | ... | 5660.000000 | 4490.000000 | 3337.000000 | 3042.000000 | 5186.000000 | 3204.000000 | 1796.000000 | 1500.000000 | 5192.000000 | 2088.000000 |

| mean | 10.930437 | 10.969895 | 10.178920 | 9.638013 | 9.865577 | 9.747607 | 10.167817 | 10.958148 | 10.664446 | 10.379372 | ... | 9.710371 | 11.380356 | 10.869374 | 10.367949 | 10.251851 | 12.671785 | 13.144710 | 13.532533 | 10.971206 | 14.074377 |

| std | 8.803644 | 8.662468 | 8.597697 | 7.922562 | 7.689105 | 7.400749 | 7.602340 | 8.273551 | 8.028276 | 7.370775 | ... | 7.620672 | 8.546312 | 8.150764 | 8.235109 | 7.718976 | 9.705334 | 8.964836 | 10.271037 | 8.557631 | 9.859074 |

| min | 0.900000 | 0.900000 | 1.000000 | 0.900000 | 0.800000 | 0.600000 | 0.800000 | 0.600000 | 0.800000 | 0.800000 | ... | 0.400000 | 0.600000 | 0.500000 | 0.400000 | 0.300000 | 0.600000 | 2.300000 | 1.500000 | 0.500000 | 2.600000 |

| 25% | 5.200000 | 5.100000 | 4.600000 | 4.300000 | 4.700000 | 4.700000 | 4.800000 | 5.400000 | 5.100000 | 5.100000 | ... | 4.300000 | 5.400000 | 5.300000 | 4.900000 | 4.900000 | 6.100000 | 7.375000 | 6.300000 | 5.400000 | 6.675000 |

| 50% | 8.200000 | 8.200000 | 7.200000 | 7.100000 | 7.400000 | 7.300000 | 7.700000 | 8.400000 | 8.400000 | 8.300000 | ... | 7.400000 | 8.900000 | 8.300000 | 7.600000 | 8.000000 | 9.300000 | 11.100000 | 10.150000 | 8.600000 | 10.800000 |

| 75% | 13.700000 | 14.100000 | 12.800000 | 12.600000 | 12.900000 | 12.700000 | 13.500000 | 14.000000 | 13.900000 | 13.700000 | ... | 13.500000 | 15.200000 | 14.000000 | 13.000000 | 13.400000 | 15.825000 | 16.300000 | 17.200000 | 13.500000 | 18.925000 |

| max | 91.700000 | 77.100000 | 73.600000 | 65.700000 | 64.800000 | 68.900000 | 74.300000 | 75.300000 | 75.500000 | 75.700000 | ... | 69.600000 | 84.100000 | 72.400000 | 70.500000 | 61.100000 | 68.900000 | 70.200000 | 53.100000 | 62.500000 | 55.700000 |

8 rows × 92 columns

Cleaning¶

We should have a clear picture now of how the datasets are structured (for the sake of this tutorial, we have limited the inspection on the unemployment rates). The second step is the data cleaning process. The data cleaning process is neccessary to prepare the datasets for their later visualization and analysis. Thereby, the goal is to fix or remove incorrect, corrupted or unneccessary data that we do not need for our purpose. In the following cells, we will make some changes on all datasets. Please read through carefully the following list that documents what changes are being made. To help you with your understanding and your learning process, please refer also to the in-line comments which should give you a short and clear explanation of what is being done exactly!

List of changes

| ID | Change | Pandas method used | Description |

|---|---|---|---|

| 1 | Rename columns | pd.rename |

Rename the column 'geo\time' to 'country' |

| 2 | Replace string occurrences | pd.Series.apply(lambda k: k.replace()) |

Replace all 'country'occurrences of 'EL' (Greece) and 'UK' (United Kingdom) with 'GR' and 'GB' |

| 3 | Remove string occurrences | df[~df['column'].str.contains('|'.join(list_of_occurrences))] |

Remove all observation whose 'country' columns contains the string 'EU', 'EA', 'Malta' or 'Germany' |

# 1. Rename columns: We rename the column 'geo\time' to 'country' with the rename method

# (A) Note to escape the '\' character with a second '\'

# (B) Note to make changes inplace instead of just creating a copy of the dataframe

udf.rename(columns={'geo\\time':'country'}, inplace=True)

tdf.rename(columns={'geo\\time':'country'}, inplace=True)

gdf.rename(columns={'geo\\time':'country'}, inplace=True)

age_UE.rename(columns={'geo\\time':'country'}, inplace=True)

age_IE.rename(columns={'geo\\time':'country'}, inplace=True)

age_U.rename(columns={'geo\\time':'country'}, inplace=True)

# 2. Replace string occurences: We replace all 'country'occurrences of 'EL' (Greece) and 'UK' (United Kingdom) with 'GR' and 'GB'

# (A) Note we use apply an specified function to the whole column (details on lambda functions coming)

udf['country'] = udf['country'].apply(lambda k: k.replace('EL', 'GR').replace('UK', 'GB'))

tdf['country'] = tdf['country'].apply(lambda k: k.replace('EL', 'GR').replace('UK', 'GB'))

gdf['country'] = gdf['country'].apply(lambda k: k.replace('EL', 'GR').replace('UK', 'GB'))

age_UE['country'] = age_UE['country'].apply(lambda k: k.replace('EL', 'GR').replace('UK', 'GB'))

age_IE['country'] = age_IE['country'].apply(lambda k: k.replace('EL', 'GR').replace('UK', 'GB'))

age_U['country'] = age_U['country'].apply(lambda k: k.replace('EL', 'GR').replace('UK', 'GB'))

Lambda functions

Lambda functions are so-called anonymous functions. In programming, anonymous functions is a function definition which is not stored into memory and therefore is not assigned to an identifier. The function is typically only used once, or a limited number of times. The main advantage of the lambda function for our practical purpose is that it is syntactically lighter than defining and using an indexed function. Nevertheless, they fulfill the same role as an indexed function.

# 3. Remove string occurrences: We remove all observation whose 'country' columns contains the string 'EU', 'EA', 'Malta' or 'Germany'

# (A) Note that str.contains checks whether the expression in the brackets occurs (yielding a boolean series)

# (B) Note that in the brackets, the individual strings are concatenated with a logical OR ('|'), this represents a regular expression

# - More on how to construct regular expressions in python here: https://docs.python.org/3/howto/regex.html

# (C) Note before applying the boolean mask to the dataframe, we negate with a logical NOT ('~')

udf = udf[~udf['country'].str.contains('|'.join(['EU', 'EA', 'Malta', 'Germany']))]

age_UE = age_UE[~age_UE['country'].str.contains('Malta')]

age_U = age_U[~age_U['country'].str.contains('|'.join(['Malta', 'Germany', 'EA19', 'EU15', 'EU27_2020', 'FX', 'EU28', 'Luxembourg', 'Montenegro', 'Serbia']))]

Transformation¶

After having cleaned our datasets, our third step is to perform data transformation (we hereby introduce a new step in our data handling process). The data transformation process is differs from the data cleaning process, as that it maps data from one raw data format into another which is more useful for the analysis to be performed. In this section, we will prepare the datasets for our analysis. We thereby compute new datasets containing transition rates, job finding probabilities and steady state unemployment rates. If at any point in time, you lose the overview of what is actually being computed, we invite you to refer back to our secion 2. Theoretical framework which represents the fundament of our calculations. In order to help you with your learning process, we will refer to the relevant sections.

Some universal transformations

With regards to our datasets, Eurostat uses two-letter codes for country identifications. For our practical purposes, we want to substitute these country codes by full country names. To this end, the pycountry package provides useful functions to map country codes to their full names. The following change seems a little bit more complicated, but it follows the same logic of a lambda function that we have seen before.

# With `pyc.countries.get(alpha_2 = k).name` we pass the two country codes as arguments and get the `name` property

# (A) In case that the abbreviation doesnt match a code, we return the country code specified by Eurostat.

udf['country'] = udf['country'].apply(lambda k: pyc.countries.get(alpha_2 = k).name if pyc.countries.get(alpha_2 = k) != None else k)

tdf['country'] = tdf['country'].apply(lambda k: pyc.countries.get(alpha_2 = k).name if pyc.countries.get(alpha_2 = k) != None else k)

gdf['country'] = gdf['country'].apply(lambda k: pyc.countries.get(alpha_2 = k).name if pyc.countries.get(alpha_2 = k) != None else k)

age_UE['country'] = age_UE['country'].apply(lambda k: pyc.countries.get(alpha_2 = k).name if pyc.countries.get(alpha_2 = k) != None else k)

age_IE['country'] = age_IE['country'].apply(lambda k: pyc.countries.get(alpha_2 = k).name if pyc.countries.get(alpha_2 = k) != None else k)

age_U['country'] = age_U['country'].apply(lambda k: pyc.countries.get(alpha_2 = k).name if pyc.countries.get(alpha_2 = k) != None else k)

Calculation of transistion rates

The following code cells will compute datasets for all nine transition rates. In a first step, we will filter the absolute transitions, drop unneccessary columns and multiply all numbers by factor 1000 as we are dealing with numbers in thousands.

tdf = tdf[(tdf['unit'] == 'THS_PER') & (tdf['s_adj'] == 'NSA') & (tdf['sex'] == 'T')] # Filtering relevant 'THS_PERC', 'NSA' and 'sex'

tdf = tdf.drop(columns=['unit', 's_adj', 'sex']) # Dropping columns

tdf = tdf.set_index(['indic_em', 'country']) # Setting the right indices ('indic_em' are labels for transitions)

tdf = tdf.applymap(lambda k: k*1000) # Multiply all numbers by factor 1000

In a second step, we iterate through the labels for each individual transition, and we filter the large dataset by this transition. This will give us the numbers for one particular transition across all countries. We store our results in a dictionary.

transitions = {}

for tr in tdf.index.levels[0]:

exec(f"transitions_{tr} = tdf.loc['{tr}']") # this is declaration of exec statements

exec(f"transitions_{tr} = transitions_{tr}.sort_index()") # sort values by country

exec(f"transitions_{tr}.name = '{tr}'") # put name as the tr , 'E_E', 'E_I' etc.

transitions[tr] = eval(f"transitions_{tr}") # evaluate all the statements previously declared in string format

A short note on the exec() function. This function executes the Python code block passed as a string or a code object as argument. The string is parsed as a Python statement and will then be executed by the interpreter. If you need more explanation on how this function works, we encourage you to visit this website.

Having filtered all transitions separately, we can calulate all transition rates. At this point, we invite you to revisit section 2.2. Transition rates in case you would like to refresh you theoretical knowledge (Hint: Transition rates are calculated based on formula 4).

transition_rates = {} # Initiate dictionary to store transition rates

states = ['E', 'U', 'I'] # Define the labour market states

for today in states: # Loop through each state

stock = tdf.loc[tdf.index.levels[0][tdf.index.levels[0].str.startswith(today)]].groupby('country').sum().sort_values(by='country')

# today_tomorrow -> E_E, E_U, E_I, ... a total of 9 different combinations

for tomorrow in states:

exec(f"rate_{today}{tomorrow} = (eval(f'transitions_{today}_{tomorrow}')/stock).replace(np.inf, np.nan)") # replace inf with nan

exec(f"rate_{today}{tomorrow} = eval('rate_{today}{tomorrow}').rolling(4, axis=1).mean()") # rolling mean of each 4 columns starting from 1st in each row

exec(f"rate_{today}{tomorrow}= rate_{today}{tomorrow}[rate_{today}{tomorrow}.columns[::-1]]") # reversing columns, 1st col becomes last column

# Setting the normal index to periodic index for later timeseries or other purposes, e.g., plotting

exec(f"rate_{today}{tomorrow}.columns = pd.period_range(start=rate_{today}{tomorrow}.columns[0],end=rate_{today}{tomorrow}.columns[-1], freq='Q', name='Quarterly Frequency')")

# Assignign a name to the dataframe

exec(f"rate_{today}{tomorrow}.name = 'rate_{today}{tomorrow}'")

# Storage into dictionary

exec(f"transition_rates['rate_{today}{tomorrow}'] = eval(f'rate_{today}{tomorrow}')")

You have made it through the calculations of transition rates! Let's compute job finding probabilities now!

Calculation of job finding probabilities by age

The following code cells will compute datasets for job finding probabilities by age. At this point you will probably ask yourself why we would like to do this by age. As we have learned in the beginning of this tutorial, we can define a labour market by an age group of interest. The datasets that we will construct now will allow us to study age-related cross-section differences of job finding probabilites. In a first step, we want make some individual adjustment before we compute the probabilities. Please read carefully the in-line comments in order to understand what is happening exactly!

# Filtering and dropping: We filter columns by the relevant values and drop unneccessary columns

age_UE = age_UE[(age_UE['duration']=='TOTAL') & (age_UE['sex']=='T')].drop(columns=['duration', 'sex', 'unit'])

age_IE = age_IE[(age_IE['indic_em']=='TOTAL') & (age_IE['sex']=='T')].drop(columns=['indic_em', 'sex', 'unit'])

age_U = age_U[(age_U['unit']=='THS_PER') & (age_U['sex']=='T')].drop(columns=['sex', 'unit'])

# Indexing: We set age and country indices to locate values more easily

age_UE = age_UE.sort_values(by='country').set_index(['age', 'country'])

age_IE = age_IE.sort_values(by='country').set_index(['age', 'country'])

age_U = age_U.sort_values(by='country').set_index(['age', 'country'])

# Transform: We multiply the age_U data values by 1000 as those are numbers expressed as thousands

age_U = age_U.applymap(lambda k: k*1000)

# Sorting: Sorting the daframes by indices

age_UE = age_UE.sort_index(axis=1)

age_IE = age_IE.sort_index(axis=1)

age_U = age_U.sort_index(axis=1)

# Date transformation: We transform the columns which are strings into datetime objects

# For more information about this particular data object, we invite you to follow this website:

# https://docs.python.org/3/library/datetime.html

age_UE.columns = [x.strftime(format = '%Y') for x in pd.to_datetime(age_UE.columns.values, format='%Y')]

age_IE.columns = [x.strftime(format = '%Y') for x in pd.to_datetime(age_IE.columns.values, format='%Y')]

age_U.columns = [x.strftime(format = '%Y') for x in pd.to_datetime(age_U.columns.values, format='%Y')]

# We define a period of interest that we would like to study

# Note: We remove year 2017 as this column is missing in the dataframe

period = list(range(2011, 2021))

period.remove(2017)

period = [datetime.datetime.strptime(str(x), '%Y') for x in period]

period = [x.strftime('%Y') for x in period]

# We filter datasets by the time interval which was specified

age_UE = age_UE[period]

age_IE = age_IE[period]

age_U = age_U[period]

Now that we have our datasets ready for the computation, we can apply our formula that we have learned in section 2.2. Transition rates (Hint: We are applying formula 5).

# Initiate age groups with filter key

age_groups_jf = {'young':'Y15-24', 'middle':'Y25-54', 'old':'Y55-74'}

# Initiate a dictionary to store the results

job_finding_probs = {}

# Loop through each age group

for age_group in age_groups_jf:

# Applying formula 5

job_finding_prob = (age_UE.loc[age_groups_jf[age_group]] + age_IE.loc[age_groups_jf[age_group]])/age_U.loc[age_groups_jf[age_group]]

job_finding_prob.name = age_group

# Storing results in dictionary

job_finding_probs[age_group] = job_finding_prob

Great! We have calculated job finding probabilities. Let's look at steady state unemployment rates now!

Calculation of steady state unemployment rates

The following code cells will compute datasets for steady state unemployment rates. Again we will make individual adjustemnts first. Please read carefully the in-line comments in order to understand what is happening exactly!

ssdf = udf[(udf['sex']=='T') & (udf['citizen']=='TOTAL') & (udf['age']=='Y15-74')].drop(columns = ['sex', 'age', 'citizen']).sort_values(by = 'country').set_index('country')

ssdf = ssdf.rolling(4, axis=1).mean() # Seasonally adjust by taking the rolling mean over 4 quarters

ssdf = ssdf[ssdf.columns[::-1]] # Make order of quarters chronological / reverse the order with 1998Q1 as first col

ssdf.columns=pd.period_range(start=ssdf.columns[0], end=ssdf.columns[-1], freq="Q", name="Quarterly Frequency") #change datetype of quarters

In a second step, we want to build dataframes for each individual country. We will store the measured unemployment rate and all transition rates into the dataframe. Further, we store each dataset in a dictionary called 'countries'

# Inititiate dictionary

countries = {}

# saving data in dict format in countries

for country in ssdf.index:

df = pd.DataFrame() # create a temporary dataframe for each country

df['Measured Unemployment Rate'] = ssdf.loc[country] # get the country specific all unemployment data and store in col 'Measured Unemployment rate'

df.name = country

for rate in transition_rates: # get all transition rates for that country in rate columns of df

# this rate columns will start from rate_EE to rate_II, all the 9 rates which has been calculated before

if country in transition_rates[rate].index:

df[rate] = transition_rates[rate].loc[country]

if country in transition_rates[rate].index:

countries[country] = df # finally save the df as value of dictionary country

Now we are set up for the calculation of the steady state unemployment rates. We invite you to revisit formula 8 in section 2.3. Steady state.

for country in countries:

df = countries[country]

# coming from the formula previously mentioned

# Compute Steady State Unembployment Rate

aux_E = df['rate_UI']*df['rate_IE'] + df['rate_IU']*df['rate_UE'] + df['rate_IE']*df['rate_UE']

aux_U = df['rate_EI']*df['rate_IU'] + df['rate_IE']*df['rate_EU'] + df['rate_IU']*df['rate_EU']

df['Steady State Unemployment Rate'] = (aux_U/(aux_E+aux_U))*100

Controlling¶

Having transformed the data, we have set the stage for our analysis. Before we start, we have to ensure that the data cleaning and data transformation process was successful. In order to check our datasets, we will perform controlling tasks that check the datasets for their correctness. We follow two sperate methods. We control the data on a quantitative and on a qualitative (i.e. visual) manner.

Quantitative tests

For the quantitative tests, it will be useful to have a function which can be applied to multiple datasets. Therefore, we create a function that checks datasets for the following numerical attributes.

- Relative numbers: Rates of all types must be numerical values within the interval [0, 1]

- Absolute numbers: Absolute numbers must be numerical values which are non-negative

In the following cell, we will construct a parametrizable function which will serve our purpose.

# Note: First any() reduces pd.DataFrame to a pd.Series (reduction along axis=1) of booleans, second any() reduces pd.Series to one single boolean

def check(format, df):

if format == 'relative':

if (df > 1).any().any() or (df < 0).any().any(): # Check: Any values outside interval [0, 1]

print(f'Attention: Dataframe {df.name} contains values outside the interval [0, 1]')

return

else:

print(f'Dataframe {df.name} is free from invalid values')

return

if format == 'absolute':

if (np.sign(df.iloc[:, 2:]) == -1).any().any(): # Check: Any value negative (np.sign returns -1, 0 and 1 for negative, null and positive values)

print(f'Attention: Dataframe {df.name} contains negative values')

return

else:

print(f'Dataframe {df.name} is free from invalid values')

return

We check all absolute transitions for their values:

for df in transitions:

check('absolute', transitions[df])

Dataframe E_E is free from invalid values Dataframe E_I is free from invalid values Dataframe E_U is free from invalid values Dataframe I_E is free from invalid values Dataframe I_I is free from invalid values Dataframe I_U is free from invalid values Dataframe U_E is free from invalid values Dataframe U_I is free from invalid values Dataframe U_U is free from invalid values

Next, we check all transition rates for their values:

for df in transition_rates:

check('relative', transition_rates[df])

Dataframe rate_EE is free from invalid values Dataframe rate_EU is free from invalid values Dataframe rate_EI is free from invalid values Dataframe rate_UE is free from invalid values Dataframe rate_UU is free from invalid values Dataframe rate_UI is free from invalid values Dataframe rate_IE is free from invalid values Dataframe rate_IU is free from invalid values Dataframe rate_II is free from invalid values

Finally, we check our job finding probabilities. Remember, probabilities are within the interval [0, 1]

for jfp in job_finding_probs:

check('relative', job_finding_probs[jfp])

Dataframe young is free from invalid values Dataframe middle is free from invalid values Dataframe old is free from invalid values

Great! Our datasets have passed all quantitative tests. Let's perform some visual tests now.

Visual tests

In the following, we will perform some basic plotting tests. The goal of those plottings is to mainly adress possible corruptions of our data. Additionally, this will give us some first inspiration for the data visualization section that will follow.

udf['2020Q4'].hist(bins = 300); # Histogram to check the unemployment rates in 2020Q4

Distribution is right skewed, with positive values only. This corresponds to the values we are expecting to see with unemployment rates.

transitions['E_E']['2020Q4'].plot.bar(); # Barplot to check the transitions in 2020Q4

Absolute transitions seem to be positive, let's do a final check!

transition_rates['rate_EE']['2019Q4'].plot.area(ylim = (-1,2)); # Areaplot to check transition rates in 2019Q4

Perfect! It seems that transition rates are within the interval [0, 1]. For now, we can be sure that our computations were performed correctly. As we have learned how to handle real-world data in an appropriate and correct manner, we will now further built upon our data handling skills as we need to prepare our datasets for the regressions. You will have now acquired a technical toolkit that will serve you as we will prepare the datasets for our statistical analysis.

Regression datasets¶

The following section prepares the datasets for our statistical analysis. At this point, you probably ask yourself what the substance of our analysis will be. As we have learned, we can define our labour market in terms of segments based on geographical differences as well as differences accross age groups and education. We want to explore those cross-sectional differences. Hence, we will prepare three different datasets on which we will perform simple linear OLS regressions. For our first regression, we regress GDP growth on unemployment rates of different age groups. In our second regression, we regress the same GDP growth on unemployment rates of different educational attainment levels. In our third regression, we regress the job finding probability of different age groups on the country-level GDP. Please note: During this section you will probably ask yourself, why we transform the datasets the way we do it. This will certainly become clearer when you wen through section 6. Statistical analysis. But for methodological purposes we would like to bring this task forward into the data handling section.

GDP growth on unemployment rates of age groups¶

Data import

With the Eurostat API discussed in section 4.2. Technical toolkit, we can import the GDP growth data:

# Import the GDP-Growth data with the Eurostat API

gdf = eurostat.get_data_df('tec00115', flags=False)

# Print the imported dataframe

gdf

| unit | na_item | geo\time | 2009 | 2010 | 2011 | 2012 | 2013 | 2014 | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | CLV_PCH_PRE | B1GQ | AT | -3.8 | 1.8 | 2.9 | 0.7 | 0.0 | 0.7 | 1.0 | 2.0 | 2.4 | 2.6 | 1.4 | -6.6 |

| 1 | CLV_PCH_PRE | B1GQ | BA | -3.0 | 0.9 | 1.0 | -0.8 | 2.3 | 1.2 | 3.1 | 3.1 | 3.2 | 3.7 | 2.8 | NaN |

| 2 | CLV_PCH_PRE | B1GQ | BE | -2.0 | 2.9 | 1.7 | 0.7 | 0.5 | 1.6 | 2.0 | 1.3 | 1.6 | 1.8 | 1.8 | -6.3 |

| 3 | CLV_PCH_PRE | B1GQ | BG | -3.4 | 0.6 | 2.4 | 0.4 | 0.3 | 1.9 | 4.0 | 3.8 | 3.5 | 3.1 | 3.7 | -4.2 |

| 4 | CLV_PCH_PRE | B1GQ | CH | -2.1 | 3.3 | 1.9 | 1.2 | 1.8 | 2.4 | 1.7 | 2.0 | 1.6 | 3.0 | 1.1 | -2.9 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 75 | CLV_PCH_PRE_HAB | B1GQ | SE | -5.2 | 5.1 | 2.4 | -1.3 | 0.3 | 1.6 | 3.4 | 0.8 | 1.2 | 0.8 | 0.3 | -3.5 |

| 76 | CLV_PCH_PRE_HAB | B1GQ | SI | -8.4 | 1.0 | 0.7 | -2.8 | -1.2 | 2.7 | 2.1 | 3.1 | 4.7 | 4.1 | 2.3 | -6.2 |

| 77 | CLV_PCH_PRE_HAB | B1GQ | SK | -5.7 | 5.6 | 3.5 | 1.7 | 0.5 | 2.5 | 4.7 | 2.0 | 2.8 | 3.5 | 2.4 | -4.9 |

| 78 | CLV_PCH_PRE_HAB | B1GQ | TR | -6.1 | 6.8 | 9.6 | 3.5 | 7.1 | 3.5 | 4.7 | 1.9 | 6.1 | 1.6 | -0.5 | 0.8 |

| 79 | CLV_PCH_PRE_HAB | B1GQ | UK | -4.8 | 1.3 | 0.4 | 0.8 | 1.5 | 2.1 | 1.6 | 0.9 | 1.1 | 0.6 | 0.8 | NaN |

80 rows × 15 columns

Data transformation

To run the regression, we need to have the average values of GDP-Growth for each country. For this, we firstly need to only keep the rows that have the unit CLV_PCH_PRE, which means only the values which show the percentage change from last year. We then transform the dataframe and finally, we will caluclate the mean GDP-Growth values for each country:

# Only keep the rows with the right unit (percentage change from last year)

gdf = gdf[gdf['unit']=='CLV_PCH_PRE']

# Rename the geo\\time column to country

gdf = gdf.rename(columns={'geo\\time':'country'})

# Change the country values EL and UK to GR and GB

gdf['country'] = gdf['country'].apply(lambda k: k.replace('EL', 'GR').replace('UK', 'GB'))

# Drop the unneeded column of unit and na_item

gdf = gdf.drop(columns=['unit', 'na_item'])

# Change the country codes to country names

gdf['country'] = gdf['country'].apply(lambda k: pyc.countries.get(alpha_2 = k).name if pyc.countries.get(alpha_2 = k) != None else k)

# Set the country names as index

gdf = gdf.set_index("country")

# Transpose the dataframe to have the countries as column names and years as index

gdf = gdf.T

# Create pandas series object with all GDP Growth averages for each country

gdp_on_ur = gdf.apply(pd.to_numeric, errors='coerce').mean(axis=0)

# Convert panda series object to dataframe

gdp_on_ur = gdp_on_ur.to_frame()

# Rename the column from "0" to "Average GDP Groth"

gdp_on_ur.columns = ["Average GDP Growth"]

gdp_on_ur

| Average GDP Growth | |

|---|---|

| country | |

| Austria | 0.425000 |

| Bosnia and Herzegovina | 1.590909 |

| Belgium | 0.633333 |

| Bulgaria | 1.341667 |

| Switzerland | 1.250000 |

| Cyprus | 0.550000 |

| Czechia | 1.166667 |

| Germany | 0.733333 |

| Denmark | 0.966667 |

| EA | 0.233333 |

| EA19 | 0.225000 |

| Estonia | 1.675000 |

| Greece | -2.775000 |

| Spain | -0.333333 |

| EU27_2020 | 0.441667 |

| EU28 | 1.072727 |

| Finland | 0.100000 |

| France | 0.233333 |

| Croatia | -0.408333 |

| Hungary | 1.350000 |

| Ireland | 5.083333 |

| Iceland | 1.191667 |

| Italy | -0.966667 |

| Lithuania | 1.658333 |

| Luxembourg | 2.116667 |

| Latvia | 0.633333 |

| Montenegro | 1.880000 |

| North Macedonia | 1.725000 |

| Malta | 3.966667 |

| Netherlands | 0.583333 |

| Norway | 1.025000 |

| Poland | 3.050000 |

| Portugal | -0.191667 |

| Romania | 1.800000 |

| Serbia | 1.291667 |

| Sweden | 1.500000 |

| Slovenia | 0.516667 |

| Slovakia | 1.641667 |

| Turkey | 4.633333 |

| United Kingdom | 1.300000 |

| XK | 2.975000 |

With this we now have a dataframe of the dependent variable. Next, we need to create dataframes of the independent variables. For this, we will need the total average unemployment rate and the average unemployment rates of the 15-39 and 40-64 year olds respectively. We store the segmented datasets in a dictionary called 'GDPxURxA_dic'.

# Create List with all Age groups

age_groups = {'total': 'Total', 'young': 'Y15-39', 'old': 'Y40-64'}

# Create variable "period" with the timeperiods for which we want to calculate the mean unemployment rates (based on the available GDP-Growth data)

period = pd.period_range(start='2009Q1',end='2019Q4', freq='Q', name='Quarterly Frequency')

# Create dictionary to store dataframes

GDPxURxA_dic = {}

# Run for-loop to create all unemployment dataframes

for age_group in age_groups: # Go through every age group

aux_df = pd.DataFrame() # Create auxilary dataframe

if age_group == 'total':

aux_df['Measured Unemployment Rate'] = ssdf[period].mean(axis=1) # Calculate mean unemployment rate for total population

else:

M_UR = udf[(udf['sex']=='T') & (udf['citizen']=='TOTAL') & (udf['age']==age_groups[age_group])].drop(columns = ['sex', 'age', 'citizen', 'unit']).sort_values(by = 'country').set_index('country') #Select unemployment data for young or old age group

M_UR = M_UR.sort_index(axis=1) # Make order of quarters chronological

M_UR.columns = pd.period_range(start = M_UR.columns[0], end=M_UR.columns[-1], freq="Q", name="Quarterly Frequency") # change datetype of quarters

aux_df["Measured Unemployment Rate"] = M_UR[period].mean(axis=1) # Calculate Mean unemployment rate for age group and store in axuliary dataframe

exec(f"UR_{age_group} = aux_df") # Name the respective dataframes

exec(f"UR_{age_group}.name='{age_group}'") # Name the respective dataframes

exec(f"GDPxURxA_dic['{age_group}'] = UR_{age_group}") # Store dataframe in dictionary

Now that we have dataframes of both the dependent and independent variables, we can merge them together into one dataframe. This can easily be done with the pd.merge function from the pandas library. We will store our regression datasets in a dictionary called 'GDPxURxA_regdic'.

# Create empty dictionary to store all dataframes

GDPxURxA_regdic = {}

# Iterate through unemployment dictionary generated previously to create dataframes

for age_group in GDPxURxA_dic:

aux_df = pd.merge(GDPxURxA_dic[age_group], gdp_on_ur, on='country', how='outer').dropna() # Merge UR dataframe with GDP-Growth dataframe and drop all rows which contain NAN values

aux_df.columns = ["Measured Unemployment Rate", "GDP Growth"] # Rename the columns

GDPxURxA_regdic[age_group] = aux_df # Save the dataframes into the dictionary

Well done! We have our first dataset ready. Let's move on!

GDP growth and unemployment rates of educational attainment levels¶

Data import

With the Eurostat API discussed in section 4.2. Technical toolkit, it is very easy to import the data on the unemployment rate for different educational attainment levels:

# Import the educational attainment level data with the Eurostat library

edf = eurostat.get_data_df('lfsq_urgaed', flags=False)

# Print the dataframe

edf

| unit | sex | age | isced11 | geo\time | 2020Q4 | 2020Q3 | 2020Q2 | 2020Q1 | 2019Q4 | ... | 2000Q2 | 2000Q1 | 1999Q4 | 1999Q3 | 1999Q2 | 1999Q1 | 1998Q4 | 1998Q3 | 1998Q2 | 1998Q1 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | PC | F | Y15-19 | ED0-2 | AT | NaN | 15.5 | 13.3 | NaN | NaN | ... | NaN | 8.9 | NaN | 8.6 | 8.4 | 9.2 | NaN | NaN | NaN | 16.2 |

| 1 | PC | F | Y15-19 | ED0-2 | BE | NaN | 33.0 | 25.4 | NaN | 29.6 | ... | 34.6 | NaN | NaN | 32.1 | NaN | 38.4 | NaN | NaN | 39.2 | NaN |

| 2 | PC | F | Y15-19 | ED0-2 | BG | NaN | NaN | NaN | NaN | NaN | ... | 49.4 | 64.7 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 3 | PC | F | Y15-19 | ED0-2 | CH | 7.5 | 15.2 | 5.6 | 4.2 | 3.9 | ... | 4.6 | NaN | NaN | NaN | 6.0 | NaN | NaN | NaN | 5.6 | NaN |

| 4 | PC | F | Y15-19 | ED0-2 | CY | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 15578 | PC | T | Y65-69 | TOTAL | SE | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 15579 | PC | T | Y65-69 | TOTAL | SI | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 15580 | PC | T | Y65-69 | TOTAL | SK | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 15581 | PC | T | Y65-69 | TOTAL | TR | 3.7 | 4.5 | 3.7 | 3.3 | 3.7 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 15582 | PC | T | Y65-69 | TOTAL | UK | NaN | 2.6 | 1.7 | 2.7 | 2.7 | ... | NaN | NaN | NaN | 3.3 | 3.3 | NaN | NaN | NaN | NaN | NaN |

15583 rows × 97 columns

To understand what the different values of a column mean, the eurostat API offers the get_sdmx_dic fuction. For a given dataframe and column, the function returns a dictonary that lists all the values of that column and their respective meaning:

# Get meaning of isced11 column

eurostat.get_sdmx_dic('lfsq_urgaed', 'ISCED11')

{'ED0-2': 'Less than primary, primary and lower secondary education (levels 0-2)',

'ED3_4': 'Upper secondary and post-secondary non-tertiary education (levels 3 and 4)',

'ED5-8': 'Tertiary education (levels 5-8)',

'NRP': 'No response',

'TOTAL': 'All ISCED 2011 levels'}

Similarly as we had done in section 4.2.3 Transformation, we now transform the dataset into a format we can work with. We firstly want to create a dataframe with all genders and the largest possible age group of 15-74 year olds, which has the unemployment rate of all educational attainment levels (except non responses) stored seperately for each country:

# Only select columns of all genders and of agegroup 15-74

edf = edf[(edf['sex']=='T') & (edf['age']=='Y15-74')]

# Rename geo\\time coulmn to country

edf = edf.rename(columns={'geo\\time':'country'})

# Change the country values EL and UK to GR and GB

edf['country'] = edf['country'].apply(lambda k: k.replace('EL', 'GR').replace('UK', 'GB'))

# Drop unneeded columns of unit, sex, age

edf = edf.drop(columns=['unit', 'sex', 'age'])

# Change country code to country name

edf['country'] = edf['country'].apply(lambda k: pyc.countries.get(alpha_2 = k).name if pyc.countries.get(alpha_2 = k) != None else k)

# Drop all rows with a non response for educational attainment level, set index as country and sort the index alphabetically

edf = edf[~(edf['isced11']=='NRP')].set_index('country').sort_index()

# Print dataframe

edf

| isced11 | 2020Q4 | 2020Q3 | 2020Q2 | 2020Q1 | 2019Q4 | 2019Q3 | 2019Q2 | 2019Q1 | 2018Q4 | ... | 2000Q2 | 2000Q1 | 1999Q4 | 1999Q3 | 1999Q2 | 1999Q1 | 1998Q4 | 1998Q3 | 1998Q2 | 1998Q1 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| country | |||||||||||||||||||||

| Austria | ED0-2 | 12.0 | 13.4 | 13.3 | 10.4 | 10.1 | 10.6 | 10.9 | 11.0 | 11.0 | ... | 5.6 | 8.1 | 5.8 | 4.9 | 5.5 | 7.5 | NaN | NaN | NaN | 9.3 |

| Austria | ED3_4 | 5.4 | 5.3 | 5.0 | 4.2 | 3.6 | 3.7 | 4.0 | 4.5 | 4.3 | ... | 2.7 | 4.2 | 3.1 | 2.9 | 3.2 | 4.4 | NaN | NaN | NaN | 4.7 |

| Austria | ED5-8 | 3.3 | 3.4 | 3.7 | 3.1 | 2.8 | 3.2 | 2.7 | 3.4 | 2.8 | ... | 1.4 | 2.2 | 2.1 | 1.8 | 1.8 | 2.2 | NaN | NaN | NaN | 2.3 |

| Austria | TOTAL | 5.5 | 5.7 | 5.7 | 4.7 | 4.2 | 4.4 | 4.5 | 4.9 | 4.6 | ... | 3.1 | 4.7 | 3.5 | 3.2 | 3.5 | 4.7 | NaN | NaN | NaN | 5.5 |

| Belgium | ED3_4 | 6.0 | 6.4 | 5.6 | 4.9 | 5.3 | 5.4 | 5.2 | 5.3 | 5.8 | ... | 6.8 | 6.8 | 7.3 | 8.0 | 8.3 | 8.5 | NaN | NaN | 9.1 | NaN |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| Turkey | ED5-8 | 13.3 | 14.0 | 11.4 | 11.8 | 12.7 | 15.1 | 12.4 | 13.9 | 12.9 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| United Kingdom | ED3_4 | NaN | 5.5 | 4.3 | 4.3 | 3.9 | 4.4 | 3.8 | 4.1 | 4.4 | ... | 5.0 | 5.3 | 5.2 | 5.4 | 5.3 | NaN | NaN | NaN | NaN | NaN |

| United Kingdom | ED0-2 | NaN | 6.9 | 6.1 | 6.6 | 6.7 | 6.5 | 6.4 | 6.5 | 6.3 | ... | 8.8 | 8.6 | 9.0 | 9.4 | 9.4 | NaN | NaN | NaN | NaN | NaN |

| United Kingdom | ED5-8 | NaN | 3.7 | 2.7 | 2.6 | 2.3 | 2.6 | 2.6 | 2.4 | 2.4 | ... | 2.5 | 3.0 | 3.2 | 3.4 | 2.9 | NaN | NaN | NaN | NaN | NaN |

| United Kingdom | TOTAL | NaN | 4.9 | 3.8 | 3.9 | 3.6 | 3.9 | 3.7 | 3.7 | 3.8 | ... | 5.6 | 5.8 | 5.9 | 6.2 | 6.1 | NaN | NaN | NaN | 6.2 | NaN |

156 rows × 93 columns

From this dataframe, we can now create the dataframes we need for the regression. We need a dataframe with the unemployment rate for each educational attainment level, with the mean value for each country. We create the corresponding dataframe for each educational attainment level and store it in the dictionary 'GPDxURxED_dic'.

# Create dictionary with education levels

ed_levels = {'primary':'ED0-2', 'secondary':'ED3_4', 'tertiary':'ED5-8', 'total':'TOTAL'}

# Create variable "period" with the timeperiods for which we want to calculate the mean unemployment rates (based on the available GDP-Growth data)

period = pd.period_range(start='2009Q1',end='2019Q4', freq='Q', name='Quarterly Frequency')

# Create dictionary to store dataframes