Linear Layer¶

We will implement a Linear Layer, since it is a fundamental operation in deep learning (DL). The objective of a linear layer is to map a fixed number of inputs to a fixed number of outputs, whether the task is regression or classification.

Forward Pass¶

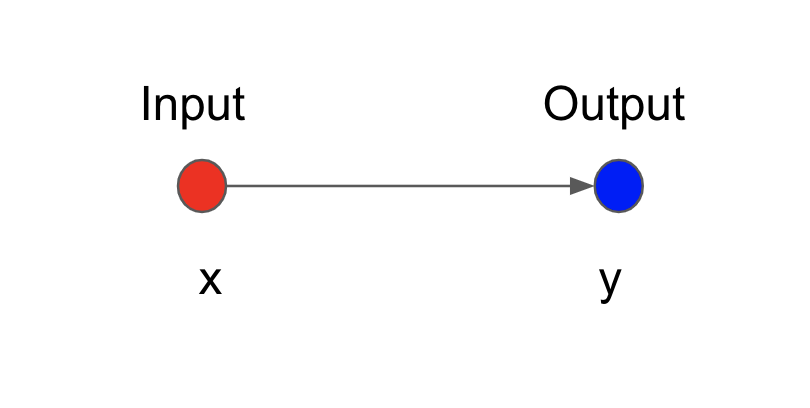

A neural network architecture has at least two layers: the first layer (input) and the last layer (output).

A node, or neuron, is the simplest unit of the neural network. Each neuron holds a numerical value that is passed (in the forward direction in this case) to the next neuron by a mapping. For the sake of simplicity, we will only discuss the linear neural network and linear mapping in this lesson.

Let's consider a simple connection between two layers, each with one neuron.

We can map the input neuron $x$ to the output neuron $y$ by a linear equation,

$$ y = wx + \beta $$

where $w$ is called the weight and $\beta$ is called the bias term.

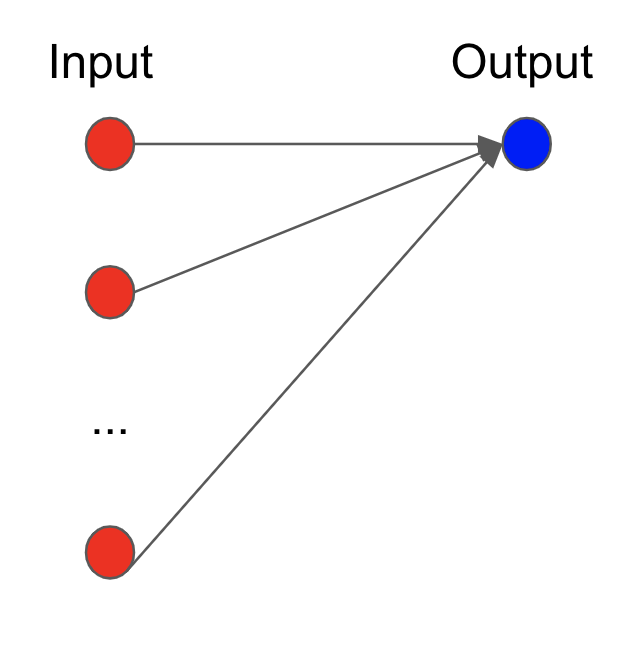

If we have $n$ input neurons ($n>1$) then the output neuron is the linear combination,

$$ \hat{y}=\beta + x_1w_1+x_2w_2+ \cdots +x_{n}w_n $$

where the $w_i$ are the weights corresponding to each map (or arrow).

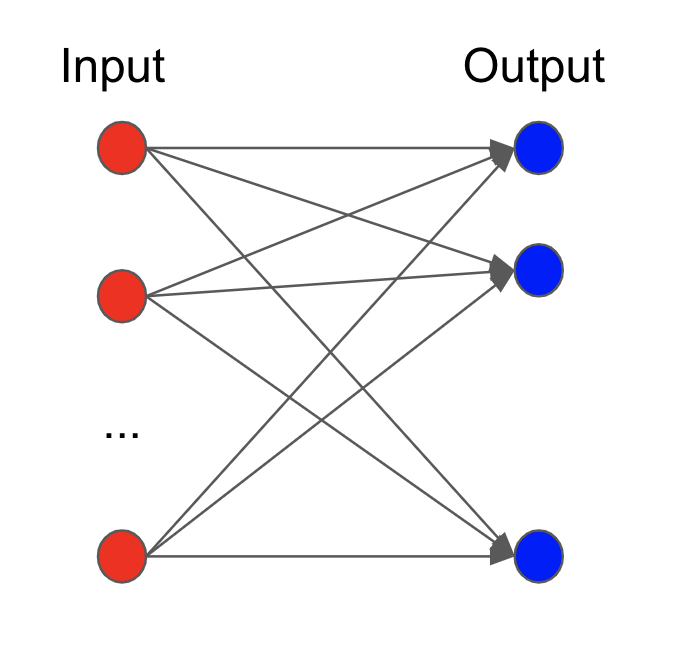

Similarly, if there are $m$ output neurons, then the output is a system of linear equations,

$$\hat{y}_1=\beta_1 + x_1 w_{1,1}+x_2 w_{1,2}+ \cdots +x_nw_{1,n} $$

$$\hat{y}_2=\beta_2 + x_1 w_{2,1}+x_2 w_{2,2}+ \cdots +x_nw_{2,n} $$

$$ \vdots $$

$$\hat{y}_m=\beta_m + x_1 w_{m,1}+x_2 w_{m,2}+ \cdots +x_nw_{m,n} $$

Compactly, it can be written in matrix form, where a constant 1 is prepended to the input so that it multiplies the bias column:

$$ \hat{Y} = \left(\begin{array}{c} \hat{y}_{1} \\ \hat{y}_{2} \\ \vdots \\ \hat{y}_{m} \end{array}\right) = \left(\begin{array}{ccccc} \beta_1 & w_{1,1} & w_{1,2} & \cdots & w_{1,n} \\ \beta_2 & w_{2,1} & w_{2,2} & \cdots & w_{2,n} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ \beta_m & w_{m,1} & w_{m,2} & \cdots & w_{m,n} \end{array}\right) \cdot \left(\begin{array}{c} 1 \\ x_{1} \\ x_{2} \\ \vdots \\ x_n \end{array}\right) = W \cdot X $$
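As a minimal sketch of the matrix form, the forward pass can be written in plain Python without any library. The helper name `linear_forward` and the numbers are hypothetical; each row of `W` is assumed to hold the bias first, followed by the weights of one output neuron.

```python
# Forward pass of one linear layer in plain Python (illustrative only).
# Each row of W is [beta_i, w_i1, ..., w_in]; the input gets a leading constant 1.

def linear_forward(W, x):
    """Return W . [1, x_1, ..., x_n] as a list of m outputs."""
    x_aug = [1.0] + list(x)  # the constant 1 multiplies the bias column
    return [sum(w * v for w, v in zip(row, x_aug)) for row in W]

# hypothetical example with n = 3 inputs and m = 2 outputs
W = [
    [0.5, 1.0, 0.0, -1.0],   # beta_1, w_11, w_12, w_13
    [-1.0, 2.0, 1.0, 0.5],   # beta_2, w_21, w_22, w_23
]
x = [1.0, 2.0, 3.0]

y_hat = linear_forward(W, x)
print(y_hat)  # [-1.5, 4.5]
```

Each output is one row of the system of equations: the first is 0.5 + 1 - 3 and the second is -1 + 2 + 2 + 1.5.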

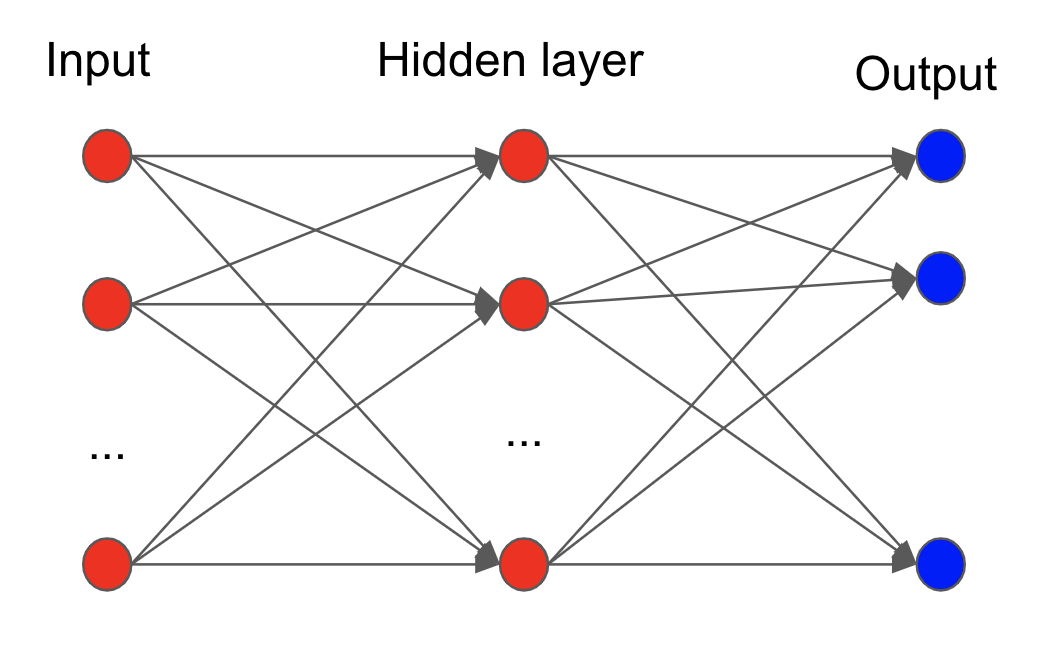

This logic can be extended further as we add more layers.

The layers between the input and the output are called hidden layers. The number of hidden layers is usually decided by the complexity of the problem.

Fact:

If the weight $w_i\neq 0$ for all $i$, then we have a fully connected neural network.

The number of neurons in each layer can be different. Moreover, they tend to decrease sequentially. Ex: $$500 \text{ neurons} \rightarrow 100 \text{ neurons} \rightarrow 20 \text{ neurons} $$

Most of the practical neural networks are non-linear. This result is achieved by applying a non-linear function on top of the linear combination. This is called the activation function.
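To make the idea concrete, here is a small sketch of an activation applied on top of a linear output. It uses ReLU, the activation adopted later in this lesson; the function name `relu` and the input values are assumptions chosen for illustration.

```python
def relu(values):
    """Element-wise max(0, v): negative pre-activations are clipped to zero."""
    return [max(0.0, v) for v in values]

# hypothetical pre-activations produced by a linear layer
linear_output = [-1.5, 0.0, 4.5]
activated = relu(linear_output)
print(activated)  # [0.0, 0.0, 4.5]
```

Because the clipping depends on the sign of each value, the composed map is no longer a single linear function of the input.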

Backward Pass¶

Now that we know how to implement the forward pass, we must next work out how to backpropagate through our linear operation.

Keep in mind that backpropagation simply computes the gradient of our latest forward operation (call it $o$) w.r.t. our weight parameters $w$, which, if many intermediate operations have been performed, we obtain by the chain rule

$$ \hat{y} = 1w_0+x_1w_1+x_2w_2+x_3w_3\\z = \sigma(\hat{y}) \\ o = L(z,y) $$

$$ \frac{\partial o}{\partial w} = \frac{\partial o}{\partial z}*\frac{\partial z}{\partial \hat{y}}*\frac{\partial \hat{y}}{\partial w} $$

Now, notice that during the backward pass, partial gradients can be classified in two ways:

- An Intermediate operation ($\frac{\partial o}{\partial z},\frac{\partial z}{\partial \hat{y}}$) or

- A "Receiver" operation ($\frac{\partial \hat{y}}{\partial w}$)

Notice that the intermediates have to be calculated to get to our "Receiver" operation, which receives a "step" operation once its gradient has been calculated.
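The chain rule above can be checked numerically. The sketch below assumes a sigmoid for $\sigma$ and a squared error for $L$ (the text does not fix either choice), and compares the analytic product of the two intermediates and the "Receiver" term against a central-difference estimate. All values are hypothetical.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def loss(w, x, y):
    """o = L(sigma(y_hat), y), with squared error as an assumed L."""
    y_hat = sum(wi * xi for wi, xi in zip(w, x))
    return (sigmoid(y_hat) - y) ** 2

x = [1.0, 0.5, -1.0, 2.0]   # x_0 = 1 multiplies the bias weight w_0
w = [0.1, -0.2, 0.3, 0.4]
y = 1.0

# analytic gradient via the chain rule
y_hat = sum(wi * xi for wi, xi in zip(w, x))
z = sigmoid(y_hat)
do_dz = 2.0 * (z - y)        # intermediate: d o / d z
dz_dyhat = z * (1.0 - z)     # intermediate: d z / d y_hat
analytic = [do_dz * dz_dyhat * xi for xi in x]  # receiver: d y_hat / d w_i = x_i

# numerical gradient by central differences
eps = 1e-6
numeric = []
for i in range(len(w)):
    w_plus = w[:i] + [w[i] + eps] + w[i + 1:]
    w_minus = w[:i] + [w[i] - eps] + w[i + 1:]
    numeric.append((loss(w_plus, x, y) - loss(w_minus, x, y)) / (2 * eps))

print(analytic)
print(numeric)
```

The two printed lists should agree to several decimal places, which is a cheap sanity check before trusting a hand-written backward pass.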

In the above example, none of our intermediate operations introduced any new parameters to our model. However, what if they did? Look below

$$ \hat{y} = 1w_0+x_1w_1+x_2w_2+x_3w_3\\z = \sigma(\hat{y})\\l = z*w_4 \\o = L(l,y) $$

$$ \frac{\partial o}{\partial w_{0:3}} = \frac{\partial o}{\partial l}*\frac{\partial l}{\partial z}*\frac{\partial z}{\partial \hat{y}}*\frac{\partial \hat{y}}{\partial w_{0:3}} \\\frac{\partial o}{\partial w_{4}} = \frac{\partial o}{\partial l} * \frac{\partial l}{\partial w_4} $$

Given that we now have two operations that introduce parameters to our model, we need to make two backward calculations. More importantly, however, notice that their "paths" differ in the way that they take the gradient of $l$ w.r.t. either its parameter $w_4$ or its input $z$

Clearly, these operations are not equivalent

$$ \frac{\partial l}{\partial z} \not= \frac{\partial l}{\partial w_4} $$

despite originating from the same forward linear operation.

Hence, this demonstrates that for any forward operation with weights, such as our Linear Layer, we need to implement two different backward operations: the intermediate pass (which takes the gradient w.r.t. the input) and the "Receiver" pass (which takes the gradient w.r.t. the operation's parameters). For either of these operations, we must combine the incoming gradient ($\frac{\partial z}{\partial \hat{y}},\frac{\partial o}{\partial l}$) with our Linear Layer gradient ($\frac{\partial \hat{y}}{\partial w_{0:3}},\frac{\partial l}{\partial w_4}$) by multiplication, as the chain rule prescribes

Having defined the two types of backward operations, we will now define the general method to compute both calculations on our Linear Layer.

Assume we have the forward operation below

$$ y=1w_0+2w_1+3w_2+4w_3 $$

Then, for the backward phase, we need to take the partial derivative w.r.t. each weight coefficient

$$ \frac{\partial y}{\partial w} = \left(\frac{\partial y}{\partial w_0}, \frac{\partial y}{\partial w_1}, \frac{\partial y}{\partial w_2}, \frac{\partial y}{\partial w_3}\right) = (1, 2, 3, 4) $$

What about the partial w.r.t. its input?

$$ \frac{\partial y}{\partial x} = \left(\frac{\partial y}{\partial x_0}, \frac{\partial y}{\partial x_1}, \frac{\partial y}{\partial x_2}, \frac{\partial y}{\partial x_3}\right) = (w_0, w_1, w_2, w_3) $$

Easy, right? We find that the "Receiver" version of our backward pass is equal to the input, while the intermediate derivative is equal to the weight parameters.
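A quick way to convince yourself of this result is to estimate both gradients by finite differences. The sketch below is plain Python; `numeric_grad` is a hypothetical helper and the weight values are made up, while the input reuses the (1, 2, 3, 4) of the example.

```python
def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def numeric_grad(f, v, eps=1e-6):
    """Central-difference gradient of the scalar function f at the list v."""
    grad = []
    for i in range(len(v)):
        up = v[:i] + [v[i] + eps] + v[i + 1:]
        down = v[:i] + [v[i] - eps] + v[i + 1:]
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad

x = [1.0, 2.0, 3.0, 4.0]      # inputs of the example (x_0 = 1)
w = [0.5, -1.0, 0.25, 2.0]    # hypothetical weights

grad_w = numeric_grad(lambda w_: dot(w_, x), w)   # should approximate x
grad_x = numeric_grad(lambda x_: dot(w, x_), x)   # should approximate w
print(grad_w)
print(grad_x)
```

Up to floating-point noise, `grad_w` reproduces the input and `grad_x` reproduces the weights.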

As a last step, to really be able to generalize these operations to any kind of differentiable architecture, we will show the general procedure to integrate the incoming gradient with our Linear gradient

Gradient Generalization w.r.t weights and input

$$ \text{input}: n \times f $$

$$ \text{weights}: f \times h $$

$$ y: n \times h $$

$$ \text{incoming\_grad}: n \times h $$

$$ \text{grad\_y\_wrt\_weights}: (\text{incoming\_grad}^{\top} \cdot \text{input})^{\top} = \left((h \times n)(n \times f)\right)^{\top} = f \times h $$

$$ \text{grad\_y\_wrt\_input}: \text{incoming\_grad} \cdot \text{weights}^{\top} = (n \times h)(h \times f) = n \times f $$

Now that we know how to generalize a linear layer, let's implement the above concepts in PyTorch
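Before moving on, the shape rules can be checked with a small plain-Python sketch. The helpers `matmul`, `transpose` and `shape`, the sizes and the values are all assumptions made for illustration; only the two gradient formulas come from the text.

```python
def matmul(A, B):
    """Matrix product of two lists of lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def shape(A):
    return (len(A), len(A[0]))

n, f, h = 2, 3, 4   # hypothetical batch size, input features, output features
X = [[float(i + j) for j in range(f)] for i in range(n)]     # input, n x f
W = [[0.1 * (i - j) for j in range(h)] for i in range(f)]    # weights, f x h
G = [[1.0 for _ in range(h)] for _ in range(n)]              # incoming_grad, n x h

Y = matmul(X, W)                                  # n x h
grad_W = transpose(matmul(transpose(G), X))       # ((h x n)(n x f))' = f x h
grad_X = matmul(G, transpose(W))                  # (n x h)(h x f) = n x f

print(shape(Y), shape(grad_W), shape(grad_X))  # (2, 4) (3, 4) (2, 3)
```

Note that each gradient has the same shape as the object it belongs to: `grad_W` matches `W` and `grad_X` matches `X`.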

Create Linear Layer with PyTorch¶

Now we will implement our own Linear Layer in PyTorch using the concepts we defined above.

However, before we begin, we will take a different approach in how we will define our bias

Initially, we defined a bias column as below:

$$ \begin{pmatrix}1 & x_{11} & x_{12} & x_{13} \\1 & x_{21} & x_{22} & x_{23} \\1 & x_{31} & x_{32} & x_{33} \\\end{pmatrix} $$

However, this formulation has some practical problems. For every forward input that we receive, we would have to *manually add a bias column*. This column insertion is bookkeeping on the input rather than an operation on our parameters, so it sits awkwardly with the DL methodology of composing differentiable functions.

Therefore, we will re-formulate the bias as an addition operation of our linear output

$$ \begin{pmatrix}1 & x_{11} & x_{12} & x_{13} \\1 & x_{21} & x_{22} & x_{23} \\1 & x_{31} & x_{32} & x_{33} \\\end{pmatrix}\begin{pmatrix}w_0 \\w_1 \\w_2 \\w_3\end{pmatrix} = \begin{pmatrix}y_0 \\y_1 \\y_2 \end{pmatrix} = \begin{pmatrix} x_{11} & x_{12} & x_{13} \\ x_{21} & x_{22} & x_{23} \\ x_{31} & x_{32} & x_{33} \\\end{pmatrix} \begin{pmatrix}w_1 \\w_2 \\w_3\end{pmatrix} + \begin{pmatrix}w_0 \\w_0 \\w_0\end{pmatrix} $$

In this sense, our Linear Layer will now be a two-step operation if the bias is included.

As for the backward pass, the derivative of an addition with respect to each of its operands is always 1. Hence, our forward and backward pass for the bias become two simple operations.
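Since the same bias is added to every row of the batch, its gradient is the incoming gradient summed over the batch dimension. A minimal plain-Python sketch, with a hypothetical incoming gradient of shape (B, out_dim) = (2, 3):

```python
incoming_grad = [
    [1.0, -2.0, 3.0],
    [4.0, 5.0, -6.0],
]  # hypothetical values, one row per sample in the batch

# the local derivative is 1 for every row, so the gradients simply accumulate
grad_bias = [sum(col) for col in zip(*incoming_grad)]
print(grad_bias)  # [5.0, 3.0, -3.0]
```

This is the same reduction that a column sum of the incoming gradient performs on a tensor.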

Now, to reduce boilerplate code, we will subclass our Linear operation under PyTorch's torch.autograd.Function. This enables us to do three things:

i) define and generalize the forward and backward pass

ii) use PyTorch's context object (ctx), which lets us save objects from the forward pass for use in the backward pass and tells us which forward inputs need gradients (which lets us know whether to apply an Intermediate or a "Receiver" operation during the backward phase)

iii) Store backward's gradient output to our defined weight parameters

#Uncomment this line to install torch library

#!pip install torch

import torch

import torch.nn as nn

#No Nvidia graphic card

torch.rand((2,2))

# Nvidia graphic card

torch.randn((2,2)).cuda()

tensor([[ 0.6623, 0.8345],

[-0.1770, 0.7527]], device='cuda:0')

What does the code above do?¶

The import command loads the torch library into your notebook.

torch.rand((m,n)) creates a matrix of size m x n filled with random values in the range [0,1), while torch.randn((m,n)) draws its values from a standard normal distribution.

Note: You will see that the output has a type called Tensor, which is a multi-dimensional array used for storing numbers.

If your computer/laptop does not have an Nvidia graphics card, torch.randn((2,2)).cuda() will raise an error.

Note: Having a graphics card with a CUDA interface enables parallel computing when building deep learning models, which can drastically decrease training time. However, our model can still be trained without it.

# keep in mind that @staticmethod simply lets us call a method without instantiating the class
# Remember that our gradient will be of equal dimensions as our weight parameters
class Linear_Layer(torch.autograd.Function):
    """
    Define a Linear Layer operation
    """
    @staticmethod
    def forward(ctx, input, weights, bias = None):
        """
        In the forward pass, we feed this class all necessary objects to
        compute a linear layer (input, weights, and bias)
        """
        # input.dim = (B, in_dim)
        # weights.dim = (in_dim, out_dim)
        # given that the grad(output) wrt weight parameters equals the input,
        # we will save it to use for backpropagation
        ctx.save_for_backward(input, weights, bias)
        # linear transformation
        # (B, out_dim) = (B, in_dim) * (in_dim, out_dim)
        output = torch.mm(input, weights)
        if bias is not None:
            # bias.shape = (out_dim)
            # expanded_bias.shape = (B, out_dim), repeats bias B times
            expanded_bias = bias.unsqueeze(0).expand_as(output)
            # element-wise addition
            output += expanded_bias
        return output

    # incoming_grad represents the incoming gradient that we defined in the "Backward Pass" section
    # incoming_grad.shape == output.shape == (B, out_dim)
    @staticmethod
    def backward(ctx, incoming_grad):
        """
        In the backward pass we receive a Tensor (incoming_grad) containing the
        gradient of the loss with respect to our f(x) output,
        and we now need to compute the gradient of the loss
        with respect to our defined function.
        """
        # incoming_grad.shape = (B, out_dim)
        # extract inputs from forward pass
        input, weights, bias = ctx.saved_tensors
        # assume none of the inputs need gradients
        grad_input = grad_weight = grad_bias = None
        # we will figure out which forward inputs need grads
        # with ctx.needs_input_grad, which stores True/False
        # values in the order that the forward inputs came
        # we need to return as many gradients as inputs we used during the forward pass
        # if input requires grad
        if ctx.needs_input_grad[0]:
            # (B, in_dim) = (B, out_dim) * (out_dim, in_dim)
            grad_input = incoming_grad.mm(weights.t())
        # if weights require grad
        if ctx.needs_input_grad[1]:
            # (out_dim, in_dim) = (out_dim, B) * (B, in_dim)
            # transpose here, not in the return statement, so that a None
            # gradient is never transposed; result matches the weight layout (in_dim, out_dim)
            grad_weight = incoming_grad.t().mm(input).t()
        # if bias requires grad
        if bias is not None and ctx.needs_input_grad[2]:
            # below operation is equivalent to doing it the "long" way
            # given that bias grads = 1,
            # torch.ones((1,B)).mm(incoming_grad)
            # (out_dim) = (1,B)*(B,out_dim)
            grad_bias = incoming_grad.sum(0)
        # below, if any of the grads = None, they will simply be ignored
        return grad_input, grad_weight, grad_bias

# test forward method

# input_dim & output_dim can be any dimensions (you choose)

input_dim = 1

output_dim = 2

dummy_input= torch.ones((input_dim, output_dim)) # input that will be fed to model

# create a random set of weights that matches the dimensions of input to perform matrix multiplication

final_output_dim = 3 # can be set to any integer > 0

dummy_weight = nn.Parameter(torch.randn((output_dim, final_output_dim))) # nn.Parameter registers weights as parameters of the model

# feed input and weight tensors to our Linear Layer operation

output = Linear_Layer.apply(dummy_input, dummy_weight)

print(f"forward output: \n{output}")

print('-'*70)

print(f"forward output shape: \n{output.shape}")

forward output:
tensor([[0.7532, 0.5865, 0.9564]], grad_fn=<Linear_LayerBackward>)
----------------------------------------------------------------------
forward output shape:
torch.Size([1, 3])

Code explanation¶

We first create a Tensor of shape (1, 2), i.e. a single row with two features, and initialize it with the value 1: dummy_input = tensor([[1., 1.]]).

We then wrap a tensor filled with random values, with dimensions (2,3), under nn.Parameter. It represents the weights of our Linear Layer operation.

NOTE: We wrap our weights under nn.Parameter because, when we use our Linear Layer in any Deep Learning architecture, the wrapper will automagically register our weight tensor as a model parameter, which makes for easy extraction by just calling model.parameters(). Without it, the model would not be able to tell parameters apart from inputs.

After that, we obtain the output of the forward propagation using the apply method, providing the input and the weight. The apply function calls the forward function defined in the class Linear_Layer and returns the result of the forward propagation.

We then check the result and the shape of our output to make sure the calculation is done correctly.

At this point, if we check the gradient of dummy_weight, we will see None, since we need to propagate backward to obtain the gradient of the weight.

print(f"Weight's gradient {dummy_weight.grad}")

# test backward pass

## calculate gradient of subsequent operation w.r.t. defined weight parameters

incoming_grad = torch.ones((1,3)) # shape equals output dims

output.backward(incoming_grad) # calculate parameter gradients

# extract calculated gradient

dummy_weight.grad

tensor([[1., 1., 1.],

[1., 1., 1.]])

Now that we have our forward and backward method defined, let us define some important concepts.

By nature, Tensors that require gradients (such as parameters) automatically "record" a history of all the operations that have been applied to them.

For example, our forward output above carries the attribute grad_fn=<Linear_LayerBackward>, which tells us that the output is the result of our defined Linear Layer operation, whose history began with dummy_weight.

As such, once we call output.backward(incoming_grad), PyTorch automatically, from the last operation to the first, calls the backward method in order to compute the chain-gradient that corresponds to our parameters.

To truly understand what is going on and how PyTorch simplifies the backward phase, we will show a more extensive example where we manually compute the gradient of our parameters with our own defined backward() methods

class Linear_Layer_():
    def __init__(self):
        # tensors from the forward pass are stored here for the backward pass
        self.input = self.weights = self.bias = None

    def forward(self, input, weights, bias = None):
        self.input = input
        self.weights = weights
        self.bias = bias
        output = torch.mm(input, weights)
        if bias is not None:
            # bias.shape = (out_dim)
            # expanded_bias.shape = (B, out_dim), repeats bias B times
            expanded_bias = bias.unsqueeze(0).expand_as(output)
            # element-wise addition
            output += expanded_bias
        return output

    def backward(self, incoming_grad):
        # extract inputs from forward pass
        input = self.input
        weights = self.weights
        bias = self.bias
        grad_input = grad_weight = grad_bias = None
        # if input requires grad
        if input.requires_grad:
            grad_input = incoming_grad.mm(weights.t())
        # if weights require grad
        if weights.requires_grad:
            # transpose here so that a None gradient is never transposed
            grad_weight = incoming_grad.t().mm(input).t()
        # if a bias was given and it requires grad
        if bias is not None and bias.requires_grad:
            grad_bias = incoming_grad.sum(0)
        return grad_input, grad_weight, grad_bias

# manual forward pass

input= torch.ones((1,2)) # input

# define weights for linear layers

weight1 = nn.Parameter(torch.randn((2,3)))

weight2 = nn.Parameter(torch.randn((3,5)))

weight3 = nn.Parameter(torch.randn((5,1)))

# define bias for Linear layers

bias1 = nn.Parameter(torch.randn((3)))

bias2 = nn.Parameter(torch.randn((5)))

bias3 = nn.Parameter(torch.randn((1)))

# define Linear Layers

linear1 = Linear_Layer_()

linear2 = Linear_Layer_()

linear3 = Linear_Layer_()

# define forward pass

output1 = linear1.forward(input, weight1,bias1)

output2 = linear2.forward(output1, weight2,bias2)

output3 = linear3.forward(output2, weight3,bias3)

print(f"output1.shape: {output1.shape}")

print('-'*50)

print(f"output2.shape: {output2.shape}")

print('-'*50)

print(f"output3.shape: {output3.shape}")

output1.shape: torch.Size([1, 3])
--------------------------------------------------
output2.shape: torch.Size([1, 5])
--------------------------------------------------
output3.shape: torch.Size([1, 1])

# manual backward pass

# compute intermediate and receiver backward pass

input_grad1, weight_grad1, bias_grad1 = linear3.backward(torch.tensor([[1.]]))

print(f"input_grad1.shape: {input_grad1.shape}")

print('-'*50)

print(f"weight_grad1.shape: {weight_grad1.shape}")

print('-'*50)

print(f"bias_grad1.shape: {bias_grad1.shape}")

input_grad1.shape: torch.Size([1, 5])
--------------------------------------------------
weight_grad1.shape: torch.Size([5, 1])
--------------------------------------------------
bias_grad1.shape: torch.Size([1])

# compute intermediate and receiver backward pass

input_grad2, weight_grad2, bias_grad2 = linear2.backward(input_grad1)

print(f"input_grad2.shape: {input_grad2.shape}")

print('-'*50)

print(f"weight_grad2.shape: {weight_grad2.shape}")

print('-'*50)

print(f"bias_grad2.shape: {bias_grad2.shape}")

input_grad2.shape: torch.Size([1, 3])
--------------------------------------------------
weight_grad2.shape: torch.Size([3, 5])
--------------------------------------------------
bias_grad2.shape: torch.Size([5])

# compute receiver backward pass

input_grad3, weight_grad3, bias_grad3 = linear1.backward(input_grad2)

print(f"input_grad3: {input_grad3}")

print('-'*50)

print(f"weight_grad3.shape: {weight_grad3.shape}")

print('-'*50)

print(f"bias_grad3.shape: {bias_grad3.shape}")

input_grad3: None
--------------------------------------------------
weight_grad3.shape: torch.Size([2, 3])
--------------------------------------------------
bias_grad3.shape: torch.Size([3])

# now, add gradients to the corresponding parameters

weight1.grad = weight_grad3

weight2.grad = weight_grad2

weight3.grad = weight_grad1

bias1.grad = bias_grad3

bias2.grad = bias_grad2

bias3.grad = bias_grad1

# inspect manual calculated gradients

print(f"weight1.grad = \n{weight1.grad}")

print('-'*70)

print(f"weight2.grad = \n{weight2.grad}")

print('-'*70)

print(f"weight3.grad = \n{weight3.grad}")

print('-'*70)

print(f"bias1.grad = \n{bias1.grad}")

print('-'*70)

print(f"bias2.grad = \n{bias2.grad}")

print('-'*70)

print(f"bias3.grad = \n{bias3.grad}")

weight1.grad =

tensor([[-0.9869, 0.0548, 0.3107],

[-0.9869, 0.0548, 0.3107]], grad_fn=<TBackward>)

----------------------------------------------------------------------

weight2.grad =

tensor([[ 2.3822, 0.9312, 2.2510, -1.0365, 3.1596],

[ 1.3770, 0.5383, 1.3011, -0.5992, 1.8263],

[-1.3396, -0.5237, -1.2658, 0.5829, -1.7767]], grad_fn=<TBackward>)

----------------------------------------------------------------------

weight3.grad =

tensor([[-6.3651],

[-3.5532],

[-5.9865],

[ 0.7347],

[ 5.3876]], grad_fn=<TBackward>)

----------------------------------------------------------------------

bias1.grad =

tensor([-0.9869, 0.0548, 0.3107], grad_fn=<SumBackward2>)

----------------------------------------------------------------------

bias2.grad =

tensor([ 0.6981, 0.2729, 0.6597, -0.3038, 0.9260], grad_fn=<SumBackward2>)

----------------------------------------------------------------------

bias3.grad =

tensor([1.])

# now, we take our "step"

lr = .01

# perform "step" on weight parameters

weight1.data.add_(weight1.grad, alpha = -lr) # ==weight1.data+weight1.grad*-lr

weight2.data.add_(weight2.grad, alpha = -lr)

weight3.data.add_(weight3.grad, alpha = -lr)

# perform "step" on bias parameters

bias1.data.add_(bias1.grad, alpha = -lr)

bias2.data.add_(bias2.grad, alpha = -lr)

bias3.data.add_(bias3.grad, alpha = -lr)

# now that the step has been performed, zero out gradient values

weight1.grad.zero_()

weight2.grad.zero_()

weight3.grad.zero_()

bias1.grad.zero_()

bias2.grad.zero_()

bias3.grad.zero_()

# get ready for the next forward pass

tensor([0.])

Phew! We have now officially performed a "step" update! Let's review what we did:

1. Defined all needed forward and backward operations

2. Created a 3-layer model

3. Calculated forward pass

4. Calculated backward pass for all parameters

5. Performed step

6. zero-out gradients

Of course, we could have simplified the code by storing the layers in a list-like structure and looping over all the needed operations.

However, for the sake of clarity and understanding, we laid out all the steps in a logical manner.
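For reference, the loop-based variant could look roughly like the sketch below. It is written in plain Python for a single input row so that it runs without torch; the class `TinyLinear`, its `step` method and all numbers are hypothetical stand-ins for the layers used above, not the lesson's implementation.

```python
class TinyLinear:
    """Plain-Python linear layer for one input row (illustrative only)."""
    def __init__(self, weights, bias):
        self.weights = weights   # in_dim x out_dim, list of lists
        self.bias = bias         # out_dim

    def forward(self, x):
        self.x = x               # saved for the backward pass
        return [sum(x[i] * self.weights[i][j] for i in range(len(x))) + self.bias[j]
                for j in range(len(self.bias))]

    def backward(self, incoming):
        # "Receiver" gradients (w.r.t. the parameters)
        self.grad_w = [[xi * g for g in incoming] for xi in self.x]
        self.grad_b = list(incoming)
        # intermediate gradient (w.r.t. the input), handed to the previous layer
        return [sum(g * w for g, w in zip(incoming, row)) for row in self.weights]

    def step(self, lr):
        self.weights = [[w - lr * g for w, g in zip(w_row, g_row)]
                        for w_row, g_row in zip(self.weights, self.grad_w)]
        self.bias = [b - lr * g for b, g in zip(self.bias, self.grad_b)]

layers = [
    TinyLinear([[1.0, 2.0], [0.0, -1.0]], [0.0, 0.0]),   # 2 -> 2
    TinyLinear([[1.0], [1.0]], [0.0]),                   # 2 -> 1
]

out = [1.0, 1.0]
for layer in layers:              # forward pass, first layer to last
    out = layer.forward(out)

grad = [1.0]                      # incoming gradient for the final output
for layer in reversed(layers):    # backward pass, last layer to first
    grad = layer.backward(grad)

for layer in layers:              # "step" on every parameter
    layer.step(lr=0.01)

print(out, grad)  # [2.0] [3.0, -1.0]
```

In this sketch the gradients are overwritten on every backward call, so no explicit zeroing is needed; with accumulated gradients, as in PyTorch, the zero-out step would go at the end of the loop.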

Now, how can the equivalent of the forward and backward operations be performed in PyTorch?

# PyTorch forward pass

input= torch.ones((1,2)) # input

# define weights for linear layers

weight1 = nn.Parameter(torch.randn((2,3)))

weight2 = nn.Parameter(torch.randn((3,5)))

weight3 = nn.Parameter(torch.randn((5,1)))

# define bias for Linear layers

bias1 = nn.Parameter(torch.randn((3)))

bias2 = nn.Parameter(torch.randn((5)))

bias3 = nn.Parameter(torch.randn((1)))

# define Linear Layers

output1 = Linear_Layer.apply(input,weight1,bias1)

output2 = Linear_Layer.apply(output1, weight2, bias2)

output3 = Linear_Layer.apply(output2, weight3, bias3)

print(f"output1.shape: {output1.shape}")

print('-'*50)

print(f"output2.shape: {output2.shape}")

print('-'*50)

print(f"output3.shape: {output3.shape}")

output1.shape: torch.Size([1, 3])
--------------------------------------------------
output2.shape: torch.Size([1, 5])
--------------------------------------------------
output3.shape: torch.Size([1, 1])

# calculate all gradients with PyTorch's "operation history"

# it essentially just calls our defined backward methods in

# the order of applied operations (such as we did above)

output3.backward()

# inspect PyTorch calculated gradients

print(f"weight1.grad = \n{weight1.grad}")

print('-'*70)

print(f"weight2.grad = \n{weight2.grad}")

print('-'*70)

print(f"weight3.grad = \n{weight3.grad}")

print('-'*70)

print(f"bias1.grad = \n{bias1.grad}")

print('-'*70)

print(f"bias2.grad = \n{bias2.grad}")

print('-'*70)

print(f"bias3.grad = \n{bias3.grad}")

weight1.grad =

tensor([[ 0.2195, -3.4776, 3.3395],

[ 0.2195, -3.4776, 3.3395]])

----------------------------------------------------------------------

weight2.grad =

tensor([[ 2.6869, -0.6504, 1.1048, -1.9001, 3.5497],

[ 1.7754, -0.4298, 0.7300, -1.2555, 2.3455],

[ 1.1182, -0.2707, 0.4598, -0.7908, 1.4773]])

----------------------------------------------------------------------

weight3.grad =

tensor([[ 0.0630],

[ 1.2594],

[-3.3520],

[-1.9508],

[-0.3700]])

----------------------------------------------------------------------

bias1.grad =

tensor([ 0.2195, -3.4776, 3.3395])

----------------------------------------------------------------------

bias2.grad =

tensor([ 1.3815, -0.3344, 0.5681, -0.9770, 1.8251])

----------------------------------------------------------------------

bias3.grad =

tensor([1.])

Now, instead of having to define a weight and a bias parameter each time we need a Linear_Layer, we will wrap our operation in PyTorch's nn.Module, which allows us to:

i) define all parameters (weight and bias) in a single object and

ii) create an easy-to-use interface to build a Linear transformation of any shape (as long as it fits in memory)

class Linear(nn.Module):
    def __init__(self, in_dim, out_dim, bias = True):
        super().__init__()
        self.in_dim = in_dim
        self.out_dim = out_dim
        # define parameters
        # weight parameter
        self.weight = nn.Parameter(torch.randn((in_dim, out_dim)))
        # bias parameter
        if bias:
            self.bias = nn.Parameter(torch.randn((out_dim)))
        else:
            # register parameter as None if not initialized
            self.register_parameter('bias',None)

    def forward(self, input):
        output = Linear_Layer.apply(input, self.weight, self.bias)
        return output

# initialize model and extract all model parameters

m = Linear(1,1, bias = True)

param = list(m.parameters())

param

[Parameter containing: tensor([[-1.7011]], requires_grad=True), Parameter containing: tensor([-0.0320], requires_grad=True)]

# once gradients have been computed and a step has been taken,

# we can zero-out all gradient values in parameters with below

m.zero_grad()

MNIST¶

We will implement our Linear Layer operation to classify digits on the MNIST dataset.

This data is often used as an introduction to DL as it has two desirable properties:

- 60000 training observations

- Small single-channel (grayscale) 28 x 28 images, which dramatically reduces complexity

Given the volume of data, it may not be feasible to load all 60000 images at once and feed them to our model. Hence, we will split our data into batches (64 images per training batch and 128 per evaluation batch) to keep memory use manageable.
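To see what batching amounts to, here is a minimal sketch that only computes index ranges. The helper `batch_slices` is hypothetical, and the batch size of 64 is an assumption chosen to match the training DataLoader defined further down.

```python
def batch_slices(n_items, batch_size):
    """Yield (start, stop) index pairs that cover n_items in order."""
    for start in range(0, n_items, batch_size):
        yield start, min(start + batch_size, n_items)

slices = list(batch_slices(60000, 64))
print(len(slices))   # 938
print(slices[-1])    # (59968, 60000)
```

The last batch is smaller (32 images here) because 60000 is not a multiple of 64, which is why the code later works with the batch size of each batch rather than a hard-coded constant.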

We will import this data using torchvision and feed it to a DataLoader, which splits our data into batches

# import training MNIST dataset

import torchvision

from torchvision import transforms

import numpy as np

from torchvision.utils import make_grid

import matplotlib.pyplot as plt

from torch.utils.data import DataLoader

root = r'C:\Users\erick\PycharmProjects\untitled\3D_2D_GAN\MNIST_experimentation'

train_mnist = torchvision.datasets.MNIST(root = root,

train = True,

transform = transforms.ToTensor(),

download = False,

)

train_mnist.data.shape

torch.Size([60000, 28, 28])

# import testing MNIST dataset

eval_mnist = torchvision.datasets.MNIST(root = root,

train = False,

transform = transforms.ToTensor(),

download = False,

)

eval_mnist.data.shape

torch.Size([10000, 28, 28])

# visualize our data

grid_images = np.transpose(make_grid(train_mnist.data[:64].unsqueeze(1)), (1,2,0))

plt.figure(figsize=(8,8))

plt.axis("off")

plt.title("Training Images")

plt.imshow(grid_images,cmap = 'gray')

<matplotlib.image.AxesImage at 0x2bb00165160>

# normalize data

train_mnist.data = (train_mnist.data.float() - train_mnist.data.float().mean()) / train_mnist.data.float().std()

eval_mnist.data = (eval_mnist.data.float() - eval_mnist.data.float().mean()) / eval_mnist.data.float().std()

# split data into batches (64 for training, 128 for evaluation)

# pin_memory = True if you have CUDA. It will speed up I/O

train_dl = DataLoader(train_mnist, batch_size = 64,

shuffle = True, pin_memory = True)

eval_dl = DataLoader(eval_mnist, batch_size = 128,

shuffle = True, pin_memory = True)

batch_images, batch_labels = next(iter(train_dl))

print(f"batch_images.shape: {batch_images.shape}")

print('-'*50)

print(f"batch_labels.shape: {batch_labels.shape}")

batch_images.shape: torch.Size([64, 1, 28, 28])
--------------------------------------------------
batch_labels.shape: torch.Size([64])

Build Neural Network¶

Now that our data has been defined, we will implement our architecture.

This section will introduce three new concepts: ReLU, Cross-Entropy-Loss, and Stochastic Gradient Descent.

In short, ReLU is a famous activation function that adds non-linearity to our model, Cross-Entropy-Loss is the criterion we use to train our model, and Stochastic Gradient Descent defines the "step" operation to update our weight parameters.

For the sake of compactness, a comprehensive description and implementation of each of these functions can be found in the main repo or by following their hyperlinks.

Our model will consist of the structure below (where each operation except for the last is followed by a ReLU operation):

[128, 64, 10]

class NeuralNet(nn.Module):
    def __init__(self, num_units = 128, activation = nn.ReLU()):
        super().__init__()
        # fully-connected layers
        self.fc1 = Linear(784,num_units)
        self.fc2 = Linear(num_units , num_units//2)
        self.fc3 = Linear(num_units // 2, 10)
        # init ReLU
        self.activation = activation

    def forward(self,x):
        # 1st layer
        output = self.activation(self.fc1(x))
        # 2nd layer
        output = self.activation(self.fc2(output))
        # 3rd layer
        output = self.fc3(output)
        return output

# initiate model

model = NeuralNet(128)

model

NeuralNet( (fc1): Linear() (fc2): Linear() (fc3): Linear() (activation): ReLU() )

# test model

input = torch.randn((1,784))

model(input).shape

torch.Size([1, 10])

Next, we will instantiate our loss criterion.

We will use Cross-Entropy-Loss as our criterion for two reasons:

- Our objective is to classify data and

- There are 10 classes to choose from (the digits 0-9)

This criterion heavily "penalizes" the model if the confidence assigned to the true class is low (e.g. a confidence of .01 for 9 when 9 is actually the true value), since the loss is the negative logarithm of that confidence, but it is much more lenient if our prediction is close to the truth.

The CrossEntropyLoss criterion applies a Softmax to the model's raw outputs before computing the Cross-Entropy-Loss, as the loss is only well-defined for confidences in [0,1].
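The behaviour described above can be illustrated with a small plain-Python sketch of softmax followed by the negative log-likelihood. The function `cross_entropy` and the raw scores are hypothetical; this is not PyTorch's implementation, only the same arithmetic for a single sample.

```python
import math

def cross_entropy(logits, target):
    """Softmax over raw scores, then the negative log of the target's probability."""
    shift = max(logits)                              # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    return -math.log(probs[target]), probs

# hypothetical raw model outputs for three classes
scores = [5.0, 0.0, 0.0]
loss_right, probs = cross_entropy(scores, target=0)   # the confident class is the true one
loss_wrong, _ = cross_entropy(scores, target=1)       # the true class received little confidence
print(loss_right, loss_wrong)
```

The first loss is close to zero while the second is roughly 5, which shows how sharply the penalty grows when the true class receives little confidence.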

# initiate loss criterion

criterion = nn.CrossEntropyLoss()

criterion

CrossEntropyLoss()

Next, we define our optimizer: Stochastic Gradient Descent. All this algorithm does is take the gradient values of our parameters and perform the step below, where $\alpha$ is the learning rate (lr):

$$ w_j=w_j-\alpha\frac{\partial }{\partial w_j}L(w_j) $$

from torch import optim

optimizer = optim.SGD(model.parameters(), lr = .01)

optimizer

SGD (

Parameter Group 0

dampening: 0

lr: 0.01

momentum: 0

nesterov: False

weight_decay: 0

)
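The update rule is small enough to sketch in plain Python. The helper `sgd_step` and the one-parameter toy loss are assumptions for illustration; the actual optimizer above operates on the model's tensors.

```python
def sgd_step(params, grads, lr):
    """Return the parameters moved against their gradients: w_j - lr * dL/dw_j."""
    return [w - lr * g for w, g in zip(params, grads)]

# hypothetical one-parameter problem: L(w) = (w - 3)^2, so dL/dw = 2 * (w - 3)
w = [0.0]
for _ in range(200):
    grads = [2.0 * (w[0] - 3.0)]
    w = sgd_step(w, grads, lr=0.1)
print(w)
```

With this learning rate the distance to the minimum at 3 shrinks by a factor of 0.8 per step, so `w` ends up very close to 3.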

We will use PyTorch's device object and feed it to our model's .to method to place all our operations on the GPU for accelerated training

# if we do not have a GPU, skip this step

# define a CUDA connection

device = torch.device('cuda')

# place model in GPU

model = model.to(device)

Train Neural Net¶

Define the training scheme

# compute average accuracy of batch

def accuracy(pred, labels):

# predictions.shape = (B, 10)

# labels.shape = (B)

n_batch = labels.shape[0]

# extract idx of max value from our batch predictions

# predicted.shape = (B)

_, preds = torch.max(pred, 1)

# compute average accuracy of our batch

compare = (preds == labels).sum()

return compare.item() / n_batch

def train(model, iterator, optimizer, criterion):
    # hold the running sum of batch losses and accuracies
    epoch_loss = 0
    epoch_acc = 0

    # use whichever device the model's parameters live on (GPU or CPU)
    device = next(model.parameters()).device

    for batch in iterator:
        # zero-out all gradients (if any) from our model parameters
        model.zero_grad()

        # extract input and label
        # input.shape = (B, 784), "flatten" image
        input = batch[0].view(-1, 784).to(device)
        # label.shape = (B)
        label = batch[1].to(device)

        # forward pass: PyTorch builds its dynamic graph here
        # predictions.shape = (B, 10)
        predictions = model(input)

        # average batch loss
        loss = criterion(predictions, label)

        # calculate grad(loss) / grad(parameters);
        # this also "clears" PyTorch's dynamic graph
        loss.backward()

        # perform SGD "step" operation
        optimizer.step()

        # Given that PyTorch tensors are "contagious" (they record all operations),
        # we need to ".detach()" them before computing any performance statistics
        # average batch accuracy
        acc = accuracy(predictions.detach(), label)

        # record our stats
        epoch_loss += loss.detach()
        epoch_acc += acc

    # NOTE: tensor.item() unpacks a one-element Tensor into a regular Python number
    # torch.tensor([1]).item() == 1
    # return average loss and acc of epoch
    return epoch_loss.item() / len(iterator), epoch_acc / len(iterator)

def evaluate(model, iterator, criterion):
    epoch_loss = 0
    epoch_acc = 0

    # use whichever device the model's parameters live on (GPU or CPU)
    device = next(model.parameters()).device

    # turn off grad tracking as we are only evaluating performance
    with torch.no_grad():
        for batch in iterator:
            # extract input and label
            input = batch[0].view(-1, 784).to(device)
            label = batch[1].to(device)

            # predictions.shape = (B, 10)
            predictions = model(input)

            # average batch loss
            loss = criterion(predictions, label)

            # average batch accuracy
            acc = accuracy(predictions, label)

            epoch_loss += loss
            epoch_acc += acc

    return epoch_loss.item() / len(iterator), epoch_acc / len(iterator)

import time

# record time it takes to train and evaluate an epoch
def epoch_time(start_time, end_time):
    elapsed_time = end_time - start_time  # total time in seconds
    elapsed_mins = int(elapsed_time / 60)  # whole minutes
    elapsed_secs = int(elapsed_time - (elapsed_mins * 60))  # remaining seconds
    return elapsed_mins, elapsed_secs
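As a quick worked example of the minutes/seconds split, the sketch below does the same arithmetic with Python's built-in divmod. The name split_elapsed is hypothetical and is only used here so that it does not replace epoch_time.

```python
def split_elapsed(start_time, end_time):
    # whole seconds elapsed, split into (minutes, seconds)
    elapsed = int(end_time - start_time)
    mins, secs = divmod(elapsed, 60)
    return mins, secs

# an epoch that started at t = 10.0 s and ended at t = 135.7 s
example = split_elapsed(10.0, 135.7)
print(example)
```

An epoch lasting 125.7 seconds is reported as 2 minutes and 5 seconds; the fractional part is dropped, as in epoch_time.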

N_EPOCHS = 25

# track statistics
track_stats = {'epoch': [],
               'train_loss': [],
               'train_acc': [],
               'valid_loss': [],
               'valid_acc': []}

best_valid_loss = float('inf')

for epoch in range(N_EPOCHS):
    start_time = time.time()
    train_loss, train_acc = train(model, train_dl, optimizer, criterion)
    valid_loss, valid_acc = evaluate(model, eval_dl, criterion)
    end_time = time.time()

    # record statistics
    track_stats['epoch'].append(epoch + 1)
    track_stats['train_loss'].append(train_loss)
    track_stats['train_acc'].append(train_acc)
    track_stats['valid_loss'].append(valid_loss)
    track_stats['valid_acc'].append(valid_acc)

    epoch_mins, epoch_secs = epoch_time(start_time, end_time)

    # if this was our best performance, record model parameters
    if valid_loss < best_valid_loss:
        best_valid_loss = valid_loss
        torch.save(model.state_dict(), 'best_linear_params.pt')

    # print out stats
    print('-'*75)
    print(f'Epoch: {epoch+1:02} | Epoch Time: {epoch_mins}m {epoch_secs}s')
    print(f'\tTrain Loss: {train_loss:.3f} | Train Acc: {train_acc*100:.2f}%')
    print(f'\t Val. Loss: {valid_loss:.3f} | Val. Acc: {valid_acc*100:.2f}%')

---------------------------------------------------------------------------
Epoch: 01 | Epoch Time: 0m 30s
    Train Loss: 2.213 | Train Acc: 15.09%
     Val. Loss: 11.462 | Val. Acc: 9.38%
---------------------------------------------------------------------------
Epoch: 02 | Epoch Time: 0m 30s
    Train Loss: 2.201 | Train Acc: 15.77%
     Val. Loss: 15.436 | Val. Acc: 9.82%
---------------------------------------------------------------------------
Epoch: 03 | Epoch Time: 0m 30s
    Train Loss: 2.193 | Train Acc: 15.93%
     Val. Loss: 17.744 | Val. Acc: 9.46%
---------------------------------------------------------------------------
Epoch: 04 | Epoch Time: 0m 30s
    Train Loss: 2.168 | Train Acc: 17.62%
     Val. Loss: 19.838 | Val. Acc: 9.68%
---------------------------------------------------------------------------
Epoch: 05 | Epoch Time: 0m 30s
    Train Loss: 2.132 | Train Acc: 19.22%
     Val. Loss: 21.154 | Val. Acc: 9.47%
---------------------------------------------------------------------------
Epoch: 06 | Epoch Time: 0m 29s
    Train Loss: 2.101 | Train Acc: 20.55%
     Val. Loss: 21.468 | Val. Acc: 9.46%
---------------------------------------------------------------------------
Epoch: 07 | Epoch Time: 0m 29s
    Train Loss: 2.077 | Train Acc: 21.55%
     Val. Loss: 19.181 | Val. Acc: 9.54%
---------------------------------------------------------------------------
Epoch: 08 | Epoch Time: 0m 29s
    Train Loss: 2.051 | Train Acc: 22.55%
     Val. Loss: 17.388 | Val. Acc: 9.64%
---------------------------------------------------------------------------
Epoch: 09 | Epoch Time: 0m 29s
    Train Loss: 2.031 | Train Acc: 22.94%
     Val. Loss: 15.644 | Val. Acc: 10.23%
---------------------------------------------------------------------------
Epoch: 10 | Epoch Time: 0m 30s
    Train Loss: 2.012 | Train Acc: 23.96%
     Val. Loss: 15.170 | Val. Acc: 9.63%
---------------------------------------------------------------------------
Epoch: 11 | Epoch Time: 0m 29s
    Train Loss: 1.996 | Train Acc: 24.24%
     Val. Loss: 12.971 | Val. Acc: 9.92%
---------------------------------------------------------------------------
Epoch: 12 | Epoch Time: 0m 32s
    Train Loss: 1.980 | Train Acc: 25.02%
     Val. Loss: 12.088 | Val. Acc: 10.27%
---------------------------------------------------------------------------
Epoch: 13 | Epoch Time: 0m 22s
    Train Loss: 1.967 | Train Acc: 25.26%
     Val. Loss: 11.535 | Val. Acc: 10.73%
---------------------------------------------------------------------------
Epoch: 14 | Epoch Time: 0m 12s
    Train Loss: 1.955 | Train Acc: 25.72%
     Val. Loss: 9.970 | Val. Acc: 9.86%
---------------------------------------------------------------------------
Epoch: 15 | Epoch Time: 0m 13s
    Train Loss: 1.943 | Train Acc: 26.42%
     Val. Loss: 10.950 | Val. Acc: 10.29%
---------------------------------------------------------------------------
Epoch: 16 | Epoch Time: 0m 14s
    Train Loss: 1.935 | Train Acc: 26.69%
     Val. Loss: 9.350 | Val. Acc: 12.06%
---------------------------------------------------------------------------
Epoch: 17 | Epoch Time: 0m 14s
    Train Loss: 1.928 | Train Acc: 27.14%
     Val. Loss: 9.407 | Val. Acc: 10.16%
---------------------------------------------------------------------------
Epoch: 18 | Epoch Time: 0m 16s
    Train Loss: 1.918 | Train Acc: 27.60%
     Val. Loss: 9.823 | Val. Acc: 9.86%
---------------------------------------------------------------------------
Epoch: 19 | Epoch Time: 0m 16s
    Train Loss: 1.914 | Train Acc: 27.59%
     Val. Loss: 9.612 | Val. Acc: 10.27%
---------------------------------------------------------------------------
Epoch: 20 | Epoch Time: 0m 12s
    Train Loss: 1.906 | Train Acc: 27.85%
     Val. Loss: 10.421 | Val. Acc: 10.40%
---------------------------------------------------------------------------
Epoch: 21 | Epoch Time: 0m 12s
    Train Loss: 1.903 | Train Acc: 28.06%
     Val. Loss: 10.308 | Val. Acc: 10.47%
---------------------------------------------------------------------------
Epoch: 22 | Epoch Time: 0m 12s
    Train Loss: 1.894 | Train Acc: 28.63%
     Val. Loss: 9.670 | Val. Acc: 10.06%
---------------------------------------------------------------------------
Epoch: 23 | Epoch Time: 0m 12s
    Train Loss: 1.888 | Train Acc: 28.85%
     Val. Loss: 10.267 | Val. Acc: 9.95%
---------------------------------------------------------------------------
Epoch: 24 | Epoch Time: 0m 12s
    Train Loss: 1.885 | Train Acc: 28.74%
     Val. Loss: 9.961 | Val. Acc: 10.07%
---------------------------------------------------------------------------
Epoch: 25 | Epoch Time: 0m 12s
    Train Loss: 1.878 | Train Acc: 29.04%
     Val. Loss: 10.058 | Val. Acc: 10.11%
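The training loop saves the model parameters whenever the validation loss reaches a new minimum. As a rough check of which epochs triggered a save, the plain-Python sketch below replays that rule on the validation losses copied (rounded to three decimals) from the log above; saved_epochs is an illustrative variable, not something the loop records.

```python
# validation loss per epoch, copied from the training log above
valid_losses = [11.462, 15.436, 17.744, 19.838, 21.154, 21.468, 19.181,
                17.388, 15.644, 15.170, 12.971, 12.088, 11.535, 9.970,
                10.950, 9.350, 9.407, 9.823, 9.612, 10.421, 10.308,
                9.670, 10.267, 9.961, 10.058]

best_valid_loss = float('inf')
saved_epochs = []  # epochs at which the loop would have called torch.save

for epoch, valid_loss in enumerate(valid_losses, start=1):
    # same rule as in the training loop: save on every new minimum
    if valid_loss < best_valid_loss:
        best_valid_loss = valid_loss
        saved_epochs.append(epoch)

print(saved_epochs, best_valid_loss)
```

Under this rule the file best_linear_params.pt ends up holding the parameters from the last epoch in saved_epochs, not those from the final epoch of training.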

Visualization¶

The statistics above are useful, but we can understand them much better by graphing them.

We will do this with HiPlot, a recently released library from Facebook for visualizing high-dimensional data.

HiPlot draws a parallel-coordinates plot: each dimension gets its own vertical axis, and each data point is drawn as a line that crosses every axis.

Before we use it, we need to format our data as a list of dictionaries.

# format data

import pandas as pd

stats = pd.DataFrame(track_stats)

stats

| | epoch | train_loss | train_acc | valid_loss | valid_acc |
|---|---|---|---|---|---|
| 0 | 1 | 2.212897 | 0.150920 | 11.462227 | 0.093750 |
| 1 | 2 | 2.201463 | 0.157666 | 15.435633 | 0.098200 |
| 2 | 3 | 2.193212 | 0.159348 | 17.743526 | 0.094640 |
| 3 | 4 | 2.167792 | 0.176156 | 19.837977 | 0.096816 |
| 4 | 5 | 2.132317 | 0.192181 | 21.154042 | 0.094739 |
| 5 | 6 | 2.100851 | 0.205507 | 21.467726 | 0.094640 |
| 6 | 7 | 2.076702 | 0.215452 | 19.181373 | 0.095431 |
| 7 | 8 | 2.051445 | 0.225546 | 17.387510 | 0.096420 |
| 8 | 9 | 2.031049 | 0.229428 | 15.643752 | 0.102255 |
| 9 | 10 | 2.012228 | 0.239622 | 15.169947 | 0.096321 |
| 10 | 11 | 1.995873 | 0.242387 | 12.971168 | 0.099189 |
| 11 | 12 | 1.980406 | 0.250200 | 12.088010 | 0.102650 |
| 12 | 13 | 1.967482 | 0.252649 | 11.534692 | 0.107298 |
| 13 | 14 | 1.954952 | 0.257229 | 9.970132 | 0.098596 |
| 14 | 15 | 1.942960 | 0.264226 | 10.950436 | 0.102947 |
| 15 | 16 | 1.935199 | 0.266908 | 9.349646 | 0.120649 |
| 16 | 17 | 1.928071 | 0.271372 | 9.406645 | 0.101562 |
| 17 | 18 | 1.917641 | 0.276036 | 9.823315 | 0.098596 |
| 18 | 19 | 1.914162 | 0.275853 | 9.611549 | 0.102749 |
| 19 | 20 | 1.906237 | 0.278501 | 10.421081 | 0.104035 |
| 20 | 21 | 1.902847 | 0.280584 | 10.308280 | 0.104727 |
| 21 | 22 | 1.893793 | 0.286347 | 9.669761 | 0.100574 |
| 22 | 23 | 1.887595 | 0.288513 | 10.266509 | 0.099486 |
| 23 | 24 | 1.884877 | 0.287380 | 9.961499 | 0.100672 |
| 24 | 25 | 1.878398 | 0.290378 | 10.058255 | 0.101068 |

data = []

# iterrows yields (index, row) pairs; each row becomes one dictionary
for row in stats.iterrows():
    data.append(row[1].to_dict())

data

[{'epoch': 1.0,

'train_loss': 2.212897131946295,

'train_acc': 0.15091950959488273,

'valid_loss': 11.462226964250396,

'valid_acc': 0.09375},

{'epoch': 2.0,

'train_loss': 2.2014626053604744,

'train_acc': 0.15766591151385928,

'valid_loss': 15.43563340585443,

'valid_acc': 0.0982001582278481},

{'epoch': 3.0,

'train_loss': 2.193212318013726,

'train_acc': 0.15934834754797442,

'valid_loss': 17.743525637856013,

'valid_acc': 0.09464003164556962},

{'epoch': 4.0,

'train_loss': 2.1677922816164714,

'train_acc': 0.1761560501066098,

'valid_loss': 19.837977155854432,

'valid_acc': 0.09681566455696203},

{'epoch': 5.0,

'train_loss': 2.1323169309701493,

'train_acc': 0.1921808368869936,

'valid_loss': 21.154041918018198,

'valid_acc': 0.09473892405063292},

{'epoch': 6.0,

'train_loss': 2.100850640075293,

'train_acc': 0.2055070628997868,

'valid_loss': 21.467725536491297,

'valid_acc': 0.09464003164556962},

{'epoch': 7.0,

'train_loss': 2.076701670567364,

'train_acc': 0.2154517590618337,

'valid_loss': 19.181373306467563,

'valid_acc': 0.09543117088607594},

{'epoch': 8.0,

'train_loss': 2.0514450886610476,

'train_acc': 0.22554637526652452,

'valid_loss': 17.387509889240505,

'valid_acc': 0.09642009493670886},

{'epoch': 9.0,

'train_loss': 2.0310485449426974,

'train_acc': 0.22942763859275053,

'valid_loss': 15.643752472310126,

'valid_acc': 0.10225474683544304},

{'epoch': 10.0,

'train_loss': 2.012227853478145,

'train_acc': 0.23962220149253732,

'valid_loss': 15.169946598101266,

'valid_acc': 0.09632120253164557},

{'epoch': 11.0,

'train_loss': 1.995873294659515,

'train_acc': 0.24238739339019189,

'valid_loss': 12.971168228342563,

'valid_acc': 0.09918908227848101},

{'epoch': 12.0,

'train_loss': 1.9804057627598615,

'train_acc': 0.2501998933901919,

'valid_loss': 12.088009604924842,

'valid_acc': 0.10265031645569621},

{'epoch': 13.0,

'train_loss': 1.967482056444896,

'train_acc': 0.25264858742004265,

'valid_loss': 11.534691919254351,

'valid_acc': 0.10729825949367089},

{'epoch': 14.0,

'train_loss': 1.9549524107975746,

'train_acc': 0.2572294776119403,

'valid_loss': 9.970132175880142,

'valid_acc': 0.09859572784810126},

{'epoch': 15.0,

'train_loss': 1.9429595882196162,

'train_acc': 0.2642257462686567,

'valid_loss': 10.950435590140428,

'valid_acc': 0.10294699367088607},

{'epoch': 16.0,

'train_loss': 1.9351988835121268,

'train_acc': 0.26690764925373134,

'valid_loss': 9.349645687054984,

'valid_acc': 0.1206487341772152},

{'epoch': 17.0,

'train_loss': 1.9280705238456157,

'train_acc': 0.27137193496801704,

'valid_loss': 9.406644797023338,

'valid_acc': 0.1015625},

{'epoch': 18.0,

'train_loss': 1.9176410601845681,

'train_acc': 0.2760361140724947,

'valid_loss': 9.823314811609968,

'valid_acc': 0.09859572784810126},

{'epoch': 19.0,

'train_loss': 1.9141617960004664,

'train_acc': 0.27585287846481876,

'valid_loss': 9.611549087717563,

'valid_acc': 0.1027492088607595},

{'epoch': 20.0,

'train_loss': 1.9062367258295576,

'train_acc': 0.2785014658848614,

'valid_loss': 10.421080770371836,

'valid_acc': 0.10403481012658228},

{'epoch': 21.0,

'train_loss': 1.902847127365405,

'train_acc': 0.28058368869936035,

'valid_loss': 10.30828007565269,

'valid_acc': 0.10472705696202532},

{'epoch': 22.0,

'train_loss': 1.8937929718733342,

'train_acc': 0.2863472814498934,

'valid_loss': 9.669761174841772,

'valid_acc': 0.10057357594936708},

{'epoch': 23.0,

'train_loss': 1.887595365804904,

'train_acc': 0.28851279317697226,

'valid_loss': 10.266508850870252,

'valid_acc': 0.09948575949367089},

{'epoch': 24.0,

'train_loss': 1.8848772841984276,

'train_acc': 0.28738006396588484,

'valid_loss': 9.961499177956883,

'valid_acc': 0.10067246835443038},

{'epoch': 25.0,

'train_loss': 1.8783976670775586,

'train_acc': 0.29037846481876334,

'valid_loss': 10.058255352551424,

'valid_acc': 0.10106803797468354}]
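The list-of-dictionaries layout can also be built directly from a dictionary of lists such as track_stats, without going through pandas. The sketch below is plain Python and uses a shortened, hypothetical track_stats_demo with three epochs taken from the log.

```python
# a shortened stand-in for track_stats (values rounded from the log)
track_stats_demo = {
    'epoch': [1, 2, 3],
    'train_loss': [2.213, 2.201, 2.193],
    'valid_loss': [11.462, 15.436, 17.744],
}

keys = list(track_stats_demo)
# zip(*values) walks the columns in lockstep, giving one tuple per epoch
records = [dict(zip(keys, values))
           for values in zip(*track_stats_demo.values())]

print(records)
```

One visible difference from the iterrows approach: here epoch stays an integer, whereas in the output above it appears as 1.0, 2.0, ... because each DataFrame row is converted to a single floating-point type.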

import hiplot as hip

hip.Experiment.from_iterable(data).display(force_full_width = True)

<hiplot.ipython.IPythonExperimentDisplayed at 0x2be3482c240>

From the above visualization, we can infer properties about our model's performance:

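- As epochs increase, train loss decreases (from 2.213 to 1.878)

- As train loss decreases, training accuracy increases (from 15.09% to 29.04%)

- Validation loss does not follow the train loss: it rises from 11.462 to 21.468 over the first six epochs, then falls and levels off at around 10

- Validation accuracy stays close to 10% throughout, which is chance level for 10 classes, so the gains on the training set are not carrying over to the validation set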
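One way to put the validation accuracy in context is to compare it with chance level. The plain-Python sketch below uses the per-epoch validation accuracies copied from the training log and assumes 10 equally likely classes, so guessing at random would be right about 10% of the time.

```python
# validation accuracy per epoch, in percent, copied from the training log
valid_acc = [9.38, 9.82, 9.46, 9.68, 9.47, 9.46, 9.54, 9.64, 10.23,
             9.63, 9.92, 10.27, 10.73, 9.86, 10.29, 12.06, 10.16, 9.86,
             10.27, 10.40, 10.47, 10.06, 9.95, 10.07, 10.11]

n_classes = 10
chance = 100.0 / n_classes          # accuracy of uniform random guessing

mean_acc = sum(valid_acc) / len(valid_acc)
best_acc = max(valid_acc)

print(f'chance: {chance:.2f}%  mean: {mean_acc:.2f}%  best: {best_acc:.2f}%')
```

The average over the 25 epochs sits within half a percentage point of chance level, while training accuracy climbs steadily over the same epochs.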

Comparing Different Architectures¶

While the above insights are useful, it would be even better to compare the performance of the same model under different hyperparameters.

Let us do this by testing four separate models with distinct hidden layer sizes:

- [32, 16, 10]

- [64, 32, 10]

- [128, 64, 10]

- [256, 128, 10]

We will compare these models by performing a 3-fold Cross-Validation (CV) on each of the models.

If you are unfamiliar with the concept, this page will get you up to speed.
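As a rough illustration of what a 3-fold split does, the plain-Python sketch below divides sample indices into k contiguous folds and uses each fold once for validation. It is a simplified, unshuffled version written for this tutorial; k_fold_indices is a hypothetical helper, and scikit-learn's own splitters (which, for classifiers, also keep the class proportions similar across folds) are what the grid search actually relies on.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, valid_idx) for k contiguous, unshuffled folds."""
    # spread the remainder over the first folds so sizes differ by at most 1
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        valid_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, valid_idx
        start += size

folds = list(k_fold_indices(10, 3))
for train_idx, valid_idx in folds:
    print('train:', train_idx, 'valid:', valid_idx)
```

Every sample is used for validation exactly once and for training in the other two folds, so each model is scored three times on data it did not train on.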

We could train all of these with the same approach as above, but that would be a little redundant.

Instead, we will use the skorch library to grid search our above models while performing 3-fold CV on each of them.

NOTE: skorch is a library that closely mimics the sklearn API. Go to link to learn more.

# concat training and testing data into two variables

X = torch.cat((train_mnist.data,eval_mnist.data),dim=0).view(70000,-1)

y = torch.cat((train_mnist.targets,eval_mnist.targets),dim=0).view(-1)

# Set up the equivalent hyperparameters as we had above

from skorch import NeuralNetClassifier

from torch import optim

net = NeuralNetClassifier(
    NeuralNet,
    max_epochs = 25,
    batch_size = 64,
    lr = .01,
    criterion = nn.CrossEntropyLoss,
    optimizer = optim.SGD,
    device = 'cuda',
    iterator_train__pin_memory = True)
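skorch uses a double-underscore prefix to forward a setting to one of its components: iterator_train__pin_memory goes to the training data loader as pin_memory, and module__num_units (used in the grid search) goes to the NeuralNet constructor as num_units. The toy helper below, route_kwargs, is hypothetical and is not skorch's implementation; it only illustrates the naming convention in plain Python.

```python
def route_kwargs(kwargs, prefix):
    """Collect 'prefix__name' entries and return them as {name: value}."""
    marker = prefix + '__'
    return {key[len(marker):]: value
            for key, value in kwargs.items()
            if key.startswith(marker)}

settings = {
    'lr': 0.01,
    'module__num_units': 32,
    'iterator_train__pin_memory': True,
}

module_kwargs = route_kwargs(settings, 'module')          # for the network
loader_kwargs = route_kwargs(settings, 'iterator_train')  # for the data loader

print(module_kwargs, loader_kwargs)
```

Settings without a prefix, such as lr, are left for the wrapper itself, which is why they do not show up in either dictionary.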

# select model parameters to GridSearch

from sklearn.model_selection import GridSearchCV

params = {
    'module__num_units': [32, 64, 128, 256]
}

# instantiate GridSearch object

gs = GridSearchCV(net, params, refit = False, cv = 3, scoring = 'accuracy')

# begin GridSearch

gs.fit(X.numpy(), y.numpy())

epoch train_loss valid_acc valid_loss dur

------- ------------ ----------- ------------ ------

1 6.0313 0.1159 2.4621 3.2270

2 2.3754 0.1151 2.3347 3.2200

3 2.2875 0.1480 2.2866 3.2057

4 2.2431 0.1554 2.2638 3.1750

5 2.2145 0.1590 2.2463 3.4643

6 2.1935 0.1616 2.2360 3.1843

7 2.1772 0.1651 2.2231 3.1004

8 2.1624 0.1703 2.2109 3.1288

9 2.1495 0.1732 2.1998 3.1001

10 2.1390 0.1752 2.1893 3.2172

11 2.1296 0.1766 2.1818 3.0924

12 2.1211 0.1777 2.1751 3.1028

13 2.1138 0.1802 2.1673 3.1447

14 2.1061 0.1820 2.1595 3.1331

15 2.0984 0.1835 2.1522 3.1075

16 2.0914 0.1849 2.1443 3.0951

17 2.0849 0.1873 2.1388 3.1732

18 2.0796 0.1888 2.1343 3.1020

19 2.0747 0.1898 2.1308 3.1627

20 2.0694 0.1911 2.1262 3.1237

21 2.0637 0.1927 2.1212 3.1661

22 2.0586 0.1941 2.1162 3.1610

23 2.0543 0.1951 2.1123 3.1485

24 2.0502 0.1957 2.1081 3.0900

25 2.0464 0.1972 2.1031 3.1708

epoch train_loss valid_acc valid_loss dur

------- ------------ ----------- ------------ ------

1 6.2649 0.1468 2.4181 3.0708

2 2.3269 0.1795 2.2928 3.2264

3 2.2052 0.1977 2.2165 3.1733

4 2.1408 0.2017 2.1661 3.1360

5 2.1033 0.2087 2.1416 3.1076

6 2.0769 0.2185 2.1183 3.0900

7 2.0547 0.2234 2.0922 3.1326

8 2.0324 0.2334 2.0697 3.1803

9 2.0117 0.2392 2.0451 3.1151

10 1.9922 0.2454 2.0325 3.1063

11 1.9746 0.2497 2.0143 3.0979

12 1.9547 0.2601 2.0030 3.1845

13 1.9377 0.2659 1.9857 3.1049

14 1.9168 0.2724 1.9702 3.1010

15 1.8998 0.2769 1.9564 3.2750

16 1.8830 0.2836 1.9422 3.1016

17 1.8655 0.2945 1.9220 3.1156

18 1.8453 0.2976 1.9007 3.3245

19 1.8273 0.3025 1.8821 3.6793

20 1.8080 0.3123 1.8702 3.1775

21 1.7820 0.3180 1.8286 3.0782

22 1.7508 0.3353 1.8118 3.4387

23 1.7330 0.3322 1.7928 3.1990

24 1.7213 0.3405 1.7778 3.1649

25 1.7055 0.3456 1.7642 3.1406

epoch train_loss valid_acc valid_loss dur

------- ------------ ----------- ------------ ------

1 5.4118 0.1090 2.4710 3.1103

2 2.3941 0.1106 2.3606 3.1842

3 2.3138 0.1130 2.3243 3.1000

4 2.2762 0.1135 2.3112 4.5064

5 2.2485 0.1132 2.3025 3.1910

6 2.2228 0.1123 2.2787 3.1757

7 2.1976 0.1110 2.2355 3.1540

8 2.1769 0.1551 2.2020 3.1475

9 2.1605 0.1610 2.1817 3.0838

10 2.1461 0.1669 2.1665 3.1847

11 2.1318 0.1749 2.1578 3.1159

12 2.1172 0.1789 2.1535 3.1120

13 2.1040 0.1081 2.1965 3.2120

14 2.0934 0.1103 2.2831 3.0426

15 2.0841 0.1092 2.3755 3.2069

16 2.0749 0.1104 2.4459 3.1143

17 2.0651 0.1118 2.5081 3.2975

18 2.0562 0.1140 2.5487 3.1790

19 2.0462 0.1160 2.5674 3.1864

20 2.0376 0.1203 2.5652 3.1576

21 2.0286 0.1231 2.5678 3.1079

22 2.0194 0.1262 2.5613 3.1552

23 2.0098 0.1304 2.5327 3.2216

24 2.0013 0.1321 2.5349 3.5602

25 1.9920 0.1370 2.5316 3.5596

epoch train_loss valid_acc valid_loss dur

------- ------------ ----------- ------------ ------

1 12.8740 0.2330 2.6193 3.5532

2 2.2984 0.2848 2.3126 3.3478

3 2.0660 0.3223 2.1439 3.1949

4 1.9223 0.3654 2.0218 3.2422

5 1.8145 0.3827 1.9347 3.2568

6 1.7285 0.4067 1.8718 3.2136

7 1.6592 0.4215 1.8292 3.6699

8 1.6045 0.4349 1.7680 3.3442

9 1.5626 0.4455 1.7280 3.2810

10 1.5211 0.4552 1.6984 3.2425

11 1.4897 0.4656 1.6773 3.3857

12 1.4599 0.4771 1.6636 3.3485

13 1.4363 0.4867 1.6603 3.2753

14 1.4142 0.4961 1.6668 3.2579

15 1.3893 0.5042 1.6495 3.3036

16 1.3709 0.5117 1.6337 3.2606

17 1.3556 0.5135 1.6128 3.3449

18 1.3344 0.5271 1.5824 3.2192

19 1.3140 0.5333 1.5707 3.3466

20 1.2934 0.5415 1.5506 3.2450

21 1.2762 0.5479 1.5291 4.5580

22 1.2565 0.5548 1.5318 3.2775

23 1.2412 0.5601 1.5142 3.3324

24 1.2291 0.5649 1.4551 3.4073

25 1.2094 0.5760 1.4994 3.3248

epoch train_loss valid_acc valid_loss dur

------- ------------ ----------- ------------ ------

1 9.7134 0.1684 2.4589 3.3987

2 2.3385 0.2003 2.2624 3.3538

3 2.1711 0.2216 2.1843 3.3970

4 2.0773 0.2380 2.1146 3.3179

5 2.0096 0.2713 2.1116 3.3912

6 1.9580 0.2746 2.0417 3.2955

7 1.9157 0.2853 2.0305 3.3566

8 1.8865 0.3001 2.0288 3.2896

9 1.8550 0.3074 2.0205 3.3772

10 1.8288 0.3081 1.9780 3.3894

11 1.8004 0.3143 1.9637 3.2344

12 1.7825 0.3207 1.9504 3.2982

13 1.7613 0.3226 1.9286 3.3302

14 1.7418 0.3297 1.9060 3.2993

15 1.7260 0.3340 1.8952 3.3793

16 1.7158 0.3372 1.8853 3.4484

17 1.7033 0.3397 1.8688 3.4182

18 1.6904 0.3467 1.8585 3.3645

19 1.6799 0.3474 1.8417 3.3835

20 1.6742 0.3493 1.8272 3.3876

21 1.6610 0.3528 1.8243 3.4297

22 1.6546 0.3548 1.8059 3.4539

23 1.6453 0.3560 1.7961 3.4870

24 1.6355 0.3599 1.7852 3.8322

25 1.6259 0.3632 1.7721 3.8154

epoch train_loss valid_acc valid_loss dur

------- ------------ ----------- ------------ ------

1 10.5853 0.1559 2.6480 3.5232

2 2.4561 0.1868 2.3201 3.7447

3 2.2017 0.2295 2.1812 3.4287

4 2.0820 0.2536 2.1004 3.4557

5 2.0094 0.2732 2.0602 3.5308

6 1.9513 0.2775 2.0520 3.7052

7 1.9088 0.2685 2.0615 3.6499

8 1.8706 0.2384 2.0730 3.5781

9 1.8377 0.2561 2.0714 3.3835

10 1.8115 0.3231 2.0191 3.3781

11 1.7805 0.2973 2.0390 3.4543

12 1.7544 0.3187 2.0388 3.4557

13 1.7284 0.3342 2.0536 3.5653

14 1.7053 0.3424 2.0395 3.4875

15 1.6857 0.3383 2.0462 3.5790

16 1.6648 0.3339 2.0335 3.5504

17 1.6485 0.3457 2.0222 3.5547

18 1.6289 0.3902 1.9773 3.5217

19 1.6097 0.3952 1.9769 3.5589

20 1.5919 0.3217 2.0439 3.5298

21 1.5756 0.3212 2.1214 3.5782

22 1.5620 0.4030 2.0029 3.5671

23 1.5449 0.4100 1.9985 3.6093

24 1.5272 0.4090 2.0110 3.6999

25 1.5099 0.4133 2.0287 3.6705

epoch train_loss valid_acc valid_loss dur

------- ------------ ----------- ------------ ------

1 24.5777 0.1546 2.7244 3.6508

2 2.4773 0.1703 2.4349 3.7413

3 2.2761 0.1775 2.3289 3.6269

4 2.1830 0.1913 2.2611 3.6891

5 2.1360 0.1952 2.2383 3.6799

6 2.0932 0.2126 2.1830 3.6433

7 2.0652 0.2218 2.1646 3.7296

8 2.0379 0.2275 2.1526 3.6867

9 2.0162 0.2375 2.1501 3.6921

10 1.9964 0.2426 2.1348 3.6993

11 1.9730 0.2522 2.1095 3.7924

12 1.9456 0.2602 2.0970 3.8289

13 1.9262 0.2737 2.0957 3.7655

14 1.9007 0.2820 2.0893 3.9689

15 1.8789 0.2905 2.0830 3.7200

16 1.8519 0.3007 2.0710 3.7884

17 1.8194 0.3145 2.0491 3.7929

18 1.7993 0.3238 2.0404 3.7560

19 1.7783 0.3361 2.0230 3.8258

20 1.7539 0.3470 2.0271 3.8357

21 1.7360 0.3573 2.0182 3.7939

22 1.7207 0.3617 1.9896 3.8725

23 1.7002 0.3628 1.9690 3.8958

24 1.6839 0.3695 1.9378 3.8442

25 1.6739 0.3812 1.9312 3.9601

epoch train_loss valid_acc valid_loss dur

------- ------------ ----------- ------------ ------

1 24.2578 0.2147 2.7902 3.9580

2 2.3812 0.2403 2.4608 3.9551

3 2.1229 0.2926 2.2770 3.9561

4 1.9808 0.3196 2.1653 3.9770

5 1.8897 0.3444 2.1189 4.0030

6 1.8246 0.3563 2.0914 3.8937

7 1.7780 0.3743 2.0562 3.9160

8 1.7338 0.3779 2.0037 3.9430

9 1.6987 0.3925 1.9865 3.9880

10 1.6681 0.4072 2.0117 4.0010

11 1.6310 0.4200 1.9684 3.9679

12 1.6010 0.4269 1.9341 3.9969

13 1.5696 0.4393 1.9273 4.0714

14 1.5350 0.4518 1.9082 4.0647

15 1.5059 0.4613 1.9106 4.0066

16 1.4712 0.4762 1.8752 4.0235

17 1.4504 0.4870 1.8314 4.1746

18 1.4190 0.4959 1.8274 4.1527

19 1.3933 0.5058 1.7948 4.0173

20 1.3770 0.5027 1.8043 4.1247

21 1.3527 0.5124 1.7893 4.0905

22 1.3301 0.5180 1.7791 4.0777

23 1.3122 0.5254 1.7453 4.3500

24 1.2933 0.5340 1.9630 4.1492

25 1.2762 0.5384 1.6954 4.1210

epoch train_loss valid_acc valid_loss dur

------- ------------ ----------- ------------ ------

1 26.9580 0.1208 2.6169 4.0477

2 2.4823 0.1200 2.4307 4.2059

3 2.3282 0.1428 2.3563 4.1193

4 2.2565 0.1524 2.3155 4.1138

5 2.2001 0.1741 2.2734 4.1912

6 2.1535 0.1950 2.2287 3.9938

7 2.1076 0.2049 2.2518 4.1390

8 2.0691 0.2181 2.1822 4.0480

9 2.0253 0.2407 2.1175 4.0514

10 1.9832 0.2627 2.1390 3.9828

11 1.9417 0.2715 2.0781 3.9763

12 1.9016 0.2851 2.0370 4.0156

13 1.8707 0.2945 2.0299 4.0763

14 1.8421 0.3089 2.0177 3.9161

15 1.8153 0.3164 1.9760 3.8959

16 1.7891 0.3295 1.9760 4.0126

17 1.7632 0.3359 1.9757 4.1115

18 1.7404 0.3406 1.9256 4.0335

19 1.7311 0.3437 1.9383 3.9499

20 1.7125 0.3520 1.8989 4.2886

21 1.6965 0.3540 1.8558 4.0001

22 1.6729 0.3610 1.9086 3.9792

23 1.6736 0.3603 1.8911 4.0633

24 1.6585 0.3674 1.8734 4.0067

25 1.6434 0.3691 1.7974 4.0522

epoch train_loss valid_acc valid_loss dur

------- ------------ ----------- ------------ ------

1 62.0209 0.2248 3.5409 4.0404

2 2.6936 0.2210 2.9297 4.1763

3 2.2846 0.2505 2.7578 4.0258

4 2.0930 0.2741 2.6737 4.4111

5 1.9774 0.2941 2.5916 4.1034

6 1.9114 0.3036 2.5505 4.1590

7 1.8743 0.3039 2.5382 4.0711

8 1.8394 0.3329 2.5047 4.1355

9 1.8018 0.3277 2.5169 4.0776

10 1.7703 0.3433 2.4469 4.0428

11 1.7354 0.3657 2.4254 4.1503

12 1.7022 0.3875 2.4440 4.0773

13 1.6766 0.3974 2.4036 4.0140

14 1.6402 0.4011 2.4182 4.0623

15 1.6090 0.4092 2.4008 4.1168

16 1.5899 0.4289 2.3730 4.0669

17 1.5579 0.4415 2.3985 4.0287

18 1.5242 0.4336 2.3863 4.0766

19 1.5163 0.4422 2.3718 4.1321

20 1.4861 0.4458 2.3107 4.0481

21 1.4726 0.4659 2.3541 4.0567

22 1.4527 0.4776 2.3885 4.4304

23 1.4196 0.4844 2.3437 4.0779

24 1.4033 0.4730 2.3760 4.0675

25 1.3808 0.4798 2.3722 4.0382

epoch train_loss valid_acc valid_loss dur

------- ------------ ----------- ------------ ------

1 61.5609 0.2883 3.4780 4.0464

2 2.6012 0.3298 2.6968 4.0870

3 2.0821 0.3755 2.4749 4.2013

4 1.8505 0.4083 2.3529 4.0950

5 1.7028 0.4471 2.2966 4.1124

6 1.6135 0.4717 2.2474 4.0860

7 1.5415 0.4787 2.1817 4.0576

8 1.4885 0.4977 2.1969 4.0944

9 1.4297 0.5162 2.1642 4.1369

10 1.3875 0.5295 2.1125 4.0733

11 1.3501 0.5356 2.0757 4.0947

12 1.3136 0.5530 2.0944 4.0554

13 1.2853 0.5563 2.0900 4.0530

14 1.2729 0.5596 2.0249 4.0940

15 1.2448 0.5669 2.0355 4.0810

16 1.2221 0.5789 2.0534 4.0830

17 1.2010 0.5840 2.0750 4.8205

18 1.1843 0.5922 2.0150 4.7400

19 1.1604 0.5977 1.9433 4.6402

20 1.1407 0.6135 2.1202 4.8063

21 1.1190 0.6113 2.0595 4.0641

22 1.1022 0.6174 2.0286 4.1100

23 1.0758 0.6256 1.9962 4.0620

24 1.0701 0.6329 1.9976 4.1725

25 1.0517 0.6386 2.0813 4.0170

epoch train_loss valid_acc valid_loss dur

------- ------------ ----------- ------------ ------

1 63.3343 0.2328 3.8092 4.0896

2 2.7525 0.2478 3.0218 4.0329

3 2.2251 0.2839 2.9915 4.1258

4 1.9697 0.3135 2.9727 4.0695

5 1.8267 0.3382 2.6738 4.0696

6 1.7207 0.3611 2.3471 4.0802

7 1.6479 0.4243 2.1103 4.0532

8 1.5689 0.3893 2.4210 4.0428

9 1.5572 0.4438 2.0373 4.0531

10 1.4942 0.4062 2.3396 4.1457

11 1.5167 0.4694 1.9836 4.0438

12 1.4447 0.4810 1.9560 4.0287

13 1.4173 0.4839 1.9475 4.0921

14 1.3916 0.4667 2.0120 4.1334

15 1.3971 0.5029 1.9167 4.0298

16 1.3586 0.5079 1.9021 4.0661

17 1.3420 0.5171 1.8798 4.1000

18 1.3250 0.5220 1.8890 4.0520

19 1.3085 0.5272 1.8832 4.0202

20 1.3019 0.5299 1.8657 3.9432

21 1.2764 0.5341 1.8631 4.0104

22 1.2680 0.5353 1.8669 4.0785

23 1.2543 0.5387 1.8576 4.0802

24 1.2406 0.5448 1.8436 4.5671

25 1.2357 0.5476 1.8401 4.1059

GridSearchCV(cv=3, error_score='raise-deprecating',

estimator=<class 'skorch.classifier.NeuralNetClassifier'>[uninitialized](

module=<class '__main__.NeuralNet'>,

),

iid='warn', n_jobs=None,

param_grid={'module__num_units': [32, 64, 128, 256]},

pre_dispatch='2*n_jobs', refit=False, return_train_score=False,

scoring='accuracy', verbose=0)
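The number of training runs follows directly from the grid and the number of folds. The plain-Python sketch below enumerates the combinations the way a grid search conceptually does; it is not scikit-learn's code, and the variable names are illustrative.

```python
from itertools import product

param_grid = {'module__num_units': [32, 64, 128, 256]}
n_folds = 3

names = list(param_grid)
# every combination of parameter values is one candidate model
candidates = [dict(zip(names, combo))
              for combo in product(*param_grid.values())]

# each candidate is trained once per fold
fits = [(candidate, fold)
        for candidate in candidates
        for fold in range(n_folds)]

print(len(candidates), 'candidates x', n_folds, 'folds =', len(fits), 'fits')
```

Four candidates times three folds gives twelve fits, which matches the twelve training logs printed above; with refit = False, no additional final model is trained on the full data.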

# save results

torch.save(gs.cv_results_,'gs_linear_results.pt')

# data = torch.load('cv.pt')

results = pd.DataFrame(gs.cv_results_)

results.head()

| | mean_fit_time | std_fit_time | mean_score_time | std_score_time | param_module__num_units | params | split0_test_score | split1_test_score | split2_test_score | mean_test_score | std_test_score | rank_test_score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 85.332950 | 0.884418 | 0.899595 | 0.062691 | 32 | {'module__num_units': 32} | 0.198020 | 0.352334 | 0.136085 | 0.228814 | 0.090927 | 4 |
| 1 | 91.577949 | 2.027141 | 1.021033 | 0.131573 | 64 | {'module__num_units': 64} | 0.586904 | 0.363177 | 0.424371 | 0.458157 | 0.094411 | 2 |
| 2 | 104.849806 | 3.395171 | 0.999793 | 0.010569 | 128 | {'module__num_units': 128} | 0.370029 | 0.535465 | 0.370366 | 0.425286 | 0.077908 | 3 |
| 3 | 109.364654 | 1.337694 | 1.046131 | 0.007170 | 256 | {'module__num_units': 256} | 0.481402 | 0.620709 | 0.549098 | 0.550400 | 0.056881 | 1 |

import pandas as pd

# extract mean test scores for each fold, average overall score, and rank

results = pd.DataFrame(gs.cv_results_).iloc[:,[4,6,7,8,9,11]]

results.head()

| | param_module__num_units | split0_test_score | split1_test_score | split2_test_score | mean_test_score | rank_test_score |
|---|---|---|---|---|---|---|
| 0 | 32 | 0.198020 | 0.352334 | 0.136085 | 0.228814 | 4 |
| 1 | 64 | 0.586904 | 0.363177 | 0.424371 | 0.458157 | 2 |
| 2 | 128 | 0.370029 | 0.535465 | 0.370366 | 0.425286 | 3 |
| 3 | 256 | 0.481402 | 0.620709 | 0.549098 | 0.550400 | 1 |

# format data to HiPlot

import hiplot as hip

data = []

for row in results.iterrows():
    data.append(row[1].to_dict())

hip.Experiment.from_iterable(data).display()

<hiplot.ipython.IPythonExperimentDisplayed at 0x2be34806c50>

Now we can infer some unique properties about the performance of each architecture:

- [32, 16, 10]: performed the worst on every fold (between 13.6% and 35.2% accuracy). This suggests that the architecture did not have enough parameters to decode the input. Rank 4.

- [64, 32, 10]: performed the best on the 1st fold, with an accuracy of about 59%. However, on the next fold it dropped to about 36%, barely ahead of the smallest model! This model suffers from high volatility (the largest standard deviation across folds, 0.094). Rank 2.

- [128, 64, 10]: scored about 37% on two folds and about 54% on the other, so it is somewhat more consistent than the two smaller models, but its mean accuracy (42.5%) is lower than that of [64, 32, 10]. Rank 3.

- [256, 128, 10]: on average, this model performs the best (55.0%) and is the most stable (the smallest standard deviation across folds, 0.057). Rank 1.

From the above, we see that doubling the hidden units of each model does not necessarily lead to better performance: [128, 64, 10] scores lower on average than [64, 32, 10]. However, once the first hidden layer has 256 units, our model becomes noticeably better (and more stable) at encoding our inputs.
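The ranking and the stability comparison can be recomputed from the per-fold scores alone. The plain-Python sketch below copies the fold accuracies from the results table and summarises each architecture by its mean and standard deviation; the population standard deviation (pstdev) is an assumption on my part, chosen because it appears to reproduce the std_test_score column.

```python
from statistics import mean, pstdev

# per-fold accuracies copied from the grid-search results table
fold_scores = {
    32: [0.198020, 0.352334, 0.136085],
    64: [0.586904, 0.363177, 0.424371],
    128: [0.370029, 0.535465, 0.370366],
    256: [0.481402, 0.620709, 0.549098],
}

# (mean, standard deviation) across the three folds for each architecture
summary = {units: (mean(s), pstdev(s)) for units, s in fold_scores.items()}

# highest mean accuracy first
ranking = sorted(summary, key=lambda units: summary[units][0], reverse=True)
# smallest spread across folds
most_stable = min(summary, key=lambda units: summary[units][1])

for units in ranking:
    m, s = summary[units]
    print(f'{units:>3} units: mean={m:.3f}, std={s:.3f}')
print('most stable:', most_stable)
```

With only three folds per architecture these standard deviations are rough estimates, so small differences in stability should be read with some caution.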

Conclusion¶

The linear operation is a fundamental concept for anyone diving into the world of DL. Concepts such as:

- forward/backward pass

- Training

- Visualizing

will help you branch out to more complex operations while giving you a chance to compare your previous knowledge of architectures with the new!

All in all, thank you for taking your time to learn from this tutorial!

Where to Next?¶

Gradient Descent Tutorial: https://nbviewer.jupyter.org/github/Erick7451/DL-with-PyTorch/blob/master/Jupyter_Notebooks/Stochastic%20Gradient%20Descent.ipynb