Lecture 2: Floating point errors, vector norms¶

Syllabus¶

Week 1: Intro week, floating point, vector norms, matrix multiplication

Recap of the previous lecture¶

- Basic course structure.

- Some Python intro (more today by Evgeny Frolov)

Today¶

- Floating point arithmetic; concept of backward and forward stability of algorithms

- How to measure accuracy: vector norms

Representation of numbers¶

The numbers in computer memory are typically represented as floating point numbers (floating means instead of fixed point).

A floating point number is represented as

$$\textrm{number} = \textrm{significand} \times \textrm{base}^{\textrm{exponent}},$$where $\textrm{significand}$ is integer, $\textrm{base}$ is positive integer and $\textrm{exponent}$ is integer (can be negative), i.e.

$$ 1.2 = 12 \cdot 10^{-1}.$$IEEE 754¶

In modern computers, the floating point representation is controlled by IEEE 754 standard which was published in 1985 and before that point different computers behaved differently with floating point numbers.

IEEE 754 has:

- Floating point representation (as described above), $(-1)^s \times c \times b^q$.

- Two infinities, $+\infty$ and $-\infty$

- Two kinds of NaN: a quiet NaN (qNaN) and signalling NaN (sNaN)

- Rules for rounding

$ 0 \leq c \leq b^p - 1, \quad 1 - emax \leq q + p - 1 \leq emax$

The two most common format, single & double¶

The two most common format, called binary32 and binary64 (called also single and double formats).

| Name | Common Name | Base | Digits | Emin | Emax |

|---|---|---|---|---|---|

| binary32 | single precision | 2 | 11 | -14 | + 15 |

| binary64 | double precision | 2 | 24 | -126 | + 127 |

Accuracy and memory¶

The relative accuracy of single precision is $10^{-7}$ - $10^{-8}$,

while for double precision is $10^{-14}$-$10^{-16}$.

Crucial note 1: A float32 takes 4 bytes, float64, or double precision, takes 8 bytes.

Crucial note 2: These are the only two floating point-types supported in hardware.

Crucial note 3: You should use double precision in 99% cases.

Can you guess how much for what (mantissa, base...)?

Some demo...

import numpy as np

import random

c = random.random()

c = np.float32(c)

a = np.float32(9)

b = np.float32(c / a)

print('{0:10.16f}'.format(b))

print a * b - c

0.0860972627997398 0.0

a = np.array(1.585858585887575775757575e-5, dtype=np.float)

b = np.sqrt(a)

print b ** 2 - a

0.0

a = np.array(1e-6, dtype=np.float)

b = np.log(a)

print np.exp(b) - a

4.23516473627e-22

Loss of significance¶

Many operations lead to the loss of digits [loss of significance] (https://en.wikipedia.org/wiki/Loss_of_significance)

For example, it is a bad idea to subtract two big numbers that are close, the difference will have fewer correct digits.

Summation algorithm¶

However, the rounding errors can depend on the algorithm.

Consider the simplest problem: given $n$ numbers floating point numbers $x_1, \ldots, x_n$

compute their sum

$$S = \sum_{i=1}^n x_i = x_1 + \ldots + x_n.$$The simplest algorithm is to add one-by-one.

What is the actual error for such algorithm?

Naive algorithm¶

Naive algorithm adds numbers one-by-one,

$$y_1 = x_1, \quad y_2 = y_1 + x_2, \quad y_3 = y_2 + x_3, \ldots.$$The worst-case error is then proportional to $\mathcal{O}(n)$, while mean-squared error is $\mathcal{O}(\sqrt{n})$.

The Kahan algorithm gives the worst-case error bound $\mathcal{O}(1)$ (i.e., independent of $n$).

Can you find the $\mathcal{O}(\log n)$ algorithm?

Kahan summation¶

The following algorithm gives $2 \varepsilon + \mathcal{O}(n \varepsilon^2)$ error, where $\varepsilon$ is the machine precision.

s = 0

c = 0

for i in range(len(x)):

y = x[i] - c

t = s + y

c = (t - s) - y

s = t

n = 10 ** 6

x = 100 * np.random.randn(n)

x16 = np.array(x, dtype=np.float32)

x = np.array(x16, dtype=np.float64)

true_sum = sum(x)

sum_16 = sum(x16)

from numba import jit

@jit

def dumb_sum(x):

s = 0

for i in range(len(x)):

s = s + x[i]

return s

@jit

def kahan_sum(x):

s = 0.0

c = 0.0

for i in range(len(x)):

y = x[i] - c

t = s + y

c = (t - s) - y

s = t

return s

k_sum = kahan_sum(x16)

d_sum = dumb_sum(x16)

print('Error in sum: {0:3.1e}, kahan: {1:3.1e}, dump_sum: {2:3.1e} '.format(sum_16 - true_sum, k_sum - true_sum, d_sum - true_sum))

Error in sum: 0.0e+00, kahan: 0.0e+00, dump_sum: 0.0e+00

More complicated example¶

import math

math.fsum([1, 1e20, 1, -1e20] * 10000)

Vectors¶

In NLA we typically work not with numbers but with vectors.

Recall that a vector is a 1D array with $n$ numbers. Typically, it is considered as an $n \times 1$ matrix (column vector).

Vector norm¶

Vectors typically provide an (approximate) description of a physical (or some other) object. One of the main question is how accurate the approximation is (1%, 10%). What is an acceptable representation, of course, depends on the particular applications. For example:

- In partial differential equations accuracies $10^{-5} - 10^{-10}$ are the typical case

- In data mining sometimes an error of $80\%$ is ok, since the interesting signal is corrupted by a huge noise.

Distances and norms¶

Norm is a qualitative measure of smallness of a vector and is typically denoted as $\Vert x \Vert$ The norm should satisfy certain properties:

- $\Vert \alpha x \Vert = |\alpha| \Vert x \Vert$,

- $\Vert x + y \Vert \leq \Vert x \Vert + \Vert y \Vert$ (triangle inequality),

- If $\Vert x \Vert = 0$ then $x = 0$.

The distance between two vectors is then defined as $$ d(x, y) = \Vert x - y \Vert. $$

Standard norms¶

The most well-known and widely used norm is euclidean norm: $$\Vert x \Vert_2 = \sqrt{\sum_{i=1}^n |x_i|^2},$$ which corresponds to the distance in our real life (the vectors might have complex elements, thus is the modulus here).

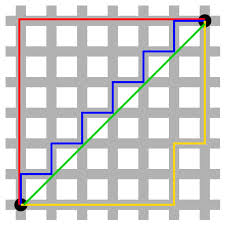

$p$-norm¶

Euclidean norm, or $2$-norm, is a subclass of an important class of $p$-norms: $$ \Vert x \Vert_p = \Big(\sum_{i=1}^n |x_i|^p\Big)^{1/p}. $$ There are two very important special cases:

- Infinity norm, or Chebyshev norm which is defined as the maximal element: $\Vert x \Vert_{\infty} = \max_i | x_i|$

- $L_1$ norm (or Manhattan distance) which is defined as the sum of modules of the elements of $x$: $\Vert x \Vert_1 = \sum_i |x_i|$

We will give examples where Manhattan is very important: it all relates to the compressed sensing methods that emerged in the mid-00s as one of the most popular research topics.

Equivalence of the norms¶

All norms are equivalent in the sense that

$$

C_1 \Vert x \Vert_* \leq \Vert x \Vert_{**} \leq C_2 \Vert x \Vert_*

$$

for some constants $C_1(n), C_2(n)$, $x \in \mathbb{R}^n$ for any pairs of norms $\Vert \cdot \Vert_*$ and $\Vert \cdot \Vert_{**}$. The equivalence of the norms basically means that if the vector is small in one norm, it is small in another norm. However, the constants can be large.

Computing norms in Python¶

The numpy package has all you need for computing norms (np.linalg.norm function)

import numpy as np

n = 100

a = np.ones(n)

b = a + 1e-3 * np.random.randn(n)

print 'Relative error:', np.linalg.norm(a - b, np.inf) / np.linalg.norm(b, np.inf)

Relative error: 0.00299119819916

Unit disks in different norms¶

A unit disk is a set of point such that $\Vert x \Vert \leq 1$. For the Frobenius norm is a disk; for other norms the "disks" look different.

%matplotlib inline

import seaborn as sns

import matplotlib.pyplot as plt

import numpy as np

p = 4 #Which norm do we use

M = 40000 #Number of sampling points

a = np.random.randn(M, 2)

b = []

for i in xrange(M):

if np.linalg.norm(a[i, :], 1) <= 1:

b.append(a[i, :])

b = np.array(b)

plt.fill(b[:, 0], b[:, 1])

plt.axis('equal')

(-1.0, 1.0, -1.0, 1.0)

Why $L_1$-norm can be important¶

$L_1$ norm, as it was discovered quite recently, plays an important role in compressed sensing. The simplest formulation is as follows:

- You have some observations $f$

- You have a linear model $Ax = f$, where $A$ is an $n \times m$ matrix, $A$ is known

- The number of equations, $n$ is less than the number of unknowns, $m$

The question: can we find the solution?

The solution is obviously non-unique, so the natural approach is to find the solution that is minimal in the certain sense: $$ \Vert x \Vert \rightarrow \min, \quad \mbox{subject to } Ax = f$$

Typical choice of $\Vert x \Vert = \Vert x \Vert_2$ leads to the linear least squares problem (and has been used for ages).

The choice $\Vert x \Vert = \Vert x \Vert_1$ leads to the compressed sensing and what happens, it typically yields the sparsest solution.

A short demo

What is a stable algorithm?¶

And we finalize the lecture by the concept of stability.

Let $x$ be an object (for example, a vector). Let $f(x)$ be the function (functional) you want to evaluate.

You also have a numerical algorithm alg(x) that actually computes approximation to $f(x)$.

The algorithm is called forward stable, if $$\Vert alg(x) - f(x) \Vert \leq \varepsilon $$

The algorithm is called backward stable, if for any $x$ there is a close vector $x + \delta x$ such that

$$alg(x) = f(x + \delta x)$$and $\Vert \delta x \Vert$ is small.

Classical example¶

A classical example is the solution of linear systems of equations using LU-factorizations

We consider the Hilbert matrix with the elements

$$a_{ij} = 1/(i + j + 1), \quad i = 0, \ldots, n-1, \quad j = 0, \ldots n-1.$$And consider a linear system

$$Ax = f.$$(We will look into matrices in more details in the next lecture, and for linear systems in the upcoming weeks, but now you actually see the linear system)

import numpy as np

n = 20

a = [[1.0/(i + j + 1) for i in range(n)] for j in range(n)] #Hil

a = np.array(a)

rhs = np.random.rand(n)

sol = np.linalg.solve(a, rhs)

print np.linalg.norm(a.dot(sol) - rhs) #Ax - y

#print sol

2.08661626788

Take home message¶

- Floating point (double, single, number of bytes), rounding error

- Norms are measures of smallness, used to compute the accuracy

- $1$, $p$ and Euclidean norms

- $L_1$ is used in compressed sensing as a surrogate for sparsity (later lectures)

- Forward/backward error (and stability of algorithms) (later lectures)

Next lecture¶

- Matrices

- Matrix multiplication

- Matrix norms, operator norms

- unitary matrices, unitary invariant norms

- Concept of block algorithms for NLA: why and how.

- Complexity of matrix multiplication

Questions?¶

from IPython.core.display import HTML

def css_styling():

styles = open("./styles/custom.css", "r").read()

return HTML(styles)

css_styling()