#!/usr/bin/env python

# coding: utf-8

# Rhythm Patterns Music Features

#

# Extraction and Application Tutorial

#

# http://www.ifs.tuwien.ac.at/mir

#

# Alexander Schindler and Thomas Lidy

# Institute of Software Technology and Interactive Systems

# Vienna University of Technology

# http://www.ifs.tuwien.ac.at/~schindler

#

#

# ## NOTE: This Tutorial is slightly unfinished / not fully tested.

# ## Table of Contents

# 1. Requirements

# 2. Audio Processing

# 3. Audio Feature Extraction

# 4. Application Scenarios

# 4.1. Finding Similar Sounding Songs

#

#

# # 1. Requirements

# ### RP Extract Library

#

# This is the main library for rhythmic and timbral audio feature analysis:

#

# - RP_extract Rhythm Patterns Audio Feature Extraction Library (includes Wavio for reading wav files (incl. 24 bit))

#

#

# download ZIP or check out from GitHub:

# In[ ]:

# in Terminal

git clone https://github.com/tuwien-musicir/rp_extract.git

# ### Python Libraries

# If not already included in your Python installation,

# please install these Python libraries using pip or easy_install:

#

#

# - Numpy: the fundamental package for scientific computing with Python. It implements a wide range of fast and powerful algebraic functions.

# - Scipy: Scientific Python library

#

#

#

# They can usually be installed with the pip installer on the command line:

# In[ ]:

# in Terminal

sudo pip install numpy scipy

# ### Additional Libraries

#

# These libraries are used in the later tutorial steps, but not necessarily needed if you want to use the RP_extract library alone:

#

#

# - mir_utils: these are additional functions used for the Soundcloud Demo data set in the tutorial below

# - unicsv: used in rp_extract_files.py for batch iteration over many wav or mp3 files, and storing features in CSV (only needed when you want to do batch feature extraction to CSV)

# - sklearn: Scikit-Learn machine learning package - used in later tutorial steps for finding similar songs and/or using machine learning / classification

#

# In[ ]:

# in Terminal

git clone https://github.com/tuwien-musicir/mir_utils.git

sudo pip install unicsv scikit-learn

# ### MP3 Decoder

# If you want to use MP3 files as input, you need to have one of the following MP3 decoders installed in your system:

#

#

# - Windows: FFmpeg (ffmpeg.exe is included in the RP_extract library on GitHub above, nothing to install)

# - Mac: Lame for Mac or FFmpeg for Mac

# - Linux: please install mpg123, lame or ffmpeg from your Software Install Center or Package Repository

#

#

# Note: If the MP3 decoder is not installed in a location that the operating system can find, add the directory containing the decoder binary to your system PATH so that Python can call it:

# In[12]:

import os

path = '/path/to/ffmpeg/'

os.environ['PATH'] += os.pathsep + path
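# To check whether a decoder is actually reachable after adjusting the PATH, a small lookup such as the following can help. This is a minimal sketch, assuming Python 3.3 or newer (for `shutil.which`); the helper name `find_mp3_decoder` is hypothetical and not part of RP_extract, and the candidate names are simply the decoders listed above.

```python
import shutil

def find_mp3_decoder(candidates=("ffmpeg", "mpg123", "lame")):
    """Return (name, full_path) of the first decoder found on PATH, or None."""
    for name in candidates:
        location = shutil.which(name)  # None if the binary is not on PATH
        if location is not None:
            return name, location
    return None

decoder = find_mp3_decoder()
if decoder is None:
    print("No MP3 decoder found on PATH; reading MP3 files will likely fail.")
else:
    print("Found MP3 decoder %s at %s" % decoder)
```

# If nothing is found, extend `os.environ['PATH']` as shown above and run the check again.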

# ### Import + Test your Environment

# If you have installed all required libraries, the following imports should run without errors.

# In[1]:

get_ipython().run_line_magic('pylab', 'inline')

import warnings

warnings.filterwarnings('ignore')

get_ipython().run_line_magic('load_ext', 'autoreload')

get_ipython().run_line_magic('autoreload', '2')

# numerical processing and scientific libraries

import numpy as np

import pandas as pd

# plotting

import matplotlib.pyplot as plt

# Rhythm Pattern Audio Extraction Library

## edit the path here where you checked out and stored the rp_extract package

#import sys

#sys.path.append("./rp_extract")

from rp_plot import *

from rp_extract import rp_extract

# reading wav and mp3 files

from audiofile_read import *

# misc

from urllib import urlopen

import urllib2

import gzip

import StringIO

# # 2. Audio Processing

# Feature Extraction is the core of content-based description of audio files. With feature extraction from audio, a computer is able to recognize the content of a piece of music without the need of annotated labels such as artist, song title or genre. This is the essential basis for information retrieval tasks, such as similarity based searches (query-by-example, query-by-humming, etc.), automatic classification into categories, or automatic organization and clustering of music archives.

#

# Content-based description requires the development of feature extraction techniques that analyze the acoustic characteristics of the signal. Features extracted from the audio signal are intended to describe the stylistic content of the music, e.g. beat, presence of voice, timbre, etc.

#

# We use methods from digital signal processing and consider psycho-acoustic models in order to extract suitable semantic information from music. We developed various feature sets, which are appropriate for different tasks.

# ## Load Audio Files

#

# ### Load audio data from wav or mp3 file

#

# We provide a library (audiofile_read.py) that is capable of reading WAV and MP3 files (MP3 through an external decoder, see Installation Requirements above)

#

# In[56]:

# provide/adjust the path to your wav or mp3 file

audiofile = "music/myaudio.wav"

#audiofile = "Acrassicauda_-_02_-_Garden_Of_Stones.wav"

samplerate, samplewidth, wavedata = audiofile_read(audiofile)

# ### Normalization

#

# Usually, an audio file stores integer values for the samples. For audio processing, however, we need float values, which is why the audiofile_read library already converts the input data to float values in the range (-1, 1).

#

# This is taken care of by audiofile_read. In case you don't want to normalize, use this line instead of the one above:

#

# ONLY use this if you DON'T want to normalize. RP_extract NEEDS normalization:

#

# samplerate, samplewidth, wavedata = audiofile_read(audiofile, normalize=False)
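# The idea behind this normalization can be sketched as follows. This is an illustration under assumptions, not the actual code of audiofile_read: the function name `normalize_pcm` is hypothetical, and signed integer PCM samples are assumed (8-bit WAV data is usually unsigned and would need an offset first).

```python
import numpy as np

def normalize_pcm(samples, samplewidth):
    """Scale signed integer PCM samples to floats in [-1, 1).

    samplewidth is the number of bytes per sample (2 for 16 bit).
    """
    max_abs = float(2 ** (8 * samplewidth - 1))   # 32768 for 16 bit
    return samples.astype(np.float64) / max_abs

# two stereo frames of 16-bit audio (rows = samples, columns = channels)
pcm = np.array([[-32768, 0],
                [16384, 32767]], dtype=np.int16)
normalized = normalize_pcm(pcm, samplewidth=2)
print(normalized)
```

# The most negative integer maps to exactly -1.0, while the most positive one stays just below 1.0.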

# ### Audio Information

#

# Let's print some information about the audio file just read:

# In[57]:

nsamples = wavedata.shape[0]

nchannels = wavedata.shape[1]

print("Successfully read audio file: %s" % audiofile)

print("%d Hz, %d bit, %d channel(s), %d samples" % (samplerate, samplewidth * 8, nchannels, nsamples))

# ### Plot Wave form

# We use this to check whether the WAV or MP3 file has been loaded correctly.

# In[58]:

max_samples_plot = 150000 # limit number of samples to plot, to avoid graphical overflow

if nsamples < max_samples_plot:
    max_samples_plot = nsamples

plot_waveform(wavedata[0:max_samples_plot], 16, 5);

# ### Audio Pre-processing

# For audio processing and feature extraction, we use a single channel only.

#

# Therefore in case we have a stereo signal, we combine the separate channels:

# In[59]:

# combine the channels by calculating their arithmetic mean

wavedata_mono = np.mean(wavedata, axis=1)

# Below is an example waveform of the mono channel obtained by combining the stereo channels with the arithmetic mean:

# In[60]:

plot_waveform(wavedata_mono[0:max_samples_plot], 16, 3)
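# The mixdown above assumes a two-dimensional array (samples x channels). A slightly more defensive variant, sketched here with a hypothetical helper `to_mono`, also accepts data that is already mono (one-dimensional):

```python
import numpy as np

def to_mono(wavedata):
    """Average all channels; return 1-D input unchanged."""
    wavedata = np.asarray(wavedata, dtype=np.float64)
    if wavedata.ndim == 1:          # already a single channel
        return wavedata
    return wavedata.mean(axis=1)    # arithmetic mean over the channel axis

stereo = np.array([[0.5, -0.5],
                   [1.0,  0.0],
                   [0.2,  0.4]])
mono = to_mono(stereo)
print(mono.shape)   # (3,)
```

# Samples that are equal in magnitude but opposite in sign across channels cancel out, as in the first row of this example.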

# In[61]:

plotstft(wavedata_mono, samplerate, binsize=512, ignore=True);

# # 3. Audio Feature Extraction

# ## Rhythm Patterns

#  #

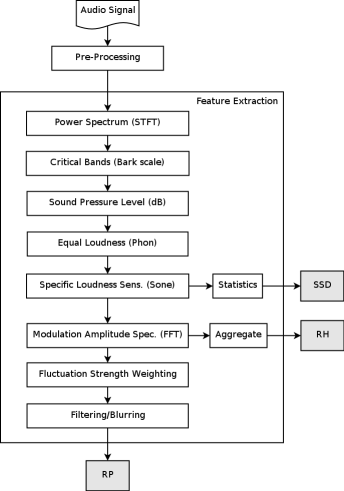

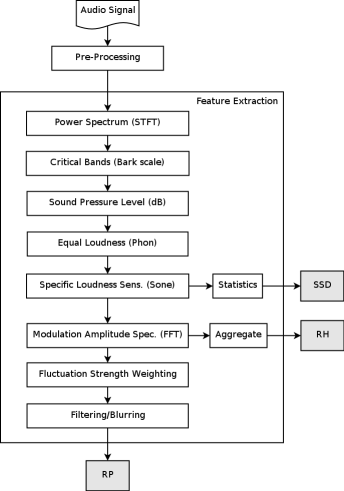

# Rhythm Patterns (also called Fluctuation Patterns) describe modulation amplitudes for a range of modulation frequencies on "critical bands" of the human auditory range, i.e. fluctuations (or rhythm) on a number of frequency bands. The feature extraction process for the Rhythm Patterns is composed of two stages:

#

# First, the specific loudness sensation in different frequency bands is computed, by using a Short Time FFT, grouping the resulting frequency bands to psycho-acoustically motivated critical-bands, applying spreading functions to account for masking effects and successive transformation into the decibel, Phon and Sone scales. This results in a power spectrum that reflects human loudness sensation (Sonogram).

#

# In the second step, the spectrum is transformed into a time-invariant representation based on the modulation frequency, which is achieved by applying another discrete Fourier transform, resulting in amplitude modulations of the loudness in individual critical bands. These amplitude modulations have different effects on human hearing sensation depending on their frequency, the most significant of which, referred to as fluctuation strength, is most intense at 4 Hz and decreasing towards 15 Hz. From that data, reoccurring patterns in the individual critical bands, resembling rhythm, are extracted, which – after applying Gaussian smoothing to diminish small variations – result in a time-invariant, comparable representation of the rhythmic patterns in the individual critical bands.

# In[62]:

extracted_features = rp_extract(wavedata,                             # the two-channel wave-data of the audio-file
                                samplerate,                           # the samplerate of the audio-file
                                extract_rp=True,                      # <== extract this feature!
                                transform_db=True,                    # apply psycho-acoustic transformation
                                transform_phon=True,                  # apply psycho-acoustic transformation
                                transform_sone=True,                  # apply psycho-acoustic transformation
                                fluctuation_strength_weighting=True,  # apply psycho-acoustic transformation
                                skip_leadin_fadeout=1,                # skip lead-in/fade-out. value = number of segments skipped
                                step_width=1)                         # analyze every step_width-th segment

# In[63]:

plotrp(extracted_features['rp'])
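# The second stage described in this section (a Fourier transform along the time axis of each critical band) can be illustrated on synthetic data. This is a simplified sketch, not the RP_extract implementation: it leaves out the psycho-acoustic transformations, the fluctuation strength weighting and the smoothing, and the numbers used here (512 frames per segment, a hop size of 512 samples at 44.1 kHz) as well as the name `modulation_amplitudes` are assumptions made for the example.

```python
import numpy as np

n_bands, n_frames = 24, 512        # critical bands x time frames of one segment
frame_rate = 44100.0 / 512         # frames per second (assumed hop of 512 samples)
n_mod_bins = 60                    # modulation frequency bins to keep

def modulation_amplitudes(sonogram, n_bins=n_mod_bins):
    """FFT along the time axis of each band; drop the DC bin, keep n_bins."""
    spectrum = np.fft.rfft(sonogram, axis=1)
    return np.abs(spectrum[:, 1:n_bins + 1])

# synthetic sonogram: constant loudness everywhere, one modulated band
t = np.arange(n_frames) / frame_rate
mod_freq = 12 * frame_rate / n_frames   # about 2 Hz, placed exactly on FFT bin 12
sonogram = np.ones((n_bands, n_frames))
sonogram[3] += 0.5 * np.sin(2 * np.pi * mod_freq * t)

rhythm_pattern = modulation_amplitudes(sonogram)
bin_width = frame_rate / n_frames       # about 0.17 Hz per modulation bin
strongest_bin = int(np.argmax(rhythm_pattern[3]))

print(rhythm_pattern.shape)             # (24, 60)
print((strongest_bin + 1) * bin_width)  # modulation frequency of the peak in Hz
```

# Only the modulated band shows energy, and it does so at the modulation frequency that was put in. With these assumed settings, 60 bins of roughly 0.17 Hz each cover modulation frequencies up to about 10 Hz.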

# ## Statistical Spectrum Descriptor

# The Sonogram is calculated as in the first part of the Rhythm Patterns calculation. According to the occurrence of beats or other rhythmic variation of energy on a specific critical band, statistical measures are able to describe the audio content. Our goal is to describe the rhythmic content of a piece of audio by computing the following statistical moments on the Sonogram values of each of the critical bands:

#

# * mean, median, variance, skewness, kurtosis, min- and max-value

# In[66]:

extracted_features = rp_extract(wavedata,                             # the two-channel wave-data of the audio-file
                                samplerate,                           # the samplerate of the audio-file
                                extract_ssd=True,                     # <== extract this feature!
                                transform_db=True,                    # apply psycho-acoustic transformation
                                transform_phon=True,                  # apply psycho-acoustic transformation
                                transform_sone=True,                  # apply psycho-acoustic transformation
                                fluctuation_strength_weighting=True,  # apply psycho-acoustic transformation
                                skip_leadin_fadeout=1,                # skip lead-in/fade-out. value = number of segments skipped
                                step_width=1)                         # analyze every step_width-th segment

# In[67]:

plotssd(extracted_features['ssd'])
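# The seven statistics can be computed per critical band with plain NumPy. The following is a sketch under assumptions rather than the library's code: the function name is hypothetical, the ordering of the statistics follows the list above (RP_extract may order them differently), kurtosis is the non-excess (Pearson) variant, and the Sonogram is random data of an assumed shape of 24 bands x 511 frames.

```python
import numpy as np

def statistical_spectrum_descriptor(sonogram):
    """Seven statistics per critical band; sonogram has shape (bands, frames)."""
    mean = sonogram.mean(axis=1)
    variance = sonogram.var(axis=1)
    centered = sonogram - mean[:, np.newaxis]
    std = np.sqrt(variance)
    safe_std = np.where(std > 0, std, 1.0)   # avoid division by zero for constant bands
    skewness = (centered ** 3).mean(axis=1) / safe_std ** 3
    kurtosis = (centered ** 4).mean(axis=1) / safe_std ** 4   # non-excess kurtosis
    return np.column_stack([mean, np.median(sonogram, axis=1), variance,
                            skewness, kurtosis,
                            sonogram.min(axis=1), sonogram.max(axis=1)])

rng = np.random.RandomState(0)
sonogram = rng.rand(24, 511)          # stand-in for a real Sonogram
ssd = statistical_spectrum_descriptor(sonogram)
print(ssd.shape)                      # (24, 7)
print(ssd.ravel().shape)              # (168,)
```

# Flattening the 24 x 7 matrix yields one 168-dimensional feature vector per segment.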

# ## Rhythm Histogram

# The Rhythm Histogram features we use are a descriptor for the general rhythmic characteristics of an audio document. Contrary to the Rhythm Patterns and the Statistical Spectrum Descriptor, information is not stored per critical band. Rather, the magnitudes of each modulation frequency bin of all critical bands are summed up, to form a histogram of "rhythmic energy" per modulation frequency. The histogram contains 60 bins which reflect modulation frequencies between 0 and 10 Hz. For a given piece of audio, the Rhythm Histogram feature set is calculated by taking the median of the histograms of every 6-second segment processed.

# In[68]:

extracted_features = rp_extract(wavedata,                             # the two-channel wave-data of the audio-file
                                samplerate,                           # the samplerate of the audio-file
                                extract_rh=True,                      # <== extract this feature!
                                transform_db=True,                    # apply psycho-acoustic transformation
                                transform_phon=True,                  # apply psycho-acoustic transformation
                                transform_sone=True,                  # apply psycho-acoustic transformation
                                fluctuation_strength_weighting=True,  # apply psycho-acoustic transformation
                                skip_leadin_fadeout=1,                # skip lead-in/fade-out. value = number of segments skipped
                                step_width=1)                         # analyze every step_width-th segment

# In[69]:

plotrh(extracted_features['rh'])
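# The aggregation described in this section (sum over the critical bands, then the median over all segments) can be sketched in a few lines. The array layout (segments x bands x modulation bins), the function name and the random input are assumptions for illustration only.

```python
import numpy as np

def rhythm_histogram(segment_patterns):
    """segment_patterns has shape (segments, bands, modulation bins)."""
    per_segment = segment_patterns.sum(axis=1)   # sum over the critical bands
    return np.median(per_segment, axis=0)        # median over the segments

rng = np.random.RandomState(1)
segment_patterns = rng.rand(5, 24, 60)   # 5 hypothetical segments
rh = rhythm_histogram(segment_patterns)
print(rh.shape)                          # (60,)
```

# Using the median rather than the mean keeps a single unusual segment (for example an intro without drums) from dominating the descriptor.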

# ## Modulation Frequency Variance Descriptor

# This descriptor measures variations over the critical frequency bands for a specific modulation frequency (derived from a rhythm pattern).

#

# Considering a rhythm pattern, i.e. a matrix representing the amplitudes of 60 modulation frequencies on 24 critical bands, an MVD vector is derived by computing statistical measures (mean, median, variance, skewness, kurtosis, min and max) for each modulation frequency over the 24 bands. A vector is computed for each of the 60 modulation frequencies. Then, an MVD descriptor for an audio file is computed by the mean of multiple MVDs from the audio file's segments, leading to a 420-dimensional vector.

# ## Temporal Statistical Spectrum Descriptor

# Feature sets are frequently computed on a per-segment basis and do not incorporate time series aspects. TSSD features address this by including a temporal dimension and thus describe variations over time. Statistical measures (mean, median, variance, skewness, kurtosis, min and max) are computed over the individual statistical spectrum descriptors extracted from segments at different time positions within a piece of audio. This captures timbral variations and changes over time in the audio spectrum, for all the critical Bark-bands. Thus, a change of rhythm, instruments, voices, etc. over time is reflected by this feature set. The dimension is 7 times the dimension of an SSD (i.e. 1176).

# ## Temporal Rhythm Histograms

# Statistical measures (mean, median, variance, skewness, kurtosis, min and max) are computed over the individual Rhythm Histograms extracted from various segments in a piece of audio. Thus, change and variation of rhythmic aspects in time are captured by this descriptor.
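# The vector lengths quoted in the feature descriptions above follow from simple arithmetic, which the following sketch spells out. It assumes 24 critical bands and 60 modulation frequency bins as stated in the text; the helper name and the dictionary are made up for this example, and the length of the Temporal Rhythm Histogram is derived here rather than taken from the text.

```python
STATISTICS = ("mean", "median", "variance", "skewness", "kurtosis", "min", "max")

N_BANDS = 24             # critical bands
N_MODULATION_BINS = 60   # modulation frequency bins

def descriptor_length(base_dimension, n_statistics=len(STATISTICS)):
    """Length of a descriptor that stores n_statistics values per base dimension."""
    return base_dimension * n_statistics

dimensions = {
    "rh": N_MODULATION_BINS,                       # one value per modulation bin
    "ssd": descriptor_length(N_BANDS),             # 7 statistics per band
    "mvd": descriptor_length(N_MODULATION_BINS),   # 7 statistics per modulation bin
}
dimensions["tssd"] = descriptor_length(dimensions["ssd"])   # 7 statistics per SSD value
dimensions["trh"] = descriptor_length(dimensions["rh"])     # 7 statistics per RH bin

for name in ("rh", "ssd", "mvd", "tssd", "trh"):
    print("%-4s %4d" % (name, dimensions[name]))
```

# The temporal descriptors grow by a factor of seven because each value of the underlying per-segment descriptor is summarized by seven statistics over time.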

# # 4. Application Scenarios

# In these application scenarios we try to find similar songs or classify music into different categories.

#

# For these Use Cases we need to import a few additional functions from the sklearn package and from mir_utils (installed from git above in parallel to rp_extract):

# In[2]:

# IMPORTING mir_utils (installed from git above in parallel to rp_extract; otherwise adjust the path)

import sys

sys.path.append("../mir_utils")

from demo.NotebookUtils import *

from demo.PlottingUtils import *

from demo.Soundcloud_Demo_Dataset import SoundcloudDemodatasetHandler

# In[3]:

# IMPORTS for Classification and Evaluation

from sklearn.preprocessing import StandardScaler

from sklearn import svm

# sklearn.cross_validation was replaced by sklearn.model_selection in newer scikit-learn releases
from sklearn.model_selection import StratifiedKFold, ShuffleSplit, cross_val_score

from sklearn.naive_bayes import GaussianNB

from sklearn.neighbors import NearestNeighbors

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import classification_report, confusion_matrix

# In[5]:

from IPython.display import HTML

myhtml= ''

HTML(myhtml)

# ## The Soundcloud Demo Dataset

# The Soundcloud Demo Dataset is a collection of commonly known mainstream radio songs hosted on the online streaming platform Soundcloud. The dataset is available as a playlist and is intended to demonstrate the performance of MIR algorithms with the help of well-known songs.

#

#

# The *SoundcloudDemodatasetHandler* abstracts the access to the TU-Wien server, where the extracted features are stored as CSV files. The *SoundcloudDemodatasetHandler* loads the features remotely and returns them on request. The features have been extracted using the methods explained in the previous sections.

# In[35]:

scds = SoundcloudDemodatasetHandler("D:/Research/Data/MIR/Soundcloud_Dataset", lazy=True)

# ## 4.1. Finding Similar Sounding Songs

# The query-song:

# In[6]:

query_track_soundcloud_id = 68687842

HTML(scds.getPlayerHTMLForID(query_track_soundcloud_id))

# Creating the similarity search object

# In[21]:

sim_song_search = NearestNeighbors(n_neighbors = 6, metric='euclidean')

# ### Finding rhythmically similar songs

# ##### Finding rhythmically similar songs using Rhythm Histograms

# In[22]:

feature_set = 'rh'

# Normalize the extracted features.

# In[23]:

scaled_feature_space = StandardScaler().fit_transform(rp_features[feature_set]["data"])

# Fit the Nearest-Neighbor search object to the extracted features

# In[24]:

sim_song_search.fit(scaled_feature_space);

# Retrieve the feature vector for the query song

# In[25]:

query_track_feature_vector = scaled_feature_space[rp_features[feature_set]["soundcloudids"] == query_track_soundcloud_id]

# Search the nearest neighbors of the query-feature-vector

# In[26]:

similar_songs = sim_song_search.kneighbors(query_track_feature_vector, return_distance=False)[0]

# Because we are searching in the entire collection, the top-most result is the query song itself. Thus, we can skip it.

# In[27]:

similar_songs = similar_songs[1:]

# Lookup the corresponding Soundcloud-IDs

# In[28]:

similar_soundcloud_ids = rp_features[feature_set]["soundcloudids"][similar_songs]

# Listen to the results

# In[39]:

SoundcloudTracklist(similar_soundcloud_ids, width=90, height=120, visual=False)
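# The individual steps above (scaling, fitting, querying, skipping the query itself, mapping back to IDs) can be reproduced on a tiny made-up collection. To stay self-contained, this sketch uses plain NumPy instead of scikit-learn: `standardize` mimics the default behaviour of `StandardScaler`, and `nearest_neighbors` is a brute-force stand-in for `NearestNeighbors` with the Euclidean metric. The track IDs and feature values are invented.

```python
import numpy as np

def standardize(features):
    """Zero mean and unit variance per column."""
    mean = features.mean(axis=0)
    std = features.std(axis=0)
    std[std == 0] = 1.0          # leave constant columns unscaled
    return (features - mean) / std

def nearest_neighbors(feature_space, query_index, n_neighbors):
    """Indices of the n_neighbors rows closest to the query row (Euclidean)."""
    distances = np.linalg.norm(feature_space - feature_space[query_index], axis=1)
    return np.argsort(distances)[:n_neighbors]

# hypothetical collection: 5 tracks, 2 features on very different scales
track_ids = np.array([101, 102, 103, 104, 105])
features = np.array([[0.0, 10.0],
                     [0.1, 11.0],
                     [5.0, 50.0],
                     [0.2, 10.5],
                     [5.1, 49.0]])
query_id = 101

scaled = standardize(features)
query_index = int(np.flatnonzero(track_ids == query_id)[0])
neighbors = nearest_neighbors(scaled, query_index, n_neighbors=3)
similar_ids = track_ids[neighbors[1:]]   # the first hit is the query itself
print(similar_ids)                       # [102 104]
```

# Without the scaling step, the second feature would dominate the distances simply because its values are larger.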

# ##### Finding rhythmically similar songs using Rhythm Patterns

# In[40]:

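def search_similar_songs(query_song_id, feature_set, skip_query=True):
    # normalize the extracted features
    scaled_feature_space = StandardScaler().fit_transform(rp_features[feature_set]["data"])
    # fit the nearest-neighbor search object to the scaled features
    sim_song_search.fit(scaled_feature_space)
    # retrieve the feature vector of the query song
    query_track_feature_vector = scaled_feature_space[rp_features[feature_set]["soundcloudids"] == query_song_id]
    # search the nearest neighbors of the query feature vector
    similar_songs = sim_song_search.kneighbors(query_track_feature_vector, return_distance=False)[0]
    # the top-most result is the query song itself; skip it if requested
    if skip_query:
        similar_songs = similar_songs[1:]
    # look up the corresponding Soundcloud IDs
    similar_soundcloud_ids = rp_features[feature_set]["soundcloudids"][similar_songs]
    return similar_soundcloud_ids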

# In[41]:

similar_soundcloud_ids = search_similar_songs(query_track_soundcloud_id,
                                              feature_set='rp')

SoundcloudTracklist(similar_soundcloud_ids, width=90, height=120, visual=False)

# ### Finding songs based on timbral similarity

# ##### Finding songs based on timbral similarity using Statistical Spectrum Descriptors

# In[42]:

similar_soundcloud_ids = search_similar_songs(query_track_soundcloud_id,
                                              feature_set='ssd')

SoundcloudTracklist(similar_soundcloud_ids, width=90, height=120, visual=False)

# First entry is query-track (TODO: change CSS style of query table cells!)

# In[43]:

results_track_1 = search_similar_songs(68687842, feature_set='ssd', skip_query=False)

results_track_2 = search_similar_songs(40439758, feature_set='rh', skip_query=False)

compareSimilarityResults([results_track_1, results_track_2],
                         width=100, height=120, visual=False,
                         columns=['Statistical Spectrum Descriptors', 'Rhythm Histograms'])

# ### Combining different Music Descriptors

# In[45]:

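def search_similar_songs_with_combined_sets(scds, query_song_id, feature_sets, skip_query=True, n_neighbors=6):
    # load the requested feature sets as one combined feature matrix
    features = scds.getCombinedFeaturesets(feature_sets)
    sim_song_search = NearestNeighbors(n_neighbors=n_neighbors, metric='l2')
    # normalize the combined features
    scaled_feature_space = StandardScaler().fit_transform(features)
    # fit the nearest-neighbor search object to the scaled features
    sim_song_search.fit(scaled_feature_space)
    # retrieve the feature vector of the query song
    query_track_feature_vector = scaled_feature_space[scds.getFeatureIndexByID(query_song_id, feature_sets[0])]
    # search the nearest neighbors of the query feature vector
    similar_songs = sim_song_search.kneighbors(query_track_feature_vector, return_distance=False)[0]
    # the top-most result is the query song itself; skip it if requested
    if skip_query:
        similar_songs = similar_songs[1:]
    # look up the corresponding Soundcloud IDs
    similar_soundcloud_ids = scds.getIdsByIndex(similar_songs, feature_sets[0])
    return similar_soundcloud_ids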

# In[47]:

feature_sets = ['ssd','rh']

compareSimilarityResults([search_similar_songs_with_combined_sets(scds, 68687842, feature_sets=feature_sets, n_neighbors=5),
                          search_similar_songs_with_combined_sets(scds, 40439758, feature_sets=feature_sets, n_neighbors=5)],
                         width=100, height=120, visual=False,
                         columns=[scds.getNameByID(68687842),
                                  scds.getNameByID(40439758)])
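# Combining descriptors amounts to placing the feature vectors of the same track side by side. How `getCombinedFeaturesets` does this internally is not shown in this tutorial; the following sketch only illustrates the idea under assumptions: each feature set is a dictionary with hypothetical keys `"ids"` and `"data"`, the rows of the sets may be ordered differently, and only tracks present in all sets are kept.

```python
import numpy as np

def combine_feature_sets(feature_sets):
    """Align several {'ids', 'data'} sets on their common ids and join the columns."""
    common_ids = np.unique(feature_sets[0]["ids"])
    for fs in feature_sets[1:]:
        common_ids = np.intersect1d(common_ids, fs["ids"])   # sorted and unique
    blocks = []
    for fs in feature_sets:
        order = np.argsort(fs["ids"])
        # position of every common id within this feature set
        positions = order[np.searchsorted(fs["ids"], common_ids, sorter=order)]
        blocks.append(fs["data"][positions])
    return common_ids, np.hstack(blocks)

# invented example data: two descriptors with different row order and coverage
ssd = {"ids": np.array([3, 1, 2]),
       "data": np.array([[30.0, 31.0], [10.0, 11.0], [20.0, 21.0]])}
rh = {"ids": np.array([2, 3, 4]),
      "data": np.array([[200.0], [300.0], [400.0]])}

ids, combined = combine_feature_sets([ssd, rh])
print(ids)        # [2 3]
print(combined)
```

# Because the combined columns come from descriptors with different value ranges and different numbers of dimensions, standardizing the combined matrix before the nearest-neighbor search (as the function above does with `StandardScaler`) keeps one descriptor from dominating the distance purely through scale.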

# # Further Reading

# * [Audio Feature Extraction site of the MIR-Team @TU-Wien](http://www.ifs.tuwien.ac.at/mir/audiofeatureextraction.html)

# * Blog-post: [A gentle Introduction to Music Information Retrieval](http://www.europeanasounds.eu/news/a-gentle-introduction-to-music-information-retrieval-making-computers-understand-music)

# * [Same Blog-post with Python code](http://wwwnew.schindler.eu.com/blog/mir_intro/blog_with_code.html)

#

# Rhythm Patterns (also called Fluctuation Patterns) describe modulation amplitudes for a range of modulation frequencies on "critical bands" of the human auditory range, i.e. fluctuations (or rhythm) on a number of frequency bands. The feature extraction process for the Rhythm Patterns is composed of two stages:

#

# First, the specific loudness sensation in different frequency bands is computed, by using a Short Time FFT, grouping the resulting frequency bands to psycho-acoustically motivated critical-bands, applying spreading functions to account for masking effects and successive transformation into the decibel, Phon and Sone scales. This results in a power spectrum that reflects human loudness sensation (Sonogram).

#

# In the second step, the spectrum is transformed into a time-invariant representation based on the modulation frequency, which is achieved by applying another discrete Fourier transform, resulting in amplitude modulations of the loudness in individual critical bands. These amplitude modulations have different effects on human hearing sensation depending on their frequency, the most significant of which, referred to as fluctuation strength, is most intense at 4 Hz and decreasing towards 15 Hz. From that data, reoccurring patterns in the individual critical bands, resembling rhythm, are extracted, which – after applying Gaussian smoothing to diminish small variations – result in a time-invariant, comparable representation of the rhythmic patterns in the individual critical bands.

# In[62]:

extracted_features = rp_extract(wavedata, # the two-channel wave-data of the audio-file

samplerate, # the samplerate of the audio-file

extract_rp = True, # <== extract this feature!

transform_db = True, # apply psycho-accoustic transformation

transform_phon = True, # apply psycho-accoustic transformation

transform_sone = True, # apply psycho-accoustic transformation

fluctuation_strength_weighting=True, # apply psycho-accoustic transformation

skip_leadin_fadeout = 1, # skip lead-in/fade-out. value = number of segments skipped

step_width = 1) #

# In[63]:

plotrp(extracted_features['rp'])

# ## Statistical Spectrum Descriptor

# The Sonogram is calculated as in the first part of the Rhythm Patterns calculation. According to the occurrence of beats or other rhythmic variation of energy on a specific critical band, statistical measures are able to describe the audio content. Our goal is to describe the rhythmic content of a piece of audio by computing the following statistical moments on the Sonogram values of each of the critical bands:

#

# * mean, median, variance, skewness, kurtosis, min- and max-value

# In[66]:

extracted_features = rp_extract(wavedata, # the two-channel wave-data of the audio-file

samplerate, # the samplerate of the audio-file

extract_ssd = True, # <== extract this feature!

transform_db = True, # apply psycho-accoustic transformation

transform_phon = True, # apply psycho-accoustic transformation

transform_sone = True, # apply psycho-accoustic transformation

fluctuation_strength_weighting=True, # apply psycho-accoustic transformation

skip_leadin_fadeout = 1, # skip lead-in/fade-out. value = number of segments skipped

step_width = 1) #

# In[67]:

plotssd(extracted_features['ssd'])

# ## Rhythm Histogram

# The Rhythm Histogram features we use are a descriptor for general rhythmics in an audio document. Contrary to the Rhythm Patterns and the Statistical Spectrum Descriptor, information is not stored per critical band. Rather, the magnitudes of each modulation frequency bin of all critical bands are summed up, to form a histogram of "rhythmic energy" per modulation frequency. The histogram contains 60 bins which reflect modulation frequency between 0 and 10 Hz. For a given piece of audio, the Rhythm Histogram feature set is calculated by taking the median of the histograms of every 6 second segment processed.

# In[68]:

extracted_features = rp_extract(wavedata, # the two-channel wave-data of the audio-file

samplerate, # the samplerate of the audio-file

extract_rh = True, # <== extract this feature!

transform_db = True, # apply psycho-accoustic transformation

transform_phon = True, # apply psycho-accoustic transformation

transform_sone = True, # apply psycho-accoustic transformation

fluctuation_strength_weighting=True, # apply psycho-accoustic transformation

skip_leadin_fadeout = 1, # skip lead-in/fade-out. value = number of segments skipped

step_width = 1) #

# In[69]:

plotrh(extracted_features['rh'])

# ## Modulation Frequency Variance Descriptor

# This descriptor measures variations over the critical frequency bands for a specific modulation frequency (derived from a rhythm pattern).

#

# Considering a rhythm pattern, i.e. a matrix representing the amplitudes of 60 modulation frequencies on 24 critical bands, an MVD vector is derived by computing statistical measures (mean, median, variance, skewness, kurtosis, min and max) for each modulation frequency over the 24 bands. A vector is computed for each of the 60 modulation frequencies. Then, an MVD descriptor for an audio file is computed by the mean of multiple MVDs from the audio file's segments, leading to a 420-dimensional vector.

# ## Temporal Statistical Spectrum Descriptor

# Feature sets are frequently computed on a per segment basis and do not incorporate time series aspects. As a consequence, TSSD features describe variations over time by including a temporal dimension. Statistical measures (mean, median, variance, skewness, kurtosis, min and max) are computed over the individual statistical spec- trum descriptors extracted from segments at different time positions within a piece of audio. This captures timbral variations and changes over time in the audio spectrum, for all the critical Bark-bands. Thus, a change of rhythmic, instruments, voices, etc. over time is reflected by this feature set. The dimension is 7 times the dimension of an SSD (i.e. 1176).

# ## Temporal Rhythm Histograms

# Statistical measures (mean, median, variance, skewness, kurtosis, min and max) are computed over the individual Rhythm Histograms extracted from various segments in a piece of audio. Thus, change and variation of rhythmic aspects in time are captured by this descriptor.

# # 4. Application Scenarios

# In these application scenarios we try to find similar songs or classify music into different categories.

#

# For these Use Cases we need to import a few additional functions from the sklearn package and from mir_utils (installed from git above in parallel to rp_extract):

# In[2]:

# IMPORTING mir_utils (installed from git above in parallel to rp_extract (otherwise ajust path))

import sys

sys.path.append("../mir_utils")

from demo.NotebookUtils import *

from demo.PlottingUtils import *

from demo.Soundcloud_Demo_Dataset import SoundcloudDemodatasetHandler

# In[3]:

# IMPORTS for Classification and Evaluation

from sklearn.preprocessing import StandardScaler

from sklearn import svm

from sklearn.cross_validation import StratifiedKFold, ShuffleSplit, cross_val_score

from sklearn.naive_bayes import GaussianNB

from sklearn.neighbors import NearestNeighbors

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import classification_report, confusion_matrix

# In[5]:

from IPython.display import HTML

myhtml= ''

HTML(myhtml)

# ## The Soundcloud Demo Dataset

# The Soundcloud Demo Dataset is a collection of commonly known mainstream radio songs hosted on the online streaming platform Soundcloud. The Dataset is available as playlist and is intended to be used to demonstrate the performance of MIR algorithms with the help of well known songs.

#

#

# The *SoundcloudDemodatasetHandler* abstracts the access to the TU-Wien server. On this server the extracted features are stored as csv-files. The *SoundcloudDemodatasetHandler* remotely loads the features and returns them by request. The features have been extracted using the method explained in the previous sections.

# In[35]:

scds = SoundcloudDemodatasetHandler("D:/Research/Data/MIR/Soundcloud_Dataset", lazy=True)

# ## 4.1. Finding Similar Sounding Songs

# The query-song:

# In[6]:

query_track_soundcloud_id = 68687842

HTML(scds.getPlayerHTMLForID(query_track_soundcloud_id))

# Fitting the siilarity search object

# In[21]:

sim_song_search = NearestNeighbors(n_neighbors = 6, metric='euclidean')

# ### Finding rhythmical similar songs

# ##### Finding rhythmical similar songs using Rhythm Histograms

# In[22]:

feature_set = 'rh'

# Normalize the extracted features.

# In[23]:

scaled_feature_space = StandardScaler().fit_transform(rp_features[feature_set]["data"])

# Fit the Nearest-Neighbor search object to the extracted features

# In[24]:

sim_song_search.fit(scaled_feature_space);

# Retrieve the feature vector for the query song

# In[25]:

query_track_feature_vector = scaled_feature_space[rp_features[feature_set]["soundcloudids"] == query_track_soundcloud_id]

# Search the nearest neighbors of the query-feature-vector

# In[26]:

similar_songs = sim_song_search.kneighbors(query_track_feature_vector, return_distance=False)[0]

# Because we are searching in the entire collection, the top-most result is the query song itself. Thus, we can skip it.

# In[27]:

similar_songs = similar_songs[1:]

# Lookup the corresponding Soundcloud-IDs

# In[28]:

similar_soundcloud_ids = rp_features[feature_set]["soundcloudids"][similar_songs]

# Listen to the results

# In[39]:

SoundcloudTracklist(similar_soundcloud_ids, width=90, height=120, visual=False)

# ##### Finding rhythmical similar songs using Rhythm Patterns

# In[40]:

def search_similar_songs(query_song_id, feature_set, skip_query=True):

#

scaled_feature_space = StandardScaler().fit_transform(rp_features[feature_set]["data"])

#

sim_song_search.fit(scaled_feature_space);

#

query_track_feature_vector = scaled_feature_space[rp_features[feature_set]["soundcloudids"] == query_song_id]

#

similar_songs = sim_song_search.kneighbors(query_track_feature_vector, return_distance=False)[0]

if skip_query:

similar_songs = similar_songs[1:]

#

similar_soundcloud_ids = rp_features[feature_set]["soundcloudids"][similar_songs]

return similar_soundcloud_ids


# ## Rhythm Patterns

# Rhythm Patterns (also called Fluctuation Patterns) describe modulation amplitudes for a range of modulation frequencies on "critical bands" of the human auditory range, i.e. fluctuations (or rhythm) on a number of frequency bands. The feature extraction process for the Rhythm Patterns is composed of two stages:

#

# First, the specific loudness sensation in different frequency bands is computed. This is done by applying a short-time FFT, grouping the resulting frequency bins into psychoacoustically motivated critical bands, applying spreading functions to account for masking effects, and successively transforming the result into the decibel, Phon and Sone scales. The result is a power spectrum that reflects human loudness sensation (Sonogram).

#

# In the second step, the spectrum is transformed into a time-invariant representation based on the modulation frequency. This is achieved by applying another discrete Fourier transform, which yields the amplitude modulations of the loudness in the individual critical bands. These amplitude modulations affect human hearing sensation differently depending on their frequency; the most significant effect, referred to as fluctuation strength, is most intense at 4 Hz and decreases towards 15 Hz. From that data, recurring patterns in the individual critical bands, resembling rhythm, are extracted. After Gaussian smoothing to diminish small variations, they form a time-invariant, comparable representation of the rhythmic patterns in the individual critical bands.
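# The second stage can be sketched on synthetic data. This is only an illustration, not the code inside `rp_extract`: the helper `modulation_spectrum`, the frame rate of 64 frames per second and the 512-frame segment are assumptions, chosen so that a 4 Hz loudness modulation falls exactly on one modulation bin. Fluctuation strength weighting and smoothing are left out.

```python
import numpy as np

def modulation_spectrum(sonogram, n_mod_bins=60):
    """Magnitude spectrum of each band's loudness curve over time.

    sonogram: array of shape (n_bands, n_frames).
    Returns an array of shape (n_bands, n_mod_bins); the DC bin is dropped.
    """
    spectrum = np.abs(np.fft.rfft(sonogram, axis=1))
    return spectrum[:, 1:n_mod_bins + 1]

# Hypothetical segment: 24 critical bands, 512 frames at 64 frames per second
demo_frame_rate = 64.0
demo_n_frames = 512
demo_t = np.arange(demo_n_frames) / demo_frame_rate

demo_sonogram = np.ones((24, demo_n_frames))
demo_sonogram[5] += 0.5 * np.sin(2 * np.pi * 4.0 * demo_t)  # band 5 pulses at 4 Hz

demo_rp = modulation_spectrum(demo_sonogram)

# Centre frequency (in Hz) of each retained modulation bin
demo_mod_freqs = np.arange(1, 61) * demo_frame_rate / demo_n_frames
demo_peak_freq = demo_mod_freqs[np.argmax(demo_rp[5])]
print(demo_rp.shape, demo_peak_freq)
```

# Each row of `demo_rp` describes how strongly the loudness of one band fluctuates at each modulation frequency, which is the band-by-modulation-frequency layout of a rhythm pattern.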

# In[62]:

extracted_features = rp_extract(wavedata,                             # the two-channel wave data of the audio file
                                samplerate,                           # the sample rate of the audio file
                                extract_rp=True,                      # <== extract this feature!
                                transform_db=True,                    # psychoacoustic transformation: decibel scale
                                transform_phon=True,                  # psychoacoustic transformation: Phon scale
                                transform_sone=True,                  # psychoacoustic transformation: Sone scale
                                fluctuation_strength_weighting=True,  # psychoacoustic weighting by fluctuation strength
                                skip_leadin_fadeout=1,                # skip lead-in/fade-out; value = number of segments skipped
                                step_width=1)

# In[63]:

plotrp(extracted_features['rp'])

# ## Statistical Spectrum Descriptor

# The Sonogram is calculated as in the first part of the Rhythm Patterns calculation. Statistical measures can describe the audio content according to the occurrence of beats or other rhythmic variations of energy on a specific critical band. Our goal is to describe the rhythmic content of a piece of audio by computing the following statistical measures on the Sonogram values of each critical band:

#

# * mean, median, variance, skewness, kurtosis, min- and max-value
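# A minimal sketch of these per-band statistics on a made-up Sonogram follows. The helper `ssd_sketch`, the use of the population variance, the non-excess kurtosis and the column order (taken from the list above) are assumptions; the `rp_extract` library may order or normalize the values differently.

```python
import numpy as np

def ssd_sketch(sonogram):
    """Seven statistics per critical band; sonogram has shape (n_bands, n_frames)."""
    mean = sonogram.mean(axis=1)
    centered = sonogram - mean[:, np.newaxis]
    variance = (centered ** 2).mean(axis=1)                    # population variance
    skewness = (centered ** 3).mean(axis=1) / variance ** 1.5
    kurtosis = (centered ** 4).mean(axis=1) / variance ** 2    # non-excess kurtosis
    return np.column_stack([mean,
                            np.median(sonogram, axis=1),
                            variance, skewness, kurtosis,
                            sonogram.min(axis=1),
                            sonogram.max(axis=1)])

# Hypothetical Sonogram: 24 critical bands x 512 frames of random values
demo_rng = np.random.RandomState(0)
demo_sonogram = demo_rng.rand(24, 512)

demo_ssd = ssd_sketch(demo_sonogram)      # one row of seven values per band
demo_ssd_vector = demo_ssd.flatten()      # 24 x 7 = 168 values
print(demo_ssd.shape, demo_ssd_vector.shape)
```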

# In[66]:

extracted_features = rp_extract(wavedata,                             # the two-channel wave data of the audio file
                                samplerate,                           # the sample rate of the audio file
                                extract_ssd=True,                     # <== extract this feature!
                                transform_db=True,                    # psychoacoustic transformation: decibel scale
                                transform_phon=True,                  # psychoacoustic transformation: Phon scale
                                transform_sone=True,                  # psychoacoustic transformation: Sone scale
                                fluctuation_strength_weighting=True,  # psychoacoustic weighting by fluctuation strength
                                skip_leadin_fadeout=1,                # skip lead-in/fade-out; value = number of segments skipped
                                step_width=1)

# In[67]:

plotssd(extracted_features['ssd'])

# ## Rhythm Histogram

# The Rhythm Histogram features are a descriptor for the general rhythmic characteristics of an audio document. In contrast to the Rhythm Patterns and the Statistical Spectrum Descriptor, information is not stored per critical band. Rather, the magnitudes of each modulation frequency bin are summed over all critical bands to form a histogram of "rhythmic energy" per modulation frequency. The histogram contains 60 bins, which reflect modulation frequencies between 0 and 10 Hz. For a given piece of audio, the Rhythm Histogram feature set is calculated by taking the median of the histograms of all 6-second segments processed.
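# The two aggregation steps described above (sum over bands, median over segments) can be sketched as follows. The input `demo_rp_segments` is random placeholder data standing in for the rhythm patterns of five segments; it is an assumption for illustration, not output of `rp_extract`.

```python
import numpy as np

demo_rng = np.random.RandomState(0)

# Hypothetical input: rhythm patterns of 5 segments,
# each with 24 critical bands x 60 modulation frequency bins
demo_rp_segments = demo_rng.rand(5, 24, 60)

# Sum over the critical bands: one 60-bin histogram per segment
demo_rh_segments = demo_rp_segments.sum(axis=1)

# Median over the segments: one 60-bin histogram for the whole piece
demo_rh = np.median(demo_rh_segments, axis=0)
print(demo_rh_segments.shape, demo_rh.shape)
```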

# In[68]:

extracted_features = rp_extract(wavedata,                             # the two-channel wave data of the audio file
                                samplerate,                           # the sample rate of the audio file
                                extract_rh=True,                      # <== extract this feature!
                                transform_db=True,                    # psychoacoustic transformation: decibel scale
                                transform_phon=True,                  # psychoacoustic transformation: Phon scale
                                transform_sone=True,                  # psychoacoustic transformation: Sone scale
                                fluctuation_strength_weighting=True,  # psychoacoustic weighting by fluctuation strength
                                skip_leadin_fadeout=1,                # skip lead-in/fade-out; value = number of segments skipped
                                step_width=1)

# In[69]:

plotrh(extracted_features['rh'])

# ## Modulation Frequency Variance Descriptor

# This descriptor (MVD) measures variations over the critical frequency bands for a specific modulation frequency (derived from a rhythm pattern).

#

# Considering a rhythm pattern, i.e. a matrix representing the amplitudes of 60 modulation frequencies on 24 critical bands, an MVD vector is derived by computing statistical measures (mean, median, variance, skewness, kurtosis, min and max) for each modulation frequency over the 24 bands, so that one vector of seven values is computed for each of the 60 modulation frequencies. The MVD for an audio file is then the mean of the MVDs of the file's segments, leading to a 420-dimensional vector (60 modulation frequencies × 7 measures).

# ## Temporal Statistical Spectrum Descriptor

# Feature sets are frequently computed on a per-segment basis and do not incorporate time-series aspects. TSSD features address this by including a temporal dimension that describes variations over time. Statistical measures (mean, median, variance, skewness, kurtosis, min and max) are computed over the individual Statistical Spectrum Descriptors extracted from segments at different time positions within a piece of audio. This captures timbral variations and changes over time in the audio spectrum for all the critical Bark bands. Thus, a change of rhythm, instruments, voices, etc. over time is reflected by this feature set. The dimension is 7 times the dimension of an SSD (i.e. 7 × 168 = 1176).

# ## Temporal Rhythm Histograms

# Statistical measures (mean, median, variance, skewness, kurtosis, min and max) are computed over the individual Rhythm Histograms extracted from various segments in a piece of audio. Thus, change and variation of rhythmic aspects in time are captured by this descriptor.
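# The Modulation Frequency Variance Descriptor and the two temporal descriptors above apply the same seven measures, only along different axes. The sketch below makes that explicit on random placeholder data. The helper `seven_stats`, the population variance and the non-excess kurtosis are assumptions, and the value order inside the resulting vectors may differ from what `rp_extract` produces. The lengths 420 and 1176 match those stated in the text; the length of the Temporal Rhythm Histogram follows from 60 bins × 7 measures.

```python
import numpy as np

def seven_stats(values, axis):
    """Mean, median, variance, skewness, kurtosis, min and max along `axis`.

    The seven measures are stacked along a new last axis.
    """
    mean = values.mean(axis=axis, keepdims=True)
    centered = values - mean
    variance = (centered ** 2).mean(axis=axis)                    # population variance
    skewness = (centered ** 3).mean(axis=axis) / variance ** 1.5
    kurtosis = (centered ** 4).mean(axis=axis) / variance ** 2    # non-excess kurtosis
    return np.stack([mean.squeeze(axis), np.median(values, axis=axis),
                     variance, skewness, kurtosis,
                     values.min(axis=axis), values.max(axis=axis)], axis=-1)

demo_rng = np.random.RandomState(0)
demo_n_segments = 5

# Hypothetical per-segment features
demo_rp_segments = demo_rng.rand(demo_n_segments, 24, 60)   # rhythm patterns
demo_ssd_segments = demo_rng.rand(demo_n_segments, 168)     # SSD vectors (24 bands x 7)
demo_rh_segments = demo_rng.rand(demo_n_segments, 60)       # rhythm histograms

# MVD: statistics over the 24 bands (axis 1), then the mean over the segments
demo_mvd = seven_stats(demo_rp_segments, axis=1).mean(axis=0).flatten()

# TSSD and TRH: statistics over the segments (axis 0)
demo_tssd = seven_stats(demo_ssd_segments, axis=0).flatten()
demo_trh = seven_stats(demo_rh_segments, axis=0).flatten()

print(demo_mvd.shape, demo_tssd.shape, demo_trh.shape)
```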

# # 4. Application Scenarios

# In these application scenarios we try to find similar songs or classify music into different categories.

#

# For these use cases we need to import a few additional functions from the sklearn package and from mir_utils (installed from git above in parallel to rp_extract):

# In[2]:

# IMPORTING mir_utils (installed from git above in parallel to rp_extract; otherwise adjust the path)

import sys

sys.path.append("../mir_utils")

from demo.NotebookUtils import *

from demo.PlottingUtils import *

from demo.Soundcloud_Demo_Dataset import SoundcloudDemodatasetHandler

# In[3]:

# IMPORTS for Classification and Evaluation

from sklearn.preprocessing import StandardScaler

from sklearn import svm

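try:
    from sklearn.model_selection import StratifiedKFold, ShuffleSplit, cross_val_score
except ImportError:  # scikit-learn versions before 0.18
    from sklearn.cross_validation import StratifiedKFold, ShuffleSplit, cross_val_score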

from sklearn.naive_bayes import GaussianNB

from sklearn.neighbors import NearestNeighbors

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import classification_report, confusion_matrix

# In[5]:

from IPython.display import HTML

myhtml= ''

HTML(myhtml)

# ## The Soundcloud Demo Dataset

# The Soundcloud Demo Dataset is a collection of commonly known mainstream radio songs hosted on the online streaming platform Soundcloud. The dataset is available as a playlist and is intended to demonstrate the performance of MIR algorithms with the help of well-known songs.

#

#

# The *SoundcloudDemodatasetHandler* abstracts the access to the TU-Wien server, where the extracted features are stored as CSV files. The *SoundcloudDemodatasetHandler* loads the features remotely and returns them on request. The features have been extracted using the method explained in the previous sections.

# In[35]:

scds = SoundcloudDemodatasetHandler("D:/Research/Data/MIR/Soundcloud_Dataset", lazy=True)

# ## 4.1. Finding Similar Sounding Songs

# The query-song:

# In[6]:

query_track_soundcloud_id = 68687842

HTML(scds.getPlayerHTMLForID(query_track_soundcloud_id))

# Creating the similarity search object

# In[21]:

sim_song_search = NearestNeighbors(n_neighbors = 6, metric='euclidean')

# ### Finding rhythmically similar songs

# ##### Finding rhythmically similar songs using Rhythm Histograms

# In[22]:

feature_set = 'rh'

# Normalize the extracted features.

# In[23]:

scaled_feature_space = StandardScaler().fit_transform(rp_features[feature_set]["data"])

# Fit the Nearest-Neighbor search object to the extracted features

# In[24]:

sim_song_search.fit(scaled_feature_space);

# Retrieve the feature vector for the query song

# In[25]:

query_track_feature_vector = scaled_feature_space[rp_features[feature_set]["soundcloudids"] == query_track_soundcloud_id]

# Search the nearest neighbors of the query-feature-vector

# In[26]:

similar_songs = sim_song_search.kneighbors(query_track_feature_vector, return_distance=False)[0]

# Because we are searching in the entire collection, the top-most result is the query song itself. Thus, we can skip it.

# In[27]:

similar_songs = similar_songs[1:]

# Look up the corresponding Soundcloud-IDs

# In[28]:

similar_soundcloud_ids = rp_features[feature_set]["soundcloudids"][similar_songs]

# Listen to the results

# In[39]:

SoundcloudTracklist(similar_soundcloud_ids, width=90, height=120, visual=False)
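# To try the same sequence of steps without access to the dataset, here is a minimal sketch on a made-up feature matrix. It swaps scikit-learn's `StandardScaler` and `NearestNeighbors` for plain NumPy (z-scoring and brute-force Euclidean distances); `toy_features`, `toy_ids` and `toy_query_id` are invented for illustration.

```python
import numpy as np

# Hypothetical collection: 5 songs with 2 features each, plus their IDs
toy_features = np.array([[0.0, 0.0],
                         [0.1, 0.0],
                         [5.0, 5.0],
                         [0.0, 0.2],
                         [9.0, 9.0]])
toy_ids = np.array([101, 102, 103, 104, 105])
toy_query_id = 101

# Normalize: zero mean and unit variance per feature column
toy_scaled = (toy_features - toy_features.mean(axis=0)) / toy_features.std(axis=0)

# Retrieve the feature vector of the query song
toy_query_vector = toy_scaled[toy_ids == toy_query_id][0]

# Euclidean distance from the query to every song, nearest first
toy_distances = np.sqrt(((toy_scaled - toy_query_vector) ** 2).sum(axis=1))
toy_neighbors = np.argsort(toy_distances, kind='mergesort')[:3]

# The nearest "neighbor" is the query itself (distance 0), so skip it
toy_similar_ids = toy_ids[toy_neighbors[1:]]
print(toy_similar_ids)
```

# Because the query is part of the searched collection, asking for three neighbors leaves two results once the query has been removed; the same holds for `n_neighbors = 6` in the cells above, which leaves five.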

# ##### Finding rhythmically similar songs using Rhythm Patterns

# In[40]:

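def search_similar_songs(query_song_id, feature_set, skip_query=True):
    # Normalize the extracted features
    scaled_feature_space = StandardScaler().fit_transform(rp_features[feature_set]["data"])
    # Fit the nearest-neighbor search object (created above) to the features
    sim_song_search.fit(scaled_feature_space)
    # Retrieve the feature vector for the query song
    query_track_feature_vector = scaled_feature_space[rp_features[feature_set]["soundcloudids"] == query_song_id]
    # Search the nearest neighbors of the query feature vector
    similar_songs = sim_song_search.kneighbors(query_track_feature_vector, return_distance=False)[0]
    # The top-most result is the query song itself
    if skip_query:
        similar_songs = similar_songs[1:]
    # Look up the corresponding Soundcloud IDs
    similar_soundcloud_ids = rp_features[feature_set]["soundcloudids"][similar_songs]
    return similar_soundcloud_ids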

# In[41]:

similar_soundcloud_ids = search_similar_songs(query_track_soundcloud_id,
                                              feature_set='rp')

SoundcloudTracklist(similar_soundcloud_ids, width=90, height=120, visual=False)

# ### Finding songs based on timbral similarity

# ##### Finding songs based on timbral similarity using Statistical Spectrum Descriptors

# In[42]:

similar_soundcloud_ids = search_similar_songs(query_track_soundcloud_id,
                                              feature_set='ssd')

SoundcloudTracklist(similar_soundcloud_ids, width=90, height=120, visual=False)

# The first entry in each column is the query track (TODO: change CSS style of query table cells!)

# In[43]:

results_track_1 = search_similar_songs(68687842, feature_set='ssd', skip_query=False)
results_track_2 = search_similar_songs(40439758, feature_set='rh', skip_query=False)

compareSimilarityResults([results_track_1, results_track_2],
                         width=100, height=120, visual=False,
                         columns=['Statistical Spectrum Descriptors', 'Rhythm Histograms'])

# ### Combining different Music Descriptors

# In[45]:

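def search_similar_songs_with_combined_sets(scds, query_song_id, feature_sets, skip_query=True, n_neighbors=6):
    # Combine the requested feature sets into one feature space
    features = scds.getCombinedFeaturesets(feature_sets)
    sim_song_search = NearestNeighbors(n_neighbors=n_neighbors, metric='l2')
    # Normalize the combined features
    scaled_feature_space = StandardScaler().fit_transform(features)
    # Fit the nearest-neighbor search object to the features
    sim_song_search.fit(scaled_feature_space)
    # Retrieve the feature vector for the query song
    query_track_feature_vector = scaled_feature_space[scds.getFeatureIndexByID(query_song_id, feature_sets[0])]
    # Search the nearest neighbors; kneighbors expects a 2-D array (n_queries, n_features)
    similar_songs = sim_song_search.kneighbors(query_track_feature_vector.reshape(1, -1), return_distance=False)[0]
    # The top-most result is the query song itself
    if skip_query:
        similar_songs = similar_songs[1:]
    # Look up the corresponding Soundcloud IDs
    similar_soundcloud_ids = scds.getIdsByIndex(similar_songs, feature_sets[0])
    return similar_soundcloud_ids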

# In[47]:

feature_sets = ['ssd', 'rh']

compareSimilarityResults([search_similar_songs_with_combined_sets(scds, 68687842, feature_sets=feature_sets, n_neighbors=5),
                          search_similar_songs_with_combined_sets(scds, 40439758, feature_sets=feature_sets, n_neighbors=5)],
                         width=100, height=120, visual=False,
                         columns=[scds.getNameByID(68687842),
                                  scds.getNameByID(40439758)])
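# How `getCombinedFeaturesets` merges the feature sets is not shown in this tutorial. One plausible reading, and only an assumption, is a column-wise concatenation of the per-set matrices. The sketch below uses random placeholder matrices (`demo_ssd`, `demo_rh`) to illustrate why the standardization step matters in that case: each column is brought to zero mean and unit variance before distances are computed, so a feature set with larger raw values does not dominate purely because of its scale.

```python
import numpy as np

demo_rng = np.random.RandomState(0)

# Hypothetical feature matrices for the same 10 songs (rows must be aligned)
demo_ssd = demo_rng.rand(10, 168) * 100.0   # deliberately large raw values
demo_rh = demo_rng.rand(10, 60)             # small raw values

# Column-wise concatenation: 168 + 60 = 228 features per song
demo_combined = np.hstack([demo_ssd, demo_rh])

# Standardize every column (plain-NumPy stand-in for StandardScaler)
demo_scaled = (demo_combined - demo_combined.mean(axis=0)) / demo_combined.std(axis=0)
print(demo_combined.shape)
```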

# # Further Reading

# * [Audio Feature Extraction site of the MIR-Team @TU-Wien](http://www.ifs.tuwien.ac.at/mir/audiofeatureextraction.html)

# * Blog-post: [A gentle Introduction to Music Information Retrieval](http://www.europeanasounds.eu/news/a-gentle-introduction-to-music-information-retrieval-making-computers-understand-music)

# * [Same Blog-post with Python code](http://wwwnew.schindler.eu.com/blog/mir_intro/blog_with_code.html)
