#!/usr/bin/env python

# coding: utf-8

# # Collaborative filtering

#

# * __Collaborative filtering__: In general, the process of filtering data by combining data from many different sources/agents. In the context of recommendation systems, it means making predictions (filtering) by collecting data from lots of different users about their preferences/habits (collaboration).

#

#

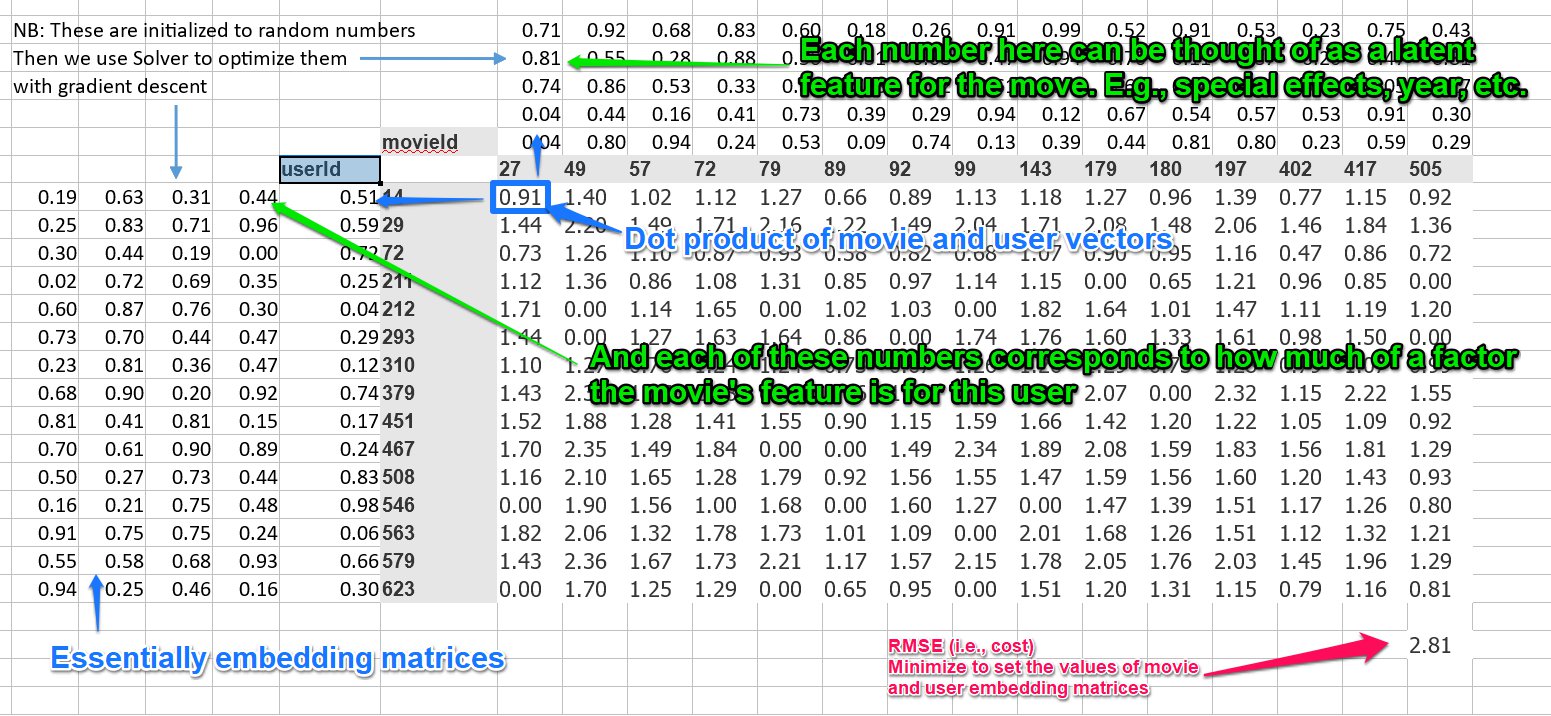

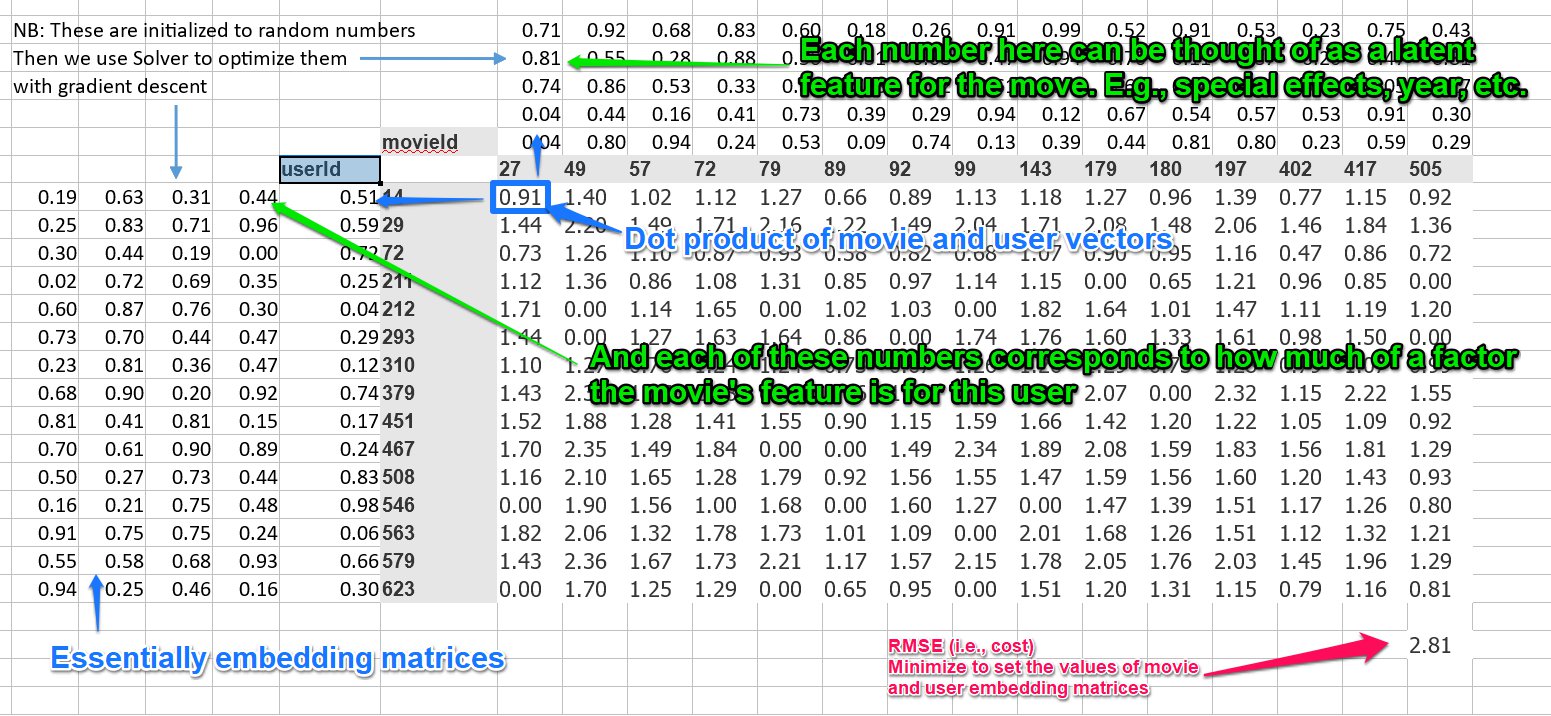

# * __Matrix decomposition/factoring__: The process of taking a single matrix and expressing it as the product of several matrices. Gradient descent is one way to find such a factorization. For example, suppose we have a table (matrix) of {users}x{movies}, filled with the scores users gave those movies. We could have two matrices: one of movie factors and one of user factors. We then set the values in those two matrices via gradient descent so that their product matches the actual scores users gave the movies as closely as possible. We have thereby expressed the score matrix as the product of the user-factor and movie-factor matrices. (Strictly speaking, what we do is not a true matrix decomposition, because we fill in 0 values.)
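# A minimal NumPy sketch of the shapes involved, with made-up sizes and random values standing in for learned factors: multiplying a user-factor matrix by the transpose of a movie-factor matrix gives one predicted score per user/movie pair, and each entry is a dot product.

```python
import numpy as np

# Hypothetical sizes for illustration only
n_users, n_movies, n_factors = 4, 3, 2

rng = np.random.default_rng(0)
user_factors = rng.random((n_users, n_factors))    # one row of factors per user
movie_factors = rng.random((n_movies, n_factors))  # one row of factors per movie

# The product has one predicted score per (user, movie) pair
predicted_scores = user_factors @ movie_factors.T
print(predicted_scores.shape)  # (4, 3)

# Each entry is the dot product of that user's factors with that movie's factors
assert np.isclose(predicted_scores[2, 1], user_factors[2] @ movie_factors[1])
```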

#

#

# * __Observed features/Latent features__: (aka "factors" or "variables") Observed features are the features that are explicitly read into the model, for example the words in a text. Latent features are "hidden" features, usually "discovered" from some aggregate of the observed features, for example the topic of a text. In the matrix decomposition above, each movie's column in the movie-factor matrix could hold values for qualities like special effects, year of release, etc., and each user's row in the user-factor matrix could hold how much that user values each of those qualities. Those qualities, like special effects, would be the latent features: features that can't be observed directly, as opposed to the observed ones that can.
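# A toy illustration, assuming hand-labelled latent features (in a trained model the factors carry no labels; names like "special effects" are only our interpretation): the predicted affinity is the dot product of a user's preferences with a movie's features.

```python
import numpy as np

# Hypothetical latent features: [special effects, dialogue, action]
movie_features = np.array([0.9, 0.2, 0.8])     # a big-budget action movie
user_preferences = np.array([1.0, -0.5, 0.7])  # likes effects and action, dislikes talky films

# Large when the user's preferences line up with the movie's features
score = user_preferences @ movie_features
```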

# Let's take a look at collab_filter.xlsx as an example:

#


# This whole process could be thought of as _collaborative filtering using matrix decomposition_. (We're breaking our matrix into 2 different matrices and using them to make predictions.)

#

# In the spreadsheet, the matrix above the movies and the matrix to the left of the users are the embedding matrices for the movies and the users, respectively.

#

# So, in summary, the process for this shallow learning is:

#

# 1. Randomly initialize the embedding matrices for the movies and users; their product gives the predicted user/movie score matrix

# 2. Set up your cost function

# 3. Minimize your cost function using gradient descent, thus setting more accurate embedding matrix values
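# The three steps can be sketched in plain NumPy. This is an illustrative toy, not the fast.ai implementation: the ratings matrix is made up, 0 is assumed to mark a missing rating, and the cost is MSE over the observed ratings only.

```python
import numpy as np

rng = np.random.default_rng(42)

# Toy ratings matrix (users x movies); 0 marks a missing rating in this sketch
toy_ratings = np.array([
    [5., 3., 0., 1.],
    [4., 0., 0., 1.],
    [1., 1., 0., 5.],
    [0., 1., 5., 4.],
])
observed = toy_ratings > 0
n_users, n_movies = toy_ratings.shape
n_factors = 2

# Step 1: randomly initialized embedding matrices
U = rng.normal(scale=0.1, size=(n_users, n_factors))
M = rng.normal(scale=0.1, size=(n_movies, n_factors))

def loss(U, M):
    # Step 2: cost = mean squared error over observed ratings only
    err = (U @ M.T - toy_ratings)[observed]
    return np.mean(err ** 2)

lr = 0.05
initial_loss = loss(U, M)
for step in range(2000):
    # Step 3: gradient descent on both embedding matrices (missing cells contribute 0)
    err = np.where(observed, U @ M.T - toy_ratings, 0.0)
    grad_U = 2 * err @ M / observed.sum()
    grad_M = 2 * err.T @ U / observed.sum()
    U -= lr * grad_U
    M -= lr * grad_M

final_loss = loss(U, M)
print(initial_loss, final_loss)
```

Because the cost only counts observed cells, the full product `U @ M.T` also gives predictions for the missing ones.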

# ## Collaborative filtering using fast.ai

# In[1]:

# First do our usual imports

get_ipython().run_line_magic('reload_ext', 'autoreload')

get_ipython().run_line_magic('autoreload', '2')

get_ipython().run_line_magic('matplotlib', 'inline')

from fastai.learner import *

from fastai.column_data import *

# In[2]:

# Then set up the path

path = "data/ml-latest-small/"

# In[3]:

# Take a look at the data

# We can see it contains a userId, movieId, and rating. We want to predict the rating.

ratings = pd.read_csv(path+'ratings.csv')

ratings.head()

# In[4]:

# We can also load the movie names

movie_details = pd.read_csv(path+'movies.csv')

movie_details.head()

# In[5]:

# Though not required for modelling, we create a cross tab of the top users and top movies, like we had in our Excel file

# First get the users who have given the most ratings

group = ratings.groupby('userId')['rating'].count()

topUsers = group.sort_values(ascending=False)[:15]

topUsers

# In[6]:

# Now get the movies that have received the most ratings

group = ratings.groupby('movieId')['rating'].count()

topMovies = group.sort_values(ascending=False)[:15]

topMovies

# In[7]:

# Now join them with the ratings, keeping only rows for the top users and top movies

top_ranked = ratings.join(topUsers, rsuffix='_r', how='inner', on='userId')

# In[8]:

top_ranked = top_ranked.join(topMovies, rsuffix='_r', how='inner', on='movieId')

pd.crosstab(top_ranked.userId, top_ranked.movieId, top_ranked.rating, aggfunc=np.sum)

# ### Collaborative filtering

#

# Now we will do the actual collaborative filtering. This is pretty similar to our previous processes.

# In[12]:

# First, get the cross-validation indexes: a random 20% of rows we can use for validation

val_idxs = get_cv_idxs(len(ratings))

# Weight decay. This will be covered later. Note that 2e-4 means 2 x 10^-4 (0.0002), not 2^-4.

wd = 2e-4

# This is the depth of the embedding matrix. Can be thought of as the number of latent features. (see note above)

n_factors = 50
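# A small sketch of what weight decay adds, with made-up weights: an L2 penalty on the weights. Written as 0.5 * wd * sum(w^2), its gradient is wd * w, which is the term PyTorch's `weight_decay` option adds to each parameter's gradient (writing the penalty without the 0.5 doubles the effective decay for the same wd).

```python
import numpy as np

wd = 2e-4                             # 2 x 10^-4 = 0.0002
weights = np.array([0.5, -1.0, 2.0])  # hypothetical model weights
data_loss = 0.8                       # hypothetical loss on the data

def l2_penalty(w):
    # Penalize large weights in proportion to their squared size
    return 0.5 * wd * np.sum(w ** 2)

total_loss = data_loss + l2_penalty(weights)

# Check numerically (central differences) that the penalty's gradient is wd * w
eps = 1e-6
numeric_grad = np.array([
    (l2_penalty(weights + eps * e) - l2_penalty(weights - eps * e)) / (2 * eps)
    for e in np.eye(len(weights))
])
assert np.allclose(numeric_grad, wd * weights, rtol=1e-4, atol=0)
```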

# In[13]:

# Now declare our data and learner

# We pass in the two columns and the thing we want to predict -- like we had in our Excel example earlier

collaborative_filter_data = CollabFilterDataset.from_csv(path, 'ratings.csv', 'userId', 'movieId', 'rating')

learn = collaborative_filter_data.get_learner(n_factors, val_idxs, 64, opt_fn=optim.Adam)

# In[15]:

# Do the learning

# These hyperparameters were found by trial and error, as usual

learn.fit(1e-2, 2, wds=wd, cycle_len=1, cycle_mult=2)
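# For reference, a sketch of a cosine-annealing-with-restarts (SGDR) schedule. Our reading of fast.ai 0.7 is that `learn.fit(lr, n_cycle, cycle_len=1, cycle_mult=2)` runs `n_cycle` cycles whose lengths start at `cycle_len` epochs and are multiplied by `cycle_mult` each time, so the call above trains for 1 + 2 = 3 epochs. Annealing all the way to 0 and the `iters_per_epoch` value are assumptions of this sketch; fast.ai's exact formula may differ.

```python
import math

def sgdr_schedule(max_lr, n_cycles, cycle_len, cycle_mult, iters_per_epoch):
    """Per-iteration learning rates for cosine annealing with warm restarts (SGDR)."""
    lrs = []
    epochs_in_cycle = cycle_len
    for _ in range(n_cycles):
        n_iters = epochs_in_cycle * iters_per_epoch
        for i in range(n_iters):
            # Cosine decay from max_lr towards 0 over the cycle
            lrs.append(max_lr / 2 * (1 + math.cos(math.pi * i / n_iters)))
        # Restart at max_lr, with the next cycle cycle_mult times longer
        epochs_in_cycle *= cycle_mult
    return lrs

# Mirrors learn.fit(1e-2, 2, cycle_len=1, cycle_mult=2), assuming 100 mini-batches per epoch
lrs = sgdr_schedule(1e-2, n_cycles=2, cycle_len=1, cycle_mult=2, iters_per_epoch=100)
print(len(lrs))  # 300 iterations = 3 epochs
```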

# The evaluation metric here is MSE (mean squared error): the sum of (actual value - predicted value)^2, divided by the number of samples.

# So we'll take the square root to get our RMSE.

# In[16]:

math.sqrt(0.765)
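# A worked MSE/RMSE example with made-up ratings. Taking the square root puts the error back into rating units, so an MSE of 0.765 corresponds to an RMSE of about 0.87 stars.

```python
import numpy as np

actual = np.array([4.0, 3.5, 5.0, 2.0])     # hypothetical true ratings
predicted = np.array([3.5, 3.0, 4.5, 3.0])  # hypothetical model predictions

mse = np.mean((actual - predicted) ** 2)    # (0.25 + 0.25 + 0.25 + 1.0) / 4 = 0.4375
rmse = np.sqrt(mse)                         # back in rating units (stars)
```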

# ### Movie bias

# Each movie's bias shifts all of that movie's predicted ratings up or down, so we can also think of it as a measure of how good or bad a movie is, regardless of who rates it.

# In[19]:

# First, build a lookup from movie ID to title, then take the 3000 most-rated movies and convert their IDs to the contiguous indices used by the model (via `item2idx`).

movie_names = movie_details.set_index('movieId')['title'].to_dict()

group = ratings.groupby('movieId')['rating'].count()

top_movies = group.sort_values(ascending=False).index.values[:3000]

top_movie_idx = np.array([collaborative_filter_data.item2idx[o] for o in top_movies])

# If we want to view the layers in our PyTorch model, we can just display the model object; its printed representation lists each layer.

#

# Below we have a model with two embedding layers and two bias layers: one for user biases and one for item biases (in this case, items = movies).

#

# In each layer, the first dimension is the number of items and the second is the number of features. For example, the user embedding layer has 671 users and 50 features, and the item bias layer has 9066 movies with 1 bias for each movie.

# In[20]:

model = learn.model

model.cuda()

# Here we take our top movie indices and pass them into the item bias layer to get the biases for those movies.

#

# Note: PyTorch lets you do this: pass indices to an embedding layer to get the corresponding rows.

# The indices must be converted to PyTorch `Variables` first. Recall that a variable is basically a tensor that supports automatic differentiation.

#

# We then convert the result to a NumPy array so that we can work with it on the CPU.

# In[23]:

# Take a look at the movie bias

# Input is a contiguous movie index, and output is that movie's bias (a float)

movie_bias = to_np(model.ib(V(top_movie_idx)))

# In[24]:

movie_bias

# In[33]:

movie_bias.shape
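# What the embedding and bias layers do with the indices can be sketched in NumPy (the weight matrices below are random, standing in for trained layers): a lookup just selects rows of the layer's weight matrix, which is equivalent to multiplying one-hot vectors by that matrix. A bias layer is the same thing with a single column, which is why each element of `movie_bias` is a length-1 array and we take `b[0]` when zipping.

```python
import numpy as np

rng = np.random.default_rng(0)
n_movies, n_factors = 5, 3
embedding_weight = rng.random((n_movies, n_factors))  # stand-in for a layer's weight matrix

movie_idx = np.array([3, 0, 3])  # a batch of contiguous movie indices

# An embedding lookup selects one row of the weight matrix per index...
looked_up = embedding_weight[movie_idx]

# ...which equals multiplying one-hot vectors by the weight matrix
one_hot = np.eye(n_movies)[movie_idx]
assert np.allclose(looked_up, one_hot @ embedding_weight)

# A bias layer has a single column, so each lookup returns a length-1 row
bias_weight = rng.random((n_movies, 1))
biases = bias_weight[movie_idx]  # shape (3, 1)
```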

# In[ ]:

# Zip up the movie names with their respective biases

movie_ratings = [(b[0], movie_names[i]) for i,b in zip(top_movies, movie_bias)]

# Now we can look at top and bottom rated movies, corrected for reviewer sentiment, and the different types of movies viewers watch.

# In[30]:

# Sort by the 0th element in the tuple (the bias)

sorted(movie_ratings, key=lambda o: o[0])[:15]

# In[31]:

# (Same as above)

sorted(movie_ratings, key=itemgetter(0))[:15]

# In[32]:

sorted(movie_ratings, key=lambda o: o[0], reverse=True)[:15]

# ### Interpreting embedding matrices

# In[36]:

movie_embeddings = to_np(model.i(V(top_movie_idx)))

movie_embeddings.shape

# It's hard to interpret 50 different factors. We use [Principal Component Analysis (PCA)](https://plot.ly/ipython-notebooks/principal-component-analysis/) to reduce them to 3 components.

#

# PCA reduces our data down to $n$ dimensions (here $n = 3$). It finds the 3 linear combinations of our 50 embedding dimensions that capture as much variation as possible, while keeping those 3 combinations orthogonal (uncorrelated) with each other.

# In[37]:

from sklearn.decomposition import PCA

pca = PCA(n_components=3)

movie_pca = pca.fit(movie_embeddings.T).components_

# In[38]:

movie_pca.shape
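# For comparison, a sketch of PCA done directly with NumPy's SVD, using random data as a stand-in for `movie_embeddings`. This uses the conventional orientation (movies as rows, each factor centered across movies), giving a (3000, 3) array of per-movie scores. The cells above instead fit on `movie_embeddings.T` and read `components_`, which gives a (3, 3000) array; the two approaches center the data differently, so their numbers need not match exactly.

```python
import numpy as np

def pca_scores(X, n_components):
    """Project the rows of X onto their top principal components (PCA via SVD)."""
    X_centered = X - X.mean(axis=0)  # center each column (factor) across rows (movies)
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ Vt[:n_components].T  # one row of component scores per movie

rng = np.random.default_rng(0)
fake_embeddings = rng.normal(size=(3000, 50))  # stand-in for movie_embeddings
scores = pca_scores(fake_embeddings, 3)
```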

# In[40]:

factor0 = movie_pca[0]

movie_component = [(factor, movie_names[i]) for factor,i in zip(factor0, top_movies)]

# In[42]:

# Looking at the first component, it looks like it's something like classier movies vs. more lighthearted

sorted(movie_component, key=itemgetter(0), reverse=True)[:10]

# In[43]:

sorted(movie_component, key=itemgetter(0))[:10]

# In[45]:

factor1 = movie_pca[1]

movie_component = [(factor, movie_names[i]) for factor,i in zip(factor1, top_movies)]

# In[47]:

# Looking at the second component, it looks more like CGI vs dialogue-driven

sorted(movie_component, key=itemgetter(0), reverse=True)[:10]

# In[48]:

sorted(movie_component, key=itemgetter(0))[:10]

# In[49]:

# We can map these two components against each other

idxs = np.random.choice(len(top_movies), 50, replace=False)

X = factor0[idxs]

Y = factor1[idxs]

plt.figure(figsize=(15,15))

plt.scatter(X, Y)

for i, x, y in zip(top_movies[idxs], X, Y):
    plt.text(x, y, movie_names[i], color=np.random.rand(3)*0.7, fontsize=11)

plt.show()

# ## Collaborative Filtering from scratch

#

# In this section, we'll look at implementing collaborative filtering from scratch.

# In[1]:

# Do our imports again in case we want to run from here

get_ipython().run_line_magic('reload_ext', 'autoreload')

get_ipython().run_line_magic('autoreload', '2')

get_ipython().run_line_magic('matplotlib', 'inline')

from fastai.learner import *

from fastai.column_data import *

# In[2]:

# Then set up the path

path = "data/ml-latest-small/"

# In[17]:

ratings = pd.read_csv(path+'ratings.csv')

ratings.head()

# ## PyTorch Arithmetic

# In[3]:

# Declare tensors (n-dimensional matrices)

a = T([[1., 2],
       [3, 4]])

b = T([[2., 2],
       [10, 10]])

a,b

# In[7]:

# Element-wise multiplication

a*b

# ### CUDA

# To run on the graphics card, call `.cuda()` on a tensor (or module) to move it to the GPU. Otherwise it stays on the CPU.

# In[8]:

# This is running on the GPU (both operands must be on the same device)

a.cuda()*b.cuda()

# In[9]:

# Element-wise multiplication, then sum across the columns of each row

# This gives the dot product of each row of a with the matching row of b.

# I.e., the dot product of [1,2] and [2,2] = 6, and of [3,4] and [10,10] = 70

(a*b).sum(1)
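# The same row-wise dot product in NumPy, as a quick check of the arithmetic (NumPy arrays stand in for the PyTorch tensors here); `np.einsum` spells out "multiply matching entries, then sum over each row".

```python
import numpy as np

a = np.array([[1., 2.], [3., 4.]])
b = np.array([[2., 2.], [10., 10.]])

rowwise_dot = (a * b).sum(axis=1)         # element-wise multiply, then sum each row
same_thing = np.einsum('ij,ij->i', a, b)  # explicit "dot product per row"
```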

# ## PyTorch Modules

# We can build our own neural network layer to process inputs and compute activations.

#

# In PyTorch, this is called a __module__. I.e., we are going to build a PyTorch _module_. Modules can be used as building blocks inside larger neural nets.

#

# PyTorch modules are derived from `nn.Module` (neural network module).

#

# Modules must define a method called `forward` that computes the forward activations, i.e. does the forward pass.

#

# This `forward` method is called automatically when the module instance is called like a function, i.e., `module(a,b)` will call `forward(a,b)`. (The constructor, e.g. `DotProduct()`, only creates the module.)

# In[11]:

# We can create a module that does dot products between tensors

class DotProduct(nn.Module):
    def forward(self, users, movies):
        return (users*movies).sum(1)

# In[12]:

model = DotProduct()

# In[14]:

# This will call the forward function.

model(a, b)
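# To show why `model(a, b)` ends up in `forward`, here is a simplified, hypothetical stand-in for the mechanism in plain Python: `__call__` dispatches to `forward`. The real `nn.Module.__call__` does more (e.g. running hooks), so treat this as an illustration only.

```python
class TinyModule:
    """Hypothetical, simplified stand-in for nn.Module's call mechanism."""

    def __call__(self, *args, **kwargs):
        # The real nn.Module.__call__ also runs hooks; here we only dispatch to forward
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError


class DotProductSketch(TinyModule):
    def forward(self, users, movies):
        # Row-wise dot product on plain Python lists
        return [sum(u * m for u, m in zip(urow, mrow)) for urow, mrow in zip(users, movies)]


model = DotProductSketch()  # the constructor only builds the object
result = model([[1, 2], [3, 4]], [[2, 2], [10, 10]])  # calling the instance runs forward
print(result)  # [6, 70]
```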

# ### A more complex module/fixing up index values

#

# Now, let's create a more complex module to do the work we were doing in our spreadsheet.

#

# But first, we have a slight problem: user and movie IDs are not contiguous. For example, our user IDs might jump from 1000 to 1400. If we indexed directly by ID, our tensor would need those extra 400 unused rows. So we'll remap the IDs to sequential, contiguous indices.

# In[23]:

# Get the unique user IDs

unique_users = ratings.userId.unique()

# Map each original ID to a sequential index using enumerate

user_to_index = {o:i for i,o in enumerate(unique_users)}

# Map the userIds in ratings using user_to_index

ratings.userId = ratings.userId.apply(lambda x: user_to_index[x])

# In[24]:

# Do the same for movie IDs

unique_movies = ratings.movieId.unique()

movie_to_index = {o:i for i,o in enumerate(unique_movies)}

ratings.movieId = ratings.movieId.apply(lambda x: movie_to_index[x])

# In[26]:

number_of_users = int(ratings.userId.nunique())

number_of_movies = int(ratings.movieId.nunique())

number_of_users, number_of_movies
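# A vectorized alternative to the dictionary mapping above, sketched with `np.unique(..., return_inverse=True)` on made-up IDs. One difference to be aware of: `np.unique` assigns indices in sorted ID order, whereas `enumerate(ratings.userId.unique())` assigns them in order of first appearance. Either works, as long as the same mapping is used consistently.

```python
import numpy as np

raw_ids = np.array([1000, 1400, 1000, 7, 1400])  # hypothetical, non-contiguous IDs

# unique_ids holds each distinct ID once (sorted); contiguous_idx maps every entry to 0..n-1
unique_ids, contiguous_idx = np.unique(raw_ids, return_inverse=True)

n_unique = len(unique_ids)  # number of rows the embedding matrix needs
id_to_index = {int(o): i for i, o in enumerate(unique_ids)}  # lookup for new data
```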

# ## Creating the module

#

# Now let's create our module. This will be a module that holds an embedding matrix for our users and movies.

# The `forward` pass will do a dot product on them.

#

# The module will use `nn.Embedding` to create the embedding matrices. The weights of each embedding are stored as a PyTorch __`Variable`__. Variables support all the operations that tensors do, and additionally support automatic differentiation.

#

# When we want to access the tensor part, we call `.weight.data` on the embedding (`.weight` is the variable, `.data` is its tensor).

#

# If we put `_` at the end of a PyTorch tensor function, it performs the operation in place.

#

# We initialize our embedding matrices with small random numbers. [He initialization](https://machinelearning.wtf/terms/he-initialization/) is a principled way to choose their scale (see PyTorch's `kaiming_uniform`, which can do He initialization too [link](http://pytorch.org/docs/master/_modules/torch/nn/init.html)); below we simply use a small range of 0 to 0.05.

#

# The flow of the module will be like this:

#

# 1. Look up the factors for the users from the embedding matrix

# 2. Look up the factors for the movies from the embedding matrix

# 3. Take the dot product
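# For comparison with the fixed 0 to 0.05 range used in the code below, a sketch of He (Kaiming) uniform initialization in NumPy: values are drawn from U(-b, b) with b = sqrt(6 / fan_in), which gives a variance of 2 / fan_in. Treating `n_factors` as the fan-in of an embedding is an assumption made for illustration.

```python
import math
import numpy as np

def he_uniform(fan_in, shape, rng):
    """He (Kaiming) uniform init: U(-b, b) with b = sqrt(6 / fan_in), so Var = 2 / fan_in."""
    bound = math.sqrt(6.0 / fan_in)
    return rng.uniform(-bound, bound, size=shape)

rng = np.random.default_rng(0)
n_factors = 50
bound = math.sqrt(6.0 / n_factors)  # about 0.35 for 50 factors
# Assumption for illustration: use n_factors as the fan-in of the embedding
weights = he_uniform(n_factors, (1000, n_factors), rng)
```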

# In[46]:

number_of_factors = 50

# In[59]:

class EmbeddingNet(nn.Module):
    def __init__(self, number_of_users, number_of_movies):
        super().__init__()
        # Create embedding matrices for users and movies
        self.user_embedding_matrix = nn.Embedding(number_of_users, number_of_factors)
        self.movie_embedding_matrix = nn.Embedding(number_of_movies, number_of_factors)
        # Initialize the embedding matrices
        # .weight.data gets the tensor part of the variable
        # Using _ performs the operation in place
        self.user_embedding_matrix.weight.data.uniform_(0, 0.05)
        self.movie_embedding_matrix.weight.data.uniform_(0, 0.05)

    # Forward pass
    # As with our structured data example, we can take in categorical and continuous variables
    # (but both our users and movies are categorical)
    def forward(self, categorical, continuous):
        # Get the user and movie indices
        users, movies = categorical[:, 0], categorical[:, 1]
        # Look up the factors in our embedding matrices
        user_factors, movie_factors = self.user_embedding_matrix(users), self.movie_embedding_matrix(movies)
        # Take the dot product
        return (user_factors*movie_factors).sum(1)

# In[60]:

# Now we want to set up our x and y for our crosstab

# X = everything except rating and timestamp (row/column for our cross tab)

# Y = ratings (result in our cross tab)

x = ratings.drop(['rating', 'timestamp'],axis=1)

y = ratings['rating'].astype('float32')

# In[61]:

x.head()

# In[62]:

y.head()

# In[63]:

val_idxs = get_cv_idxs(len(ratings))

# In[64]:

# Just use fast.ai to set up the dataloader

data = ColumnarModelData.from_data_frame(path, val_idxs, x, y, ['userId', 'movieId'], 64)

# In[71]:

weight_decay=1e-5

model = EmbeddingNet(number_of_users, number_of_movies).cuda()

# optim.SGD creates the optimizer

# model.parameters() returns all trainable parameters registered on the module, including those of sub-modules such as our nn.Embedding layers

opt = optim.SGD(model.parameters(), 1e-1, weight_decay=weight_decay, momentum=0.9)
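# The update `optim.SGD` performs with `momentum` and `weight_decay` can be sketched in NumPy, following the update rule in the PyTorch `torch.optim.SGD` documentation (no dampening, no Nesterov). The toy objective f(w) = (w - 3)^2 is made up for illustration.

```python
import numpy as np

def sgd_momentum_step(w, grad, velocity, lr=0.1, momentum=0.9, weight_decay=1e-5):
    """One SGD step with momentum and weight decay (no dampening, no Nesterov)."""
    g = grad + weight_decay * w         # weight decay adds wd * w to the gradient
    velocity = momentum * velocity + g  # running momentum buffer
    return w - lr * velocity, velocity

# Minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
w, v = np.array([0.0]), np.array([0.0])
for _ in range(200):
    w, v = sgd_momentum_step(w, 2 * (w - 3), v, lr=0.1, momentum=0.9, weight_decay=0.0)
print(w)  # close to 3
```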

# In[72]:

# Call fast.ai's `fit` training loop on our PyTorch model (we'll write our own later on)

fit(model, data, 3, opt, F.mse_loss)

# We can see that our loss is still quite high.

#

# We can manually do some learning rate annealing and call `fit` again.

# In[73]:

set_lrs(opt, 0.01)

# In[74]:

fit(model, data, 3, opt, F.mse_loss)

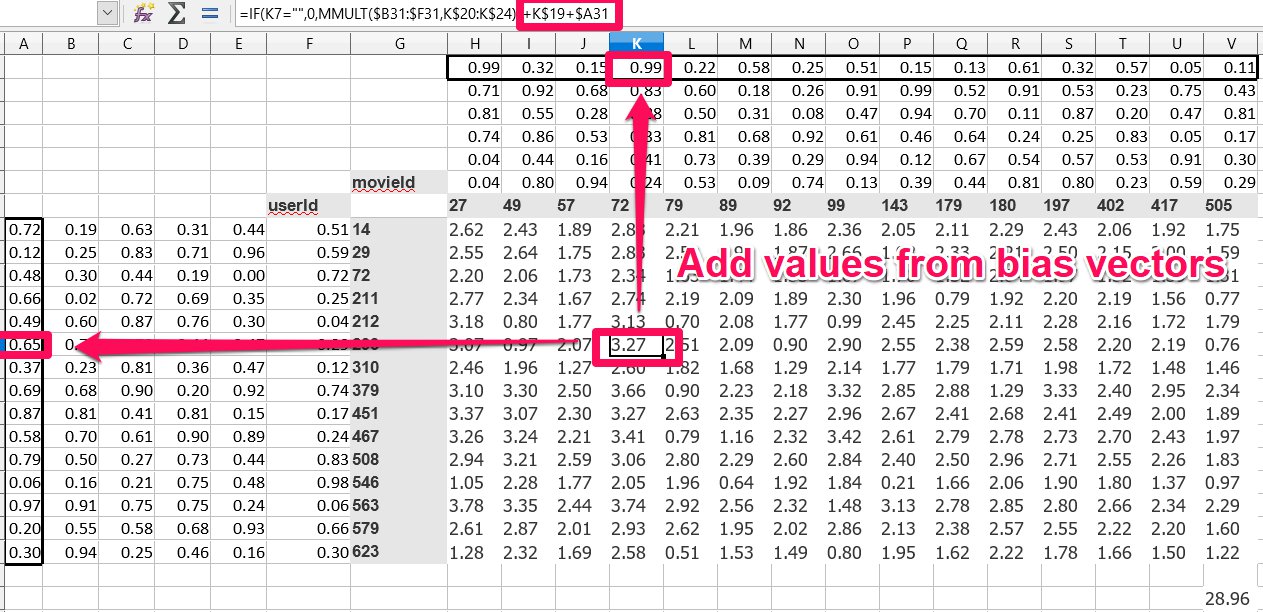

# ## Bias

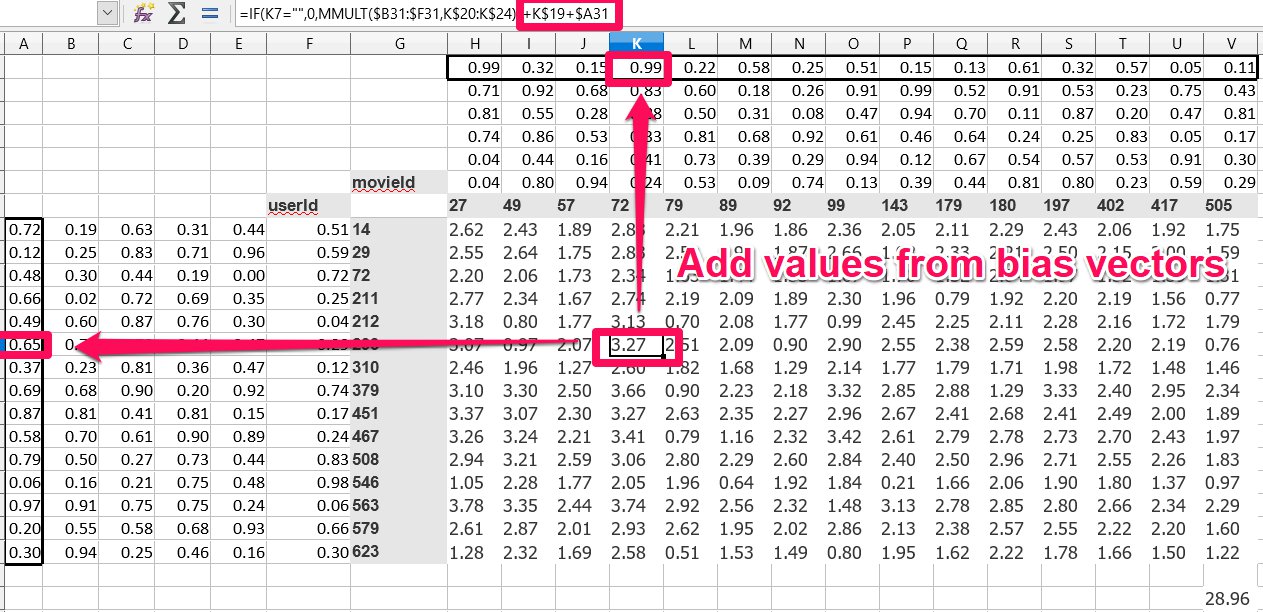

# Our loss still doesn't compete with the fast.ai library. One reason for this is lack of __bias__.

#

# Consider: one movie may tend to get particularly high ratings, or a certain user may tend to give low scores to movies. We want to account for these case-by-case variations, so we give each movie and each user a bias and add them to our dot product. In practice, this is like an extra row stuck onto our movie and user tensors.
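# A small NumPy sketch with made-up numbers: the prediction is the dot product plus the user's and the movie's bias. It also checks the "extra row" picture: appending the user bias and a constant 1 to the user's factors, and a constant 1 and the movie bias to the movie's factors, gives the same result from a plain dot product.

```python
import numpy as np

user_factors = np.array([0.5, 1.0])   # hypothetical learned factors for one user
movie_factors = np.array([2.0, 1.5])  # hypothetical learned factors for one movie
user_bias = -0.3                      # this user tends to rate low
movie_bias = 0.8                      # this movie tends to be rated high

# Prediction = dot product + user bias + movie bias
prediction = user_factors @ movie_factors + user_bias + movie_bias

# The same value from a plain dot product of vectors padded with the biases
user_aug = np.concatenate([user_factors, [user_bias, 1.0]])
movie_aug = np.concatenate([movie_factors, [1.0, movie_bias]])
assert np.isclose(user_aug @ movie_aug, prediction)
```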

#

#

#


# So now we will create a new model that takes bias into account.

#

# This will have a few other differences:

#

# 1. It uses a convenience method to create embeddings

# 2. It scales the scores returned from the forward pass into the range of ratings in the data (`min_rating` to `max_rating`)

#

# This second step is not strictly necessary, but it will make it easier to fit parameters.

#

# The sigmoid function is called from `F`, which is PyTorch's functional library.

# In[77]:

# For step 2, score normalizing

min_rating, max_rating = ratings.rating.min(), ratings.rating.max()

min_rating, max_rating
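# A NumPy sketch of the score scaling used in the forward pass: the sigmoid squashes any raw score into (0, 1), and the result is stretched to lie between the minimum and maximum rating. The 0.5 to 5.0 range passed here is an example; the model uses `min_rating` and `max_rating` from the data. The endpoints are only approached, never reached exactly.

```python
import numpy as np

def scale_to_rating_range(x, min_rating, max_rating):
    """Squash raw scores into (min_rating, max_rating) with a sigmoid."""
    return 1 / (1 + np.exp(-x)) * (max_rating - min_rating) + min_rating

raw = np.array([-10.0, 0.0, 10.0])
scaled = scale_to_rating_range(raw, 0.5, 5.0)  # example range
```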

# In[91]:

# number_of_inputs = rows in the embedding matrix
# number_of_factors = columns in the embedding matrix
def get_embedding(number_of_inputs, number_of_factors):
    embedding = nn.Embedding(number_of_inputs, number_of_factors)
    embedding.weight.data.uniform_(-0.01, 0.01)
    return embedding

class EmbeddingDotBias(nn.Module):
    def __init__(self, number_of_users, number_of_movies):
        super().__init__()
        # Initialize embedding matrices and bias vectors
        (self.user_embedding_matrix, self.movie_embedding_matrix,
         self.user_biases, self.movie_biases) = [get_embedding(*o) for o in [
            (number_of_users, number_of_factors), (number_of_movies, number_of_factors),
            (number_of_users, 1), (number_of_movies, 1)
        ]]

    def forward(self, categorical, continuous):
        users, movies = categorical[:, 0], categorical[:, 1]
        # Do our dot product
        user_dot_movies = (self.user_embedding_matrix(users)*self.movie_embedding_matrix(movies)).sum(1)
        # Add on our bias vectors
        results = user_dot_movies + self.user_biases(users).squeeze() + self.movie_biases(movies).squeeze()
        # Scale results into the rating range
        results = F.sigmoid(results) * (max_rating-min_rating) + min_rating
        return results

# In[92]:

cf = CollabFilterDataset.from_csv(path, 'ratings.csv', 'userId', 'movieId', 'rating')

weight_decay=2e-4

model = EmbeddingDotBias(cf.n_users, cf.n_items).cuda()

opt = optim.SGD(model.parameters(), 1e-1, weight_decay=weight_decay, momentum=0.9)

# In[93]:

fit(model, data, 3, opt, F.mse_loss)

# In[94]:

set_lrs(opt, 1e-2)

# In[95]:

fit(model, data, 3, opt, F.mse_loss)

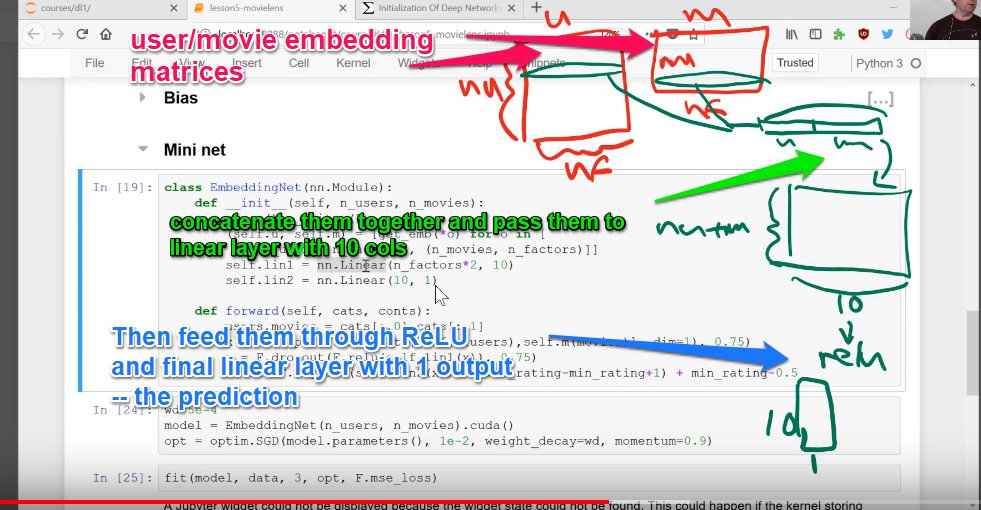

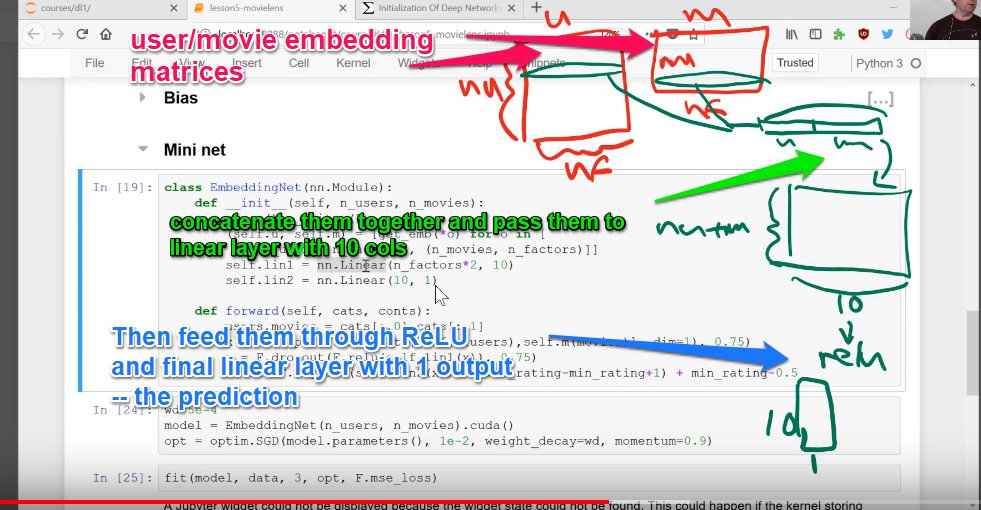

# ## Mini neural net

#

# Now, we could take our user and movie embedding values, stick them together, and feed them into a linear layer, effectively creating a neural network.

#

# To create linear layers, we will use the PyTorch `nn.Linear` class. Note, this class already has biases built into it, so there is no need for separate bias vectors.
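# Here is a NumPy sketch of the forward pass this section describes: concatenate the user and movie embeddings, apply a linear layer and a ReLU, apply a second linear layer, then apply a scaled sigmoid. Dropout is omitted, as it would be at inference time. The toy sizes, the random weights, the function name `mini_net_forward`, and the 0.5 to 5.0 rating range are all assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_users, n_movies, n_factors, n_hidden = 4, 6, 5, 10  # toy sizes, chosen arbitrarily

# Embedding matrices: one row of latent factors per user / per movie
user_emb = rng.uniform(-0.01, 0.01, (n_users, n_factors))
movie_emb = rng.uniform(-0.01, 0.01, (n_movies, n_factors))

# Two linear layers, each with its own bias vector (stored as (in, out) here; nn.Linear stores the transpose)
W1, b1 = rng.normal(0, 0.1, (2 * n_factors, n_hidden)), np.zeros(n_hidden)
W2, b2 = rng.normal(0, 0.1, (n_hidden, 1)), np.zeros(1)

def mini_net_forward(users, movies, min_rating=0.5, max_rating=5.0):
    """Dropout-free forward pass: concat embeddings -> linear -> ReLU -> linear -> scaled sigmoid."""
    x = np.concatenate([user_emb[users], movie_emb[movies]], axis=1)  # (batch, 2 * n_factors)
    h = np.maximum(0, x @ W1 + b1)                                     # (batch, n_hidden)
    out = h @ W2 + b2                                                  # (batch, 1)
    return 1 / (1 + np.exp(-out)) * (max_rating - min_rating + 1) + min_rating - 0.5

preds = mini_net_forward(np.array([0, 1, 3]), np.array([2, 5, 0]))
print(preds.shape)  # (3, 1)
```

# Because each linear layer carries its own bias vector, this design needs no separate bias embeddings.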

# In[106]:

class EmbeddingNet(nn.Module):
    def __init__(self, number_of_users, number_of_movies, number_hidden_activations=10, p1=0.05, p2=0.5):
        super().__init__()
        # Set up our embedding layers
        (self.user_embedding_matrix, self.movie_embedding_matrix) = [get_embedding(*o) for o in [
            (number_of_users, number_of_factors), (number_of_movies, number_of_factors)
        ]]
        # Set up the first linear layer. Its input size is number_of_factors*2 because
        # we concatenate each user's and each movie's embedding vectors
        self.linear_layer_1 = nn.Linear(number_of_factors*2, number_hidden_activations)
        # Set up the second linear layer, which gives the single output value
        self.linear_layer_2 = nn.Linear(number_hidden_activations, 1)
        self.dropout1 = nn.Dropout(p1)
        self.dropout2 = nn.Dropout(p2)

    def forward(self, categorical, continuous):
        users, movies = categorical[:,0], categorical[:,1]
        # First, look up the values in our embedding matrices, concatenate them along the columns (dim=1),
        # and then apply dropout
        x = self.dropout1(torch.cat([self.user_embedding_matrix(users), self.movie_embedding_matrix(movies)], dim=1))
        # Next, feed this into our first linear layer, run it through ReLU, and apply dropout
        x = self.dropout2(F.relu(self.linear_layer_1(x)))
        # Lastly, feed it into our second linear layer, then squash it with a sigmoid and scale it
        # into the range (min_rating - 0.5, max_rating + 0.5)
        return F.sigmoid(self.linear_layer_2(x)) * (max_rating-min_rating+1) + min_rating-0.5
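# One plausible reading of the `+1` and `-0.5` in the return line: a sigmoid only approaches 0 and 1. With the plain scaling, the extreme ratings could therefore never actually be predicted. Widening the output range by half a point on each side puts them at finite inputs. The plain-Python check below assumes a 0.5 to 5.0 rating range; `widened_sigmoid` is a hypothetical helper.

```python
import math

def widened_sigmoid(x, min_rating, max_rating):
    """Map x into (min_rating - 0.5, max_rating + 0.5), like EmbeddingNet's return line."""
    return 1 / (1 + math.exp(-x)) * (max_rating - min_rating + 1) + min_rating - 0.5

lo, hi = 0.5, 5.0  # assumed rating range for illustration

# The sigmoid output s needed to predict exactly hi, and the finite input x that produces it
s = (hi - lo + 0.5) / (hi - lo + 1)
x_for_max = math.log(s / (1 - s))
print(x_for_max, widened_sigmoid(x_for_max, lo, hi))
```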

# In[107]:

weight_decay=1e-5

model = EmbeddingNet(number_of_users, number_of_movies).cuda()

opt = optim.Adam(model.parameters(), 1e-3, weight_decay=weight_decay)

# ### Note on fit.

# When calling `fit`, we pass it a loss (cost) function that it uses to measure how far the model's predictions are from the targets.

#

# E.g., `F.mse_loss`.

# In[108]:

fit(model, data, 3, opt, F.mse_loss)

# In[109]:

set_lrs(opt, 1e-3)

# In[110]:

fit(model, data, 3, opt, F.mse_loss)

# In[ ]:

#

# This whole process could be thought of as _collaborative filtering using matrix decomposition_. (We're breaking our matrix into 2 different matrices and using it to make some predictions).

#

# The matrix above the movies and the matrix to the left of the users are embedding matrices for those things.

#

# So, in summary, the process for this shallow learning is:

#

# 1. Initialize the user and movie embedding matrices with random values; their dot products fill in the predicted user/movie scores matrix

# 2. Set up your cost function

# 3. Minimize your cost function using gradient descent, thus setting more accurate embedding matrix values
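# The three steps above can be sketched in plain Python on a made-up 3x3 ratings table. This is only an illustration under simplifying assumptions: no biases, no weight decay, and a hand-written SGD loop instead of PyTorch's optimizers. It is not the notebook's code, and the helper names are hypothetical.

```python
import random

def observed_mse(ratings, U, M):
    """Mean squared error of dot-product predictions over the observed (user, movie) pairs only."""
    errs = [(r - sum(u * m for u, m in zip(U[i], M[j]))) ** 2 for (i, j), r in ratings.items()]
    return sum(errs) / len(errs)

def factorize(ratings, n_users, n_movies, n_factors=2, lr=0.05, epochs=200, seed=0):
    rnd = random.Random(seed)
    # 1. Randomly initialize the user and movie embedding matrices
    U = [[rnd.uniform(0, 0.5) for _ in range(n_factors)] for _ in range(n_users)]
    M = [[rnd.uniform(0, 0.5) for _ in range(n_factors)] for _ in range(n_movies)]
    # 2. The cost function: MSE on the observed ratings
    start = observed_mse(ratings, U, M)
    # 3. Minimize it with stochastic gradient descent, one observed rating at a time
    for _ in range(epochs):
        for (i, j), r in ratings.items():
            err = r - sum(u * m for u, m in zip(U[i], M[j]))
            for k in range(n_factors):
                u, m = U[i][k], M[j][k]
                # The gradient of err**2 w.r.t. U[i][k] is -2 * err * m (the 2 is folded into lr)
                U[i][k] += lr * err * m
                M[j][k] += lr * err * u
    return U, M, start, observed_mse(ratings, U, M)

# Made-up toy data: (user index, movie index) -> rating; unobserved pairs are simply absent
toy_ratings = {(0, 0): 5, (0, 1): 3, (1, 0): 4, (1, 2): 1, (2, 1): 2, (2, 2): 5}
U, M, before, after = factorize(toy_ratings, n_users=3, n_movies=3)
print(round(before, 3), round(after, 3))
```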

# ## Collaborative filtering using fast.ai

# In[1]:

# First do our usual imports

get_ipython().run_line_magic('reload_ext', 'autoreload')

get_ipython().run_line_magic('autoreload', '2')

get_ipython().run_line_magic('matplotlib', 'inline')

from fastai.learner import *

from fastai.column_data import *

# In[2]:

# Then set up the path

path = "data/ml-latest-small/"

# In[3]:

# Take a look at the data

# We can see it contains a userId, movieId, and rating. We want to predict the rating.

ratings = pd.read_csv(path+'ratings.csv')

ratings.head()

# In[4]:

# We can also load the movie names

movie_details = pd.read_csv(path+'movies.csv')

movie_details.head()

# In[5]:

# Though not required for modelling, we create a cross tab of the top users and top movies, like we had in our Excel file

# First get the users who have given the most ratings

group = ratings.groupby('userId')['rating'].count()

topUsers = group.sort_values(ascending=False)[:15]

topUsers

# In[6]:

# Now get the movies that have received the most ratings

group = ratings.groupby('movieId')['rating'].count()

topMovies = group.sort_values(ascending=False)[:15]

topMovies

# In[7]:

# Now join them together

top_ranked = ratings.join(topUsers, rsuffix='_r', how='inner', on='userId')

# In[8]:

top_ranked = top_ranked.join(topMovies, rsuffix='_r', how='inner', on='movieId')

pd.crosstab(top_ranked.userId, top_ranked.movieId, top_ranked.rating, aggfunc=np.sum)

# ### Collaborative filtering

#

# Now we will do the actual collaborative filtering. This is pretty similar to our previous processes.

# In[12]:

# First, get the validation indexes: a random 20% of rows we can hold out for validation

val_idxs = get_cv_idxs(len(ratings))

# Weight decay (covered in more detail later). Note that 2e-4 means 2 × 10^-4 (0.0002), not 2^-4

wd = 2e-4

# This is the width (number of columns) of each embedding matrix. It can be thought of as the number of latent features (see note above).

n_factors = 50
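# Because 2e-4 is easy to misread, here is a plain-Python illustration of what weight decay does in one plain SGD step. This mirrors how `torch.optim.SGD` applies its `weight_decay` argument when momentum is off: `weight_decay * w` is added to each gradient. The learner in this section uses Adam through fast.ai, whose handling of `wds` may differ, so treat this as an illustration of the idea only. `sgd_step` is a hypothetical helper.

```python
def sgd_step(weights, grads, lr, weight_decay=0.0):
    """One plain SGD step with L2 weight decay: weight_decay * w is added to each gradient."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]

example_weights = [1.0, -2.0]
zero_grads = [0.0, 0.0]
# Even with a zero gradient, weight decay nudges every weight toward 0
decayed = sgd_step(example_weights, zero_grads, lr=0.1, weight_decay=2e-4)
print(decayed)
```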

# In[13]:

# Now declare our data and learner

# We pass in the two columns and the thing we want to predict -- like we had in our Excel example earlier

collaborative_filter_data = CollabFilterDataset.from_csv(path, 'ratings.csv', 'userId', 'movieId', 'rating')

learn = collaborative_filter_data.get_learner(n_factors, val_idxs, 64, opt_fn=optim.Adam)

# In[15]:

# Do the learning

# These params were figured out using trials, like usual

learn.fit(1e-2, 2, wds=wd, cycle_len=1, cycle_mult=2)

# The evaluation metric here is MSE, the mean squared error: the sum of (actual value - predicted value)^2, divided by the number of samples.

# So we take the square root to get the RMSE (root mean squared error).

# In[16]:

math.sqrt(0.765)
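# To show how MSE and RMSE relate, here is a small plain-Python worked example. The ratings and predictions are made up; `mse_of` is a hypothetical helper.

```python
import math

def mse_of(actual, predicted):
    """Mean squared error: the average of (actual - predicted)^2."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

actual_ratings = [4.0, 3.5, 5.0, 2.0]     # made-up ratings
predicted_ratings = [3.5, 3.5, 4.0, 3.0]  # made-up predictions
example_mse = mse_of(actual_ratings, predicted_ratings)  # (0.25 + 0 + 1 + 1) / 4 = 0.5625
example_rmse = math.sqrt(example_mse)                    # 0.75, in the same units as the ratings
print(example_mse, example_rmse)
```

# Taking the square root puts the error back into rating units, which makes it easier to interpret than MSE.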

# ### Movie bias

# Our bias affects the movie rating, so we can also think of it as a measure of how good/bad movies are.

# In[19]:

# First, build a movieId-to-title lookup and find the 3000 most-rated movies, then convert their IDs to the contiguous indexes the model uses, like we did for our model.

movie_names = movie_details.set_index('movieId')['title'].to_dict()

group = ratings.groupby('movieId')['rating'].count()

top_movies = group.sort_values(ascending=False).index.values[:3000]

top_movie_idx = np.array([collaborative_filter_data.item2idx[o] for o in top_movies])

# If we want to view the layers in our PyTorch model, we can just display it (evaluate `model` in a cell).

#

# So below we have a model with two embedding layers and two bias layers: one for user biases, and one for item biases (in this case, items = movies).

#

# You can see the 0th element is the number of items, and the 1st element is the number of features. For example, in our user embedding layer, we have 671 users and 50 features, in our item bias layer we have 9066 movies and 1 bias for each movie, etc.

# In[20]:

model = learn.model

model.cuda()

# Here we take our top movie IDs and pass them into the item bias layer to get the biases for the movie.

#

# Note: PyTorch lets you do this -- pass in indices to a layer to get the corresponding values.

# The indices must be converted to PyTorch `Variable`s first. Recall that a variable is basically a tensor that supports automatic differentiation.

#

# We then convert the resulting data to a NumPy array so that work can be done on the CPU.

# In[23]:

# Take a look at the movie bias

# Input is a movie id, and output is the movie bias (a float)

movie_bias = to_np(model.ib(V(top_movie_idx)))

# In[24]:

movie_bias

# In[33]:

movie_bias.shape

# In[ ]:

# Zip up the movie names with their respective biases

movie_ratings = [(b[0], movie_names[i]) for i,b in zip(top_movies, movie_bias)]

# Now we can look at top and bottom rated movies, corrected for reviewer sentiment, and the different types of movies viewers watch.

# In[30]:

# Sort by the 0th element in the tuple (the bias)

sorted(movie_ratings, key=lambda o: o[0])[:15]

# In[31]:

# Same as above, using `operator.itemgetter` instead of a lambda

from operator import itemgetter

sorted(movie_ratings, key=itemgetter(0))[:15]

# In[32]:

sorted(movie_ratings, key=lambda o: o[0], reverse=True)[:15]
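# The sorting cells above use `key=lambda o: o[0]` and `key=itemgetter(0)` interchangeably. Here is a small self-contained check on made-up `(bias, title)` pairs shaped like `movie_ratings`.

```python
from operator import itemgetter

# Made-up (bias, title) pairs in the same shape as movie_ratings
pairs = [(0.12, "Movie A"), (-0.40, "Movie B"), (0.85, "Movie C")]

lowest_first = sorted(pairs, key=itemgetter(0))
same_as_lambda = lowest_first == sorted(pairs, key=lambda o: o[0])
highest_first = sorted(pairs, key=itemgetter(0), reverse=True)
print([title for _, title in lowest_first], same_as_lambda)
```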

# ### Interpreting embedding matrices

# In[36]:

movie_embeddings = to_np(model.i(V(top_movie_idx)))

movie_embeddings.shape

# It's hard to interpret 50 different factors. We use [Principal Component Analysis (PCA)](https://plot.ly/ipython-notebooks/principal-component-analysis/) to simplify them down to 3 vectors.

#

# PCA essentially says: reduce our dimensionality down to $n$ (here $n = 3$). It finds 3 linear combinations of our 50 embedding dimensions which capture as much variation as possible, while keeping those 3 linear combinations uncorrelated with (orthogonal to) each other.

# In[37]:

from sklearn.decomposition import PCA

pca = PCA(n_components=3)

movie_pca = pca.fit(movie_embeddings.T).components_

# In[38]:

movie_pca.shape
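# Fitting PCA on `movie_embeddings.T` treats the 50 factors as samples and the movies as features. Each row of `components_` therefore holds one score per movie. Below is a NumPy sketch of the same computation (center, then SVD) on random stand-in data. It should match sklearn's `components_` up to the sign of each row, with possibly small numerical differences if sklearn picks its randomized solver. `pca_components` is a hypothetical helper.

```python
import numpy as np

def pca_components(X, n_components):
    """Principal axes of X (rows = samples, columns = features) via SVD of the centered data.

    Returns an (n_components, n_features) array with orthonormal rows, laid out like
    sklearn's PCA.components_ (each axis is only defined up to sign).
    """
    Xc = X - X.mean(axis=0)                       # center each feature
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Vt[:n_components]

rng = np.random.default_rng(0)
fake_embeddings = rng.normal(size=(200, 50))      # stand-in for (n_movies, n_factors)
comps = pca_components(fake_embeddings.T, 3)      # transposed, as in the notebook cell
print(comps.shape)  # (3, 200): one value per movie for each component
```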

# In[40]:

factor0 = movie_pca[0]

movie_component = [(factor, movie_names[i]) for factor,i in zip(factor0, top_movies)]

# In[42]:

# Looking at the first component, it seems to separate "classier" movies from more lighthearted ones

sorted(movie_component, key=itemgetter(0), reverse=True)[:10]

# In[43]:

sorted(movie_component, key=itemgetter(0))[:10]

# In[45]:

factor1 = movie_pca[1]

movie_component = [(factor, movie_names[i]) for factor,i in zip(factor1, top_movies)]

# In[47]:

# Looking at the second component, it looks more like CGI vs dialogue-driven

sorted(movie_component, key=itemgetter(0), reverse=True)[:10]

# In[48]:

sorted(movie_component, key=itemgetter(0))[:10]

# In[49]:

# We can map these two components against each other

idxs = np.random.choice(len(top_movies), 50, replace=False)

X = factor0[idxs]

Y = factor1[idxs]

plt.figure(figsize=(15,15))

plt.scatter(X, Y)

for i, x, y in zip(top_movies[idxs], X, Y):
    plt.text(x, y, movie_names[i], color=np.random.rand(3)*0.7, fontsize=11)

plt.show()

# ## Collaborative Filtering from scratch

#

# In this section, we'll look at implementing collaborative filtering from scratch.

# In[1]:

# Do our imports again in case we want to run from here

get_ipython().run_line_magic('reload_ext', 'autoreload')

get_ipython().run_line_magic('autoreload', '2')

get_ipython().run_line_magic('matplotlib', 'inline')

from fastai.learner import *

from fastai.column_data import *

# In[2]:

# Then set up the path

path = "data/ml-latest-small/"

# In[17]:

ratings = pd.read_csv(path+'ratings.csv')

ratings.head()

# ## PyTorch Arithmetic

# In[3]:

# Declare tensors (n-dimensional matrices)

a = T([[1.,2],

[3,4]])

b = T([[2.,2],

[10,10]])

a,b

# In[7]:

# Element-wise multiplication

a*b

# ### CUDA

# To run on the graphics card, call `.cuda()` on a tensor (or module) to move it to the GPU. Otherwise it stays on the CPU.

# In[8]:

# This is running on the GPU. Both operands must be on the same device, so we move both of them.

a.cuda()*b.cuda()

# In[9]:

# Element-wise multiplication, then sum along dimension 1 (across the columns of each row)

# This gives the row-wise dot product.

# I.e., the dot product of [1,2] and [2,2] is 6, and the dot product of [3,4] and [10,10] is 70

(a*b).sum(1)

# ## PyTorch Modules

# We can build our own neural network layer to process inputs and compute activations.

#

# In PyTorch, we call this a __module__. I.e., we are going to build a PyTorch _module_. Modules can be composed into larger neural nets.

#

# PyTorch modules are derived from `nn.Module` (neural network module).

#

# Modules must contain a function called `forward` that will compute the forward activations -- do the forward pass.

#

# This `forward` function is called automatically when we call the module instance like a function, i.e., `module(a,b)` will call `forward(a,b)`.
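# Here is a plain-Python sketch of how calling an instance ends up in `forward`. `TinyModule` is a hypothetical stand-in, not PyTorch's code. The real `nn.Module.__call__` does extra work (such as running hooks) around `forward`; this keeps only the dispatch.

```python
class TinyModule:
    """Hypothetical stand-in for nn.Module: calling an instance dispatches to forward()."""
    def __call__(self, *args):
        return self.forward(*args)

class RowDot(TinyModule):
    def forward(self, users, movies):
        # Row-wise dot product of two lists of equal-length vectors
        return [sum(u * m for u, m in zip(ur, mr)) for ur, mr in zip(users, movies)]

row_dot = RowDot()
result = row_dot([[1., 2.], [3., 4.]], [[2., 2.], [10., 10.]])
print(result)  # [6.0, 70.0]
```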

# In[11]:

# We can create a module that does dot products between tensors

class DotProduct(nn.Module):
    def forward(self, users, movies):
        return (users*movies).sum(1)

# In[12]:

model = DotProduct()

# In[14]:

# This will call the forward function.

model(a, b)

# ### A more complex module/fixing up index values

#

# Now, let's create a more complex module to do the work we were doing in our spreadsheet.

#

# But first, we have a slight problem: user and movie IDs are not contiguous. For example, our user IDs might jump from 1000 to 1400. This means that if we want to index directly by ID, we would need those extra 400 unused rows in our tensor. So we'll remap the IDs to a sequential, contiguous series of indexes.

# In[23]:

# Get the unique user IDs

unique_users = ratings.userId.unique()

# Map each original ID to a sequential index using enumerate

user_to_index = {o:i for i,o in enumerate(unique_users)}

# Map the userIds in ratings using user_to_index

ratings.userId = ratings.userId.apply(lambda x: user_to_index[x])

# In[24]:

# Do the same for movie IDs

unique_movies = ratings.movieId.unique()

movie_to_index = {o:i for i,o in enumerate(unique_movies)}

ratings.movieId = ratings.movieId.apply(lambda x: movie_to_index[x])

# In[26]:

number_of_users = int(ratings.userId.nunique())

number_of_movies = int(ratings.movieId.nunique())

number_of_users, number_of_movies

# ## Creating the module

#

# Now let's create our module. This will be a module that holds an embedding matrix for our users and movies.

# The `forward` pass will do a dot product on them.

#

# The module will use `nn.Embedding` to create the embedding matrices. Their weights are PyTorch __`variables`__. Variables support all the operations that tensors do, and additionally support automatic differentiation.

#

# When we want to access the tensor part of an embedding's weights, we call `.weight.data` on the embedding layer.

#

# If we put `_` at the end of a PyTorch tensor function, it performs the operation in place.

#

# We initialize our embedding matrices with small random numbers (uniform between 0 and 0.05 in the code below). For a more principled way to choose this scale, see [He initialization](https://machinelearning.wtf/terms/he-initialization/). (PyTorch's `kaiming_uniform` can do He initialization too: [link](http://pytorch.org/docs/master/_modules/torch/nn/init.html).)

#

# The flow of the module will be like this:

#

# 1. Look up the factors for the users from the embedding matrix

# 2. Look up the factors for the movies from the embedding matrix

# 3. Take the dot product
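# An embedding lookup simply selects rows of the weight matrix. That gives the same result as multiplying a one-hot matrix by the weights, without building the one-hot matrix. It is also why the IDs must be contiguous indexes from 0 to n-1. The NumPy sketch below uses toy sizes; the uniform(0, 0.05) range mirrors the initialization used in this section.

```python
import numpy as np

rng = np.random.default_rng(0)
n_users, n_factors = 5, 3                            # toy sizes
weight = rng.uniform(0, 0.05, (n_users, n_factors))  # like embedding.weight.data.uniform_(0, 0.05)

user_ids = np.array([4, 0, 4])               # a mini-batch of contiguous user indexes
lookup = weight[user_ids]                    # what an embedding layer returns: rows of the matrix
one_hot = np.eye(n_users)[user_ids]          # (batch, n_users) one-hot rows
same = np.allclose(lookup, one_hot @ weight) # same values, but the lookup skips the big multiply
print(lookup.shape, same)
```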

# In[46]:

number_of_factors = 50

# In[59]:

class EmbeddingNet(nn.Module):
    def __init__(self, number_of_users, number_of_movies):
        super().__init__()
        # Create embedding matrices for users and movies
        self.user_embedding_matrix = nn.Embedding(number_of_users, number_of_factors)
        self.movie_embedding_matrix = nn.Embedding(number_of_movies, number_of_factors)
        # Initialize the embedding matrices
        # .weight.data gets the tensor part of the variable
        # Using _ performs the operation in place
        self.user_embedding_matrix.weight.data.uniform_(0,0.05)
        self.movie_embedding_matrix.weight.data.uniform_(0,0.05)

    # Forward pass
    # As with our structured data example, we take in categorical and continuous variables
    # (but both our users and movies are categorical)
    def forward(self, categorical, continuous):
        # Split the categorical input into its user and movie index columns
        users,movies = categorical[:,0],categorical[:,1]
        # Get the factors from our embedding matrices
        user_factors,movie_factors = self.user_embedding_matrix(users), self.movie_embedding_matrix(movies)
        # Take the dot product
        return (user_factors*movie_factors).sum(1)

# In[60]:

# Now we want to set up our x and y for our crosstab

# X = everything except rating and timestamp (row/column for our cross tab)

# Y = ratings (result in our cross tab)

x = ratings.drop(['rating', 'timestamp'],axis=1)

y = ratings['rating'].astype('float32')

# In[61]:

x.head()

# In[62]:

y.head()

# In[63]:

val_idxs = get_cv_idxs(len(ratings))

# In[64]:

# Just use fast.ai to set up the dataloader

data = ColumnarModelData.from_data_frame(path, val_idxs, x, y, ['userId', 'movieId'], 64)

# In[71]:

weight_decay=1e-5

model = EmbeddingNet(number_of_users, number_of_movies).cuda()

# optim creates the optimization function

# model.parameters() returns all the learnable parameters registered on the module, including those of its submodules (e.g. the weights of each nn.Embedding)

opt = optim.SGD(model.parameters(), 1e-1, weight_decay=weight_decay, momentum=0.9)

# In[72]:

# Call fast.ai's `fit` training loop (we'll write our own later on)

fit(model, data, 3, opt, F.mse_loss)

# We can see that our loss is still quite high.

#

# We can manually do some learning rate annealing and call `fit` again.

# In[73]:

set_lrs(opt, 0.01)

# In[74]:

fit(model, data, 3, opt, F.mse_loss)

# ## Collbarative Filtering from scratch

#

# In this section, we'll look at implementing collaborative filtering from scratch.

# In[1]:

# Do our imports again in case we want to run from here

get_ipython().run_line_magic('reload_ext', 'autoreload')

get_ipython().run_line_magic('autoreload', '2')

get_ipython().run_line_magic('matplotlib', 'inline')

from fastai.learner import *

from fastai.column_data import *

# In[2]:

# Then set up the path

path = "data/ml-latest-small/"

# In[17]:

ratings = pd.read_csv(path+'ratings.csv')

ratings.head()

# ## PyTorch Arithmetic

# In[3]:

# Declare tensors (n-dimensonal matrices)

a = T([[1.,2],

[3,4]])

b = T([[2.,2],

[10,10]])

a,b

# In[7]:

# Element-wise multiplication

a*b

# ### CUDA

# To run on the graphics card, add `.cuda()` to the end of PyTorch calls. Otherwise they will run on the CPU.

# In[8]:

# This is running on the GPU

a*b.cuda()

# In[9]:

# Element-wise multiplication and sum across the columns

# This is the tensor dot product.

# I.e., the dot product of [1,2] and [2,2] = 6, and [3,4]*[10,10] = 70

(a*b).sum(1)

# ## PyTorch Modules

# We can build our own neural network layer to process inputs and compute activations.

#

# In PyTorch, we call this a __module__. I.e., we are going to build a PyTorch _module_. Modules can be passed in to neural nets.

#

# PyTorch modules are derived from `nn.Module` (neural network module).

#

# Modules must contain a function called `forward` that will compute the forward activations -- do the forward pass.

#

# This `forward` function is called automatically when the module is called with its constructor, i.e., `module(a,b)` will call `forward(a,b)`.

# In[11]:

# We can create a module that does dot products between tensors

class DotProduct(nn.Module):

def forward(self, users, movies):

return (users*movies).sum(1)

# In[12]:

model = DotProduct()

# In[14]:

# This will call the forward function.

model(a, b)

# ### A more complex module/fixing up index values

#

# Now, let's create a more complex module to do the work we were doing in our spreadsheet.

#

# But first, we have a slightly problem: user and movie IDs are not contiguous. For example, our user ID might jump from 1000 to 1400. This means that if we want to do direct indexing via the ID, we would need to have those extra 400 rows in our tensor. So we'll do some data fixing to map a series of sequential, contiguous IDs.

# In[23]:

# Get the unique user IDs

unique_users = ratings.userId.unique()

# Get a list of sequential IDs using enumerate

user_to_index = {o:i for i,o in enumerate(unique_users)}

# Map the userIds in ratings using user_to_index

ratings.userId = ratings.userId.apply(lambda x: user_to_index[x])

# In[24]:

# Do the same for movie IDs

unique_movies = ratings.movieId.unique()

movie_to_index = {o:i for i,o in enumerate(unique_movies)}

ratings.movieId = ratings.movieId.apply(lambda x: movie_to_index[x])

# In[26]:

number_of_users = int(ratings.userId.nunique())

number_of_movies = int(ratings.movieId.nunique())

number_of_users, number_of_movies

# ## Creating the module

#

# Now let's create our module. This will be a module that holds an embedding matrix for our users and movies.

# The `forward` pass will do a dot product on them.

#

# The module will use `nn.Embedding` to create the embedding matrices. Their weights are PyTorch __`Variable`s__. Variables support all the operations that tensors do, and they also support automatic differentiation.
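# To make the autograd point concrete, here is a small sketch. It assumes PyTorch 0.4 or later, where `Variable` has been merged into `Tensor` and an embedding's `.weight` is an `nn.Parameter`. An `nn.Embedding` lookup returns the same rows as indexing its weight matrix, and backpropagating through it only sends gradient to the rows that were looked up.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
emb = nn.Embedding(5, 3)           # 5 rows (e.g. users), 3 factors each

idx = torch.tensor([1, 3])
looked_up = emb(idx)               # same values as emb.weight[idx]
assert torch.equal(looked_up, emb.weight[idx])

# The weight is a trainable parameter, so autograd tracks it
print(emb.weight.requires_grad)    # True

looked_up.sum().backward()
# Rows 1 and 3 receive a gradient of ones; the other rows stay at zero
print(emb.weight.grad)
```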

#

# When we want to access the tensor part of an embedding's weights, we use `.weight.data`: `.weight` is the variable holding the embedding matrix, and `.data` is the tensor inside it.

#

# If we put `_` at the end of a PyTorch tensor function, it performs the operation in place.

#

# We initialize our embedding matrices to small random numbers. A principled way to choose their range is [He initialization](https://machinelearning.wtf/terms/he-initialization/). (PyTorch's `kaiming_uniform` can do He initialization too: [link](http://pytorch.org/docs/master/_modules/torch/nn/init.html).) Here we simply draw uniformly from 0 to 0.05.
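# A sketch of in-place initialization, assuming PyTorch 0.4 or later, where the He initializer is spelled `nn.init.kaiming_uniform_` (the trailing underscore again marks an in-place operation). The 100 x 50 size is arbitrary.

```python
import torch
import torch.nn as nn

emb = nn.Embedding(100, 50)

# Trailing underscore: the weight tensor is overwritten in place
emb.weight.data.uniform_(0, 0.05)
print(emb.weight.min().item() >= 0, emb.weight.max().item() <= 0.05)  # True True

# Alternative: He (Kaiming) uniform initialization from torch.nn.init.
# With fan_in = 50 and the ReLU gain sqrt(2), values lie within +/- sqrt(6 / 50).
nn.init.kaiming_uniform_(emb.weight, nonlinearity='relu')
```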

#

# The flow of the module will be like this:

#

# 1. Look up the factors for the users from the embedding matrix

# 2. Look up the factors for the movies from the embedding matrix

# 3. Take the dot product
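# The three steps can be sketched with plain PyTorch on a tiny made-up batch (the sizes and indices below are hypothetical):

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
n_users, n_movies, n_factors = 4, 6, 3   # hypothetical sizes
user_emb = nn.Embedding(n_users, n_factors)
movie_emb = nn.Embedding(n_movies, n_factors)

# A batch of (user index, movie index) pairs, like the categorical columns
batch = torch.tensor([[0, 5], [2, 1], [3, 3]])
users, movies = batch[:, 0], batch[:, 1]

u = user_emb(users)      # step 1: user factors, shape (batch, n_factors)
m = movie_emb(movies)    # step 2: movie factors, shape (batch, n_factors)
preds = (u * m).sum(1)   # step 3: one dot product per pair, shape (batch,)
print(preds.shape)       # torch.Size([3])
```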

# In[46]:

number_of_factors = 50

# In[59]:

class EmbeddingNet(nn.Module):
    def __init__(self, number_of_users, number_of_movies):
        super().__init__()
        # Create embedding matrices for users and movies
        self.user_embedding_matrix = nn.Embedding(number_of_users, number_of_factors)
        self.movie_embedding_matrix = nn.Embedding(number_of_movies, number_of_factors)
        # Initialize the embedding matrices
        # .weight.data gets the tensor part of the variable
        # Using _ performs the operation in place
        self.user_embedding_matrix.weight.data.uniform_(0,0.05)
        self.movie_embedding_matrix.weight.data.uniform_(0,0.05)

    # Forward pass
    # As with our structured data example, we can take in categorical and continuous variables
    # (but both our users and movies are categorical, so `continuous` is unused)
    def forward(self, categorical, continuous):
        # Get the user and movie indices (columns 0 and 1)
        users,movies = categorical[:,0],categorical[:,1]
        # Look up the factors in our embedding matrices
        user_factors,movie_factors = self.user_embedding_matrix(users), self.movie_embedding_matrix(movies)
        # Take the dot product of each user/movie pair
        return (user_factors*movie_factors).sum(1)

# In[60]:

# Now we want to set up our x and y for our crosstab

# X = everything except rating and timestamp (row/column for our cross tab)

# Y = ratings (result in our cross tab)

x = ratings.drop(['rating', 'timestamp'],axis=1)

y = ratings['rating'].astype('float32')

# In[61]:

x.head()

# In[62]:

y.head()

# In[63]:

val_idxs = get_cv_idxs(len(ratings))

# In[64]:

# Just use fast.ai to set up the dataloader

data = ColumnarModelData.from_data_frame(path, val_idxs, x, y, ['userId', 'movieId'], 64)

# In[71]:

weight_decay=1e-5

model = EmbeddingNet(number_of_users, number_of_movies).cuda()

# optim creates the optimization function

# model.parameters() yields every nn.Parameter registered on the module and its submodules (here, the weights of the two nn.Embedding layers)

opt = optim.SGD(model.parameters(), 1e-1, weight_decay=weight_decay, momentum=0.9)

# In[72]:

# Call fast.ai's `fit` training loop (we'll write our own later on)

fit(model, data, 3, opt, F.mse_loss)
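# Since `fit` hides the training loop, here is a rough sketch of what a minimal loop could look like in plain PyTorch. It is not fast.ai's implementation: `SimpleEmbeddingNet`, `train`, and the synthetic data are all hypothetical, and the model drops the unused `continuous` argument.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

torch.manual_seed(0)

class SimpleEmbeddingNet(nn.Module):
    """Simplified EmbeddingNet: takes only the categorical (user, movie) tensor."""
    def __init__(self, n_users, n_movies, n_factors=5):
        super().__init__()
        self.user_emb = nn.Embedding(n_users, n_factors)
        self.movie_emb = nn.Embedding(n_movies, n_factors)
        self.user_emb.weight.data.uniform_(0, 0.05)
        self.movie_emb.weight.data.uniform_(0, 0.05)

    def forward(self, categorical):
        users, movies = categorical[:, 0], categorical[:, 1]
        return (self.user_emb(users) * self.movie_emb(movies)).sum(1)

def train(model, opt, x, y, epochs=3, bs=64):
    """Hypothetical minimal loop: shuffle, mini-batch, MSE loss, SGD step."""
    losses = []
    for epoch in range(epochs):
        perm = torch.randperm(len(y))
        for i in range(0, len(y), bs):
            idx = perm[i:i + bs]
            loss = F.mse_loss(model(x[idx]), y[idx])
            opt.zero_grad()   # clear gradients left over from the last step
            loss.backward()   # compute gradients of the loss w.r.t. the embeddings
            opt.step()        # apply the SGD (momentum + weight decay) update
        with torch.no_grad():
            losses.append(F.mse_loss(model(x), y).item())
        print(f'epoch {epoch}: train MSE {losses[-1]:.3f}')
    return losses

# Synthetic data (made up for illustration): 200 (user, movie) pairs, ratings 1-5
x_train = torch.stack([torch.randint(0, 20, (200,)), torch.randint(0, 30, (200,))], dim=1)
y_train = torch.randint(1, 6, (200,)).float()

model = SimpleEmbeddingNet(20, 30)
opt = optim.SGD(model.parameters(), lr=1e-1, weight_decay=1e-5, momentum=0.9)
losses = train(model, opt, x_train, y_train)
```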

# We can see that our loss is still quite high.

#

# We can manually do some learning rate annealing and call `fit` again.

# In[73]:

set_lrs(opt, 0.01)
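# In plain PyTorch, changing the learning rate means editing the `'lr'` entry of each of the optimizer's `param_groups`. The helper `set_lr` below is a hypothetical sketch, not fast.ai's `set_lrs` source:

```python
import torch.nn as nn
import torch.optim as optim

model = nn.Embedding(10, 3)
opt = optim.SGD(model.parameters(), lr=1e-1, momentum=0.9)

def set_lr(optimizer, lr):
    # Every parameter group carries its own learning rate
    for group in optimizer.param_groups:
        group['lr'] = lr

set_lr(opt, 0.01)
print([g['lr'] for g in opt.param_groups])  # [0.01]
```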

# In[74]:

fit(model, data, 3, opt, F.mse_loss)

# ## Bias

# Our loss still doesn't compete with the fast.ai library. One reason for this is lack of __bias__.

#

# For example, one movie might tend to get particularly high ratings, or a certain user might tend to give low scores to every movie. We want to account for these per-movie and per-user tendencies, so we give each movie and each user a single bias value and add both to our dot product. In practice, this is like an extra row/column stuck on to our movie and user factor matrices (one more value per movie and per user).
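# A worked example with made-up numbers: the prediction is the dot product plus the user bias plus the movie bias. Appending the bias and a constant 1 to each factor vector shows why the bias behaves like one extra factor.

```python
import torch

# Hypothetical factors for one user and one movie (3 latent factors)
user_factors = torch.tensor([0.5, 1.0, -0.5])
movie_factors = torch.tensor([1.0, 0.5, 1.0])
user_bias = torch.tensor(-0.25)   # a user who rates everything a bit lower
movie_bias = torch.tensor(0.75)   # a movie that is rated highly overall

dot = (user_factors * movie_factors).sum()   # 0.5 + 0.5 - 0.5 = 0.5
prediction = dot + user_bias + movie_bias     # 0.5 - 0.25 + 0.75 = 1.0
print(prediction.item())  # 1.0

# Same result with the biases folded in as extra entries:
# user:  [factors..., user_bias, 1]   movie: [factors..., 1, movie_bias]
u_ext = torch.cat([user_factors, user_bias.view(1), torch.ones(1)])
m_ext = torch.cat([movie_factors, torch.ones(1), movie_bias.view(1)])
print((u_ext * m_ext).sum().item())  # 1.0
```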

#


# So now we will create a new model that takes bias into account.

#

# This will have a few other differences:

#

# 1. It uses a convenience method to create embeddings

# 2. It uses a sigmoid to scale the scores returned from the forward pass into the range of our ratings (from `min_rating` to `max_rating`)

#

# This second step is not strictly necessary, but it will make it easier to fit parameters.

#

# The sigmoid function is called from `F`, which is PyTorch's functional library.
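# A quick check of the sigmoid scaling, assuming a rating range of 0.5 to 5 (in the notebook the actual values come from `min_rating` and `max_rating` below). The sketch uses `torch.sigmoid`, which computes the same function as `F.sigmoid`.

```python
import torch

min_rating, max_rating = 0.5, 5.0   # assumed range for illustration

raw = torch.tensor([-10.0, 0.0, 10.0])   # unbounded dot-product outputs
scaled = torch.sigmoid(raw) * (max_rating - min_rating) + min_rating
print(scaled)   # approximately 0.5002, 2.75, 4.9998
```

# Because the sigmoid never quite reaches 0 or 1, predictions approach but never exactly hit the ends of the rating range.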

# In[77]:

# For step 2, score normalizing

min_rating, max_rating = ratings.rating.min(), ratings.rating.max()

min_rating, max_rating

# In[91]:

# number_of_inputs = rows in the embedding matrix
# number_of_factors = columns in the embedding matrix
def get_embedding(number_of_inputs, number_of_factors):
    embedding = nn.Embedding(number_of_inputs, number_of_factors)
    embedding.weight.data.uniform_(-0.01, 0.01)
    return embedding

class EmbeddingDotBias(nn.Module):
    def __init__(self, number_of_users, number_of_movies):
        super().__init__()
        # Initialize embedding matrices and bias vectors
        (self.user_embedding_matrix, self.movie_embedding_matrix, self.user_biases, self.movie_biases) = [get_embedding(*o) for o in [
            (number_of_users, number_of_factors), (number_of_movies, number_of_factors), (number_of_users, 1), (number_of_movies, 1)
        ]]

    def forward(self, categorical, continuous):
        users, movies = categorical[:,0], categorical[:,1]
        # Do our dot product
        user_dot_movies = (self.user_embedding_matrix(users)*self.movie_embedding_matrix(movies)).sum(1)
        # Add on our bias vectors
        results = user_dot_movies + self.user_biases(users).squeeze() + self.movie_biases(movies).squeeze()
        # Normalize results
        results = F.sigmoid(results) * (max_rating-min_rating)+min_rating
        return results

# In[92]:

cf = CollabFilterDataset.from_csv(path, 'ratings.csv', 'userId', 'movieId', 'rating')

weight_decay=2e-4

model = EmbeddingDotBias(cf.n_users, cf.n_items).cuda()

opt = optim.SGD(model.parameters(), 1e-1, weight_decay=weight_decay, momentum=0.9)

# In[93]:

fit(model, data, 3, opt, F.mse_loss)

# In[94]:

set_lrs(opt, 1e-2)

# In[95]:

fit(model, data, 3, opt, F.mse_loss)

# ## Mini neural net

#

# Now, we could take our user and movie embedding values, stick them together, and feed them into a linear layer, effectively creating a neural network.
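# One possible shape for such a model, sketched under assumptions: the class name `EmbeddingNN`, the hidden layer of 10 units with ReLU, and the default rating range are hypothetical choices, not taken from the text. The user and movie embeddings are concatenated and passed through linear layers, with the same sigmoid scaling as `EmbeddingDotBias`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class EmbeddingNN(nn.Module):  # hypothetical name
    def __init__(self, n_users, n_movies, n_factors=10, n_hidden=10,
                 min_rating=0.5, max_rating=5.0):
        super().__init__()
        self.user_emb = nn.Embedding(n_users, n_factors)
        self.movie_emb = nn.Embedding(n_movies, n_factors)
        self.lin1 = nn.Linear(n_factors * 2, n_hidden)
        self.lin2 = nn.Linear(n_hidden, 1)
        self.min_rating, self.max_rating = min_rating, max_rating

    def forward(self, categorical):
        users, movies = categorical[:, 0], categorical[:, 1]
        # Stick the user and movie embeddings together side by side
        x = torch.cat([self.user_emb(users), self.movie_emb(movies)], dim=1)
        x = F.relu(self.lin1(x))
        x = self.lin2(x).squeeze(1)   # (batch, 1) -> (batch,)
        # Scale into the rating range, as in EmbeddingDotBias
        return torch.sigmoid(x) * (self.max_rating - self.min_rating) + self.min_rating

model = EmbeddingNN(n_users=4, n_movies=6)
batch = torch.tensor([[0, 5], [2, 1], [3, 3]])
out = model(batch)
print(out.shape)  # torch.Size([3])
```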

#


# In[93]: