%load_ext rmagic

%R .libPaths("/home/c3h3/R/library")

array(['/home/c3h3/R/library', '/usr/local/lib/R/site-library',

'/usr/lib/R/site-library', '/usr/lib/R/library'],

dtype='|S29')

Hacking Models With R¶

Chia-Chi Chang¶

What is Modeling ?¶

Modeling ... 其實 ...¶

就好像 「畫畫」 一樣¶

通常,畫畫的第一步 ...¶

並「不是」¶

拿起你的畫筆 ...¶

畫畫的第一步 ...¶

通常是選定某一個角度 ...¶

對於描繪的物體 ...¶

作一番「用心」的觀察 ...¶

觀察物體的形狀,光度,陰影,... 等等¶

從巨觀 (macroscopic) 到微觀 (microscopic)¶

「先畫輪廓,再描細節!」¶

OK...問題來囉...¶

要如何描出 Data 的輪廓呢?¶

%%R

load("lm_line_data.RData")

sample1_x <- lm_line_data$x

sample1_y <- lm_line_data$y

par(mfrow=c(1,1))

plot(lm_line_data)

動手試試看囉 ... (Hacking... )¶

大家覺得哪一張圖畫的比較 「像」 呢?¶

%%R -w 960 -h 480 -u px

sample_models = cbind(3+runif(7, -1, 1),1+runif(7, -1, 1))

par(mfrow=c(1,2))

plot(lm_line_data)

for (i in 1:NROW(sample_models)){

abline(sample_models[i,1],sample_models[i,2],col=i)

}

plot(sample_models,xlim=c(2,4),ylim=c(0,2),col=1:NROW(sample_models),xlab="a0",ylab="a1")

「像」 V.S 「不像」 ... ?¶

這就好像是 ...¶

用不同角度看事物時 ... 總會有著不同的 「像」 的標準 ...¶

在傳統的線性回歸當中 ...¶

%%R

min.RSS <- function(data, par) {

with(data, sum((par[1] + par[2] * x - y)^2))

}

有圖有真相的 $ RSS(a_0,a_1) $¶

%%R

par(mfrow=c(1,1))

a0_range = (-10:10)*0.1 + 3

a1_range = (-10:10)*0.1 + 1

a_outer = matrix(0,nrow=length(a0_range),ncol=length(a1_range))

for (i in 1:length(a0_range)){

for (j in 1:length(a1_range)){

a_outer[i,j] = min.RSS(lm_line_data,c(a0_range[i],a1_range[j]))

}

}

contour(a0_range,a1_range,a_outer,xlab="a0",ylab="a1")

%%R -w 960 -h 480 -u px

par(mfrow=c(1,2))

plot(lm_line_data)

for (i in 1:NROW(sample_models)){

abline(sample_models[i,1],sample_models[i,2],col=i)

}

contour(a0_range,a1_range,a_outer,xlab="a0",ylab="a1")

points(sample_models,col=1:NROW(sample_models))

運用 R 內建的最佳化函數:optim¶

我們可以很輕鬆地 ... 用一行指令 ...¶

找到最佳解 ...¶

%%R

result <- optim(par = c(3, 1), min.RSS, data = lm_line_data)

%%R -w 960 -h 480 -u px

par(mfrow=c(1,2))

plot(lm_line_data)

for (i in 1:NROW(sample_models)){

abline(sample_models[i,1],sample_models[i,2],col=i)

}

abline(result$par[1],result$par[2],col=NROW(sample_models)+1,lwd=10)

contour(a0_range,a1_range,a_outer,xlab="a0",ylab="a1")

points(sample_models,col=1:NROW(sample_models))

points(result$par[1],result$par[2],col=NROW(sample_models)+1,pch=19,cex = 3)

身為一個 Model Hacker ...¶

當然 ... 您也可以選擇不要使用最佳化函數:optim¶

%%R

cost <- function(X, y, theta) {

sum( (X %*% theta - y)^2 ) / (2*length(y))

}

# learning rate and iteration limit

alpha <- 0.01

num_iters <- 1000

# keep history

cost_history <- double(num_iters)

theta_history <- list(num_iters)

# initialize coefficients

theta <- matrix(c(3.5,1.5), nrow=2)

# add a column of 1's for the intercept coefficient

X <- cbind(1, matrix(sample1_x))

# gradient descent

for (i in 1:num_iters) {

error <- (X %*% theta - sample1_y)

delta <- t(X) %*% error / length(sample1_y)

theta <- theta - alpha * delta

cost_history[i] <- cost(X, sample1_y, theta)

theta_history[[i]] <- theta

}

直接自己作 Gradient Decent ... (Hacking...)¶

%%R -w 960 -h 480 -u px

par(mfrow=c(1,2))

plot(sample1_x,sample1_y, col=rgb(0.2,0.4,0.6,0.4), main='Linear regression by gradient descent',xlab="x",ylab="y")

for (i in c(1,3,6,10,14,seq(20,num_iters,by=10))) {

abline(coef=theta_history[[i]], col=rgb(0.8,0,0,0.3))

}

abline(coef=theta, col='blue')

contour(a0_range,a1_range,a_outer,xlab="a0",ylab="a1")

for (i in c(1,3,6,10,14,seq(20,num_iters,by=10))) {

points(theta_history[[i]][1],theta_history[[i]][2], col=rgb(0.8,0,0,0.3))

if (i>1){

last_pt = c(theta_history[[i-1]][1],theta_history[[i-1]][2])

now_pt = c(theta_history[[i]][1],theta_history[[i]][2])

arrows(last_pt[1],last_pt[2],now_pt[1],now_pt[2],length=0.05)

}

}

There were 50 or more warnings (use warnings() to see the first 50)

了解了這些基本的原理與工具後 ...¶

接下來,我們就可以換個角度畫畫看了...¶

換個 「角度」 ... 意思就是 ...¶

換個 「看事物的標準」 ...¶

換個 「評價 Model 的方式」 ...¶

%%R

min.RSS.W <- function(data ,w ,par) {

with(data, sum(w*(par[1] + par[2] * x - y)^2))

}

接著,我們試著找出重誤差最大的兩個 Data 的位置 ...¶

%%R

Old_Model_Error_Vec <- (cbind(1,lm_line_data$x) %*% result$par - lm_line_data$y)^2

First_Change_Data_Index <- which(Old_Model_Error_Vec == max(Old_Model_Error_Vec))

Second_Change_Data_Index <- which(Old_Model_Error_Vec[-First_Change_Data_Index] == max(Old_Model_Error_Vec[-First_Change_Data_Index]))

if (Second_Change_Data_Index > First_Change_Data_Index){

Second_Change_Data_Index <- Second_Change_Data_Index + 1

}

Change_Data_Index = c(First_Change_Data_Index,Second_Change_Data_Index)

並且,嘗試著加重他們的 Weight 試試 ......¶

%%R -w 960 -h 480 -u px

NEW_WEIGHT = 1000

Change_Data_Index = c(First_Change_Data_Index,Second_Change_Data_Index)

# Change_Data_Index = c( which(lm_line_data$y == max(lm_line_data$y)),which(lm_line_data$y == min(lm_line_data$y)))

data_w <- rep(1,NROW(lm_line_data))

data_w[Change_Data_Index] = NEW_WEIGHT

data_w <- data_w / sum(data_w)

result_w <- optim(par = c(3, 1), min.RSS.W, data = lm_line_data, w = data_w)

par(mfrow=c(1,2))

plot(lm_line_data)

abline(result$par,lwd=10,col=8)

points(lm_line_data[First_Change_Data_Index,],pch=19,cex=2)

points(lm_line_data[Second_Change_Data_Index,],pch=19,cex=2)

abline(result_w$par,lwd=2,col=4)

a0_range = (-50:50)*0.1 + 3

a1_range = (-50:50)*0.1 + 1

old_a_outer = matrix(0,nrow=length(a0_range),ncol=length(a1_range))

for (i in 1:length(a0_range)){

for (j in 1:length(a1_range)){

old_a_outer[i,j] = min.RSS(lm_line_data,c(a0_range[i],a1_range[j]))

}

}

contour(a0_range,a1_range,old_a_outer,xlab="a0",ylab="a1")

new_a_outer = matrix(0,nrow=length(a0_range),ncol=length(a1_range))

for (i in 1:length(a0_range)){

for (j in 1:length(a1_range)){

new_a_outer[i,j] = min.RSS.W(lm_line_data, w = data_w, c(a0_range[i],a1_range[j]))

}

}

contour(a0_range,a1_range,new_a_outer,col=4,xlab="a0",ylab="a1",add=TRUE)

加重 1000 倍的結果¶

順便觀察一下,動態加重的過程 ...¶

%%R -w 960 -h 480 -u px

par(mfrow=c(1,2))

plot(lm_line_data)

abline(result$par,lwd=10,col=8)

data_w <- rep(1,NROW(lm_line_data))

for (i in 1:3){

NEW_WEIGHT = 10^i

data_w <- rep(1,NROW(lm_line_data))

data_w[Change_Data_Index] = NEW_WEIGHT

data_w <- data_w / sum(data_w)

result_w <- optim(par = c(3, 1), min.RSS.W, data = lm_line_data, w = data_w)

points(lm_line_data[First_Change_Data_Index,],pch=19,cex=2)

points(lm_line_data[Second_Change_Data_Index,],pch=19,cex=2)

abline(result_w$par,lwd=2,col=i+1)

}

a0_range = (-50:50)*0.1 + 3

a1_range = (-50:50)*0.1 + 1

old_a_outer = matrix(0,nrow=length(a0_range),ncol=length(a1_range))

for (i in 1:length(a0_range)){

for (j in 1:length(a1_range)){

old_a_outer[i,j] = min.RSS(lm_line_data,c(a0_range[i],a1_range[j]))

}

}

contour(a0_range,a1_range,old_a_outer,xlab="a0",ylab="a1")

for (k in 1:3){

NEW_WEIGHT = 10^k

data_w <- rep(1,NROW(lm_line_data))

data_w[Change_Data_Index] = NEW_WEIGHT

data_w <- data_w / sum(data_w)

new_a_outer = matrix(0,nrow=length(a0_range),ncol=length(a1_range))

for (i in 1:length(a0_range)){

for (j in 1:length(a1_range)){

new_a_outer[i,j] = min.RSS.W(lm_line_data, w = data_w, c(a0_range[i],a1_range[j]))

}

}

contour(a0_range,a1_range,new_a_outer,col=k+1,xlab="a0",ylab="a1",add=TRUE)

}

分別加重 10, 100, 1000 倍的結果¶

回到畫畫的比喻 ...¶

從畫畫的譬喻中,我們可以學習到 ...¶

相信,在各位 Model Hacker 的心中,一定會很想問 ...¶

其實 ... 這問題就好像是 ...¶

這也好像是 ...¶

How to find the position of shooter ?¶

Listen to the Data (Observations) ...¶

Data will tell you lots of things ...¶

Back to the inversed problem of Regression ...¶

Notation:¶

Vector Notation: $$¶

| \left[ \begin{array}{c} a \\ b \end{array} \right] > = \left[ \begin{array}{c} a \\ b \end{array} \right] $$

Dual Vector Notation: $$¶

< \left[ \begin{array}{c} a \\ b \end{array} \right] | = \left[ \begin{array}{c} a \\ b \end{array} \right]^T = \left[ \begin{array}{cc} a & b \end{array} \right] $$

Inner Product Notation: $$¶

< \left[ \begin{array}{c} a \\ b \end{array} \right] | \left[ \begin{array}{c} c \\ d \end{array} \right] > = ac + bd $$

Kronecker (Tensor) Product Notation:¶

$$¶

| \left[ \begin{array}{c} a \\ b \end{array} \right] >< \left[ \begin{array}{c} c \\ d \end{array} \right] | = \left[ \begin{array}{c} a \\ b \end{array} \right] \left[ \begin{array}{cc} c & d \end{array} \right] = \left[ \begin{array}{c} a \\ b \end{array} \right] \otimes \left[ \begin{array}{cc} c & d \end{array} \right] = \left[ \begin{array}{cc} ac & ad \\ bc & bd \end{array} \right] $$

Optimal Condition of Weighted Linear Regression :¶

$$ RSS(a_0,a_1,\vec{W}) = \sum_{j=1}^N W_j || y_j - (a_0 + a_1 x_j) ||^2 $$¶

$$¶

\sum_{j=1}^N w_j | \left[ \begin{array}{c} 1 \\ x_j \end{array} \right] > < \left[ \begin{array}{c} 1 \\ x_j \end{array} \right] | \left[ \begin{array}{c} a_0 \\ a_1 \end{array} \right] > = \sum_{j=1}^N w_j y_j | \left[ \begin{array}{c} 1 \\ x_j \end{array} \right] > $$

$$¶

\sum_{j=1}^N w_j | \left[ \begin{array}{c} 1 \\ x_j \end{array} \right] > \left( y_j - < \left[ \begin{array}{c} 1 \\ x_j \end{array} \right] | \left[ \begin{array}{c} a_0 \\ a_1 \end{array} \right] > \right) = | \left[ \begin{array}{c} 0 \\ 0 \end{array} \right] > $$

Inversed Problem:¶

$$¶

\sum_{j=1}^N w_j | \left[ \begin{array}{c} 1 \\ x_j \end{array} \right] > \left( y_j - < \left[ \begin{array}{c} 1 \\ x_j \end{array} \right] | \left[ \begin{array}{c} a_0 \\ a_1 \end{array} \right] > \right) = \left[ \begin{array}{c} e_0 \\ e_1 \end{array} \right] $$

%%R

min.W <- function(ww)

Xw = rbind(X[1,]*(ww^2),X[2,]*(ww^2))

proj = (lm_line_data$y - t(model_w_coef) %*%X ) %*% t(Xw)

sum(proj^2) + 0.001*sum((ww -1)^2)

}

sol_ww = optim(rep(1,NROW(lm_line_data)), min.W,method = "BFGS")

sol_w = sol_ww$par^2

new_result_w <- optim(par = c(3, 1), min.RSS.W, data = lm_line_data, w = sol_w)

print(new_result_w$par)

print(t(model_w_coef))

[1] 3.775299 2.808490

[,1] [,2]

[1,] 3.775304 2.808396

%%R -w 960 -h 480 -u px

par(mfrow=c(1,2))

plot(lm_line_data)

abline(result_w$par,lwd=2,col=4)

abline(new_result_w$par,lwd=2,col=3)

a0_range = (-50:50)*0.1 + 3

a1_range = (-50:50)*0.1 + 1

old_a_outer = matrix(0,nrow=length(a0_range),ncol=length(a1_range))

for (i in 1:length(a0_range)){

for (j in 1:length(a1_range)){

old_a_outer[i,j] = min.RSS.W(lm_line_data, w = data_w, c(a0_range[i],a1_range[j]))

}

}

contour(a0_range,a1_range,old_a_outer,xlab="a0",ylab="a1",col=4)

new_a_outer = matrix(0,nrow=length(a0_range),ncol=length(a1_range))

for (i in 1:length(a0_range)){

for (j in 1:length(a1_range)){

new_a_outer[i,j] = min.RSS.W(lm_line_data, w = sol_w, c(a0_range[i],a1_range[j]))

}

}

contour(a0_range,a1_range,new_a_outer,col=3,xlab="a0",ylab="a1",add=TRUE)

Plotting Wieghts in Direct Problem¶

Plotting Wieghts in Inversed Problem¶

疑...怎麼長的不太一樣呢 ?¶

There are infinite solutions of it ...¶

$$¶

\sum_{j=1}^N w_j | \left[ \begin{array}{c} 1 \\ x_j \end{array} \right] > \left( y_j - < \left[ \begin{array}{c} 1 \\ x_j \end{array} \right] | \left[ \begin{array}{c} a_0 \\ a_1 \end{array} \right] > \right) = | \left[ \begin{array}{c} 0 \\ 0 \end{array} \right] > $$

所以,到此我們可以簡單的作個小結 ...¶

到此,我已經介紹完 What is Modeling 的部份了 ...¶

Next ... We will discuss about ...¶

The Limitation of Frameworks¶

The Disappearance of the Units of measurement¶

Two primary frameworks ...¶

In order to classify APPLE and ORANGE ...¶

Maybe we would use complex features ...¶

Finally ... we will get ...¶

Supplement: "Covervage - Accuracy Tradeoff"¶

In Stock Trading ...¶

How to make $p$ grows up ?¶

遇到 ...¶

For Clustering Methods ...¶

K-means (K-based)¶

Spectral Clustering (K-based)¶

DBSCAN ($\epsilon$-based)¶

# The Limitation of Clustering ...¶

As a Model Hacker ...¶

We are always think ... How to hack the models ... ?¶

How to hack the clustering methods ... ?¶

The Distance (or Metric) plays a very important roles ...¶

In clustering methods ...¶

In classification methods ...¶

In everywhere ...¶

Let me show you some examples ...¶

However, if we adding back to the UNITs of measurement ...¶

Some people would think ...¶

$$ \frac{103 - 100}{100} = 3 \% < 100 \% = \frac{6 - 3}{3}$$¶

or more actually ...¶

$$ distance(103, 100) < distance(6, 3) $$¶

and maybe you can also find ...¶

Another Example ... from text mining ...¶

import PlaYnlp.tokenizer as tkr

import PlaYnlp.vectorizer as vcr

from PlaYnlp import dataio

import PlaYnlp.analysis.heuristics.text_clustering.weighted_features_methods as wfms

from PlaYnlp.sparse import L0_norm_col_summarizer as L0_col_sum

from PlaYnlp.sparse import L1_norm_col_summarizer as L1_col_sum

import numpy as np

import scipy as sp

jieba_without_html_tokenizer = tkr.tokenize_gen(lambda text:tkr.jieba.cut(tkr.nltk.clean_html(text)))

unigram_without_html_tokenizer = tkr.tokenize_gen(lambda text:tkr.ngram(tkr.nltk.clean_html(text),n=[1]))

bigram_without_html_tokenizer = tkr.tokenize_gen(lambda text:tkr.ngram(tkr.nltk.clean_html(text),n=2))

unigram_bigram_without_html_tokenizer = tkr.tokenize_gen(lambda text:tkr.ngram(tkr.nltk.clean_html(text),n=[1,2]))

jieba_vec_count_kwargs = {"tokenizer":jieba_without_html_tokenizer,"lowercase":False}

unigram_vec_count_kwargs = {"tokenizer":unigram_without_html_tokenizer,"lowercase":False}

bigram_vec_count_kwargs = {"tokenizer":bigram_without_html_tokenizer,"lowercase":False}

unigram_bigram_vec_count_kwargs = {"tokenizer":unigram_bigram_without_html_tokenizer,"lowercase":False}

import pandas as pd

test_text_df = pd.DataFrame([u"今天天氣很好",u"今天天氣很爛",u"今天天氣很讚",u"我恨它",u"它恨我",u"我愛它",u"它愛我",u"今天很衰","日子一天一天過","天天刷牙洗臉"])

test_text_df.columns = ["text"]

test_text_df = test_text_df.reset_index()

test_text_df

| index | text | |

|---|---|---|

| 0 | 0 | 今天天氣很好 |

| 1 | 1 | 今天天氣很爛 |

| 2 | 2 | 今天天氣很讚 |

| 3 | 3 | 我恨它 |

| 4 | 4 | 它恨我 |

| 5 | 5 | 我愛它 |

| 6 | 6 | 它愛我 |

| 7 | 7 | 今天很衰 |

| 8 | 8 | 日子一天一天過 |

| 9 | 9 | 天天刷牙洗臉 |

10 rows × 2 columns

unigram_sdtm = vcr.vectorize_text(df=test_text_df,

text_col="text",

idx_col="index",

cond_query={},

idx_query= [],

vect_gen=vcr.CountVectorizer,

vect_gen_init_kwargs = unigram_vec_count_kwargs,

summarizer=L1_col_sum)

unigram_sdtm.to_pandas_df

| 一 | 今 | 刷 | 天 | 好 | 子 | 它 | 很 | 恨 | 愛 | 我 | 日 | 氣 | 洗 | 爛 | 牙 | 臉 | 衰 | 讚 | 過 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 1 | 0 | 2 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 0 | 1 | 0 | 2 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

| 2 | 0 | 1 | 0 | 2 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

| 3 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 5 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 6 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 7 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |

| 8 | 2 | 0 | 0 | 2 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

| 9 | 0 | 0 | 1 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 0 | 0 |

10 rows × 20 columns

bigram_sdtm = vcr.vectorize_text(df=test_text_df,

text_col="text",

idx_col="index",

cond_query={},

idx_query= [],

vect_gen=vcr.CountVectorizer,

vect_gen_init_kwargs = bigram_vec_count_kwargs,

summarizer=L1_col_sum)

bigram_sdtm.to_pandas_df

| 一天 | 今天 | 刷牙 | 天一 | 天刷 | 天天 | 天很 | 天氣 | 天過 | 子一 | 它恨 | 它愛 | 很好 | 很爛 | 很衰 | 很讚 | 恨它 | 恨我 | 愛它 | 愛我 | ||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... |

| 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | ... |

| 2 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | ... |

| 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | ... |

| 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | ... |

| 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | ... |

| 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | ... |

| 7 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | ... |

| 8 | 2 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... |

| 9 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... |

10 rows × 26 columns

import numpy

n = unigram_sdtm.to_pandas_df.values.shape[1]

vec = unigram_sdtm.to_pandas_df.values

d12 = vec[0] - vec[1]

d13 = vec[0] - vec[2]

d12, d13

(array([ 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, -1, 0, 0,

0, 0, 0]),

array([ 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, -1, 0]))

Compute original dist(v1,v2) = ?¶

((d12**2).sum() / float(n))**0.5

0.31622776601683794

Euclidean Norm ...¶

M = np.eye(n)

print np.dot(np.dot(d12,M),d12)

print (np.trace(M*d12.reshape(1,n)*d12.reshape(n,1))/ float(n))**0.5

print (np.dot(np.dot(d12,M),d12)/ float(n))**0.5

2.0 0.316227766017 0.316227766017

Change another metric ...¶

Get smaller distance ... (Pull Data together)¶

M = np.abs(d12).reshape(1,n)*np.abs(d12).reshape(n,1)

print np.dot(np.dot(d12,M),d12)

(np.dot(np.dot(d12,M),d12)/ float(n))**0.5

0

0.0

Get larger distance ... (Push Data each other)¶

M = d12.reshape(1,n)*d12.reshape(n,1)

print np.dot(np.dot(d12,M),d12)

(np.dot(np.dot(d12,M),d12)/ float(n))**0.5

4

0.44721359549995793

ML12 = d12.reshape(1,n)*d12.reshape(n,1)

MS13 = np.abs(d13).reshape(1,n)*np.abs(d13).reshape(n,1)

p12 = 0.5

M = p12*ML12 + (1-p12)*MS13

print (np.dot(np.dot(d12,M),d12)/ float(n))**0.5

print (np.dot(np.dot(d13,M),d13)/ float(n))**0.5

0.353553390593 0.158113883008

How to breack through the Limitations ?¶

Semi-supervised Learning¶

One-VS-All classification + MIL (Computer Vision)¶

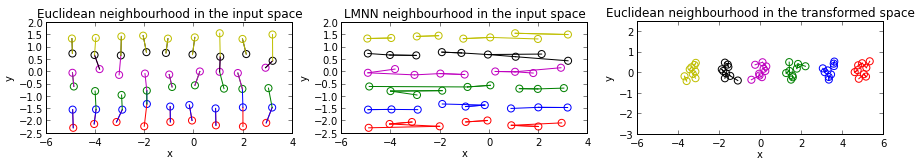

Learning the Metric from Data¶

Example 1¶

Change Metric in Example 1 :¶

Example 2 :¶

Example 2 :¶

The Metric is also like the eyes of the model ...¶

Which can help you to break through the blindness of models¶

Remember that ...¶

Whatever you observe ...¶

Whatever you draw ...¶

只要換個心境,換個角度 ...¶

就能看到一幅嶄新的畫 ...¶

謝謝大家¶

最後補充一點:¶

畫就算畫的再像 ... 他也只是畫 ... 不能吃的 ...¶