#!/usr/bin/env python

# coding: utf-8

# # Artificial Intelligence Nanodegree

# ## Computer Vision Capstone

# ## Project: Facial Keypoint Detection

#

# ---

#

# Welcome to the final Computer Vision project in the Artificial Intelligence Nanodegree program!

#

# In this project, you’ll combine your knowledge of computer vision techniques and deep learning to build an end-to-end facial keypoint recognition system! Facial keypoints include points around the eyes, nose, and mouth on any face and are used in many applications, from facial tracking to emotion recognition.

#

# There are three main parts to this project:

#

# **Part 1** : Investigating OpenCV, pre-processing, and face detection

#

# **Part 2** : Training a Convolutional Neural Network (CNN) to detect facial keypoints

#

# **Part 3** : Putting parts 1 and 2 together to identify facial keypoints on any image!

#

# ---

#

# **Here's what you need to know to complete the project:**

#

# 1. In this notebook, some template code has already been provided for you, and you will need to implement additional functionality to successfully complete this project. You will not need to modify the included code beyond what is requested.

#

# a. Sections that begin with **'(IMPLEMENTATION)'** in the header indicate that the following block of code will require additional functionality which you must provide. Instructions will be provided for each section, and the specifics of the implementation are marked in the code block with a 'TODO' statement. Please be sure to read the instructions carefully!

#

#

# 2. In addition to implementing code, there will be questions that you must answer which relate to the project and your implementation.

#

# a. Each section where you will answer a question is preceded by a **'Question X'** header.

#

# b. Carefully read each question and provide thorough answers in the following text boxes that begin with **'Answer:'**.

#

# >**Note:** Code and Markdown cells can be executed using the **Shift + Enter** keyboard shortcut. Markdown cells can be edited by double-clicking the cell to enter edit mode.

#

# The rubric contains **optional** suggestions for enhancing the project beyond the minimum requirements. If you decide to pursue the "(Optional)" sections, you should include the code in this IPython notebook.

#

# Your project submission will be evaluated based on your answers to *each* of the questions and the code implementations you provide.

# ### Steps to Complete the Project

#

# Each part of the notebook is further broken down into separate steps. Feel free to use the links below to navigate the notebook.

#

# In this project you will get to explore a few of the many computer vision algorithms built into the OpenCV library. This expansive computer vision library is now [almost 20 years old](https://en.wikipedia.org/wiki/OpenCV#History) and still growing!

#

# The project itself is broken down into three large parts, then even further into separate steps. Make sure to read through each step, and complete any sections that begin with **'(IMPLEMENTATION)'** in the header; these implementation sections may contain multiple TODOs that will be marked in code. For convenience, we provide links to each of these steps below.

#

# **Part 1** : Investigating OpenCV, pre-processing, and face detection

#

# * [Step 0](#step0): Detect Faces Using a Haar Cascade Classifier

# * [Step 1](#step1): Add Eye Detection

# * [Step 2](#step2): De-noise an Image for Better Face Detection

# * [Step 3](#step3): Blur an Image and Perform Edge Detection

# * [Step 4](#step4): Automatically Hide the Identity of an Individual

#

# **Part 2** : Training a Convolutional Neural Network (CNN) to detect facial keypoints

#

# * [Step 5](#step5): Create a CNN to Recognize Facial Keypoints

# * [Step 6](#step6): Compile and Train the Model

# * [Step 7](#step7): Visualize the Loss and Answer Questions

#

# **Part 3** : Putting parts 1 and 2 together to identify facial keypoints on any image!

#

# * [Step 8](#step8): Build a Robust Facial Keypoints Detector (Complete the CV Pipeline)

#

#

# ---

#

# ## Step 0: Detect Faces Using a Haar Cascade Classifier

#

# Have you ever wondered how Facebook automatically tags images with your friends' faces? Or how high-end cameras automatically find and focus on a certain person's face? Applications like these depend heavily on the machine learning task known as *face detection* - which is the task of automatically finding faces in images containing people.

#

# At its root, face detection is a classification problem, that is, a problem of distinguishing between distinct classes of things. With face detection, these distinct classes are 1) images of human faces and 2) everything else.

#

# We use OpenCV's implementation of [Haar feature-based cascade classifiers](http://docs.opencv.org/trunk/d7/d8b/tutorial_py_face_detection.html) to detect human faces in images. OpenCV provides many pre-trained face detectors, stored as XML files on [github](https://github.com/opencv/opencv/tree/master/data/haarcascades). We have downloaded one of these detectors and stored it in the `detector_architectures` directory.

#

# ### Import Resources

#

# In the next python cell, we load in the required libraries for this section of the project.

# In[1]:

# Import required libraries for this section

get_ipython().run_line_magic('matplotlib', 'inline')

import numpy as np

import matplotlib.pyplot as plt

import math

import cv2 # OpenCV library for computer vision

from PIL import Image

import time

# Next, we load in and display a test image for performing face detection.

#

# *Note*: by default OpenCV assumes the color channels of an image are ordered Blue, then Green, then Red (BGR). Most other image tools we'll use in these experiments, such as matplotlib, expect Red, then Green, then Blue (RGB). To swap the Blue and Red channels of our test image we use OpenCV's ```cvtColor``` function, which you can read more about in [its documentation](http://docs.opencv.org/3.2.0/df/d9d/tutorial_py_colorspaces.html). This general utility function can also perform other transformations, such as converting a color image to grayscale or to the HSV color space.
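#
# As a minimal sketch of what the BGR-to-RGB conversion does to the pixel data, the snippet below reverses the channel axis with NumPy slicing. The helper name `bgr_to_rgb` and the tiny demo array are illustrative assumptions, not part of the project code; in the notebook itself ```cvtColor``` does this job.

```python
import numpy as np

def bgr_to_rgb(image_bgr):
    """Reverse the last (channel) axis: B,G,R -> R,G,B."""
    return image_bgr[..., ::-1].copy()

# A 1x2 demo image: one pure-blue and one pure-red pixel, stored in BGR order
demo_bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
demo_rgb = bgr_to_rgb(demo_bgr)

print(demo_rgb[0, 0])  # the blue pixel, now in RGB order
print(demo_rgb[0, 1])  # the red pixel, now in RGB order
```

# Only the order of the three channel values changes; the image height and width stay the same.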

# In[2]:

# Load in color image for face detection

image = cv2.imread('images/test_image_1.jpg')

# Convert the image to RGB colorspace

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# Plot our image using subplots to specify a size and title

fig = plt.figure(figsize = (8,8))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('Original Image')

ax1.imshow(image)

# There are a lot of people - and faces - in this picture. 13 faces to be exact! In the next code cell, we demonstrate how to use a Haar Cascade classifier to detect all the faces in this test image.

#

# This face detector uses information about patterns of intensity in an image to reliably detect faces under varying light conditions. So, to use this face detector, we'll first convert the image from color to grayscale.

#

# Then, we load in the fully trained architecture of the face detector -- found in the file *haarcascade_frontalface_default.xml* - and use it on our image to find faces!

#

# To learn more about the parameters of the detector see [this post](https://stackoverflow.com/questions/20801015/recommended-values-for-opencv-detectmultiscale-parameters).
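#
# The second and third positional arguments of `detectMultiScale` are `scaleFactor` and `minNeighbors`. The rough sketch below illustrates how `scaleFactor` controls the number of window sizes searched. The helper `window_sizes` is hypothetical, and the 24-pixel base window is an assumption about the cascade; OpenCV's actual search rescales the image rather than the window, so treat this only as intuition.

```python
def window_sizes(min_image_side, scale_factor, base_window=24):
    """List the detection-window sizes tried for a given scale factor.

    base_window=24 is an assumed training-window size for the cascade.
    """
    if scale_factor <= 1.0:
        raise ValueError("scale_factor must be greater than 1")
    sizes = []
    size = float(base_window)
    while size <= min_image_side:
        sizes.append(size)
        size *= scale_factor
    return sizes

coarse = window_sizes(400, 4.0)    # a large step between scales
fine = window_sizes(400, 1.25)     # a small step between scales

print(len(coarse), "window sizes for scale factor 4.0:", coarse)
print(len(fine), "window sizes for scale factor 1.25")
```

# A larger scale factor is faster but skips many face sizes; a smaller one searches more thoroughly at a higher computational cost.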

# In[3]:

# Convert the RGB image to grayscale

gray = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

# Extract the pre-trained face detector from an xml file

face_cascade = cv2.CascadeClassifier('detector_architectures/haarcascade_frontalface_default.xml')

# Detect the faces in image

faces = face_cascade.detectMultiScale(gray, 4, 6)

# Print the number of faces detected in the image

print('Number of faces detected:', len(faces))

# Make a copy of the original image to draw face detections on

image_with_detections = np.copy(image)

# Get the bounding box for each detected face

for (x,y,w,h) in faces:

    # Add a red bounding box to the detections image

    cv2.rectangle(image_with_detections, (x,y), (x+w,y+h), (255,0,0), 3)

# Display the image with the detections

fig = plt.figure(figsize = (8,8))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('Image with Face Detections')

ax1.imshow(image_with_detections)

# In the above code, `faces` is a numpy array of detected faces, where each row corresponds to a detected face. Each detected face is a 1D array with four entries that specifies the bounding box of the detected face. The first two entries in the array (extracted in the above code as `x` and `y`) specify the horizontal and vertical positions of the top left corner of the bounding box. The last two entries in the array (extracted here as `w` and `h`) specify the width and height of the box.
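#
# The bounding-box layout described above can be sketched with plain NumPy slicing. The helper `crop_boxes` and the demo arrays are hypothetical, introduced only for illustration. Note that image arrays are indexed `[row, column]`, so `y` comes before `x` when slicing.

```python
import numpy as np

def crop_boxes(image, boxes):
    """Return one sub-image per (x, y, w, h) box.

    NumPy images are indexed [row, column], i.e. [y, x].
    """
    return [image[y:y + h, x:x + w] for (x, y, w, h) in boxes]

demo_image = np.arange(6 * 8).reshape(6, 8)   # 6 rows (height) x 8 columns (width)
demo_faces = np.array([[2, 1, 3, 4]])         # x=2, y=1, w=3, h=4
demo_crop = crop_boxes(demo_image, demo_faces)[0]

print(demo_crop.shape)  # (height, width) of the cropped region
```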

# ---

#

#

# ## Step 1: Add Eye Detection

#

# There are other pre-trained detectors available that use a Haar Cascade Classifier - including full human body detectors, license plate detectors, and more. [A full list of the pre-trained architectures can be found here](https://github.com/opencv/opencv/tree/master/data/haarcascades).

# To test your eye detector, we'll first read in a new test image with just a single face.

# In[4]:

# Load in color image for face detection

image = cv2.imread('images/james.jpg')

# Convert the image to RGB colorspace

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# Plot the RGB image

fig = plt.figure(figsize = (6,6))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('Original Image')

ax1.imshow(image)

# Notice that even though the image is a black and white image, we have read it in as a color image and so it will still need to be converted to grayscale in order to perform the most accurate face detection.

#

# So, the next steps will be to convert this image to grayscale, then load OpenCV's face detector and run it with parameters that detect this face accurately.

# In[5]:

# Convert the RGB image to grayscale

gray = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

# Extract the pre-trained face detector from an xml file

face_cascade = cv2.CascadeClassifier('detector_architectures/haarcascade_frontalface_default.xml')

# Detect the faces in image

faces = face_cascade.detectMultiScale(gray, 1.25, 6)

# Print the number of faces detected in the image

print('Number of faces detected:', len(faces))

# Make a copy of the original image to draw face detections on

image_with_detections = np.copy(image)

# Get the bounding box for each detected face

for (x,y,w,h) in faces:

    # Add a red bounding box to the detections image

    cv2.rectangle(image_with_detections, (x,y), (x+w,y+h), (255,0,0), 3)

# Display the image with the detections

fig = plt.figure(figsize = (6,6))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('Image with Face Detection')

ax1.imshow(image_with_detections)

# ### (IMPLEMENTATION) Add an eye detector to the current face detection setup.

#

# A Haar-cascade eye detector can be included in the same way that the face detector was and, in this first task, it will be your job to do just this.

# To set up an eye detector, use the stored parameters of the eye cascade detector, called ```haarcascade_eye.xml```, located in the `detector_architectures` subdirectory. In the next code cell, create your eye detector and store its detections.

#

# **A few notes before you get started**:

#

# First, make sure to give your loaded eye detector the variable name

#

# ``eye_cascade``

#

#

# and give the list of eye regions you detect the variable name

#

# ``eyes``

#

# Second, since we've already run the face detector over this image, you should only search for eyes *within the rectangular face regions detected in ``faces``*. This will minimize false detections.

#

# Lastly, once you've run your eye detector over the facial detection region, you should display the RGB image with both the face detection boxes (in red) and your eye detections (in green) to verify that everything works as expected.
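#
# One possible way to restrict the eye search to the detected face regions is sketched below. The function `detect_eyes_in_faces` and the stand-in `fake_eye_detector` are hypothetical names used only for this illustration; the detector is passed in as a callable so the coordinate bookkeeping can be shown without a camera or cascade file.

```python
import numpy as np

def detect_eyes_in_faces(gray, faces, detect_eyes):
    """Run detect_eyes on each face crop; return boxes in full-image coordinates.

    detect_eyes is a hypothetical callable: it takes a grayscale crop and
    returns an iterable of (x, y, w, h) boxes relative to that crop.
    """
    eyes = []
    for (fx, fy, fw, fh) in faces:
        face_roi = gray[fy:fy + fh, fx:fx + fw]
        for (ex, ey, ew, eh) in detect_eyes(face_roi):
            # Shift by the face's top-left corner to get image coordinates
            eyes.append((fx + ex, fy + ey, ew, eh))
    return eyes

def fake_eye_detector(roi):
    """Stand-in detector for the demo: always reports one fixed box."""
    return [(5, 10, 8, 4)]

demo_gray = np.zeros((100, 100), dtype=np.uint8)
demo_face_boxes = [(20, 30, 40, 40)]
demo_eyes = detect_eyes_in_faces(demo_gray, demo_face_boxes, fake_eye_detector)

print(demo_eyes)
```

# With a real cascade, `detect_eyes` could be something like `lambda roi: eye_cascade.detectMultiScale(roi, 1.1, 6)`, where the parameter values are only a starting point for tuning.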

# In[6]:

# Make a copy of the original image to plot rectangle detections

image_with_detections = np.copy(image)

# Loop over the detections and draw their corresponding face detection boxes

for (x,y,w,h) in faces:

    cv2.rectangle(image_with_detections, (x,y), (x+w,y+h),(255,0,0), 3)

# Do not change the code above this comment!

## TODO: Add eye detection, using haarcascade_eye.xml, to the current face detector algorithm

## TODO: Loop over the eye detections and draw their corresponding boxes in green on image_with_detections

eye_cascade = cv2.CascadeClassifier('detector_architectures/haarcascade_eye.xml')

eyes = eye_cascade.detectMultiScale(gray, 1.01, 6)

for (x,y,w,h) in eyes:

    cv2.rectangle(image_with_detections, (x,y), (x+w, y+h), (0,255,0), 3)

# Plot the image with both faces and eyes detected

fig = plt.figure(figsize = (6,6))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('Image with Face and Eye Detection')

ax1.imshow(image_with_detections)

# ## (Optional) Add face and eye detection to your laptop camera

#

# It's time to kick it up a notch, and add face and eye detection to your laptop's camera! Afterwards, you'll be able to show off your creation like in the gif shown below - made with a completed version of the code!

#

#

# Notice that not all of the detections here are perfect - and your result need not be perfect either. You should spend a small amount of time tuning the parameters of your detectors to get reasonable results, but don't hold out for perfection. If we wanted perfection we'd need to spend a ton of time tuning the parameters of each detector, cleaning up the input image frames, etc. You can think of this as more of a rapid prototype.

#

# The next cell contains code for a wrapper function called ``laptop_camera_go`` that, when called, will activate your laptop's camera. You will place the relevant face and eye detection code in this wrapper function to implement face/eye detection and mark those detections on each image frame that your camera captures.

#

# Before adding anything to the function, you can run it to get an idea of how it works - a small window should pop up showing you the live feed from your camera; you can press any key to close this window.

#

# **Note:** Mac users may find that activating this function kills the kernel of their notebook every once in a while. If this happens to you, just restart your notebook's kernel, activate cell(s) containing any crucial import statements, and you'll be good to go!

# In[7]:

### Add face and eye detection to this laptop camera function

# Make sure to draw out all faces/eyes found in each frame on the shown video feed

import cv2

import time

# wrapper function for face/eye detection with your laptop camera

def laptop_camera_go():

    # Create instance of video capturer

    cv2.namedWindow("face detection activated")

    vc = cv2.VideoCapture(0)

    # Try to get the first frame

    if vc.isOpened():

        rval, frame = vc.read()

    else:

        rval = False

    # Keep the video stream open

    while rval:

        # Plot the image from camera with all the face and eye detections marked

        # Detect the faces and eyes in image

        faces = face_cascade.detectMultiScale(frame, 1.25, 6)

        eyes = eye_cascade.detectMultiScale(frame, 1.1, 12)

        for (x,y,w,h) in faces:

            cv2.rectangle(frame, (x,y), (x+w,y+h),(255,0,0), 3)

        for (x,y,w,h) in eyes:

            cv2.rectangle(frame, (x,y), (x+w, y+h), (0,255,0), 3)

        cv2.imshow("face detection activated", frame)

        # Exit functionality - press any key to exit laptop video

        key = cv2.waitKey(20)

        if key > 0: # Exit by pressing any key

            # Release the camera and destroy windows

            vc.release()

            cv2.destroyAllWindows()

            # Make sure window closes on OSx

            for i in range (1,5):

                cv2.waitKey(1)

            return

        # Read next frame

        time.sleep(0.05) # control framerate for computation - default 20 frames per sec

        rval, frame = vc.read()

# In[8]:

# Call the laptop camera face/eye detector function above

laptop_camera_go()

# ---

#

#

# ## Step 2: De-noise an Image for Better Face Detection

# Image quality is an important aspect of any computer vision task. Typically, when creating a set of images to train a deep learning network, significant care is taken to ensure that training images are free of visual noise or artifacts that hinder object detection. While computer vision algorithms - like a face detector - are typically trained on 'nice' data such as this, new test data doesn't always look so nice!

#

# When applying a trained computer vision algorithm to a new piece of test data one often cleans it up first before feeding it in. This sort of cleaning - referred to as *pre-processing* - can include a number of cleaning phases like blurring, de-noising, color transformations, etc., and many of these tasks can be accomplished using OpenCV.

#

# In this short subsection we explore OpenCV's noise-removal functionality to see how we can clean up a noisy image, which we then feed into our trained face detector.

# ### Create a noisy image to work with

#

# In the next cell, we create an artificially noisy version of the previous multi-face image. This is a little exaggerated - we don't typically get images that are this noisy - but [image noise](https://digital-photography-school.com/how-to-avoid-and-reduce-noise-in-your-images/), or 'graininess' in a digital image, is a fairly common phenomenon.

# In[8]:

# Load in the multi-face test image again

image = cv2.imread('images/test_image_1.jpg')

# Convert the image copy to RGB colorspace

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# Make an array copy of this image

image_with_noise = np.asarray(image)

# Create noise - here we add noise sampled randomly from a Gaussian distribution: a common model for noise

noise_level = 40

noise = np.random.randn(image.shape[0],image.shape[1],image.shape[2])*noise_level

# Add this noise to the array image copy

image_with_noise = image_with_noise + noise

# Convert back to uint8 format

image_with_noise = np.asarray([np.uint8(np.clip(i,0,255)) for i in image_with_noise])

# Plot our noisy image!

fig = plt.figure(figsize = (8,8))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('Noisy Image')

ax1.imshow(image_with_noise)
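
# The noise step in the cell above can also be written in vectorized form. This is a minimal sketch under stated assumptions: the helper name `add_gaussian_noise`, the seeded generator, and the flat gray demo image are illustrative and not part of the project code.

```python
import numpy as np

def add_gaussian_noise(image, noise_level, rng):
    """Add zero-mean Gaussian noise and return a clipped uint8 image."""
    noise = rng.standard_normal(image.shape) * noise_level
    noisy = image.astype(np.float64) + noise
    # Clip before casting so out-of-range values saturate at 0 or 255
    return np.clip(noisy, 0, 255).astype(np.uint8)

demo_rng = np.random.default_rng(0)   # seeded only to make the demo repeatable
demo_flat = np.full((4, 4, 3), 128, dtype=np.uint8)
demo_noisy = add_gaussian_noise(demo_flat, 40, demo_rng)

print(demo_noisy.dtype, demo_noisy.shape)
```

# Clipping to the range 0 to 255 before converting to `uint8` keeps very bright or very dark noisy pixels from ending up with unrelated values after the cast.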

# In the context of face detection, the problem with an image like this is that - due to noise - we may miss some faces or get false detections.

#

# In the next cell we apply the same trained OpenCV detector with the same settings as before, to see what sort of detections we get.

# In[9]:

# Convert the RGB image to grayscale

gray_noise = cv2.cvtColor(image_with_noise, cv2.COLOR_RGB2GRAY)

# Extract the pre-trained face detector from an xml file

face_cascade = cv2.CascadeClassifier('detector_architectures/haarcascade_frontalface_default.xml')

# Detect the faces in image

faces = face_cascade.detectMultiScale(gray_noise, 4, 6)

# Print the number of faces detected in the image

print('Number of faces detected:', len(faces))

# Make a copy of the noisy image to draw face detections on

image_with_detections = np.copy(image_with_noise)

# Get the bounding box for each detected face

for (x,y,w,h) in faces:

    # Add a red bounding box to the detections image

    cv2.rectangle(image_with_detections, (x,y), (x+w,y+h), (255,0,0), 3)

# Display the image with the detections

fig = plt.figure(figsize = (8,8))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('Noisy Image with Face Detections')

ax1.imshow(image_with_detections)

# With this added noise we now miss one of the faces!

# ### (IMPLEMENTATION) De-noise this image for better face detection

#

# Time to get your hands dirty: using OpenCV's built in color image de-noising functionality called ```fastNlMeansDenoisingColored``` - de-noise this image enough so that all the faces in the image are properly detected. Once you have cleaned the image in the next cell, use the cell that follows to run our trained face detector over the cleaned image to check out its detections.

#

# You can find its [official documentation here](http://docs.opencv.org/trunk/d1/d79/group__photo__denoise.html#ga21abc1c8b0e15f78cd3eff672cb6c476) and [a useful example here](http://opencv-python-tutroals.readthedocs.io/en/latest/py_tutorials/py_photo/py_non_local_means/py_non_local_means.html).

#

#

# **Note:** you can keep all parameters *except* `photo_render` fixed as shown in the second link above. Play around with the value of this parameter - see how it affects the resulting cleaned image.

# In[16]:

## TODO: Use OpenCV's built in color image de-noising function to clean up our noisy image!

denoised = cv2.fastNlMeansDenoisingColored(image_with_noise, None, 17, 25,7, 21)

# Plot our de-noised image!

fig = plt.figure(figsize = (8,8))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('De-Noised Image')

ax1.imshow(denoised)

# In[17]:

## TODO: Run the face detector on the de-noised image to improve your detections and display the result

# Convert the RGB image to grayscale

gray_noise = cv2.cvtColor(denoised, cv2.COLOR_RGB2GRAY)

# Extract the pre-trained face detector from an xml file

face_cascade = cv2.CascadeClassifier('detector_architectures/haarcascade_frontalface_default.xml')

# Detect the faces in image

faces = face_cascade.detectMultiScale(gray_noise, 4, 6)

# Print the number of faces detected in the image

print('Number of faces detected:', len(faces))

# Make a copy of the de-noised image to draw face detections on

image_with_detections = np.copy(denoised)

# Get the bounding box for each detected face

for (x,y,w,h) in faces:

    # Add a red bounding box to the detections image

    cv2.rectangle(image_with_detections, (x,y), (x+w,y+h), (255,0,0), 3)

# Display the image with the detections

fig = plt.figure(figsize = (8,8))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('De-Noised Image with Face Detections')

ax1.imshow(image_with_detections)

# ---

#

#

# ## Step 3: Blur an Image and Perform Edge Detection

# Now that we have developed a simple pipeline for detecting faces using OpenCV - let's start playing around with a few fun things we can do with all those detected faces!

# ### Importance of Blur in Edge Detection

#

# Edge detection is a concept that pops up almost everywhere in computer vision applications, as edge-based features (as well as features built on top of edges) are often some of the best features for e.g., object detection and recognition problems.

#

# Edge detection is a dimension reduction technique - by keeping only the edges of an image we get to throw away a lot of non-discriminating information. And typically the most useful kind of edge-detection is one that preserves only the important, global structures (ignoring local structures that aren't very discriminative). So removing local structures / retaining global structures is a crucial pre-processing step to performing edge detection in an image, and blurring can do just that.

#

# Below is an animated gif showing the result of an edge-detected cat [taken from Wikipedia](https://en.wikipedia.org/wiki/Gaussian_blur#Common_uses), where the image is gradually blurred more and more prior to edge detection. When the animation begins you can't quite make out what it's a picture of, but as the animation evolves and local structures are removed via blurring the cat becomes visible in the edge-detected image.
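#
# The effect of blurring before edge detection can be illustrated with a toy 1-D example. This is only a sketch: it uses a box filter (moving average) as a stand-in for Gaussian blur and a simple neighbor-difference threshold as a stand-in for a real edge detector. The helper names and the synthetic signal are assumptions made for the illustration.

```python
import numpy as np

def count_edges(signal, threshold):
    """Count neighboring-sample jumps whose magnitude exceeds threshold."""
    return int(np.sum(np.abs(np.diff(signal)) > threshold))

def moving_average(signal, width):
    """Blur a 1-D signal with a box filter (a stand-in for Gaussian blur)."""
    kernel = np.ones(width) / width
    return np.convolve(signal, kernel, mode="valid")

# One true edge (0 -> 100 at index 50) plus small alternating "noise"
index = np.arange(100)
step = np.where(index >= 50, 100.0, 0.0)
noise = 10.0 * (-1.0) ** index
noisy_signal = step + noise

raw_edges = count_edges(noisy_signal, threshold=15)
blurred_edges = count_edges(moving_average(noisy_signal, width=4), threshold=15)

print("jumps flagged without blur:", raw_edges)
print("jumps flagged after blur:  ", blurred_edges)
```

# Without blurring, the local noise triggers the threshold almost everywhere; after blurring, only the short ramp around the true edge remains.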

#

#

# ---

#

#

# ## Step 2: De-noise an Image for Better Face Detection

# Image quality is an important aspect of any computer vision task. Typically, when creating a set of images to train a deep learning network, significant care is taken to ensure that training images are free of visual noise or artifacts that hinder object detection. While computer vision algorithms - like a face detector - are typically trained on 'nice' data such as this, new test data doesn't always look so nice!

#

# When applying a trained computer vision algorithm to a new piece of test data one often cleans it up first before feeding it in. This sort of cleaning - referred to as *pre-processing* - can include a number of cleaning phases like blurring, de-noising, color transformations, etc., and many of these tasks can be accomplished using OpenCV.

#

# In this short subsection we explore OpenCV's noise-removal functionality to see how we can clean up a noisy image, which we then feed into our trained face detector.

# ### Create a noisy image to work with

#

# In the next cell, we create an artificial noisy version of the previous multi-face image. This is a little exaggerated - we don't typically get images that are this noisy - but [image noise](https://digital-photography-school.com/how-to-avoid-and-reduce-noise-in-your-images/), or 'grainy-ness' in a digitial image - is a fairly common phenomenon.

# In[8]:

# Load in the multi-face test image again

image = cv2.imread('images/test_image_1.jpg')

# Convert the image copy to RGB colorspace

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# Make an array copy of this image

image_with_noise = np.asarray(image)

# Create noise - here we add noise sampled randomly from a Gaussian distribution: a common model for noise

noise_level = 40

noise = np.random.randn(image.shape[0],image.shape[1],image.shape[2])*noise_level

# Add this noise to the array image copy

image_with_noise = image_with_noise + noise

# Convert back to uint8 format

image_with_noise = np.asarray([np.uint8(np.clip(i,0,255)) for i in image_with_noise])

# Plot our noisy image!

fig = plt.figure(figsize = (8,8))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('Noisy Image')

ax1.imshow(image_with_noise)

# In the context of face detection, the problem with an image like this is that - due to noise - we may miss some faces or get false detections.

#

# In the next cell we apply the same trained OpenCV detector with the same settings as before, to see what sort of detections we get.

# In[9]:

# Convert the RGB image to grayscale

gray_noise = cv2.cvtColor(image_with_noise, cv2.COLOR_RGB2GRAY)

# Extract the pre-trained face detector from an xml file

face_cascade = cv2.CascadeClassifier('detector_architectures/haarcascade_frontalface_default.xml')

# Detect the faces in image

faces = face_cascade.detectMultiScale(gray_noise, 4, 6)

# Print the number of faces detected in the image

print('Number of faces detected:', len(faces))

# Make a copy of the orginal image to draw face detections on

image_with_detections = np.copy(image_with_noise)

# Get the bounding box for each detected face

for (x,y,w,h) in faces:

# Add a red bounding box to the detections image

cv2.rectangle(image_with_detections, (x,y), (x+w,y+h), (255,0,0), 3)

# Display the image with the detections

fig = plt.figure(figsize = (8,8))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('Noisy Image with Face Detections')

ax1.imshow(image_with_detections)

# With this added noise we now miss one of the faces!

# ### (IMPLEMENTATION) De-noise this image for better face detection

#

# Time to get your hands dirty: using OpenCV's built in color image de-noising functionality called ```fastNlMeansDenoisingColored``` - de-noise this image enough so that all the faces in the image are properly detected. Once you have cleaned the image in the next cell, use the cell that follows to run our trained face detector over the cleaned image to check out its detections.

#

# You can find its [official documentation here]([documentation for denoising](http://docs.opencv.org/trunk/d1/d79/group__photo__denoise.html#ga21abc1c8b0e15f78cd3eff672cb6c476) and [a useful example here](http://opencv-python-tutroals.readthedocs.io/en/latest/py_tutorials/py_photo/py_non_local_means/py_non_local_means.html).

#

#

# **Note:** you can keep all parameters *except* `photo_render` fixed as shown in the second link above. Play around with the value of this parameter - see how it affects the resulting cleaned image.

# In[16]:

## TODO: Use OpenCV's built in color image de-noising function to clean up our noisy image!

denoised = cv2.fastNlMeansDenoisingColored(image_with_noise, None, 17, 25,7, 21)

# Plot our noisy image!

fig = plt.figure(figsize = (8,8))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('De-Noised Image')

ax1.imshow(denoised)

# In[17]:

## TODO: Run the face detector on the de-noised image to improve your detections and display the result

# Convert the RGB image to grayscale

gray_noise = cv2.cvtColor(denoised, cv2.COLOR_RGB2GRAY)

# Extract the pre-trained face detector from an xml file

face_cascade = cv2.CascadeClassifier('detector_architectures/haarcascade_frontalface_default.xml')

# Detect the faces in image

faces = face_cascade.detectMultiScale(gray_noise, 4, 6)

# Print the number of faces detected in the image

print('Number of faces detected:', len(faces))

# Make a copy of the original image to draw face detections on

image_with_detections = np.copy(denoised)

# Get the bounding box for each detected face

for (x,y,w,h) in faces:

    # Add a red bounding box to the detections image

    cv2.rectangle(image_with_detections, (x,y), (x+w,y+h), (255,0,0), 3)

# Display the image with the detections

fig = plt.figure(figsize = (8,8))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('De-Noised Image with Face Detections')

ax1.imshow(image_with_detections)

# ---

#

#

# ## Step 3: Blur an Image and Perform Edge Detection

# Now that we have developed a simple pipeline for detecting faces using OpenCV - let's start playing around with a few fun things we can do with all those detected faces!

# ### Importance of Blur in Edge Detection

#

# Edge detection is a concept that pops up almost everywhere in computer vision applications, as edge-based features (as well as features built on top of edges) are often some of the best features for e.g., object detection and recognition problems.

#

# Edge detection is a dimension reduction technique - by keeping only the edges of an image we get to throw away a lot of non-discriminating information. And typically the most useful kind of edge-detection is one that preserves only the important, global structures (ignoring local structures that aren't very discriminative). So removing local structures / retaining global structures is a crucial pre-processing step to performing edge detection in an image, and blurring can do just that.

#

# Below is an animated gif showing the result of an edge-detected cat [taken from Wikipedia](https://en.wikipedia.org/wiki/Gaussian_blur#Common_uses), where the image is gradually blurred more and more prior to edge detection. When the animation begins you can't quite make out what it's a picture of, but as the animation evolves and local structures are removed via blurring the cat becomes visible in the edge-detected image.

#

#

# Edge detection is a **convolution** performed on the image itself, and you can read about Canny edge detection on [this OpenCV documentation page](http://docs.opencv.org/2.4/doc/tutorials/imgproc/imgtrans/canny_detector/canny_detector.html).
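
#

# To make the convolution idea concrete, here is a minimal NumPy sketch (an illustration only, not how `cv2.Canny` is implemented) that slides a Sobel-style horizontal-gradient kernel over a tiny synthetic image. The helper name `correlate2d_valid` and the test image are assumptions made for this example. Like `cv2.filter2D`, it does not flip the kernel, so strictly speaking it computes a correlation. The response is non-zero only in windows that straddle the vertical edge.

```python
import numpy as np

def correlate2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding) and sum the elementwise products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=np.float64)
    for r in range(out_h):
        for c in range(out_w):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

# Synthetic 5x6 image: dark left half (0), bright right half (1)
step_image = np.zeros((5, 6))
step_image[:, 3:] = 1.0

# Sobel-style kernel that responds to left-to-right intensity changes
sobel_x = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)

response = correlate2d_valid(step_image, sobel_x)
print(response)
```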

# ### Canny edge detection

#

# In the cell below we load in a test image, then apply *Canny edge detection* on it. The original image is shown on the left panel of the figure, while the edge-detected version of the image is shown on the right. Notice how the result looks very busy - there are too many little details preserved in the image before it is sent to the edge detector. When applied in computer vision applications, edge detection should preserve *global* structure while discarding local structures that don't help describe what objects are in the image.

# In[18]:

# Load in the image

image = cv2.imread('images/fawzia.jpg')

# Convert to RGB colorspace

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# Convert to grayscale

gray = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

# Perform Canny edge detection

edges = cv2.Canny(gray,100,200)

# Dilate the image to amplify edges

edges = cv2.dilate(edges, None)

# Plot the RGB and edge-detected image

fig = plt.figure(figsize = (15,15))

ax1 = fig.add_subplot(121)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('Original Image')

ax1.imshow(image)

ax2 = fig.add_subplot(122)

ax2.set_xticks([])

ax2.set_yticks([])

ax2.set_title('Canny Edges')

ax2.imshow(edges, cmap='gray')

# Without first blurring the image, and removing small, local structures, a lot of irrelevant edge content gets picked up and amplified by the detector (as shown in the right panel above).

# ### (IMPLEMENTATION) Blur the image *then* perform edge detection

#

# In the next cell, you will repeat this experiment - blurring the image first to remove these local structures, so that only the important boundary details remain in the edge-detected image.

#

# Blur the image by using OpenCV's ```filter2D``` functionality - which is discussed in [this documentation page](http://docs.opencv.org/3.1.0/d4/d13/tutorial_py_filtering.html) - and use an *averaging kernel* of width equal to 4.
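
#

# As a rough illustration of what an averaging kernel does (a NumPy-only sketch; the notebook itself uses `cv2.filter2D`), the snippet below applies a 4x4 averaging kernel to a noisy flat patch. The helper `box_blur_valid`, the random seed, and the patch size are assumptions made for this example. Each output pixel is the mean of a 4x4 neighbourhood, so pixel-to-pixel noise shrinks while a constant region is left unchanged.

```python
import numpy as np

def box_blur_valid(image, width):
    """Average every width x width neighbourhood (no padding at the borders)."""
    # Every weight is 1 / width^2, so the weights sum to 1
    kernel = np.ones((width, width), dtype=np.float64) / (width * width)
    out_h = image.shape[0] - width + 1
    out_w = image.shape[1] - width + 1
    out = np.empty((out_h, out_w), dtype=np.float64)
    for r in range(out_h):
        for c in range(out_w):
            out[r, c] = np.sum(image[r:r + width, c:c + width] * kernel)
    return out

rng = np.random.default_rng(0)
noisy_patch = 100.0 + rng.normal(0.0, 10.0, size=(32, 32))  # flat gray plus noise

blurred_patch = box_blur_valid(noisy_patch, 4)
print(noisy_patch.std(), blurred_patch.std())
```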

# In[19]:

### TODO: Blur the test image using OpenCV's filter2D functionality,

# Use an averaging kernel, and a kernel width equal to 4

image = cv2.imread('images/fawzia.jpg')

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

gray = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

blur = np.copy(gray)

kernal = np.ones((4,4), dtype=np.float32)/16

blur = cv2.filter2D(blur, -1, kernal)

## TODO: Then perform Canny edge detection and display the output

edges = cv2.Canny(blur, 100, 200)

edges = cv2.dilate(edges, None)

fig = plt.figure(figsize=(15,15))

ax1 = fig.add_subplot(121)

ax2 = fig.add_subplot(122)

ax1.set_title('Blurred Image')

ax1.imshow(blur, cmap='gray')

ax2.set_title('Canny Edges')

ax2.imshow(edges, cmap='gray')

# ---

#

#

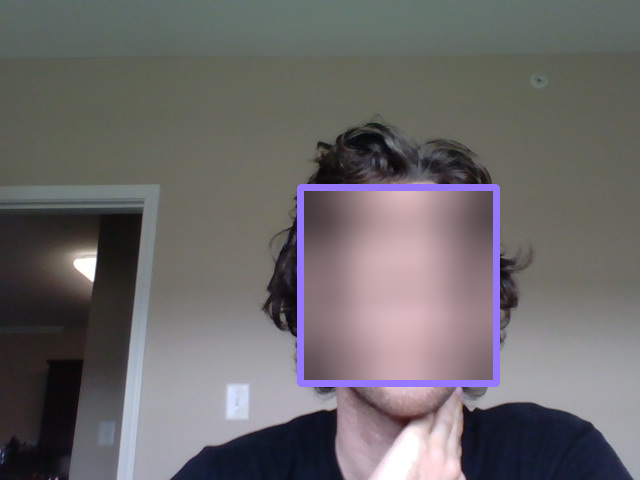

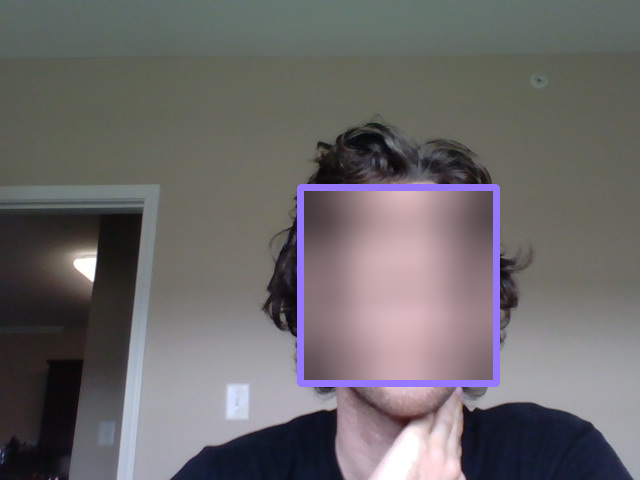

# ## Step 4: Automatically Hide the Identity of an Individual

#

# If you film something like a documentary or reality TV, you must get permission from every individual shown on film before you can show their face; otherwise you need to blur it out, and blur it heavily (so much so that even the global structures are obscured)! This is also true for projects like [Google's StreetView maps](https://www.google.com/streetview/) - an enormous collection of mapping images taken from a fleet of Google vehicles. Because it would be impossible for Google to get the permission of every single person accidentally captured in one of these images, the faces of detected people must be blurred automatically. Here are a few examples of folks caught in the camera of a Google street view vehicle.

#

#

#

# ### Read in an image to perform identity detection

#

# Let's try this out for ourselves. Use the face detection pipeline built above together with what you know about using ```filter2D``` to blur an image, and hide the identity of the person in the following image - loaded in and displayed in the next cell.

# In[20]:

# Load in the image

image = cv2.imread('images/gus.jpg')

# Convert the image to RGB colorspace

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# Display the image

fig = plt.figure(figsize = (6,6))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('Original Image')

ax1.imshow(image)

# ### (IMPLEMENTATION) Use blurring to hide the identity of an individual in an image

#

# The idea here is to 1) automatically detect the face in this image, and then 2) blur it out! Make sure to adjust the parameters of the *averaging* blur filter to completely obscure this person's identity.
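
#

# The slicing step is where mistakes tend to creep in: a detection `(x, y, w, h)` covers rows `y:y+h` and columns `x:x+w`. The sketch below shows that indexing on a synthetic array. To stay self-contained it replaces the region with its mean colour (the limiting case of a very large averaging kernel) rather than calling `cv2.filter2D`. The function name `obscure_region` and the fake detection are assumptions for this example.

```python
import numpy as np

def obscure_region(image, box):
    """Return a copy of `image` with the (x, y, w, h) box replaced by its mean colour."""
    x, y, w, h = box
    out = image.copy()
    roi = out[y:y + h, x:x + w]            # rows use y/h, columns use x/w
    mean_colour = roi.reshape(-1, roi.shape[-1]).mean(axis=0)
    out[y:y + h, x:x + w] = mean_colour.astype(image.dtype)
    return out

# Synthetic 40x60 RGB image with random content and one fake "detection"
rng = np.random.default_rng(1)
image_demo = rng.integers(0, 256, size=(40, 60, 3), dtype=np.uint8)
fake_face = (10, 5, 30, 20)                # x, y, w, h

hidden = obscure_region(image_demo, fake_face)
print(hidden[5, 10], hidden[24, 39])       # same colour at opposite corners of the box
```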

# In[21]:

## TODO: Implement face detection

gray = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

face_cascade = cv2.CascadeClassifier('detector_architectures/haarcascade_frontalface_default.xml')

faces = face_cascade.detectMultiScale(gray,4,4)

print('Number of faces detected:', len(faces))

image_blurred = np.copy(image)

kernal = np.ones((100,100), dtype=np.float32)/10000

## TODO: Blur the bounding box around each detected face using an averaging filter and display the result

for (x,y,w,h) in faces:

    # Rows span the box height (h) and columns span its width (w)

    image_blurred[y:y+h,x:x+w] = cv2.filter2D(image_blurred[y:y+h,x:x+w], -1, kernal)

    cv2.rectangle(image_blurred, (x,y), (x+w,y+h),(150,120,255), 10)

# Display Image

fig = plt.figure(figsize=(10,10))

ax1 = fig.add_subplot(111)

ax1.set_xticks([])

ax1.set_yticks([])

ax1.set_title('Face Blurred Image')

plt.imshow(image_blurred)

# ### (Optional) Build identity protection into your laptop camera

#

# In this optional task you can add identity protection to your laptop camera, using the previously completed code where you added face detection to your laptop camera - and the task above. You should be able to get reasonable results with little parameter tuning - like the one shown in the gif below.

#

#

#

# As with the previous video task, to make this perfect would require significant effort - so don't strive for perfection here, strive for reasonable quality.

#

# The next cell contains a wrapper function, ``laptop_camera_go``, that - when called - will activate your laptop's camera. You need to place the relevant face detection and blurring code developed above in this function in order to blur faces entering your laptop camera's field of view.

#

# Before adding anything to the function you can call it to get a hang of how it works - a small window will pop up showing you the live feed from your camera, you can press any key to close this window.

#

# **Note:** Mac users may find that activating this function kills the kernel of their notebook every once in a while. If this happens to you, just restart your notebook's kernel, activate cell(s) containing any crucial import statements, and you'll be good to go!

# In[22]:

### Insert face detection and blurring code into the wrapper below to create an identity protector on your laptop!

import cv2

import time

def laptop_camera_go():

    # Create instance of video capturer

    cv2.namedWindow("face detection activated")

    vc = cv2.VideoCapture(0)

    # Try to get the first frame

    if vc.isOpened():

        rval, frame = vc.read()

    else:

        rval = False

    # Keep video stream open

    while rval:

        # Blur and outline each detected face (uses face_cascade and kernal from the cells above)

        faces = face_cascade.detectMultiScale(frame, 1.25, 6)

        for (x,y,w,h) in faces:

            frame[y:y+h,x:x+w] = cv2.filter2D(frame[y:y+h,x:x+w], -1, kernal)

            cv2.rectangle(frame, (x,y), (x+w,y+h),(255,120,150), 5)

        cv2.imshow("face detection activated", frame)

        # Exit functionality - press any key to exit laptop video

        key = cv2.waitKey(20)

        if key > 0: # Exit by pressing any key

            # Release the camera and destroy windows

            vc.release()

            cv2.destroyAllWindows()

            for i in range (1,5):

                cv2.waitKey(1)

            return

        # Read next frame

        time.sleep(0.05) # control framerate for computation - default 20 frames per sec

        rval, frame = vc.read()

# In[22]:

# Run laptop identity hider

laptop_camera_go()

# ---

#

#

# ## Step 5: Create a CNN to Recognize Facial Keypoints

#

# OpenCV is often used in practice with other machine learning and deep learning libraries to produce interesting results. In this stage of the project you will create your own end-to-end pipeline - employing convolutional networks in Keras along with OpenCV - to apply a "selfie" filter to streaming video and images.

#

# You will start by creating and then training a convolutional network that can detect facial keypoints in a small dataset of cropped images of human faces. We then guide you towards using OpenCV to expand your detection algorithm to more general images. What are facial keypoints? Let's take a look at some examples.

#

#

# Facial keypoints (also called facial landmarks) are the small blue-green dots shown on each of the faces in the image above - there are 15 keypoints marked in each image. They mark important areas of the face - the eyes, corners of the mouth, the nose, etc. Facial keypoints can be used in a variety of machine learning applications from face and emotion recognition to commercial applications like the image filters popularized by Snapchat.

#

# Below we illustrate a filter that, using the results of this section, automatically places sunglasses on people in images (using the facial keypoints to place the glasses correctly on each face). Here, the facial keypoints have been colored lime green for visualization purposes.

#

# ### Make a facial keypoint detector

#

# But first things first: how can we make a facial keypoint detector? Well, at a high level, notice that facial keypoint detection is a *regression problem*. A single face corresponds to a set of 15 facial keypoints (15 $(x, y)$ coordinate pairs, which together form one 30-value output point). Because our input data are images, we can employ a *convolutional neural network* to recognize patterns in our images and learn how to identify these keypoints given sets of labeled data.
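
#

# A small sketch of what the regression target looks like: 15 $(x, y)$ pairs flattened into one 30-entry vector and scored with mean squared error. The interleaved `[x0, y0, x1, y1, ...]` layout matches the `[0::2]` / `[1::2]` slicing used later in this notebook; the random keypoints and the helper names are assumptions for illustration.

```python
import numpy as np

def pack_keypoints(points):
    """Flatten an array of shape (15, 2) into [x0, y0, x1, y1, ...] of length 30."""
    return np.asarray(points, dtype=np.float64).reshape(-1)

def unpack_keypoints(vector):
    """Inverse of pack_keypoints: length-30 vector -> (15, 2) array of (x, y)."""
    return np.asarray(vector, dtype=np.float64).reshape(-1, 2)

def mean_squared_error(y_true, y_pred):
    """Average squared difference over all coordinates."""
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))

rng = np.random.default_rng(2)
true_points = rng.uniform(0, 96, size=(15, 2))     # made-up pixel coordinates
target = pack_keypoints(true_points)
prediction = target + 1.0                          # off by one pixel everywhere

print(target.shape, mean_squared_error(target, prediction))
```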

#

# In order to train a regressor, we need a training set - a set of facial image / facial keypoint pairs to train on. For this we will be using [this dataset from Kaggle](https://www.kaggle.com/c/facial-keypoints-detection/data). We've already downloaded this data and placed it in the `data` directory. Make sure that you have both the *training* and *test* data files. The training dataset contains several thousand $96 \times 96$ grayscale images of cropped human faces, along with each face's 15 corresponding facial keypoints (also called landmarks) that have been placed by hand, and recorded in $(x, y)$ coordinates. This wonderful resource also has a substantial testing set, which we will use in tinkering with our convolutional network.

#

# To load in this data, run the Python cell below - notice we will load in both the training and testing sets.

#

# The `load_data` function is in the included `utils.py` file.

# In[87]:

from utils import *

# Load training set

X_train, y_train = load_data()

print("X_train.shape == {}".format(X_train.shape))

print("y_train.shape == {}; y_train.min == {:.3f}; y_train.max == {:.3f}".format(

y_train.shape, y_train.min(), y_train.max()))

# Load testing set

X_test, _ = load_data(test=True)

print("X_test.shape == {}".format(X_test.shape))

# The `load_data` function in `utils.py` originates from this excellent [blog post](http://danielnouri.org/notes/2014/12/17/using-convolutional-neural-nets-to-detect-facial-keypoints-tutorial/), which you are *strongly* encouraged to read. Please take the time now to review this function. Note how the output values - that is, the coordinates of each set of facial landmarks - have been normalized to take on values in the range $[-1, 1]$, while the pixel values of each input point (a facial image) have been normalized to the range $[0,1]$.
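
#

# The normalization described above amounts to two affine maps. The sketch below assumes 96x96 images with 8-bit pixels, so coordinates are centred on 48 and divided by 48, and pixels are divided by 255. These constants are consistent with the `* 48 + 48` un-normalization used later in this notebook, but check `load_data` in `utils.py` for the exact code. The function names are hypothetical.

```python
import numpy as np

IMAGE_SIZE = 96            # assumed image width/height in pixels
HALF = IMAGE_SIZE / 2.0    # 48.0

def normalize_keypoints(pixel_coords):
    """Map pixel coordinates in [0, 96] to [-1, 1]."""
    return (np.asarray(pixel_coords, dtype=np.float64) - HALF) / HALF

def unnormalize_keypoints(normalized_coords):
    """Inverse map, matching the `* 48 + 48` used when plotting."""
    return np.asarray(normalized_coords, dtype=np.float64) * HALF + HALF

def normalize_pixels(image_uint8):
    """Scale 8-bit pixel intensities from [0, 255] to [0, 1]."""
    return np.asarray(image_uint8, dtype=np.float64) / 255.0

corners = np.array([0.0, 48.0, 96.0])
print(normalize_keypoints(corners))
print(unnormalize_keypoints(normalize_keypoints(corners)))
```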

#

# Note: the original Kaggle dataset contains some images with several missing keypoints. For simplicity, the `load_data` function removes those images with missing labels from the dataset. As an __*optional*__ extension, you are welcome to amend the `load_data` function to include the incomplete data points.

# ### Visualize the Training Data

#

# Execute the code cell below to visualize a subset of the training data.

# In[88]:

# Make a true (labeled) test set by holding out 500 shuffled training examples

from sklearn.utils import shuffle

X_train, y_train = shuffle(X_train, y_train)

X_test, y_test = X_train[:500], y_train[:500]

X_train, y_train = X_train[500:], y_train[500:]

# In[89]:

import matplotlib.pyplot as plt

get_ipython().run_line_magic('matplotlib', 'inline')

fig = plt.figure(figsize=(20,20))

fig.subplots_adjust(left=0, right=1, bottom=0, top=1, hspace=0.05, wspace=0.05)

for i in range(9):

    ax = fig.add_subplot(3, 3, i + 1, xticks=[], yticks=[])

    plot_data(X_train[i], y_train[i], ax)

# In[90]:

print(X_train.shape, X_test.shape)

# ### Data Augmentation

# #### Rotation and Scaling

# In[91]:

image_number = 7

scale_factor = .9

rotation = 15 # In degrees

# Unnormalize the keypoints

keypoints = y_train[image_number] * 48 + 48

# Use openCV to get a rotation matrix

M = cv2.getRotationMatrix2D((48,48), rotation, scale_factor)

dst = cv2.warpAffine(np.squeeze(X_train[image_number]),M,(96,96))

new_keypoints = np.zeros(30)

# Apply the same affine transform to each (x, y) keypoint

for i in range(15):

    coord_idx = 2*i

    old_coord = keypoints[coord_idx:coord_idx+2]

    new_coord = np.matmul(M,np.append(old_coord,1))

    new_keypoints[coord_idx] += new_coord[0]

    new_keypoints[coord_idx+1] += new_coord[1]

# Plot the image and the augmented image

fig = plt.figure(figsize=(12,12))

ax = fig.add_subplot(121)

ax.imshow(np.squeeze(X_train[image_number]), cmap='gray')

ax.scatter(keypoints[0::2],

keypoints[1::2],

marker='o',

c='c',

s=20)

ax2 = fig.add_subplot(122)

ax2.imshow(dst, cmap='gray')

ax2.scatter(new_keypoints[0::2],

new_keypoints[1::2],

marker='o',

c='c',

s=20)
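
# The per-keypoint loop above can also be written as a single matrix product. The sketch below rebuilds the 2x3 matrix in NumPy using the formula given in the OpenCV documentation for `getRotationMatrix2D` (so it runs without OpenCV), then applies it to all keypoints at once. The function names and sample points are assumptions for this example.

```python
import numpy as np

def rotation_matrix_2d(center, angle_deg, scale):
    """2x3 affine matrix for rotation about `center` with isotropic scaling."""
    cx, cy = center
    alpha = scale * np.cos(np.deg2rad(angle_deg))
    beta = scale * np.sin(np.deg2rad(angle_deg))
    return np.array([[alpha, beta, (1 - alpha) * cx - beta * cy],
                     [-beta, alpha, beta * cx + (1 - alpha) * cy]])

def transform_keypoints(flat_keypoints, M):
    """Apply the affine matrix M to interleaved [x0, y0, x1, y1, ...] pixel coordinates."""
    pts = np.asarray(flat_keypoints, dtype=np.float64).reshape(-1, 2)
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])   # shape (N, 3)
    return (homogeneous @ M.T).reshape(-1)

M_demo = rotation_matrix_2d((48, 48), 15, 0.9)
demo_keypoints = np.array([48.0, 48.0, 58.0, 48.0])  # the centre, and a point 10 px to its right
moved = transform_keypoints(demo_keypoints, M_demo)
print(moved)
```

# The centre stays fixed, and the second point ends up 9 pixels from the centre (10 pixels scaled by 0.9), which is a quick way to check that labels and image are transformed consistently.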

# #### Horizontal Reflection

# The process for flipping the data horizontally is from this [blog post](http://danielnouri.org/notes/2014/12/17/using-convolutional-neural-nets-to-detect-facial-keypoints-tutorial/).
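
#

# As a compact illustration of the label side of a horizontal flip (a sketch using the same index pairs as the cells below, with a made-up label vector): negate every normalized x coordinate, then swap each left/right pair so that each slot still describes the same side of the flipped image. Applying the flip twice should return the original labels, which makes a handy sanity check. The function name `flip_labels` is an assumption for this example.

```python
import numpy as np

FLIP_PAIRS = [
    (0, 2), (1, 3),
    (4, 8), (5, 9), (6, 10), (7, 11),
    (12, 16), (13, 17), (14, 18), (15, 19),
    (22, 24), (23, 25),
]

def flip_labels(labels):
    """Mirror one normalized 30-entry label vector about the vertical axis."""
    flipped = np.array(labels, dtype=np.float64)   # work on a copy
    flipped[0::2] *= -1                            # x -> -x in [-1, 1] coordinates
    for a, b in FLIP_PAIRS:
        flipped[a], flipped[b] = flipped[b], flipped[a]
    return flipped

rng = np.random.default_rng(3)
labels_demo = rng.uniform(-1, 1, size=30)          # made-up labels
flipped_once = flip_labels(labels_demo)
flipped_twice = flip_labels(flipped_once)
print(np.allclose(flipped_twice, labels_demo))
```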

# In[92]:

image_number = 12

y_train[image_number]

# In[93]:

keypoints = y_train[image_number]

new_keypoints = np.zeros(30)

new_keypoints += keypoints

new_keypoints[0::2] *= -1

# Flip the indices of the left right keypoints

flip_indices = [

(0, 2), (1, 3),

(4, 8), (5, 9), (6, 10), (7, 11),

(12, 16), (13, 17), (14, 18), (15, 19),

(22, 24), (23, 25),

]

for a,b in flip_indices:

    new_keypoints[a], new_keypoints[b] = new_keypoints[b], new_keypoints[a]

keypoints = keypoints * 48 + 48

new_keypoints = new_keypoints * 48 + 48

# Flip the image horizontally

flipped_img = X_train[image_number][:,::-1,:]

# In[94]:

y_train[image_number]

# In[95]:

# Unnormalize keypoints for plotting

fig = plt.figure(figsize=(12,12))

ax = fig.add_subplot(121)

ax.imshow(np.squeeze(X_train[image_number]), cmap='gray')

ax.set_title('Original Image')

ax.scatter(keypoints[0::2],

keypoints[1::2],

marker='o',

c='c',

s=20)

ax2 = fig.add_subplot(122)

ax2.imshow(np.squeeze(flipped_img), cmap='gray')

ax2.set_title('Flipped Image')

ax2.scatter(new_keypoints[0::2],

new_keypoints[1::2],

marker='o',

c='c',

s=20)

# The plot above shows that the coordinate for the right eye (the person's left) accurately identifies that eye in both the normal and reflected images.

# #### Final Random Transformation Function

# Here the augmentation process outlined above is organized into several functions to augment the data.

# In[96]:

def scale_rotate_transform(data, labels, rotation_range=10, scale_range=.1):

    '''

    Scales and rotates an image and the keypoints.

    '''

    aug_data = np.copy(data)

    aug_labels = np.copy(labels)

    # Apply rotation and scale transform

    for i in range(len(data)):

        # Unnormalize the keypoints

        aug_labels[i] = aug_labels[i]*48 + 48

        scale_factor = 1.0 + (np.random.uniform(-1,1)) * scale_range

        rotation_factor = (np.random.uniform(-1,1)) * rotation_range

        # Use openCV to get a rotation matrix

        M = cv2.getRotationMatrix2D((48,48), rotation_factor, scale_factor)

        aug_data[i] = np.expand_dims(cv2.warpAffine(np.squeeze(aug_data[i]),M,(96,96)), axis=2)

        for j in range(15):

            coord_idx = 2*j

            old_coord = aug_labels[i][coord_idx:coord_idx+2]

            new_coord = np.matmul(M,np.append(old_coord,1))

            aug_labels[i][coord_idx] = new_coord[0]

            aug_labels[i][coord_idx+1] = new_coord[1]

        #normalize aug_labels

        aug_labels[i] = (aug_labels[i] - 48)/48

    return aug_data, aug_labels

# In[97]:

def horizontal_flip(data, labels):

    '''

    Takes an image set and keypoint labels and flips them horizontally.

    '''

    # Flip the images horizontally

    flipped_data = np.copy(data)[:,:,::-1,:]

    flipped_labels = np.zeros(labels.shape)

    for i in range(data.shape[0]):

        # Flip the x coordinates of the key points

        flipped_labels[i] += labels[i]

        flipped_labels[i, 0::2] *= -1

        # Flip the indices of the left right keypoints

        flip_indices = [

            (0, 2), (1, 3),

            (4, 8), (5, 9), (6, 10), (7, 11),

            (12, 16), (13, 17), (14, 18), (15, 19),

            (22, 24), (23, 25),

            ]

        for a,b in flip_indices:

            flipped_labels[i,a], flipped_labels[i,b] = flipped_labels[i,b], flipped_labels[i,a]

    return flipped_data, flipped_labels

# In[98]:

def data_augmentation(data, labels, rotation_range=10, scale_range=.1, h_flip=True):

    '''

    Takes in the images and keypoints and applies a random rotation and scaling. Then flips the images

    and keypoints horizontally if specified.

    '''

    aug_data, aug_labels = scale_rotate_transform(data, labels, rotation_range, scale_range)

    if h_flip:

        aug_data, aug_labels = horizontal_flip(aug_data, aug_labels)

    return aug_data, aug_labels

# #### Augment the Dataset

# To augment the dataset, the original data and the horizontal reflection of the dataset are combined.

# In[99]:

data_hflip, labels_hflip = data_augmentation(X_train, y_train, 0.0, 0.0, True)

# In[100]:

keypoints = y_train[image_number]*48 +48

new_keypoints = labels_hflip[image_number]*48+48

fig = plt.figure(figsize=(12,12))

ax = fig.add_subplot(121)

ax.imshow(np.squeeze(X_train[image_number]), cmap='gray')

ax.scatter(keypoints[0::2],

keypoints[1::2],

marker='o',

c='c',

s=20)

ax2 = fig.add_subplot(122)

ax2.imshow(np.squeeze(data_hflip[image_number]), cmap='gray')

ax2.scatter(new_keypoints[0::2],

new_keypoints[1::2],

marker='o',

c='c',

s=20)

# In[101]:

X_aug = np.concatenate((X_train, data_hflip), axis=0)

y_aug = np.concatenate((y_train, labels_hflip), axis=0)

# Now that the original dataset is doubled, two randomly scaled and rotated versions of it are created. Once both are appended a few cells below, the augmented dataset is 6 times the size of the original training set.

# In[102]:

X_train_transformed, y_train_transformed = data_augmentation(X_aug, y_aug, 15.0, .1, False)

X_train_transformed2, y_train_transformed2 = data_augmentation(X_aug, y_aug, 15.0, .1, False)

# Below is a sample of the transformed images and their keypoints.

# In[103]:

fig = plt.figure(figsize=(20,20))

fig.subplots_adjust(left=0, right=1, bottom=0, top=1, hspace=0.05, wspace=0.05)

for i in range(9):

    ax = fig.add_subplot(3, 3, i + 1, xticks=[], yticks=[])

    plot_data(X_train_transformed[i], y_train_transformed[i], ax)

# In[104]:

X_aug = np.concatenate((X_aug, X_train_transformed, X_train_transformed2), axis=0)

y_aug = np.concatenate((y_aug, y_train_transformed, y_train_transformed2), axis=0)

# In[105]:

X_aug.shape

# Let's repeat the above to really increase the amount of data our model is training on.

# In[106]:

X_train_transformed, y_train_transformed = data_augmentation(X_aug, y_aug, 15.0, .1, False)

X_train_transformed2, y_train_transformed2 = data_augmentation(X_aug, y_aug, 15.0, .1, False)

# In[107]:

X_aug = np.concatenate((X_aug, X_train_transformed, X_train_transformed2), axis=0)

y_aug = np.concatenate((y_aug, y_train_transformed, y_train_transformed2), axis=0)

# In[108]:

X_aug.shape
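
# To keep track of how quickly the augmented set grows, the arithmetic can be sketched in a few lines. The starting size `n_original` is a placeholder, not the real size of `X_train`; only the multipliers matter. The flip doubles the data, and each later round appends two transformed copies of everything accumulated so far, which triples it.

```python
def augmented_size(n_original, rounds):
    """Number of samples after the flip plus `rounds` scale/rotate rounds."""
    size = 2 * n_original          # original + horizontally flipped copy
    for _ in range(rounds):
        size = size + 2 * size     # append two transformed copies of the current set
    return size

n_original = 1000                  # placeholder value for illustration
for rounds in range(3):
    multiplier = augmented_size(n_original, rounds) // n_original
    print("rounds:", rounds, "multiplier:", multiplier)
```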

# For each training image, there are two landmarks per eyebrow (**four** total), three per eye (**six** total), **four** for the mouth, and **one** for the tip of the nose.

#

# Review the `plot_data` function in `utils.py` to understand how the 30-dimensional training labels in `y_train` are mapped to facial locations, as this function will prove useful for your pipeline.

# ### (IMPLEMENTATION) Specify the CNN Architecture

#

# In this section, you will specify a neural network for predicting the locations of facial keypoints. Use the code cell below to specify the architecture of your neural network. We have imported some layers that you may find useful for this task, but if you need to use more Keras layers, feel free to import them in the cell.

#

# Your network should accept a $96 \times 96$ grayscale image as input, and it should output a vector with 30 entries, corresponding to the predicted (horizontal and vertical) locations of 15 facial keypoints. If you are not sure where to start, you can find some useful starting architectures in [this blog](http://danielnouri.org/notes/2014/12/17/using-convolutional-neural-nets-to-detect-facial-keypoints-tutorial/), but you are not permitted to copy any of the architectures that you find online.

# In[207]:

# Import deep learning resources from Keras

from keras.models import Sequential

from keras.layers import Conv2D, MaxPooling2D, Dropout, GlobalAveragePooling2D, BatchNormalization

from keras.layers import Flatten, Dense

## TODO: Specify a CNN architecture

# Your model should accept 96x96 pixel grayscale images as input

# It should have a fully-connected output layer with 30 values (2 for each facial keypoint)

model = Sequential()

# Conv layer1

model.add(Conv2D(32, 3, strides=(1,1), padding='same', activation='elu', input_shape=(96,96,1)))

model.add(BatchNormalization())

model.add(Dropout(.2))

model.add(MaxPooling2D((2,2), strides= 2, padding='same'))

# Conv layer2

model.add(Conv2D(64, 3, strides=(1,1), padding='same', activation='elu'))

model.add(BatchNormalization())

model.add(Dropout(.2))

model.add(MaxPooling2D((2,2), strides= 2, padding='same'))

# Conv layer3

model.add(Conv2D(128, 3, strides=(1,1), padding='same', activation='elu'))

model.add(BatchNormalization())

model.add(Dropout(.2))

model.add(MaxPooling2D((2,2), strides= 2, padding='same'))

# Conv layer4

model.add(Conv2D(256, 3, strides=(1,1), padding='same', activation='elu'))

model.add(BatchNormalization())

model.add(MaxPooling2D((2,2), strides= 2, padding='same'))

# Conv layer5

model.add(Conv2D(256, 3, strides=(1,1), padding='same', activation='elu'))

model.add(BatchNormalization())

model.add(MaxPooling2D((2,2), strides= 2, padding='same'))

# Global average pooling (used here in place of a Flatten layer)

model.add(GlobalAveragePooling2D())

model.add(BatchNormalization())

# Fully connected output layer

model.add(Dense(30, activation='elu'))

# Summarize the model

model.summary()
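# To make the shapes reported by `model.summary()` easier to follow, here is a small sketch that traces the spatial size through the five pooling stages. It relies on the fact that a stride-1 convolution with `padding='same'` keeps the spatial size, and that pooling with `padding='same'` produces `ceil(input / stride)` outputs. The helper names are illustrative only.

```python
import math

def pooled_size(size, stride=2):
    # With padding='same', the output length is ceil(input / stride)
    return math.ceil(size / stride)

def trace_spatial_sizes(input_size=96, num_blocks=5):
    """Return the spatial size before and after each conv/pool block."""
    sizes = [input_size]
    for _ in range(num_blocks):
        # The stride-1 'same' convolution leaves the size unchanged,
        # so only the pooling layer shrinks the feature map.
        sizes.append(pooled_size(sizes[-1]))
    return sizes

print(trace_spatial_sizes())  # [96, 48, 24, 12, 6, 3]
```

# The final feature map is therefore $3 \times 3 \times 256$, which `GlobalAveragePooling2D` averages into a 256-dimensional vector before the 30-unit output layer.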

# ---

#

#

# ## Step 6: Compile and Train the Model

#

# After specifying your architecture, you'll need to compile and train the model to detect facial keypoints.

# ### (IMPLEMENTATION) Compile and Train the Model

#

# Use the `compile` [method](https://keras.io/models/sequential/#sequential-model-methods) to configure the learning process. Experiment with your choice of [optimizer](https://keras.io/optimizers/); you may have some ideas about which will work best (`SGD` vs. `RMSprop`, etc), but take the time to empirically verify your theories.

#

# Use the `fit` [method](https://keras.io/models/sequential/#sequential-model-methods) to train the model. Break off a validation set by setting `validation_split=0.2`. Save the returned `History` object in the `history` variable.

#

# Experiment with your model to minimize the validation loss (measured as mean squared error). A very good model will achieve about 0.0015 loss (though it's possible to do even better). When you have finished training, [save your model](https://keras.io/getting-started/faq/#how-can-i-save-a-keras-model) as an HDF5 file with file path `my_model.h5`.
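# To get a feel for what a loss of 0.0015 means, the sketch below converts a mean squared error on normalized labels into an approximate root-mean-square error in pixels. It assumes the labels are scaled by 48 (half of the 96-pixel image width), which should be verified against the data loading code; the function name is hypothetical.

```python
import math

def mse_to_pixel_rmse(mse, half_size=48.0):
    """Convert MSE on normalized labels to an RMS error in pixels.

    Assumption: normalized = (pixel - half_size) / half_size.
    """
    return math.sqrt(mse) * half_size

print(round(mse_to_pixel_rmse(0.0015), 2))  # 1.86
```

# Under that assumption, a validation loss of 0.0015 corresponds to keypoints that are off by a little under two pixels on average.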

# In[268]:

from keras.optimizers import SGD, RMSprop, Adagrad, Adadelta, Adam, Adamax, Nadam

learning_rates = [.001, .0001, .00001]

batch_sizes = [32, 64, 128]

full_loss_hist = []

full_val_loss =[]

for lr in learning_rates:

    ## TODO: Compile the model

    model.compile(optimizer=Adam(lr=lr), loss='mean_squared_error')

    for batch_size in batch_sizes:

        print('learning rate: {}, batch size: {}'.format(lr, batch_size))

        ## TODO: Train the model

        hist = model.fit(X_aug, y_aug, batch_size=batch_size, epochs=15, validation_data=(X_test, y_test), verbose=2)

        full_loss_hist.append(hist.history['loss'])

        full_val_loss.append(hist.history['val_loss'])

## TODO: Save the model as my_model.h5

model.save('my_model.h5')
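# One possible way to summarize the sweep above is to look up which learning rate and batch size ended with the lowest validation loss. The helper below is a hypothetical sketch, not part of the project template. It assumes the histories were appended in the same order as the nested loops, with the learning rate in the outer loop and the batch size in the inner loop.

```python
from itertools import product

def best_run(learning_rates, batch_sizes, val_loss_histories):
    """Return ((lr, batch_size), final_val_loss) for the best run.

    Assumption: val_loss_histories[k] belongs to the k-th pair produced by
    iterating learning rates in the outer loop and batch sizes in the inner.
    """
    configs = list(product(learning_rates, batch_sizes))
    final_losses = [history[-1] for history in val_loss_histories]
    best = min(range(len(final_losses)), key=final_losses.__getitem__)
    return configs[best], final_losses[best]
```

# For example, `best_run(learning_rates, batch_sizes, full_val_loss)` would return the best configuration and its final validation loss. Note that recompiling a Keras model does not reset its weights, so each run in the sweep continues from the previous one. The comparison is therefore closer to a learning-rate schedule than to nine independent experiments.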

# ## Pseudo-Labeling

# With the model trained on data with complete keypoints, it can now be used to predict the keypoints that are missing from the original dataset. This lets the model learn from the partially labeled images as well, which should help it generalize better since it sees new examples.

#

# This process can be repeated to get better predictions once the model has learned from the new data. First, the model generates predictions for the full dataset. Then, wherever the full dataset has a real label, the predicted keypoint is replaced by the labeled one, so the predictions only fill in the missing values.

#

# Next, this dataset will be augmented to increase the number of examples, and the model will be retrained from scratch on this larger, more general dataset. The model can then be fine-tuned on the original dataset, which contains only complete keypoints. This hopefully more accurate model can then be used to generate a better set of predictions, and the cycle can be repeated until there is no further improvement.
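# The label-merging step described above can be sketched in vectorized form with `np.where`. This is a minimal illustration that assumes missing keypoints are stored as `NaN` in the label array; the function name `merge_pseudo_labels` is hypothetical.

```python
import numpy as np

def merge_pseudo_labels(y_true, y_pred):
    """Keep real labels where they exist and fill NaN entries with predictions."""
    return np.where(np.isnan(y_true), y_pred, y_true)
```

# This returns a new array and leaves both inputs unchanged, which makes it easier to rerun the step with a different set of predictions.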

# In[269]:

# Get full dataset including incomplete labels

X_full, y_full = load_data(complete_points=False)

# In[270]:

# Find incomplete label to see what it looks like

y_full[0]

# In[271]:

# Use previously trained model to predict the label; replace nan values with predictions. Keep real labels.

predictions = model.predict(X_full)

# In[272]:

print(predictions.shape, y_full.shape)

# In[273]:

# Difference between prediction and labels for incomplete keypoint data

y_full[0] - predictions[0]

# In[274]:

# Loop through all labels

for i in range(len(y_full)):

    # Loop through all coordinates in label

    for j in range(len(y_full[i])):

        if not np.isnan(y_full[i][j]):

            # If there is a coordinate in the actual label, replace value in the prediction

            predictions[i][j] = y_full[i][j]

# In[275]:

# Difference after replacing predictions with actual values

y_full[0] - predictions[0]

# In[276]:

y_full[0]

# In[277]:

# Add horizontal flip of the new data

data_full_hflip, labels_full_hflip = data_augmentation(X_full, predictions, 0.0, 0.0, True)
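# For reference, a horizontal flip has to do more than mirror the image: the x coordinates change sign and the left and right keypoints swap places. The sketch below is a hypothetical stand-in for the flip branch of `data_augmentation`, whose implementation is not shown here. It assumes labels normalized to $[-1, 1]$ and the column order of the Kaggle facial keypoints dataset. The names `FLIP_PAIRS` and `flip_horizontal` are illustrative.

```python
import numpy as np

# Assumed label columns that trade places under a horizontal flip
# (left/right eye, eyebrow, and mouth-corner coordinates in Kaggle order).
FLIP_PAIRS = [(0, 2), (1, 3), (4, 8), (5, 9), (6, 10), (7, 11),
              (12, 16), (13, 17), (14, 18), (15, 19), (22, 24), (23, 25)]

def flip_horizontal(X, y):
    """Mirror images of shape (N, H, W, C) and their flat keypoint labels."""
    X_flipped = X[:, :, ::-1, :]       # reverse the width axis
    y_flipped = y.copy()
    y_flipped[:, 0::2] *= -1           # negate every x coordinate
    for a, b in FLIP_PAIRS:
        # Swap left and right keypoints so each label keeps its meaning
        y_flipped[:, [a, b]] = y_flipped[:, [b, a]]
    return X_flipped, y_flipped
```

# Applying the flip twice should reproduce the original data, which is a convenient sanity check for the index pairs.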

# In[278]:

X_full = np.concatenate((X_full, data_full_hflip), axis=0)

predictions = np.concatenate((predictions, labels_full_hflip), axis=0)

# In[279]:

X_full_transformed, predictions_transformed = data_augmentation(X_full, predictions, 15.0, .1, False)

# In[280]:

X_full = np.concatenate((X_full, X_full_transformed), axis=0)

predictions = np.concatenate((predictions, predictions_transformed), axis=0)

# In[281]:

print(X_full.shape, predictions.shape)

# In[282]:

# Start with a new model; same architecture

from keras.models import Sequential

from keras.layers import Conv2D, MaxPooling2D, Dropout, GlobalAveragePooling2D, BatchNormalization

from keras.layers import Flatten, Dense

## TODO: Specify a CNN architecture

# Your model should accept 96x96 pixel grayscale images as input

# It should have a fully-connected output layer with 30 values (2 for each facial keypoint)

model = Sequential()

# Conv layer1

model.add(Conv2D(32, 3, strides=(1,1), padding='same', activation='elu', input_shape=(96,96,1)))

model.add(BatchNormalization())

model.add(Dropout(.2))

model.add(MaxPooling2D((2,2), strides= 2, padding='same'))

# Conv layer2

model.add(Conv2D(64, 3, strides=(1,1), padding='same', activation='elu'))

model.add(BatchNormalization())

model.add(Dropout(.2))

model.add(MaxPooling2D((2,2), strides= 2, padding='same'))

# Conv layer3

model.add(Conv2D(128, 3, strides=(1,1), padding='same', activation='elu'))

model.add(BatchNormalization())

model.add(Dropout(.2))

model.add(MaxPooling2D((2,2), strides= 2, padding='same'))

# Conv layer4

model.add(Conv2D(256, 3, strides=(1,1), padding='same', activation='elu'))

model.add(BatchNormalization())

model.add(MaxPooling2D((2,2), strides= 2, padding='same'))

# Conv layer5

model.add(Conv2D(256, 3, strides=(1,1), padding='same', activation='elu'))

model.add(BatchNormalization())

model.add(MaxPooling2D((2,2), strides= 2, padding='same'))

# Global average pooling (used here in place of a Flatten layer)

model.add(GlobalAveragePooling2D())

model.add(BatchNormalization())

# Fully connected output layer

model.add(Dense(30, activation='elu'))

# Summarize the model

model.summary()

# In[283]:

# Train Model on new dataset

learning_rates = [.001, .0001, .00001]

batch_sizes = [32, 64, 128]

full_loss_hist = []

full_val_loss =[]

for lr in learning_rates:

    model.compile(optimizer=Adam(lr=lr), loss='mean_squared_error')

    for batch_size in batch_sizes:

        print('learning rate: {}, batch size: {}'.format(lr, batch_size))

        # Validate against the base dataset to check that the model still performs well on it

        hist = model.fit(X_full, predictions, batch_size=batch_size, epochs=15, validation_data=(X_test, y_test), verbose=2)

        full_loss_hist.append(hist.history['loss'])

        full_val_loss.append(hist.history['val_loss'])

model.save('my_model_full.h5')

# ---

#

#

# ## Step 7: Visualize the Loss and Test Predictions

# ### (IMPLEMENTATION) Answer a few questions and visualize the loss

#

# __Question 1:__ Outline the steps you took to get to your final neural network architecture and your reasoning at each step.

#

# __Answer:__